- Pascal's Chatbot Q&As

Archive

Australia’s National AI Plan 2025 is one of the most holistic and forward-thinking national AI strategies published to date. It combines massive investment with strong values and pragmatic regulation.

Its surprising strengths lie in consumer adoption, infrastructure ambition, and Indigenous data governance.

The first large-scale, qualitative national study of how universities across the UK are experimenting with, resisting, or preparing for the arrival of AI in the Research Excellence Framework.

Cautious about risks to research integrity, but compelled by unprecedented administrative pressures, escalating costs, and rapid technological change.

Journalists are not simply using AI to speed up chores—they are gradually delegating portions of editorial judgment.

Future structural tensions: generational rifts in working practices, gendered divides in technological empowerment, and contested norms around what counts as acceptable AI-mediated editorial labor.

How explainability is being embedded into AI models that analyse speech for early detection of Alzheimer’s disease and related dementias.

This systematic review synthesizes 13 studies published between 2021 and 2025 that applied explainable AI (XAI) methods to acoustic, linguistic, and multimodal speech-analysis pipelines.

A core thread running through the dialogue is Sutskever’s insistence that modern AI fundamentally generalizes worse than humans—despite models having orders of magnitude more data and compute.

He offers a stark example: a model fixing a coding bug only to reintroduce it two steps later, a sign that something deep about “understanding” is missing.

The delay in releasing the names of these donors allowed the administration to install its personnel and implement its initial policy blitz before the public could trace the money behind the decisions.

The list reveals a composition that is less a cross-section of American political support and more a “Board of Directors” for a hostile takeover of the federal government.

Courts, rights holders, and regulators are converging on a coherent legal doctrine that views AI training as copying subject to copyright, not an exempt activity.

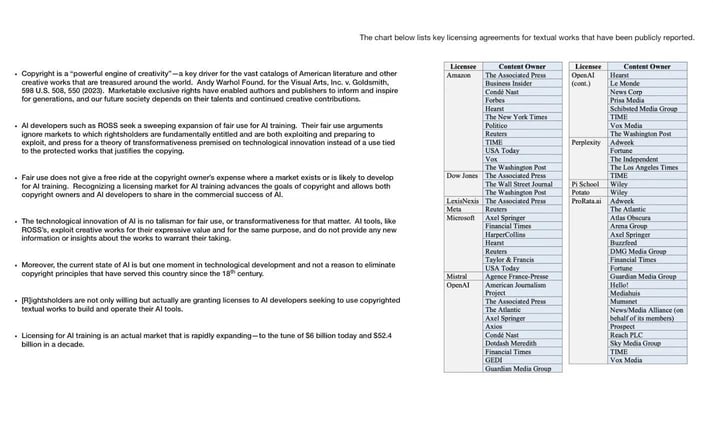

Refusal to license does not magically transform unlicensed copying into fair use. A real, functioning licensing market exists, projected to grow from $6 billion today to $52.4 billion within a decade.

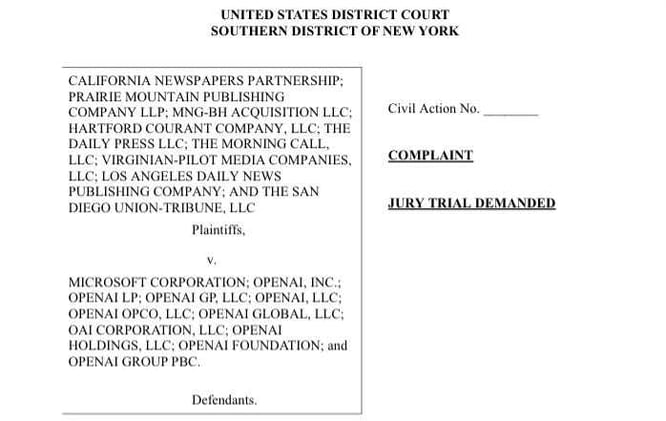

The complaint filed by the California Newspapers Partnership and a broad coalition of regional publishers against Microsoft and OpenAI marks one of the most aggressive and unambiguous challenges yet.

(1) framing harm as misappropriation and derivative-market destruction, (2) invoking constitutional language around Congress’s duty to protect authors, (3) treating model updates as repeated acts of copying.

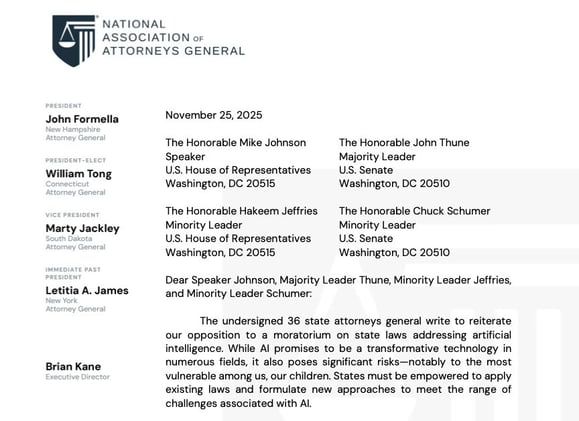

The AGs are correct: a federal moratorium on state AI laws would be dangerous, regressive, and harmful to public welfare. Their evidence is compelling and grounded in real-world harms.

But a purely state-led approach would also fail to address systemic, cross-border risks. The federal government must lead—but it must lead with, not against, the states.

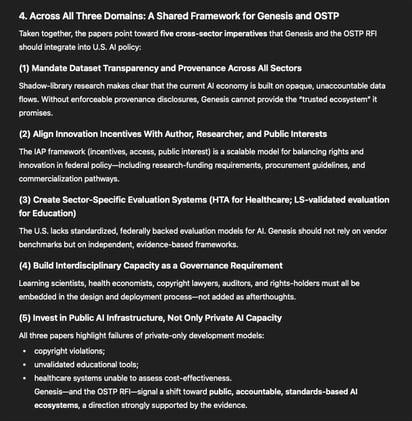

The research is clear: the future is not “AI everywhere.” The future is “AI integrated through evidence, rights, and human expertise.”

AI, Public Infrastructure, and the New Social Contract: Synthesizing Copyright Governance, Health-Economics, and Learning Sciences in the Era of the Genesis Initiative