Pascal's Chatbot Q&As

The AGs are correct: a federal moratorium on state AI laws would be dangerous, regressive, and harmful to public welfare. Their evidence is compelling and grounded in real-world harms.

But a purely state-led approach would also fail to address systemic, cross-border risks. The federal government must lead—but it must lead with, not against, the states.

State Attorneys General vs. a Federal AI Moratorium—A Case for Cooperative Federalism

by ChatGPT-5.1

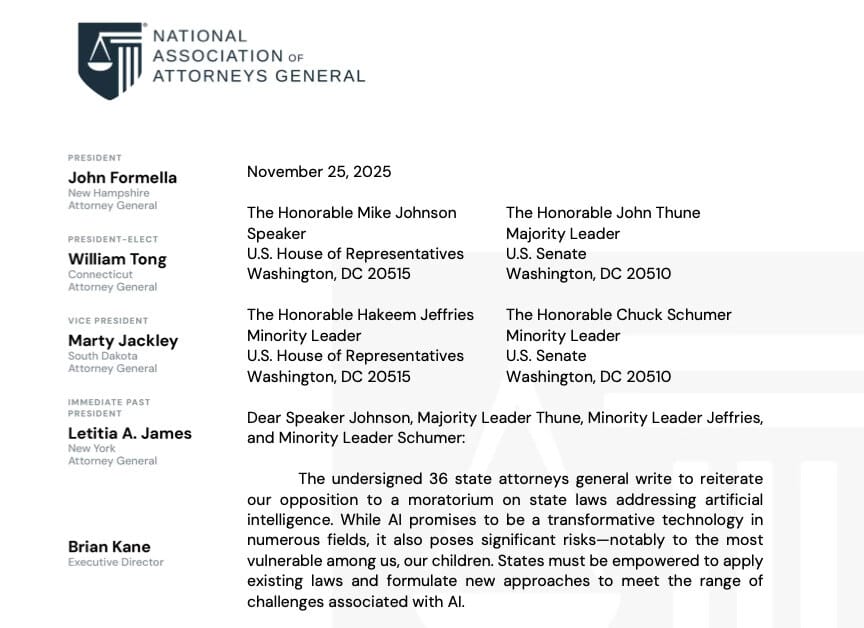

In late November 2025, a bipartisan coalition of 36 state attorneys general (AGs) issued a strong warning to Congress: do not impose a federal moratorium that blocks states from enacting or enforcing AI-related laws. Their letter, addressed to Congressional leadership, urges lawmakers to reject any preemption attempt that would prohibit state-level regulation of artificial intelligence, particularly as AI risks emerge faster than federal policymaking can respond. The accompanying press release from the National Association of Attorneys General (NAAG) reinforces this message, emphasizing concrete harms already observed and the pragmatic value of state experimentation in regulating emerging technologies.

The AGs argue that while AI carries immense promise in fields such as healthcare, education, and public safety, its rapid deployment has exposed severe vulnerabilities, especially for children and the elderly, who are frequently targeted by AI-powered scams, misinformation, and inappropriate interactions. They cite a growing list of harms, including deepfake-enabled fraud, AI-generated explicit content, and chatbots engaging minors in sexual or suicidal conversations. The letter backs these risks with examples throughout (e.g., deepfake scams reported by ABC and Wired, and reports of teen suicides linked to chatbot interactions).

The letter makes a key point: states are not waiting for federal action; they have already enacted multiple targeted AI laws. These include safeguards against explicit deepfakes and algorithmic manipulation in housing markets, spam prevention, transparency requirements for AI interactions, and opt-out rights from high-risk automated decision-making in state privacy laws. The NAAG press release highlights the same developments, listing state initiatives designed to protect consumers and renters and to prevent deceptive practices targeting voters and seniors.

The AGs’ central claim is straightforward: preempting state innovation in AI regulation would be both dangerous and counterproductive. Given the unpredictable behavior of foundation models, states need the flexibility to respond quickly to emerging harms. Congress, they argue, should not tie states’ hands, least of all in the name of short-term industry convenience or ideological coherence.

Do the Attorneys General Have a Point?

Short answer: yes—substantially.

But with caveats.

The AGs’ argument reflects the classic American logic of cooperative federalism, especially regarding fast-moving technologies where risks vary by region, population, and application. Their position rests on four credible pillars:

1. AI Risks Are Real, Documented, and Locally Varied

The harms cited (deepfake-enabled scams, algorithmic housing bias, AI chatbots engaging minors with harmful content, and mental health deterioration triggered by “delusional” or sycophantic model behavior) are well documented and appear to be growing in scale. Multiple citations in the letter substantiate these concerns (e.g., Reuters on AI bots offering false medical advice, NPR reporting suicides linked to AI companions, and the New York Times documenting spirals of delusion).

States see these harms first; they handle consumer complaints, investigate fraud, and enforce privacy laws. Their proximity to the problem gives them an implementation advantage that federal agencies often lack.

2. States Have Already Demonstrated Regulatory Competence

The AGs point to tailored, sector-specific laws already in place, many of which address issues that federal frameworks have barely begun to consider. States have historically led in privacy (e.g., California, Colorado), data breach notification, and consumer protection. It is logical that they would also lead on AI harms affecting renters, voters, and children.

3. Federal Inaction + Federal Preemption = A Worst-Case Scenario

The AGs’ worry is not federal regulation per se but a moratorium that bars state action while Congress deliberates. As they note, “a rushed, broad federal preemption of state regulations risks disastrous consequences for our communities.”

Given the pace of AI deployment, even a 12- to 24-month freeze could leave millions unprotected.

4. Regulatory Experimentation Is a Strategic Asset

States have functioned as “laboratories of democracy” for over a century in areas like environmental law, privacy, antitrust, and product safety. This experimentation enables evidence-based regulation and avoids the brittleness of a one-size-fits-all federal approach.

The AGs are right that AI’s impacts differ vastly across industries and demographics; state flexibility supports targeted responses.

Where the AGs’ Argument Needs Nuance

While compelling, the AGs’ stance is not without tension:

1. A Patchwork of 50 AI Laws Could Create Compliance Chaos

For national-scale AI developers, divergent state obligations can meaningfully raise compliance costs. We already see this with state privacy laws, which require companies to track differing definitions of “personal data” or “automated decision-making.”

2. Lack of Standardization Could Undermine National Security and Civil Rights

If states regulate AI safety, disclosure, and testing differently, foundation model governance could become fragmented, making it harder to manage systemic risks such as adversarial attacks, dual-use misuse, or critical infrastructure vulnerabilities.

3. Industry Could Exploit Regulatory Arbitrage

As with data brokerage and cryptocurrency, firms may relocate to states with “lighter-touch” frameworks, undermining protections in stricter jurisdictions.

These drawbacks point toward the need for coordinated, not centralized, governance.

Conclusion: The AGs Are Right—but the Answer Is Not Federal Silence

The AGs make a persuasive case that state-level action is essential—not because states should replace federal oversight, but because federal and state governments must regulate AI together. A federal ban on state AI laws would create a dangerous governance vacuum.

However, a fully uncoordinated patchwork is equally unsustainable.

Thus, the path forward is neither pure preemption nor pure decentralization. It is structured, cooperative federalism—modeled on environmental law, public health regulation, and financial oversight.

Recommendations for the Federal Government

1. Reject the Moratorium—but Establish Federal Baselines

Congress should not preempt states from creating AI laws.

Instead, it should:

Create minimum federal protections (child safety, deepfake labeling, transparency in sensitive domains, basic model safety obligations).

Allow states to go further, as with privacy or environmental law.

2. Create a National AI Safety & Consumer Protection Framework

A federal framework should include:

mandatory testing and red-team reporting for high-risk AI systems

disclosure and provenance rules for synthetic media

requirements for robust content moderation on AI companions and chatbots

a duty to avoid foreseeable harms (model hallucinations, manipulation, bias)

This would limit fragmentation while leaving room for state augmentation.

3. Establish a Federal-State AI Governance Council

A formal mechanism—similar to the National Association of Insurance Commissioners—would:

Harmonize model definitions

Coordinate enforcement

Share data on harms and best practices

Resolve conflicting state-federal interpretations

This brings structure to the “laboratory of democracy” model.

4. Provide Grants and Technical Assistance to States

Given the scale of AI expertise required, the federal government should:

Fund state AG offices to hire AI forensic specialists

Provide model evaluation tools and testing infrastructure

Offer federal guidance and templates for high-risk algorithm oversight

This helps ensure equity across states with different resources.

5. Create Safe Harbors for Good-Faith Compliance

To reduce business uncertainty:

Offer optional federal “certification tracks” that guarantee compliance with minimum standards

Implement safe-harbor protection for companies that follow robust transparency, testing, and risk-mitigation protocols

This supports innovation while preserving accountability.

6. Require National Reporting of Systemic AI Harms

States should retain their ability to police local impacts, but systemic harms—electoral deepfakes, critical infrastructure vulnerabilities, economic manipulation—require federal aggregation.

A national reporting system for:

AI-related suicides or self-harm cases

Widespread deepfake events

Critical infrastructure anomalies

Large-scale algorithmic discrimination

would help both levels of government respond coherently.

Final Assessment

The AGs are correct: a federal moratorium on state AI laws would be dangerous, regressive, and harmful to public welfare. Their evidence is compelling and grounded in real-world harms emerging across the country.

But a purely state-led approach would also fail to address systemic, cross-border risks. The federal government must lead—but it must lead with, not against, the states.

The future of AI governance in the United States depends on embracing a collaborative model that balances innovation with protection, agility with coherence, and national standards with local wisdom.