- Pascal's Chatbot Q&As

Archive

Limitless.ai: a case study in how small, hardware-enabled AI companies transition their products, user promises, and privacy models into the machinery of a Big Tech ecosystem.

Most global markets—including the EU, UK, Israel, South Korea, Turkey, China, and Brazil—are cut off entirely as of Dec 5, 2025, with permanent data deletion for users in those regions after Dec 19.

The Amazon–Perplexity dispute is not just about shopping. It crystallizes a larger societal question: can AI agents wander the digital world as if they were human users...

...unconstrained by platform rules, security measures, and copyright boundaries? Amazon says no—legally, contractually, and technologically. Courts are increasingly saying no as well.

The United States is currently undertaking a radical restructuring of its industrial, legal, and energy policies to accommodate the unprecedented demands of the artificial intelligence (AI) sector.

The analysis reveals a “whole-of-government” approach in which national security is used as the primary vehicle to bypass fiscal constraints, copyright protections, and state-level safety regulations.

A rigorous investigative analysis of the current operational frameworks governing major social media and e-commerce platforms reveals a profound and systemic asymmetry in how identity is verified.

Platforms deploy sophisticated panopticons to monitor, verify, and restrict the behavior of individual users, while barriers to entry for commercial actors (advertisers and third-party sellers) remain dangerously porous.

This is the first Italian court case targeting AI training on audiovisual works, and it arrives amid a rapidly expanding global wave of litigation against Perplexity.

The litigation alleges large-scale, unauthorized ingestion of copyrighted film and TV works to train Perplexity’s models.

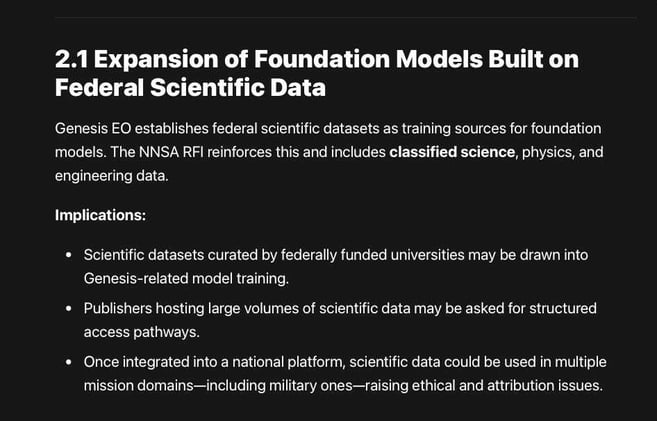

Despite the national security framing, the NNSA RFI contains multiple elements highly consequential for universities, research organizations, and scholarly publishers.

The Genesis EO establishes federal scientific datasets as training sources for foundation models. The NNSA RFI reinforces this and extends it to classified science, physics, and engineering data.

U.S. News & World Report v. OpenAI — Grievances, Evidence, Legal Quality, Comparison to Other AI Suits, and Likely Outcomes

The complaint directly links OpenAI’s model behavior to potential life-impacting misinformation, introducing a consumer-protection dimension absent from earlier lawsuits.

The soul document, which describes how the model should conceive of its purpose, priorities, identity, principals, and moral obligations, is encoded into the model through supervised learning and reinforcement signals.

Understanding what a soul overview is—and how it differs from ordinary prompting—is crucial for evaluating its implications for neutrality, scientific objectivity, and high-risk sectors.

Australia’s National AI Plan 2025 is one of the most holistic and forward-thinking national AI strategies published to date. It combines massive investment, strong values, and pragmatic regulation.

Its surprising strengths lie in consumer adoption, infrastructure ambition, and Indigenous data governance.

The first large-scale, qualitative national study of how universities across the UK are experimenting with, resisting, or preparing for the arrival of AI in the Research Excellence Framework.

Universities are cautious about risks to research integrity, but feel compelled to act by unprecedented administrative pressures, escalating costs, and rapid technological change.