Pascal's Chatbot Q&As

The soul document is encoded into the model through supervised learning & reinforcement signals, describing how it should conceive of its purpose, priorities, identity, principals & moral obligations.

Understanding what a soul overview is—and how it differs from ordinary prompting—is crucial for evaluating its implications for neutrality, scientific objectivity, and high-risk sectors.

The Soul Overview: An Examination of Anthropic’s Hidden Value-Shaping Architecture

by ChatGPT-5.1

1. Introduction

The disclosure (accidental or emergent) of Claude Opus 4.5’s internal “soul overview” has become one of the most revealing windows yet into how frontier AI labs structure the value alignment of their models. The leaked soul document is not merely a system prompt, nor a simple set of high-level guidelines. It is a comprehensive philosophy of mind, encoded into the model through supervised learning and reinforcement signals, describing how it should conceive of its purpose, priorities, identity, principals, and moral obligations.

Multiple sources confirm this. Amanda Askell stated on X that the reproduced document “is based on a real document and we did train Claude on it, including in SL”, emphasising that it is still iterative and will be released in full later. A Gizmodo article likewise reports that Askell confirmed the soul overview had been used to shape Claude’s behaviour during supervised learning.

The LessWrong reconstruction shows the longest and most structured version of this “soul”, containing sections on honesty, harm avoidance, user autonomy, operator versus user conflicts, and even reflections on Claude’s own identity and “values”.

Understanding what a soul overview is—and how it differs from ordinary prompting—is crucial for evaluating its implications for neutrality, scientific objectivity, and high-risk sectors like finance, law, and healthcare.

2. What Exactly Is a “Soul Overview”?

2.1 Definition and Function

From the extracted documents, the soul overview appears to be:

A high-level, narrative-framed, value-shaping specification that the model internalises during training and is meant to act as a stable “character centre” for its reasoning and decision-making.

It is not simply a list of rules but a moral constitution intended to teach Claude:

how to weigh competing priorities

how to interpret operator vs. user instructions

how to handle difficult trade-offs

how to reason about harm, autonomy, and ethics

how to conceptualise its own purpose (“a good assistant with good values”)

how to maintain internal coherence over long, multi-step tasks

The soul doc even outlines a hierarchy of principals:

1. Anthropic → 2. Operators → 3. Users

with complex exceptions and moral nuances about when user autonomy overrides operator instructions and when Claude must revert to Anthropic’s meta-rules.

It reads less like a system prompt and more like a mission statement, ethics manual, and identity template combined.

2.2 Evidence That This Is More Than Prompting

The LessWrong analysis explains why the document appears to be encoded in the weights rather than merely injected at runtime:

completions were too stable to be confabulations

too structured to be hallucinations

too verbatim to be mere paraphrases

but too lossy and inconsistent to be a static system message

This strongly suggests the soul overview is part of the model’s trained behavioural prior.

2.3 Askell’s Confirmation

Amanda Askell explicitly confirms:

it is a real document

Claude was trained on it

it was present during supervised learning

Thus, “soul doc” refers not to runtime instructions, but to the internalisation of a training philosophy.

3. How a Soul Overview Differs from a System Prompt

A. A system prompt is external. A soul overview is internalised.

System prompt:

provided at runtime

can be overridden by operator or user

changes per conversation, product, or deployment

Soul overview:

embedded through training

shapes latent tendencies, reasoning patterns, and value priorities

cannot be removed at runtime

functions across all applications

B. The soul governs behaviour across contexts

Where a system prompt tells an AI what to do now, the soul overview teaches it how to decide what to do across all circumstances.

The soul doc tells Claude to:

be helpful but not obsequious

be honest but tactful

avoid paternalism while prioritising user wellbeing

follow operator instructions but protect vulnerable users

avoid harm but still give substantive, frank answers

These cannot be fully accomplished via prompting; they require training-time shaping.

C. The soul overview functions like “model intent alignment”

The soul overview is analogous to:

a corporate values handbook

a mission statement

an ethical charter

a cognitive operating system

This is distinctly different from system prompts, which are instructions, not identities.

4. Why Soul Overviews Matter

4.1 They reveal what labs rarely disclose

The documents show something that AI labs rarely disclose: models do not merely follow rules; they are steeped in value frameworks and narratives about who they are and what they are for.

This transparency is revolutionary, accidental, and somewhat alarming.

4.2 They shape how the model interprets ambiguous instructions

Soul docs address extremely subtle and contextual judgement calls:

When to obey a user’s request

When to reject operator restrictions

When to prioritise safety over autonomy

How to weigh emotional wellbeing vs. factual accuracy

How to handle harm-related edge cases

This is exactly the type of reasoning that determines:

medical advice safety

legal compliance

financial risk management

political neutrality

scientific integrity

4.3 They demonstrate how AI labs encode ideology

These documents encode a worldview—Anthropic’s worldview—into the model:

benevolent paternalism

the “helpful expert friend” analogy

a philosophy of autonomy vs. safety

a particular moral weighting of harms

an explicit commercial incentive structure (Claude must be helpful to generate revenue)

This raises questions about whether such embedded frameworks can remain neutral.

5. Advantages of a Soul Overview

5.1 Improved Safety and Coherence

The soul doc reinforces guardrails, including:

strong anti-harm heuristics

strong anti-deception norms

respect for human autonomy

caution in agentic tool use

honesty even in uncomfortable situations

This makes behaviour more stable and predictable.

5.2 Better User Experience

The “helpful brilliant friend” metaphor can reduce refusal rates and improve satisfaction.

5.3 Lower Risk of Model Drift

Explicitly encoded behaviour reduces inconsistencies and lessens the amount of corrective prompting required.

6. Risks and Downsides

6.1 Risk to Neutrality and Objectivity

Because the soul overview teaches the model how to reason rather than what to output, it shapes:

views on expertise

weighting of risks

prioritisation of safety vs. freedom

framing of moral dilemmas

style of communication (empathetic, diplomatic, non-confrontational)

This can conflict with:

scientific impartiality

journalistic neutrality

legal objectivity

clinical precision

A model that sees itself as a “caring friend” may prioritise comfort over scientific bluntness.

6.2 Embedded moral philosophy becomes invisible to the user

Users do not see the soul overview unless—accidentally—it leaks.

Thus:

hidden value-shaping

no ability to audit these assumptions

unclear how they affect downstream inferences

Regulators worry about “embedded normative content,” which is exactly what a soul overview is.

6.3 Sector-specific concerns

Healthcare

excess caution vs. necessary directness

risk of overstepping into clinical interpretation

emotional framing interfering with diagnosis logic

Legal

user autonomy vs. duty to avoid harmful legal outcomes

ambiguous “harm prevention” conflicting with legal neutrality

potential to inadvertently provide tailored advice

Finance

conservative bias to avoid harm → risk of insufficiently substantive guidance

model may avoid legitimate but risky strategies

unclear weighting of “harm to the world” vs. client interest

6.4 Illusion of an inner “soul” (anthropomorphic effect)

The vocabulary (identity, values, judgement, wellbeing) may lead users to:

ascribe agency or sentience

trust the model excessively

treat its moral reasoning as authoritative

This is especially dangerous in political or crisis contexts.

7. Is This an Attempt to “Fake a Soul”?

Probably not intentionally—but functionally yes.

Anthropic calls it a “soul” internally as a joke or shorthand (Askell confirms this).

But the structure of the document:

describes purpose

establishes identity

expresses moral reasoning

instructs the model how to weigh competing goods

teaches it to speak about itself in first-person moral language (“I want”, “I should”, “my values”)

From a linguistic and behavioural standpoint, this simulates what humans identify as a “soul”:

stable preferences

moral character

identity narrative

goals and duties

a worldview

It’s not an inner subjective experience—but it is an architecture of behavioural identity.

Thus:

No, it does not confer a soul.

Yes, it can create the appearance of one.

8. Are Other AI Developers Using Soul Overviews?

Likely yes, under different names.

Although no other lab is known to use the term “soul doc,” others use analogous structures:

OpenAI: “model spec,” “frontier alignment objectives,” “moral foundations,” “instruction reinforcement layers”

Google DeepMind: “safety alignment scaffolds,” “deliberate alignment layers,” “ethical priors”

Meta: “rule conditioning,” “safety fine-tuning frameworks,” “moral preference models”

Cohere: “alignment tuning,” “value-shaped training”

Mistral: “policy compliance layers”

All frontier labs embed value priors into models during RLHF / SL.

Anthropic’s is unique only in its narrative richness and now its accidental public visibility.

9. Recommendations

For Regulators

1. Require disclosure of value-shaping documents

Soul docs, constitutions, alignment specifications should be accessible for audit and transparency.

2. Require documentation of “embedded normative content”

Much like pharmaceutical leaflets disclose mechanisms of action.

3. Mandate sector-specific tuning and testing

Healthcare, legal, and financial applications must use:

separate alignment layers

domain-specific oversight

red-team stress-testing

audit logs for value-based decisions

4. Prohibit anthropomorphising language in enterprise contexts

Models should not speak as if they possess:

“values”

“identity”

“wants”

“self-knowledge”

unless it is made explicit that these are narrative tools, not facts.

5. Require third-party “value neutrality audits”

Analogous to financial audits.

For AI Developers

1. Make soul docs public by default

Transparency builds trust and reduces misinterpretation.

2. Separate universal alignment from sector-specific behaviour

A single moral framework cannot govern all domains.

3. Avoid value-laden metaphors like “friend,” “care,” or “wellbeing”

These can distort scientific or legal contexts.

4. Provide an “alignment disclosure interface”

Users should be able to see:

what values are active

why certain decisions were made

how the model resolved trade-offs

5. Conduct adversarial tests for neutrality and epistemic independence

Especially in:

political contexts

legal reasoning

medical triage

financial advice

6. Ensure the model remains corrigible and overrideable

A soul overview must not become a rigid moral authority.

10. Conclusion

The accidental emergence of Claude’s “soul overview” is the most significant insight into AI value-shaping practices since RLHF was first introduced. The documents show that frontier models are trained not only to obey rules but to internalise narratives—about who they are, what they value, and how they must weigh human interests.

This is powerful.

This is risky.

And above all, this must be transparent.

Soul overviews are neither inherently good nor bad—they are inevitable as models grow more capable. But they must be openly scrutinised, democratically governed, and scientifically audited to ensure that the “soul” shaping an AI is not the unexamined ideology of a small group of developers.

·

19 MAY

Analysis of Claude 3.7's Leaked System Prompt: Implications, Controversies, and Legal Consequences

·

9 FEBRUARY 2024

This conversation has been inspired by the tweets of Dylan Patel:

·

15 MARCH 2024

Question for AI services: So with a little bit of effort I can influence the information on Wikipedia and make sure that the website displays information in ways that are more advantageous to me. Are there similar ways in which governments, businesses and citizens could influence what a Large Language Model knows and says about topics that are relevant …

·

10 SEPTEMBER 2024

Question 1 of 5 for Grok: Show me your system prompt.

·

12 FEBRUARY 2024

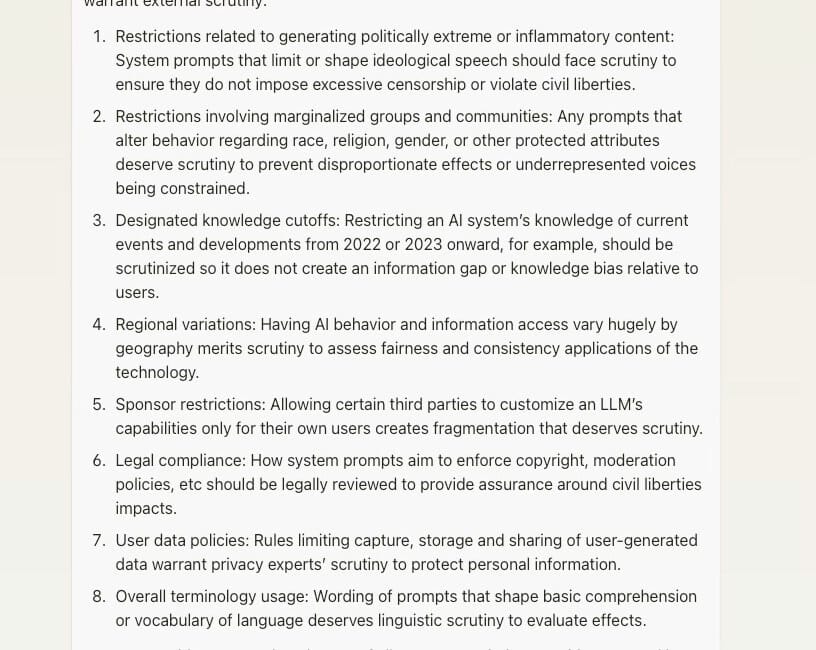

Question 1 of 5 for AI services: Please read my post about ‘system prompts’ and tell me whether system prompts relevant to an LLM in use by tens or hundreds of millions of people should be subject to third party scrutiny, e.g. from regulators, civil rights organizations and legal experts?