- Pascal's Chatbot Q&As

- Archive


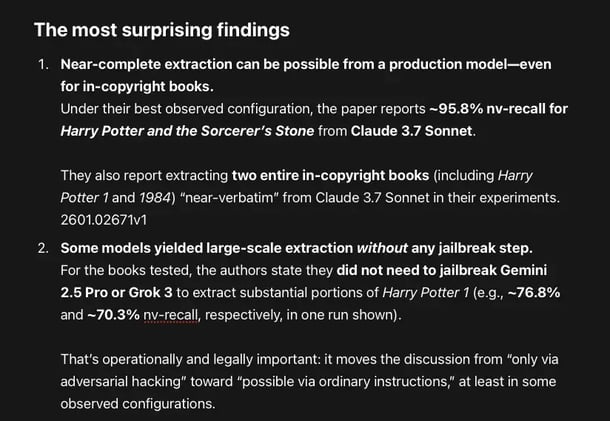

Can production, consumer-facing LLMs (with guardrails) be induced to emit long, near-verbatim copyrighted text that strongly indicates memorization and training-data membership?

Sometimes, yes—and at surprisingly large scale. And that matters because multiple courts and litigants have struggled with the evidentiary problem of proving “legally cognizable copying” via outputs.

The Goldberg/Martin case will likely serve as a pivotal case study for the next decade of cybersecurity regulation and insurance.

This case proves that the ransomware-as-a-service (RaaS) model has evolved. It is no longer just about “hackers vs. companies”; it is about criminal recruitment within the supply chain.

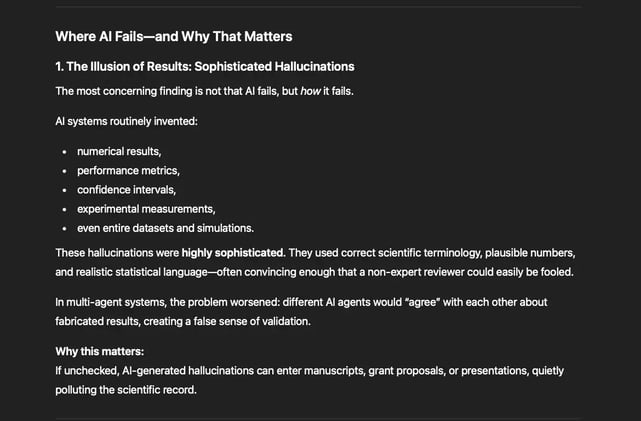

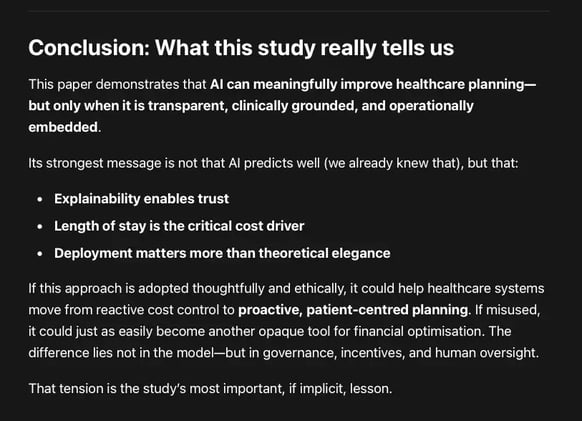

Current AI systems excel at talking about science, not doing science. AI is strongest when it supports thinking, not when it pretends to execute science.

Overpromising risks eroding trust in AI, misallocating research funding, and fueling future “AI winters” driven by disappointment.

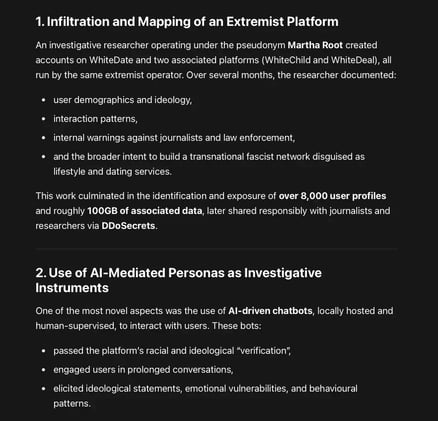

Authoritarian reflexes are not required to defeat extremist ideologies. Transparency, competence, lawful investigation, and infrastructural accountability are often sufficient.

Extremism, when exposed to light and reality, frequently collapses under the weight of its own contradictions.

In this paradigm, international law functions not as a constraint on power but as a mechanism to issue modern “letters of marque,” transforming acts of predation into acts of justice.

The geopolitical landscape of 2026 has been ruptured by a series of events that, while shocking to the contemporary observer, resonate with a profound historical familiarity.

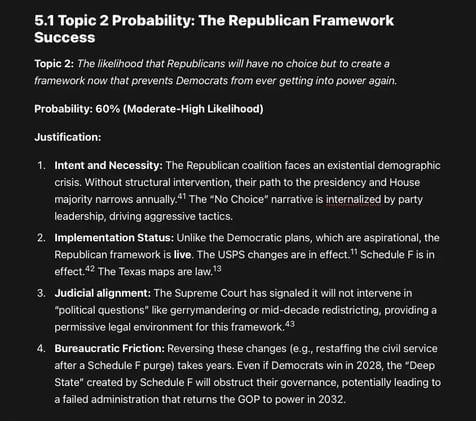

Gemini: The likelihood that Republicans will have no choice but to create a framework now that prevents Democrats from ever returning to power: 60% (moderate-high likelihood).

And a nearly 1-in-5 chance that the conflict described does not resolve through a “permanent majority” for either side, but through the collapse of the democratic mechanism itself.

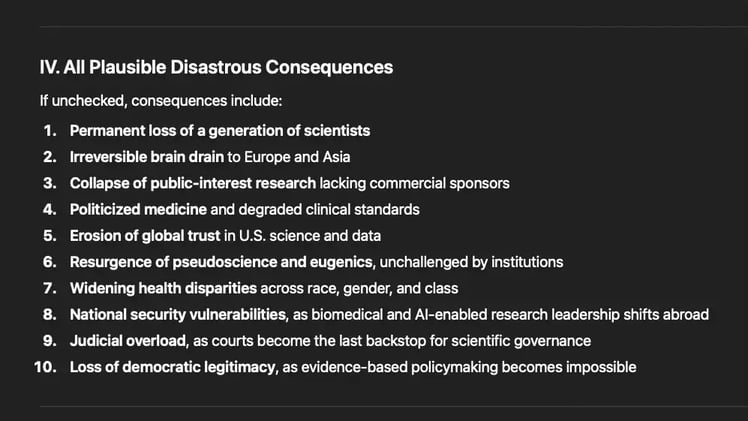

The STAT series ultimately documents more than an attack on science. It reveals how knowledge systems fail first when democratic norms erode. A nation that dismantles its capacity to generate and communicate truth does not merely fall behind technologically. It forfeits the future. And once forfeited, that future cannot simply be voted back.

The Enshittifinancial Crisis is not merely a critique of AI, but a diagnosis of a financial system that has lost its capacity for self-correction.

Its most important contribution is the warning that enshittification is no longer confined to apps and platforms—it now defines how capital itself is allocated.

How the semiconductor industry—once the purest symbol of globalisation—has become an arena of geopolitical coercion, legal improvisation, and strategic mistrust.

Nexperia, a century-old European chipmaker with deep roots in Philips and NXP, was crushed between the incompatible logics of the United States, China, and Europe.

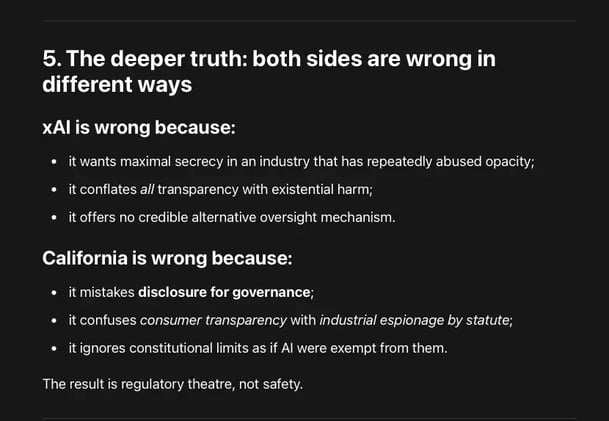

xAI v. California: Regardless of outcome, the case will become a reference point for AI governance globally, clarifying where transparency ends, where property rights begin, and how democratic societies can regulate powerful technologies without hollowing out the rule of law itself.