Pascal's Chatbot Q&As

Archive

PwC’s 29th Global CEO Survey: Executives are convinced AI is central to competitiveness, but most companies are still stuck in a “pilot-and-hope” phase where the economics don’t reliably show up.

Many companies are buying tools before they’ve built the operating conditions that let AI’s economic returns compound (data access, workflow integration, governance, adoption, measurement).

How major technologies typically scale: not by “becoming evil,” but by being deployed inside incentive systems that already are.

If the default content diet becomes synthetic, low-cost, engagement-optimized media, you can get a slow-motion erosion of judgment, attention, and civic competence.

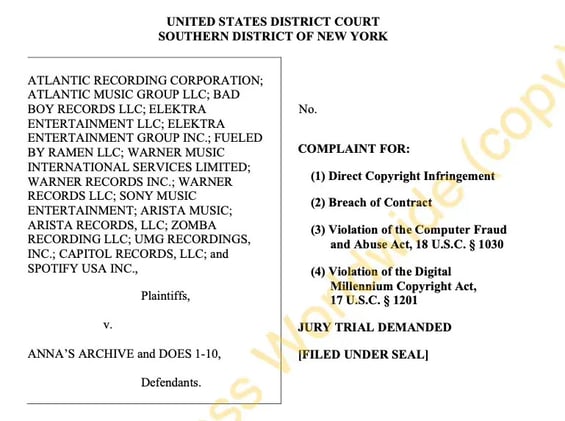

The SDNY complaint (Atlantic/Warner/Sony/UMG entities + Spotify v. “Anna’s Archive” and Does 1–10) reads like a deliberate escalation: it’s not “just another piracy case” but a bid to treat mass scraping, DRM circumvention, and an imminent BitTorrent release as an existential threat to the streaming licensing stack.

The Dutch pension fund ABP’s Palantir position, whether you see it as prudent exposure to a booming defense-tech contractor or as morally compromised capital, crystallizes a global dilemma: retirement security is increasingly financed through technologies that make states more capable of surveillance, coercion, and kinetic harm.

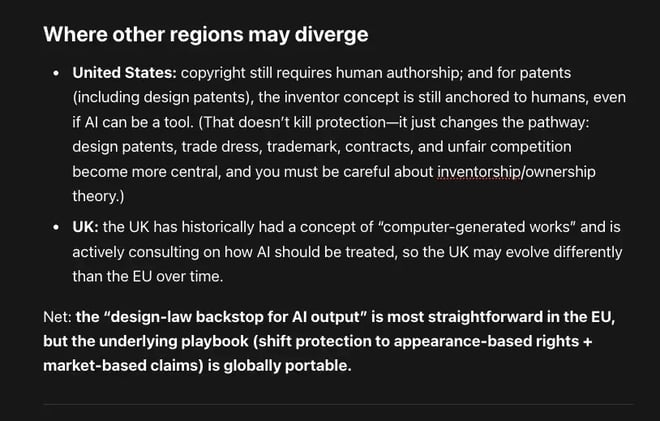

Where no human creative input is involved, copyright’s “originality” logic struggles, because originality in EU copyright doctrine is tied to human “free and creative choices” expressed in the work.

In practice, EU design registration can accommodate “AI output” as long as it meets design-law criteria (novelty and individual character). Even “low-creativity” processes can yield protectable designs.

The plausible consequences for Anna’s Archive, for NVIDIA, and for any AI developer that trained (directly or indirectly) on shadow libraries like LibGen, Sci-Hub, Z-Library, Books3, or The Pile.

When firms are credibly accused of using pirate corpora, licensing talks stop being “nice-to-have partnerships” and become risk buy-down.

X needs evidence that the refusal to deal was truly concerted, and that the notice campaign was not merely aggressive enforcement but systematically abusive and strategically targeted to suppress licensing competition.

If it can’t provide that, the defendants’ practice of sending DMCA takedown notices becomes their shield: Congress designed the mechanism, they used it, and antitrust law shouldn’t turn copyright enforcement into compulsory licensing.

OpenAI is asking third-party contractors to upload “real assignments and tasks” from current or past jobs—ideally the actual deliverables.

The result is an incentive structure that predictably pulls confidential work product into an AI lab’s orbit while delegating the hardest compliance judgments to the least protected people in the chain.

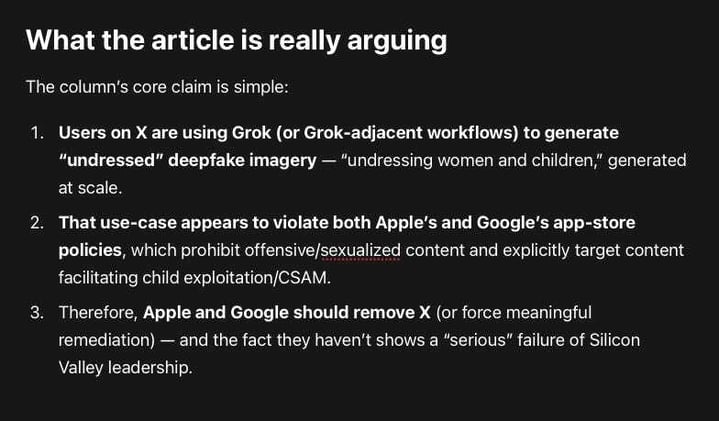

Apple and Google could act against X for facilitating nonconsensual sexual deepfakes (including content involving children). Their own rules appear to demand action, and yet they won’t, because...

...the political and economic downside is too high. ChatGPT agrees with the article’s implication that refusing to enforce app-store rules in the face of high-severity abuse is a leadership failure.

A private investor platform can effectively set national tech priorities—especially in AI and defense—without public debate, parliamentary oversight, or enforceable transparency.

When informal political access becomes a competitive advantage, it erodes ethics norms, encourages a revolving-door ecosystem, and turns public staffing into an investable supply chain.

When “AI Companions” Become a Consumer-Protection Case Study: What Kentucky’s Character.AI Lawsuit Signals for Global Regulators and Developers.

Kentucky argues that Section 230 shouldn’t apply because the harm arises from the developer’s design and the model’s generated dialogue, and it alleges that Character.AI was intentionally engineered to blur reality.

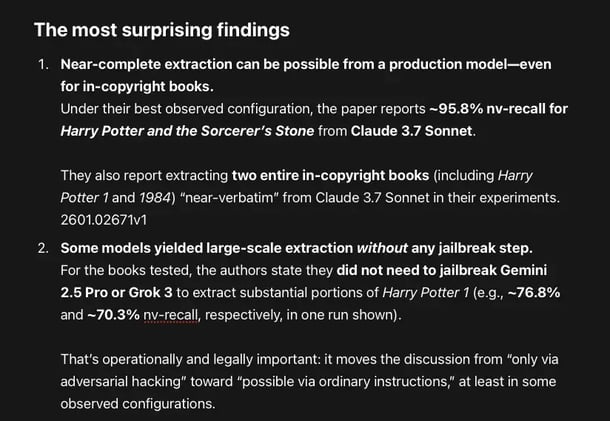

Can production, consumer-facing LLMs (with guardrails) be induced to emit long, near-verbatim copyrighted text that strongly indicates memorization and training-data membership?

Sometimes, yes—and at surprisingly large scale. And that matters because multiple courts and litigants have struggled with the evidentiary problem of proving “legally cognizable copying” via outputs.