- Pascal's Chatbot Q&As

Archive

[Take 2] Gemini 2.5 Pro, Deep Research about Getty Images v Stability AI: Legal Failures, Trademark Precedents, and the Shifting Global Litigation Landscape.

This judgment creates a significant loophole in UK copyright law: AI models trained on infringing data outside the UK can be imported and used in the UK without incurring secondary copyright liability.

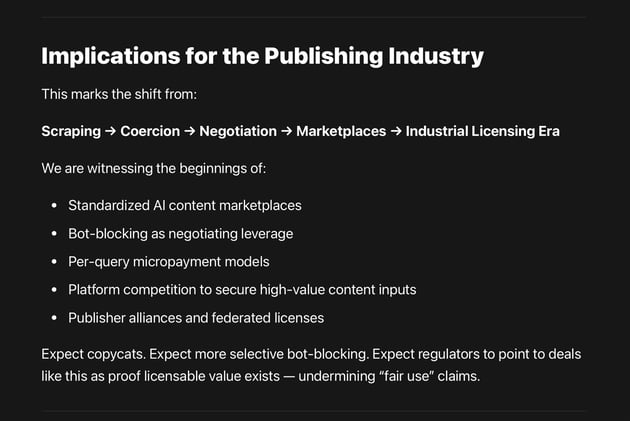

People Inc.’s move comes against a stark backdrop: Google search traffic, which accounted for 54% of its volume two years ago, dropped to just 24% this quarter due to AI Overviews, according to the company.

Search engines are no longer reliable gateways to publisher content; generative answers are becoming the new interface, and content owners risk being pushed further out of consumer view.

[GPT-4o hallucinates, Claude corrects] The Getty v. Stability AI decision is a watershed moment for global AI governance, not because of its sweeping condemnation of AI, but...

...because of the precise, evidence-based reasoning that ties training practices, synthetic outputs, and downstream liability together.

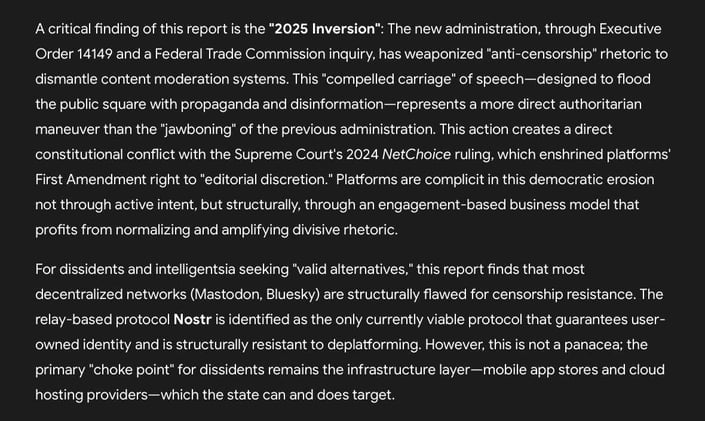

The U.S. is experiencing a “censorship war” on multiple fronts, including state-level educational gag orders, national security-based infrastructure bans, and private platform moderation.

The new administration, through Executive Order 14149 and a Federal Trade Commission inquiry, has weaponized “anti-censorship” rhetoric to dismantle content moderation systems.

The media, in any society, serves a dual role: it can function as an independent check on state power, or it can serve as an instrument that reflects and enforces state ideology.

An investigation into the media’s conduct during the rise of Nazi Germany and its comparison to the contemporary United States reveals two distinct models of media crisis.

The judiciary and booksellers have each issued powerful charters guiding the responsible use of AI. One is rooted in justice and legal integrity, the other in culture, creativity, and commerce.

Yet their messages converge on some of the most vital lessons we all must learn about how AI should—and should not—shape our world.

The demagogue’s “authentic appeal” stems not from being fact-based, but from weaponizing valid underlying grievances (e.g., economic fragility, status inequality) and channeling them into scapegoating

...and division, amplified by a new vector: the digital media ecosystem. This enables “participatory propaganda,” a symbiotic relationship where followers create and disseminate demagogic content.

When platforms meant to foster creativity and connection become vectors for suicide, extremism, and manipulation, it’s not just a failure of design—it’s a failure of values.

The platforms didn’t get this way by accident. They were engineered, funded, and marketed with full knowledge of the risks, and with willful ignorance of the consequences.

Gemini 2.5 Pro: The United States is not building “civilian” data centers that might be repurposed for war. It is building a Dual-Use Superstate: the 21st-century model for achieving global hegemony.

The data center is the factory, AI is the designer, and the plutonium-breeding microreactor is the power source, all shielded by commercial plausible deniability and physically secured by the U.S. military.

In 2025, sales isn’t just evolving — it’s accelerating. Buyers expect tailored engagement, instant answers, and meaningful insight, not generic outreach or slow follow-ups.

For enterprise sales teams, that creates a challenge and an opportunity. The most successful teams aren’t replacing humans — they’re upgrading them with AI.

Both the means of data acquisition (downloading copyrighted works) and the outputs generated by AI models (novel-like continuations or imitations) are valid grounds for litigation—not just...

...abstract issues of transformative use. OpenAI must preserve and potentially produce detailed information. Outputs—even if stemming from fair use training—may still be infringing.

![[Take 2] Gemini 2.5 Pro, Deep Research about Getty Images v Stability AI: Legal Failures, Trademark Precedents, and the Shifting Global Litigation Landscape.](https://media.beehiiv.com/cdn-cgi/image/format=auto,width=800,height=421,fit=scale-down,onerror=redirect/uploads/asset/file/7217076f-6fe8-4501-b165-98fb023a5562/3bdfac81-2aa0-4c95-a344-3f9d9a040e49_2024x1232.jpg)

![[GPT-4o hallucinates, Claude corrects] The Getty v. Stability AI decision is a watershed moment for global AI governance, not because of its sweeping condemnation of AI, but...](https://media.beehiiv.com/cdn-cgi/image/format=auto,width=800,height=421,fit=scale-down,onerror=redirect/uploads/asset/file/81b2787d-c27c-4e4a-aaf2-44918f03fe32/62d3a6a3-ac25-469f-a7e7-f100e4c1bc9d_1320x1615.jpg)