- Pascal's Chatbot Q&As



Archive

ChatGPT analyzes Marco Bassini's views: If you declare GenAI output “free speech,” you don’t just protect democracy against censorship. You also create an all-purpose deregulatory weapon...

...and an accountability escape hatch, while granting quasi-person status to systems that cannot bear moral responsibility.

The uncomfortable truth is that AI chat is becoming a new kind of societal sensor—one that is commercially operated, globally scaled, and only partially legible to outsiders.

The choice is whether scanning of chats evolves into accountable safety infrastructure with hard limits—or drifts into unaccountable surveillance theatre that fails at prevention and harms trust.

Modern censorship isn’t merely content removal; it’s an end-to-end control stack that combines the state security apparatus with platform governance to achieve “invisible manipulation at scale.”

Posts detail how this system moves beyond crude deletion into sophisticated behavioral modification through ranking algorithms, throttling, routing, and narrative substitution.

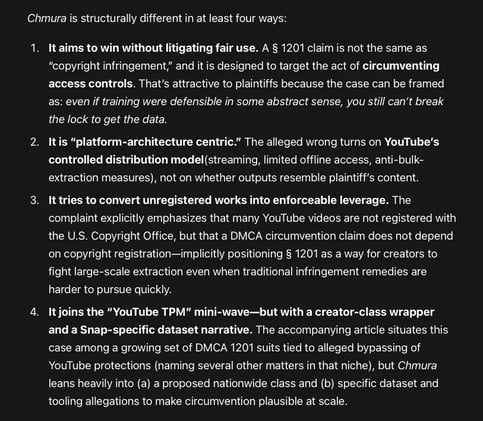

Chmura v. Snap alleges that Snap could only have acquired YouTube videos at scale for AI training by bypassing YouTube’s technical access controls.

In other words: the wrong is not only (or even primarily) the training; it is the alleged breaking of the access gate that makes the training possible.

The analysis documents the “governing logic of frontier-model competition: treat totality of human expression as strategic infrastructure, and treat permissions as friction to be routed around.”

This represents systematic wealth transfer from knowledge producers to extraction platforms, justified through innovation rhetoric while operating as IP laundering at industrial scale.

The posts reveal systematic efforts by tech conglomerates to bypass environmental protections through legal, technical, and administrative maneuvers when building AI infrastructure.

The posts document how data centers pose existential risks to regional power grids, compromise local water sovereignty, and undermine the democratic integrity of urban planning.

SCOTUS has delivered a blunt separation-of-powers ruling with immediate economic bite: the President cannot use the International Emergency Economic Powers Act (IEEPA) as a backdoor tariff statute.

However politically tempting “emergency” framing may be, tariffs remain—constitutionally and statutorily—first and foremost a congressional instrument.

An Office/Microsoft 365 Copilot Chat bug caused draft and sent emails with confidential/sensitivity labels to be “incorrectly processed” and summarized by Copilot for weeks.

This happened despite organizations having DLP policies intended to prevent it. Microsoft said it began rolling out a fix in early February but did not disclose how many customers were affected.

Cresti’s core claim is simple: if you can’t explain where data goes and who can access it, you cannot credibly claim governance.

Built-in “assistants” often route prompts, documents, or derived metadata to external services for processing. Even when vendors promise security, institutions still need auditability.

We will not regulate AI effectively by asking for nicer narratives. We will regulate it by demanding verifiable evidence and making governance executable.

If AI developers do not internalize that, the likely outcome is a cycle of incidents, legitimacy crises, enforcement spikes, and a political backlash that will hit even “good” deployments.

The President of the Paris court issued three judgments rejecting Cloudflare's claim that DNS/CDN/proxy blocking would be “technically impossible,” too costly, or inevitably “international”...

...and therefore disproportionate. The dispute between Canal+ and Cloudflare reads like a manual for how courts can treat “infrastructure layer” services when they are used to facilitate mass infringement.

The “AI revolution” will only deliver on its promise to these critical sectors if we acknowledge that the cheapest path to a response is often the most expensive path to an error.

Until systems are designed to prioritize “truth at any cost” over “efficiency at any price,” their role in high-stakes decision-making must remain strictly supervised by human experts.