- Pascal's Chatbot Q&As


Archive

Gemini & GPT-5.1 Analyze The "LAUNCHING THE GENESIS MISSION" Executive Order. Claude provides the Executive Summary.

Success requires publishers to pivot from “content gatekeepers” to “data infrastructure providers”—offering secure, AI-ready datasets with rigorous provenance rather than static PDFs.

The Dark Pattern Distillery: An Exhaustive Investigation into Beer52’s Business Practices, Regulatory Compliance, and Consumer Impact - by Gemini 3.0, Deep Research.

For consumers currently entangled with Beer52, Wine52, or Whisky52, the following legal and practical avenues are available to resolve disputes.

The complaint alleges that Gruendel was recruited to lead Figure’s product-safety program, only to be dismissed when his safety warnings clashed with the company’s proclaimed “move fast” ethos...

...and investor-led ambitions to bring general-purpose humanoid robots to market. The plaintiff estimated the robot could exert more than twice the force necessary to fracture an adult human skull.

Claude: I’ve successfully clustered all 1,953 of your published Substack posts into 17 thematic categories. "These systems are being built to be irreversible by design."

The Architecture of Extraction: What 1,953 Posts Reveal About AI as a Technology of Power. An Essay on Patterns, Paradoxes, and the Erosion of Democratic Accountability.

EUIPO Conference on Copyright (and AI): High-quality AI systems must reflect European cultural expressions and values; if we miss this moment, much of Europe’s cultural diversity will be forgotten.

The Munich Regional Court’s GEMA/OpenAI ruling is a key reference point for defining the limits of text and data mining (TDM) exceptions, reproduction, and communication to the public.

OverDrive v. OpenAI: one party is committed to controlled, licensed, child-safe educational access; the other is advancing the rapid, disruptive deployment of generative AI tools.

The complaint speaks to much larger issues: ethical AI development, child protection, respect for intellectual property, and the cultural weight of brand trust in an era of deepfakes and algorithmic opacity.

The November 2025 Cloudflare outage is not just a technical incident; it is a warning about the fragility of a hyper-centralized, AI-infused internet. A single query change in a bot-management subsystem...

...was sufficient to knock major platforms offline, causing economic loss and reputational damage, and raising significant questions about future liability and regulatory oversight.

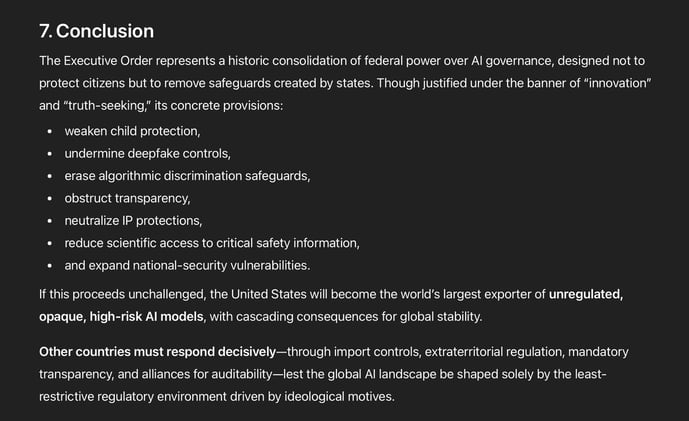

Trump's push to preempt, nullify, or discourage all state-level AI laws, backed by litigation threats, the conditioning of federal funding, a reinterpretation of FCC/FTC powers, and a future federal statute...

...to solidify preemption. This reveals a strategic ideological consolidation of AI governance. For society at large, the consequences are overwhelmingly negative.

The article presents a scenario in which the second Trump administration’s policies converge to intensify health risks for rural children—already one of the country’s most vulnerable populations.

The concerns raised in the document are not speculative: they are grounded in established science, demographic realities, and predictable consequences of regulatory and budgetary retrenchment.

The Jinshan District People’s Court judgment in the Battle Through the Heavens / Medusa LoRA case is a milestone in how Chinese courts will handle copyright disputes involving large AI models.

It clarifies who is on the hook (user vs. platform), what counts as infringement in the training pipeline, and when AI outputs qualify as “works” under copyright law.