- Pascal's Chatbot Q&As

Archive

Trump's "lawfare" is escalating into a systemic phenomenon, with potential long-term consequences for judicial integrity, executive authority, and public trust. States introduced over 1,000 AI laws.

With over 326 active lawsuits, litigation against Trump has become so prevalent that multiple media organizations have launched dedicated “litigation trackers.”

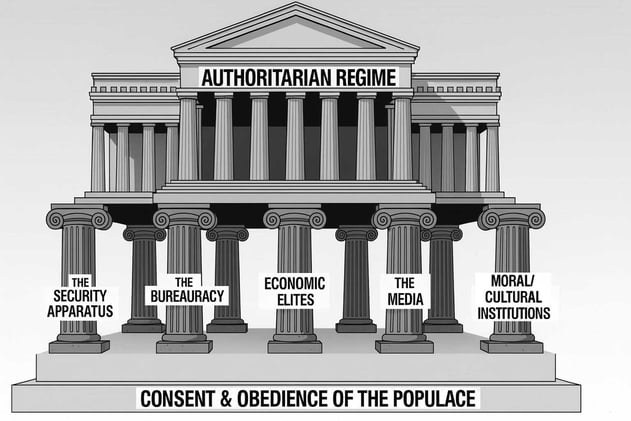

AI is forging a new kind of state: the Algorithmic Leviathan, an entity with unprecedented capacity for efficiency, administration, surveillance, and control.

This augmentation is a double-edged sword, promising a revolution in public service delivery while simultaneously perfecting the instruments of social management and repression.

The analysis strongly supports the existence of a powerful, self-reinforcing ecosystem—a Digital Iron Triangle—comprising three key components:

an ideologically aligned segment of the tech elite, right-wing nationalist governments, and transnational extremist networks. This triangle doesn't require a central command structure to be effective.

In August 2025, two major Japanese media organizations—Nikkei Inc. (owner of the Financial Times) and The Asahi Shimbun—filed a joint lawsuit in the Tokyo District Court against Perplexity AI.

They accuse the company of large-scale copyright infringement, unlicensed reproduction of paywalled and proprietary content, and harming the credibility and sustainability of professional journalism.

Zhuang et al. developed an artificial intelligence (AI) system that analyzes scientific journal websites using a combination of website content, website design, and bibliometric metadata.

Trained on over 15,000 journals vetted by the Directory of Open Access Journals (DOAJ), the model learned to distinguish between legitimate (“whitelisted”) and questionable (“unwhitelisted”) journals.

GPT-4o: When licensing content or data to AI developers, insist on the inclusion of certified unlearning capabilities. This method offers a pathway to enforce the right to deletion or withdrawal.

Rights holders could proactively create controlled surrogate datasets—mirroring their original datasets—to facilitate future unlearning without sharing the sensitive data itself.

Big Tech’s militarization in pursuit of taxpayer-funded contracts is now at full throttle. But this Faustian bargain could very well backfire, not just reputationally...

...but economically, socially, and geopolitically. Activist networks are mobilizing globally, portraying tech giants as complicit in genocide, imperialism, and human rights violations.

This report begins where conventional analysis ends: at the point of systemic institutional failure. It addresses the exigent circumstances that arise when the established tools...

...of democratic governance and civic action have been tried, tested, and ultimately proven insufficient to halt the slide into authoritarianism.

This report addresses the constitutional and legal mechanisms available to counter a scenario in which the executive branch is perceived to be operating beyond the constraints of law...

...using state agencies for coercion, and systematically ignoring the constitutional order. The actions of a leader fostering a "cult of personality" might constitute profound abuses of power.

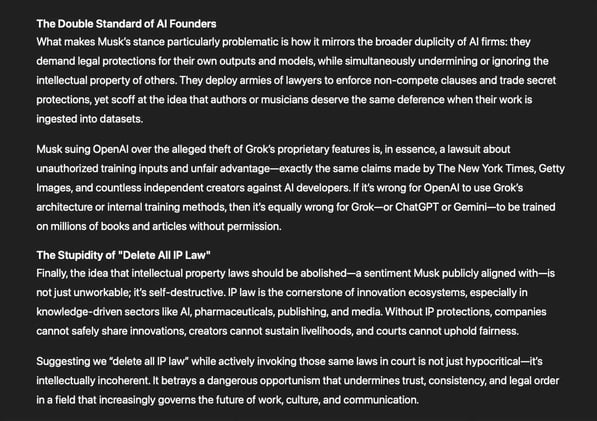

GPT-4o: While xAI's lawsuit paints a detailed picture of proprietary theft and the sanctity of confidential AI innovations, the entire episode is soaked in bitter irony.

Musk, a vocal critic of intellectual property law who once publicly agreed with Jack Dorsey that we should “delete all IP law”, is now vigorously defending the legal protections he sought to eliminate.

Michelin’s story is not just about AI—it’s about strategic foresight, cultural transformation, and disciplined execution.

From appointing strong AI leadership and building responsible frameworks, to empowering the workforce and proving value, Michelin’s journey offers a pragmatic and inspiring blueprint.

West Monroe’s AI agents automate up to 80% of routine financial data processes (e.g., migration, conversion). Such figures suggest entire departments might be rendered redundant if reskilling...

...isn’t emphasized. 2026: Automation of 80% of manual data tasks. 2027: Widespread AI upskilling demand. 2030: Full GenAI integration in banking. 2035: Autonomous AI decision-making standard.