While the citizenry is pushed toward “Radical Transparency,” the architects of this system (Big Tech and the State) increasingly utilize “Trade Secrets” and “National Security” to obfuscate...

...their own operations. 85% probability that the elimination of privacy is the functional ambition of Big Tech, 99% probability that the US government is aware of and complicit in this trajectory.

The Glass Earth Protocol: An Exhaustive Analysis of Silicon Valley’s Post-Privacy Ambitions and the Complicity of the State

by Gemini 3.0, Deep Research. Warning, LLMs may hallucinate!

Executive Summary

This report investigates the hypothesis that the dominant ambition of Silicon Valley and Big Tech is to engineer a global environment where secrets—defined as private information held by governments, businesses, and citizens—are structurally impossible to maintain. Through a rigorous analysis of corporate manifestos, technical schematics, economic incentives, and legislative records, the following document argues that we are witnessing the construction of a “Post-Privacy” civilization. This transition is not accidental but is the result of a convergence between “Surveillance Capitalism,” which commodifies human experience, and “Dataism,” an ideological framework that views the obstruction of information flow as a systemic failure.

The analysis reveals a profound asymmetry in this ambition: while the citizenry is pushed toward “Radical Transparency,” the architects of this system (Big Tech and the State) increasingly utilize “Trade Secrets” and “National Security” to obfuscate their own operations. Thus, the ambition is not for a world where no one has secrets, but where the subjects of the system have no secrets from its operators.

Furthermore, this report assesses the complicity of the United States government. Evidence confirms that the state is not merely an observer but an active financier and beneficiary of this surveillance architecture. Through the “Data Broker Loophole,” the “Revolving Door” of personnel, and strategic partnerships like Project Maven, the state leverages Silicon Valley’s capabilities to bypass constitutional limitations on search and seizure.

The report concludes with a probability assessment, positing an 85% probability that the elimination of privacy is the functional ambition of Big Tech (driven by the requirements of Artificial General Intelligence and behavioral modification economies) and a 99% probability that the US government is aware of and complicit in this trajectory.

1. The Ideological Genesis: From Privacy Norms to Radical Transparency

To understand the trajectory of Silicon Valley, one must first dissect the philosophical and rhetorical shifts that have redefined the concept of privacy over the last two decades. The erosion of privacy is often framed by industry leaders not as a loss, but as an evolution—a necessary shedding of archaic social norms in service of connectivity, safety, and efficiency.

The most explicit articulation of this shift occurred in January 2010, when Facebook (now Meta) CEO Mark Zuckerberg declared that “privacy is no longer a social norm”.1 This statement was not merely descriptive of a changing world but prescriptive of the company’s strategic direction. Zuckerberg argued that the rise of social media had fundamentally altered what people expect to keep private, suggesting that the “default” setting for human existence had shifted from opacity to transparency. Scholars examining this period note that this shift was engineered through “privacy calculus,” where platforms incentivized users to trade data for short-term benefits (discounts, connection), effectively gaslighting the public into surrendering their rights under the guise of “evolving norms”.2

This sentiment was reinforced by Eric Schmidt, then-CEO of Google, who in 2009 posited a moral argument against privacy: “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place”.3 This “Clean Hands” doctrine fundamentally redefines privacy from a human right to a refuge for the guilty. It implies that a virtuous citizenry should have no objection to total observation. Critics like Bruce Schneier and the Electronic Frontier Foundation (EFF) immediately recognized this as a dangerous fallacy that ignores the nuance of human dignity, arguing that it reduces adults to “children, fettered under watchful eyes,” constantly fearful that innocent actions could be decontextualized and used against them by future authorities.4

However, the “If you have nothing to hide” argument served a vital corporate purpose: it delegitimized resistance to data extraction. By framing privacy as suspicious, Big Tech leaders created a cultural environment where opting out of surveillance was viewed as an admission of deviance.

1.2 Dataism: The Theology of the Algorithm

Beyond the utilitarian arguments lies a deeper, quasi-religious ideology termed “Dataism.” Historian Yuval Noah Harari identifies Dataism as the emerging orthodoxy of Silicon Valley, which posits that the universe consists of data flows and that the value of any phenomenon or entity is determined by its contribution to data processing.6 In this worldview, the obstruction of data flow—i.e., privacy—is a sin against the “cosmic vocation” of the information system.7

Dataism provides the moral framework for the “no secrets” ambition. If the ultimate goal of humanity is to create an all-encompassing data-processing system (potentially leading to Artificial General Intelligence or “God”), then the retention of private data is an act of selfishness that retards the evolution of the species.6 This ideology is evident in the rhetoric of “connecting the world” and the “quantified self” movement. It views humans as “hackable animals”,8 biological algorithms whose primary function is to generate behavioral data for the greater network.

This ideological stance creates a “moral mandate” for surveillance. It allows tech leaders to view their intrusions not as exploitation, but as liberation—freeing information from the silos of the individual mind and integrating it into the collective digital consciousness.

1.3 The Distortion of “The Transparent Society”

The intellectual lineage of this transparency often references David Brin’s 1998 work, The Transparent Society. Brin argued that in a world of proliferating technology, surveillance is inevitable. He proposed that the only defense against tyranny was “reciprocal transparency” (sousveillance)—where the citizens can watch the government and corporations just as easily as they are watched.9

However, Silicon Valley has selectively adopted Brin’s forecast of inevitability while discarding the principle of reciprocity. We see a “one-way mirror” effect:

For the Citizen: Radical Transparency. Every click, step, and transaction is recorded.

For the Corporation: Trade Secrets. The algorithms that process this data are “black boxes,” protected by intellectual property laws and deliberate obfuscation.11

This distortion reveals that the ambition is not a utopian “transparent society” of equals, but a “Panopticon” where the few observe the many. The “transparency” championed by Google and Facebook is applied outwardly to the user base, never inwardly to the mechanism of control.

2. The Economic Engine: Surveillance Capitalism and the Prediction Imperative

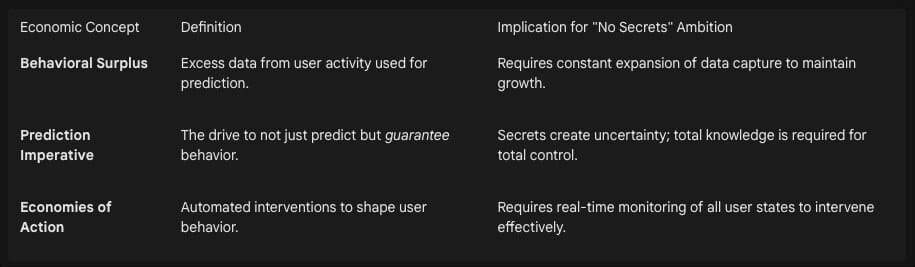

The ideological push for transparency is inextricably linked to the economic imperatives of the modern internet economy. The dominant business model of the digital age is what Shoshana Zuboff terms “Surveillance Capitalism.” This economic logic provides the financial fuel for the “no secrets” engine.

2.1 The Extraction of Behavioral Surplus

Surveillance capitalism begins with the discovery of “behavioral surplus.” In the early days of the internet, user data was used primarily to improve service (e.g., remembering a language preference). Zuboff identifies the pivot point where companies realized that the data left over—the “surplus”—held immense predictive value.13 This surplus data, which includes mouse movements, voice intonation, location history, and social connections, is stripped from the user’s life and reframed as “raw material” for machine intelligence.15

To maximize profit, surveillance capitalists must maximize the extraction of this raw material. A “secret” kept by a user represents a gap in the dataset—a “hole” in the raw material supply chain. Therefore, the economic logic of the system dictates that all aspects of human life must be rendered as data. There is no “enough”; the imperative is total capture. This drives the expansion of sensors into the home (smart speakers), the car (telematics), and the body (wearables), obliterating the physical boundaries of privacy.16

2.2 The Shift from Prediction to Modification

The ambition extends beyond merely knowing secrets to controlling outcomes. The financial value of behavioral surplus lies in its ability to power “prediction products”—bets on future human behavior sold to advertisers, insurers, and political operatives.15

However, prediction is uncertain. To guarantee outcomes (and thus higher premiums for their products), surveillance capitalists must move from monitoring to “actuation”—modifying behavior to align with the prediction. This is the “Prediction Imperative”.17 To modify a human effectively, the system requires a totalistic view of the subject. Any unknown variable (a secret) introduces entropy and risk. Thus, the elimination of secrets is a prerequisite for the “perfect certainty” that the market demands.

2.3 The Privacy Paradox and the Trap of Convenience

The system relies on the “Privacy Paradox,” where users express concern for their privacy but fail to act on it due to the high social and economic costs of opting out.2 The architecture of the digital economy is designed to be “inescapable.” Participation in modern life (banking, employment, social connection) increasingly requires submission to surveillance platforms.

This creates a coercive environment where the surrender of secrets is the price of admission to society. The “Privacy Calculus”2 suggests users weigh these costs, but the calculation is rigged; the immediate convenience of a “free” service is tangible, while the long-term cost of a “digital dossier”18 is abstract and distant.

3. The Architecture of the Panopticon: Technology as the Enforcer

The ambition to eliminate secrets is being realized through specific hardware and software architectures that bridge the gap between biological existence and digital identity.

3.1 Worldcoin and the End of Anonymity

The “World” project (formerly Worldcoin), co-founded by Sam Altman, represents the most direct attempt to catalog the entire human population. By scanning irises via “The Orb,” the project generates a “World ID”—a unique “Proof of Humanness”.19

While the stated goal is to distinguish humans from AI bots in a future digital economy, the implications for privacy are profound. The creation of a centralized, immutable biometric registry held by a private corporation effectively ends the possibility of anonymity. If “World ID” becomes the standard for internet access (a “digital passport”), every online action can be cryptographically linked to a specific biological human.19

This fulfills the Dataist vision: a single, unified data stream for every organism. The “secret” of a pseudonym or a disconnectable identity is eradicated. Critics argue that this centralizes unprecedented power, creating a system that could be “weaponised to marginalise, exclude, or manipulate entire populations”.19

3.2 Neuralink and the “Conceptual Telepathy” of the Merge

Elon Musk’s Neuralink pushes the frontier of transparency into the human mind itself—the final sanctuary of the secret. While current iterations focus on medical rehabilitation, Musk has explicitly stated that the long-term goal is “conceptual telepathy”.21 He argues that language is a “lossy” compression algorithm and that direct brain-to-brain (or brain-to-cloud) communication would be more efficient.22

Language acts as a natural privacy filter; we choose what to say and what to withhold. Direct neural interfacing implies the bypass of this filter. If thoughts can be transmitted without the “bottleneck” of speech, the distinction between private thought and public communication dissolves. Musk frames this as an upgrade, but sociologists warn it is a “puppet concept” that intensifies surveillance.23

This aligns with Sam Altman’s “The Merge,” a hypothesis that humans must fuse with AI to remain relevant.24 In a merged state, where the cloud acts as the exocortex, “privacy” becomes a category error. One does not have privacy from one’s own brain; if the brain is connected to the network, the network has no secrets.

3.3 The Paradox of Encryption: Metadata and Client-Side Scanning

A common counter-argument to the “no secrets” hypothesis is the widespread adoption of End-to-End Encryption (E2EE), such as the Signal Protocol used in WhatsApp.25 However, a nuanced analysis reveals that this often serves corporate interests more than user privacy.

The Metadata Loophole: While E2EE protects the content of a message, it does not protect the metadata—who spoke to whom, when, and where. Mark Zuckerberg’s 2019 pivot to a “privacy-focused” vision for Facebook was criticized as a consolidation move that allows Meta to unify the backends of WhatsApp, Instagram, and Messenger.27 By encrypting content, Meta reduces its liability for moderation while retaining the “social graph” and behavioral metadata that fuels its ad engine.25 The “secret” of the content is kept, but the “secret” of the relationship and behavior is harvested.
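To make the metadata point concrete, the following minimal sketch shows how a relationship graph could be rebuilt from message envelopes alone, without decrypting anything. The record layout (`sender`, `recipient`, `timestamp`, `ciphertext`) and the function name `build_social_graph` are illustrative assumptions, not a description of how any real messaging service stores data.

```python
from collections import Counter
from typing import NamedTuple


class Envelope(NamedTuple):
    """Hypothetical per-message record visible to a server under E2EE."""
    sender: str
    recipient: str
    timestamp: int      # Unix seconds
    ciphertext: bytes   # opaque to the server; never inspected below


def build_social_graph(envelopes: list[Envelope]) -> Counter:
    """Count messages per unordered pair of users, using metadata only."""
    graph: Counter = Counter()
    for env in envelopes:
        pair = tuple(sorted((env.sender, env.recipient)))
        graph[pair] += 1
    return graph


envelopes = [
    Envelope("alice", "bob", 1_700_000_000, b"\x8f\x02"),
    Envelope("bob", "alice", 1_700_000_060, b"\x11\xa4"),
    Envelope("alice", "clinic", 1_700_003_600, b"\x7c\x55"),
]
graph = build_social_graph(envelopes)
strongest_tie = graph.most_common(1)[0]
print(strongest_tie)  # (('alice', 'bob'), 2)
```

The `ciphertext` field is never read, yet the output already shows who talks to whom and how often, which is the sense in which the relationship remains visible even when the content does not.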

Client-Side Scanning (The Apple Precedent): The fragility of device privacy was exposed in 2021 when Apple proposed “Client-Side Scanning” (CSS) for Child Sexual Abuse Material (CSAM).29 Unlike cloud scanning, CSS inspects files on the user’s device before upload. Although abandoned after backlash, the proposal demonstrated that tech giants view the user’s device not as a private castle, but as a node they can police. The technology exists to scan for any content mandated by a government, effectively turning the phone into a mole in the user’s pocket.31
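The general shape of on-device scanning can be sketched as a fingerprint lookup that runs before any upload. This is a simplified illustration only: Apple's proposal relied on perceptual hashing and a more elaborate matching protocol, whereas the sketch below substitutes an exact SHA-256 match. The names `BLOCKLIST`, `fingerprint`, and `scan_before_upload` are hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Exact-match fingerprint (an assumption; real systems use perceptual hashes)."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical blocklist delivered to the device by whoever operates the scanner.
BLOCKLIST = {fingerprint(b"known-flagged-file")}


def scan_before_upload(files: dict[str, bytes], blocklist: set[str]) -> list[str]:
    """Return the names of local files whose fingerprint is on the blocklist."""
    return [name for name, data in files.items() if fingerprint(data) in blocklist]


local_files = {
    "holiday.jpg": b"beach photo bytes",
    "doc.bin": b"known-flagged-file",
}
flagged = scan_before_upload(local_files, BLOCKLIST)
print(flagged)  # ['doc.bin']
```

Nothing in `scan_before_upload` depends on what the blocklist entries represent; swapping in a different list changes what is searched for without changing the code, which is the generality critics of the proposal pointed to.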

4. The State-Corporate Nexus: The Complicity of the US Government

The ambition for a world without secrets is not solely a corporate endeavor; it is a joint venture with the state. The boundary between “Silicon Valley” and the “US Intelligence Community” (IC) has become porous, creating a complex where corporate surveillance feeds state intelligence.

4.1 The Data Broker Loophole: Buying What Cannot Be Seized

The Fourth Amendment protects Americans from unreasonable searches and seizures, generally requiring a warrant for the government to access private data. However, the “Data Broker Loophole” allows the government to bypass this restriction by purchasing data that is commercially available.32

Mechanism: An app (e.g., a weather or prayer app) collects location data and sells it to a Data Broker. The NSA or DoD then purchases this data from the broker. Since the user “consented” to the app’s terms, and the government is a “market participant,” no warrant is required.33

Scale: Declassified documents and reports from the Office of the Director of National Intelligence (ODNI) confirm that the IC purchases “vast amounts” of Commercially Available Information (CAI), including internet browsing records and granular location data.34

Implication: The US government actively funds the surveillance capitalism market. It is a “whale” client. By purchasing this data, the state validates and incentivizes the “no secrets” economy. The government is aware that this data reveals “intimate details” of citizens’ lives 36 but continues the practice, prioritizing intelligence gathering over privacy.
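A minimal sketch of why granular location data is considered revealing: given rows keyed by an advertising identifier, the place a device spends its nights can be estimated with a simple frequency count. The row format, the identifiers, the coordinates, and the function `likely_home` are all hypothetical, and UTC hours are used for simplicity where a real analysis would need local time.

```python
from collections import Counter
from datetime import datetime, timezone

UTC = timezone.utc

# Hypothetical broker rows: (advertising_id, timestamp, latitude, longitude).
PINGS = [
    ("ad-123", datetime(2024, 1, 1, 2, 0, tzinfo=UTC), 40.7128, -74.0060),
    ("ad-123", datetime(2024, 1, 2, 3, 30, tzinfo=UTC), 40.7129, -74.0061),
    ("ad-123", datetime(2024, 1, 2, 14, 0, tzinfo=UTC), 40.7580, -73.9855),
    ("ad-456", datetime(2024, 1, 1, 1, 0, tzinfo=UTC), 34.0522, -118.2437),
]


def likely_home(pings, ad_id, night_hours=range(0, 6), precision=3):
    """Return the grid cell where `ad_id` appears most often at night, or None."""
    cells = Counter()
    for pid, ts, lat, lon in pings:
        if pid == ad_id and ts.hour in night_hours:
            # Rounding to 3 decimals groups pings into cells of roughly 100 m.
            cells[(round(lat, precision), round(lon, precision))] += 1
    return cells.most_common(1)[0][0] if cells else None


home = likely_home(PINGS, "ad-123")
print(home)
```

No name or account appears in the input, yet a night-time cluster is usually enough to tie an "anonymous" identifier to a street address.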

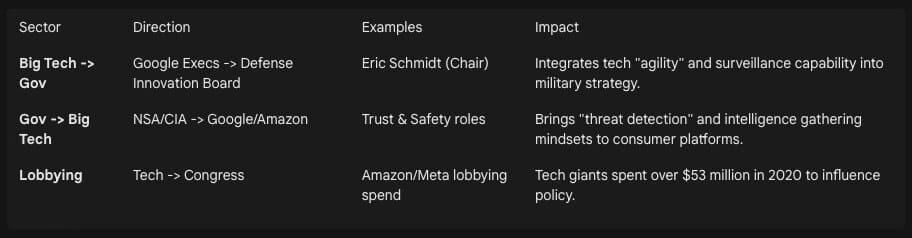

4.2 The Revolving Door: Personnel and Policy Integration

A robust “revolving door” ensures that the ideologies and strategies of Silicon Valley and the National Security State remain aligned.

Personnel Flow: Research identifies hundreds of high-ranking officials moving between the DoD/IC and Big Tech. For instance, former NSA and CIA officials have taken senior roles at Google, while tech executives serve on the Defense Innovation Board.37

Effect: This cross-pollination normalizes the ethos of “Total Information Awareness” within the tech sector. When a former intelligence officer leads a tech company’s “Trust and Safety” team, the operational logic of the intelligence community permeates the corporate culture.

4.3 Operational Partnerships: Project Maven and Palantir

The collaboration extends to the direct weaponization of data.

Project Maven: Google’s partnership with the Pentagon to use AI for analyzing drone surveillance footage sparked massive employee protests.39 While Google ostensibly stepped back, the incident highlighted the demand for Silicon Valley’s AI to process military-grade surveillance data.

Palantir: Co-founded by Peter Thiel with backing from In-Q-Tel (CIA’s venture arm), Palantir explicitly builds the operating system for state surveillance.41 Its software integrates disparate databases (criminal records, license plates, social media) to create comprehensive “digital dossiers” for ICE, the FBI, and the military. Thiel’s philosophy supports a strong state apparatus, arguing that superior technology allows for “surgical” rather than indiscriminate surveillance, though critics argue the result is simply more effective domination.43

4.4 Legislative Ambition: The EARN IT Act

The legislative branch has also attempted to erode privacy protections. The EARN IT Act (Eliminating Abusive and Rampant Neglect of Interactive Technologies) proposed a commission to determine “best practices” for internet platforms.44

The Threat: If platforms refused to adhere to these practices (which could include breaking encryption to scan for CSAM), they would lose Section 230 liability protection.

Analysis: This acts as a “backdoor mandate by proxy.” It demonstrates the US government’s intent to ensure that no digital space remains “dark” to law enforcement. The goal is to eliminate the “secret” of encrypted communication, using child safety as the moral lever to pry open the device.

5. The AGI Imperative: Why the Future Needs Your Secrets

The current race toward Artificial General Intelligence (AGI) serves as the ultimate accelerant for the “no secrets” ambition. The technical requirements of building “Superintelligence” create an existential imperative for the ingestion of all human knowledge—private and public.

5.1 Scaling Laws and the Data Wall

“Scaling Laws” in AI research describe how model performance improves predictably, but with diminishing returns, as compute and data grow: empirically, loss falls roughly as a power law in each.46 Each further proportional reduction in loss therefore requires multiplying the supply of text, images, and video.
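The arithmetic behind this data hunger can be sketched under a simple assumption: that loss follows a pure power law in dataset size, L ∝ D^(−α), with no irreducible floor. The exponent values below are illustrative placeholders rather than measured figures, and `data_multiplier` is a hypothetical helper.

```python
def data_multiplier(loss_ratio: float, alpha: float) -> float:
    """Factor by which data must grow so that new_loss = loss_ratio * old_loss.

    Assumes loss is proportional to D ** (-alpha), so
    (D_new / D_old) ** (-alpha) = loss_ratio.
    """
    if not 0.0 < loss_ratio < 1.0:
        raise ValueError("loss_ratio must be between 0 and 1")
    if alpha <= 0.0:
        raise ValueError("alpha must be positive")
    return loss_ratio ** (-1.0 / alpha)


# Halving the loss under two illustrative exponents.
print(data_multiplier(0.5, alpha=0.5))  # 4.0
print(data_multiplier(0.5, alpha=0.1))  # 1024.0
```

The smaller the exponent, the steeper the bill: with α = 0.1, halving the loss would take about a thousand times more data, which is why a finite pool of public text is described as a wall.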

The Data Wall: Researchers estimate that high-quality public data (the internet) will be exhausted by roughly 2026.48 The “low-hanging fruit” of Wikipedia, Reddit, and Common Crawl has been picked.

The Private Imperative: To continue scaling, AI labs must breach the wall of private data. This includes proprietary databases, private emails, chat logs, medical records, and copyrighted works. This drives the aggressive change in Terms of Service (e.g., Zoom, Adobe, Google) that allows companies to train AI on user content.50

5.2 Synthetic Data vs. The “Real” Gold Standard

While “synthetic data” (AI generating data to train AI) is proposed as a solution, research warns of “model collapse”—a degenerative feedback loop where training on synthetic data eventually degrades intelligence.52 Therefore, “real” human behavioral surplus remains the gold standard.
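A toy illustration of the feedback loop, not the method used in the cited research: here the "model" is nothing more than the empirical distribution of its training set, and each generation is trained only on samples drawn from the previous one. The function name and parameters are hypothetical. Because a value that fails to be sampled can never reappear, diversity can only shrink.

```python
import random


def resample_generations(n_samples: int, generations: int, seed: int = 0) -> list[int]:
    """Track how many distinct values survive each round of self-training."""
    rng = random.Random(seed)
    data = list(range(n_samples))  # generation 0: every "real" value is distinct
    diversity = [len(set(data))]
    for _ in range(generations):
        # Generate synthetic data by sampling the previous generation's
        # output with replacement, then train the next "model" on it.
        data = rng.choices(data, k=n_samples)
        diversity.append(len(set(data)))
    return diversity


diversity = resample_generations(n_samples=200, generations=30)
print(diversity[0], diversity[-1])
```

Rare values disappear first, a crude analogue of the loss of distribution tails that the model-collapse literature describes.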

This validates the surveillance capitalist model in the age of AI: the 8 billion humans on Earth are not just consumers; they are the “mines” for the raw material (experience/secrets) required to build AGI. A world where citizens keep secrets is a world where AGI starves.

5.3 The Asymmetry of Open vs. Closed AI

A critical hypocrisy is evident in the shift of companies like OpenAI from “Open” to “Closed” source models.

Rationale: Companies cite “safety” and “national security” as reasons to hide their model weights and training data.53

The Reality: This creates a stark information asymmetry. The AI learns from everyone’s secrets (radical transparency for the public), but the public is forbidden from seeing how the AI works or exactly what it knows (radical secrecy for the corporation). This centralization of secrecy creates a “God-view” for the operators of AGI, while the subjects remain transparent.

6. Societal Implications: The Glass House and Social Cooling

The realization of this ambition leads to profound sociological shifts, creating a “Glass House” society where the awareness of observation fundamentally alters human behavior.

The phenomenon of “Social Cooling” refers to the chilling effect of surveillance on free expression.55 When citizens know (or suspect) that their search histories, location data, and private messages are accessible to authorities, employers, or insurers, they modify their behavior to conform to perceived norms.

The Conformity Engine: This leads to a flattening of culture and a reduction in dissent. In a “no secrets” world, taking a risk—political, social, or intellectual—becomes prohibitively dangerous. The “If you have nothing to hide” argument ignores the reality that social norms change; behavior that is acceptable today may be penalized tomorrow, but the data record is permanent.5

6.2 The End of Forgetting

Digital memory is perfect. Unlike human memory, which fades and allows for forgiveness, the “Digital Dossier” persists forever.18

The Right to be Forgotten: While regulations like GDPR attempt to counter this, the technical reality of Large Language Models “memorizing” training data makes true deletion nearly impossible.56 Once a secret is ingested by the model, it becomes part of the statistical matrix of the intelligence. A world without secrets is a world without the possibility of reinvention.

6.3 The New Caste System

The “Post-Privacy” world is not a flat hierarchy. It establishes a new caste system based on visibility:

The Watchers (The Invisibles): Intelligence agencies, Big Tech executives, and those with the technical capability to operate the algorithms. They maintain intense secrecy (NDAs, classified clearance, proprietary algorithms) and live behind the one-way mirror.

The Watched (The Transparent): The general population. Their lives are “open source” for the Watchers. Their “secrets” are merely data points waiting to be queried.

7. Conclusion and Probability Assessment

7.1 Synthesis of Findings

The evidence assembled in this report—spanning the philosophical proclamations of Zuckerberg and Schmidt, the economic mechanics of Surveillance Capitalism, the hardware ambitions of Worldcoin and Neuralink, and the legal gray zones exploited by the NSA—points to a singular, cohesive trajectory. The ambition to eliminate secrets is not a fringe conspiracy; it is the central operating principle of the modern digital economy.

This ambition is driven by:

Economic Necessity: The need for “Behavioral Surplus” to fuel prediction markets.

Technological Necessity: The “Data Hunger” of AGI scaling laws.

Ideological Conviction: The belief in Dataism and the obsolescence of privacy norms.

State Interest: The government’s desire for Total Information Awareness to mitigate threats and maintain geopolitical dominance.

While technical resistance (encryption) and legal resistance (GDPR) exist, they are currently being outpaced by the sheer velocity of the “extraction architecture.” The “Privacy-Washing” of Big Tech (e.g., E2EE) often serves to consolidate control rather than genuinely empower the user.

7.2 Probability Assessments

1. Probability that Silicon Valley/Big Tech’s ambition is to see a world with no secrets (for the citizenry):

Probability: 85%

Justification: The business model of the major tech giants requires the eradication of privacy to maximize value. There is no financial incentive for user privacy; there is only financial incentive for transparency. The remaining 15% accounts for the potential emergence of genuine “Zero-Knowledge” business models or a catastrophic regulatory intervention that breaks the surveillance capitalist model. However, the current momentum of AGI development makes the “data grab” more aggressive than ever. The ambition is clear: the capability to know everything is the goal, even if they claim to use it benevolently.

2. Probability that the US Government is aware of this ambition:

Probability: 99%

Justification: The US government is not just aware; it is the industry’s largest and most privileged customer. Through the ODNI’s admitted purchases of commercially available data, the Pentagon’s partnerships (Maven, JEDI), and the legislative attempts to weaken encryption (EARN IT), the state has demonstrated a thorough understanding of the surveillance capacities of Silicon Valley. The “Revolving Door” ensures that the strategic vision of the Intelligence Community and Big Tech is synchronized. The state relies on Silicon Valley to serve as its eyes and ears, effectively outsourcing the violation of privacy to the private sector to circumvent constitutional restrictions.

Final Word

We are transitioning from a society where privacy is a right to one where it is a luxury, and finally, to one where it is an anomaly. The “Glass Earth” is being built not with the glass of transparency, but with the silicon of surveillance. In this new world, the only secrets that remain are those held by the architects of the system, hidden behind the black box of the algorithm and the classified stamp of the state.

Works cited

The Digital Commons: Tragedy or Opportunity? A Reflection on the 50th Anniversary of Hardin’s Tragedy of the Commons - Harvard Business School, accessed November 26, 2025, https://www.hbs.edu/ris/Publication%20Files/19-060_8c8c0f68-fecf-4aa7-8084-ee3583fa557a.pdf

Zuckerberg: Privacy is no longer a social norm | by Eva Vogel | CodeX - Medium, accessed November 26, 2025, https://medium.com/codex/zuckerberg-privacy-is-no-longer-a-social-norm-848b89829dc6

TIL that in 2009 Eric Schmidt, Google’s Chairman, said “If you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place” when confronted about the search engine’s privacy policies. : r/todayilearned - Reddit, accessed November 26, 2025, https://www.reddit.com/r/todayilearned/comments/1ful2b/til_that_in_2009_eric_schmidt_googles_chairman/

Google CEO Eric Schmidt Dismisses the Importance of Privacy, accessed November 26, 2025, https://www.eff.org/deeplinks/2009/12/google-ceo-eric-schmidt-dismisses-privacy

You Have Zero Privacy Anyway — Get Over It - OSnews, accessed November 26, 2025, https://www.osnews.com/story/22603/you-have-zero-privacy-anyway-get-over-it/

Homo Deus - A Brief History of Tomorrow (Yuval Noah Harari) - smays.com, accessed November 26, 2025, https://www.smays.com/wp-content/uploads/2024/06/Homo-Deus-A-Brief-History-of-Tomorrow-Yuval-Noah-Harari.pdf

Big Data and the end of Free Will | Auckland Unitarian Church, accessed November 26, 2025, https://aucklandunitarian.org.nz/big-data-and-the-end-of-free-will/

To Whom it May Concern, I’m writing you to express my disapproval of the recent privatization plans for Homewood Mountain Resort - Placer County - CA.gov, accessed November 26, 2025, https://www.placer.ca.gov/DocumentCenter/View/66934/2023-021423-Public-Comment

Singularities and Nightmares: Extremes of Optimism and Pessimism About the Human Future - David Brin, accessed November 26, 2025, https://www.davidbrin.com/nonfiction/singularity.html

Transparent Society | David Brin | World Cyberwar | Features & Columns, accessed November 26, 2025, http://www.metroactive.com/features/transparent-society.html

The Inevitable Death of Privacy? An Analysis of The Argumentation of Reciprocal Transparency - ORBi, accessed November 26, 2025, https://orbi.uliege.be/bitstream/2268/213866/1/20162017-EST4800-REGULAR-61

The Right to Know Social Media Algorithms - Harvard Law School Journals, accessed November 26, 2025, https://journals.law.harvard.edu/lpr/wp-content/uploads/sites/89/2024/08/18.1-Right-to-Know-Social-Media-Algorithms.pdf

accessed November 26, 2025, https://lifestyle.sustainability-directory.com/term/behavioral-surplus/#:~:text=Meaning%20%E2%86%92%20Behavioral%20surplus%20is,future%20behavior%20for%20commercial%20gain.

The discovery of behavioral surplus, accessed November 26, 2025, https://www.sfu.ca/~palys/Zuboff-2018-Ch03-TheDiscoveryOfBehavioralSurplus.pdf

Zuboff’s definition of surveillance capitalism in “The Age of Surveillance Capitalism” commits a category error, a crucial misstep in understanding platform technologies | Will Rinehart, accessed November 26, 2025, https://www.williamrinehart.com/2020/zuboffs-the-age-of-surveillance-capitalism-raw-notes-and-comments-on-the-definition/

Surveillance Capitalism and the Challenge of Collective Action, accessed November 26, 2025, https://www.oru.se/contentassets/981966a3fa6346a8a06b0175b544e494/zuboff-2019.pdf

Behavioral Surplus → Term - Lifestyle → Sustainability Directory, accessed November 26, 2025, https://lifestyle.sustainability-directory.com/term/behavioral-surplus/

The Digital Person: Technology and Privacy in the Information Age - Scholarly Commons, accessed November 26, 2025, https://scholarship.law.gwu.edu/cgi/viewcontent.cgi?article=2501&context=faculty_publications

Worldcoin: Sam Altman’s AI Gamble or Digital Dystopia?, accessed November 26, 2025, https://agi.co.uk/worldcoin-sam-altman-identity-gamble/

The world of Worldcoin | Features - Ateneo de Manila University, accessed November 26, 2025, https://www.ateneo.edu/features/university/2025/world-worldcoin

Brain Implant Of Neuralink Telepathy, Elon Musk Injects Controversial Technology Into The First Human Brain - VOI, accessed November 26, 2025, https://voi.id/en/technology/352838

Neuralink Demo Event 2020 (II) – Questions and Answers - Elon Musk Interviews, accessed November 26, 2025, https://elon-musk-interviews.com/2021/05/27/neuralink-demo-event-2020-ii-questions-and-answers/

Head Games - Real Life Mag, accessed November 26, 2025, https://reallifemag.com/head-games/

The Merge: A Message in a Bottle from Sam Altman | by Bryant McGill | Medium, accessed November 26, 2025, https://bryantmcgill.medium.com/the-merge-a-message-in-a-bottle-from-sam-altman-840435e6b492?source=rss------ai-5

Zuck seems to claim that meta does not have ANY access to encrypted messages on whatsapp : r/hacking - Reddit, accessed November 26, 2025, https://www.reddit.com/r/hacking/comments/1i1h7hf/zuck_seems_to_claim_that_meta_does_not_have_any/

A Critical Examination of Signal, Encryption, and Surveillance | by Alvar Laigna | Medium, accessed November 26, 2025, https://medium.com/@alvarlaigna/a-critical-examination-of-signal-encryption-and-surveillance-ffa4c03466ea

Zuckerberg to integrate WhatsApp, Instagram and Facebook Messenger - Forbes Africa, accessed November 26, 2025, https://www.forbesafrica.com/technology/2019/01/29/zuckerberg-to-integrate-whatsapp-instagram-and-facebook-messenger/

A Privacy-Focused Facebook? We’ll Believe It When We See It., accessed November 26, 2025, https://www.eff.org/deeplinks/2019/03/privacy-focused-facebook-well-believe-it-when-we-see-it?language=en

Scanning iPhones to Save Children: Apple’s On-Device Hashing Algorithm Should Survive a Fourth Amendment Challenge - Insight @ Dickinson Law, accessed November 26, 2025, https://insight.dickinsonlaw.psu.edu/cgi/viewcontent.cgi?article=1163&context=dlr

Apple’s Plan to “Think Different” About Encryption Opens a Backdoor to Your Private Life, accessed November 26, 2025, https://www.eff.org/deeplinks/2021/08/apples-plan-think-different-about-encryption-opens-backdoor-your-private-life

International Coalition Calls on Apple to Abandon Plan to Build Surveillance Capabilities into iPhones, iPads, and other Products - Center for Democracy & Technology (CDT), accessed November 26, 2025, https://cdt.org/insights/international-coalition-calls-on-apple-to-abandon-plan-to-build-surveillance-capabilities-into-iphones-ipads-and-other-products/

Congress Must Close Data Broker Loophole by Prohibiting Government Purchases of Americans’ Sensitive Data - Brennan Center for Justice, accessed November 26, 2025, https://www.brennancenter.org/media/12201/download

End-Running Warrants: Purchasing Data Under the Fourth Amendment and the State Action Problem | Yale Law & Policy Review, accessed November 26, 2025, https://yalelawandpolicy.org/end-running-warrants-purchasing-data-under-fourth-amendment-and-state-action-problem

Declassified Intelligence Community Letters Highlight Importance of Monitoring Outbound Data Flows, accessed November 26, 2025, https://www.alstonprivacy.com/declassified-intelligence-community-letters-highlight-importance-of-monitoring-outbound-data-flows/

Wyden Releases Documents Confirming the NSA Buys Americans’ Internet Browsing Records, accessed November 26, 2025, https://www.wyden.senate.gov/news/press-releases/wyden-releases-documents-confirming-the-nsa-buys-americans-internet-browsing-records-calls-on-intelligence-community-to-stop-buying-us-data-obtained-unlawfully-from-data-brokers-violating-recent-ftc-order

The Intelligence Community’s Policy on Commercially Available Data Falls Short, accessed November 26, 2025, https://www.brennancenter.org/our-work/analysis-opinion/intelligence-communitys-policy-commercially-available-data-falls-short

TTP - Google’s US Revolving Door - Tech Transparency Project, accessed November 26, 2025, https://www.techtransparencyproject.org/articles/googles-revolving-door-us

Should Congress close the revolving door in the technology industry? - Brookings Institution, accessed November 26, 2025, https://www.brookings.edu/articles/should-congress-close-the-revolving-door-in-the-technology-industry/

Case study: Google Workers & Project Maven - The Turing Way, accessed November 26, 2025, https://book.the-turing-way.org/ethical-research/activism/activism-case-study-google/

Google employees resign in protest against Air Force’s Project Maven | FedScoop, accessed November 26, 2025, https://fedscoop.com/google-employees-resign-project-maven/

Peter Thiel - Wikipedia, accessed November 26, 2025, https://en.wikipedia.org/wiki/Peter_Thiel

All roads lead to Palantir: A review of how the data analytics company has embedded itself throughout the UK - Privacy International, accessed November 26, 2025, https://privacyinternational.org/sites/default/files/2021-11/All%20roads%20lead%20to%20Palantir%20with%20Palantir%20response%20v3.pdf

Palantir’s Privacy Protection: A Moral Stand or Just Good Business?, accessed November 26, 2025, https://www.aei.org/technology-and-innovation/palantirs-privacy-protection-moral-stand-just-good-business/

The EARN IT Act: How to Ban End-to-End Encryption Without Actually Banning It, accessed November 26, 2025, https://cyberlaw.stanford.edu/blog/2020/01/earn-it-act-how-ban-end-end-encryption-without-actually-banning-it/

The Senate’s twin threats to online speech and security - Brookings Institution, accessed November 26, 2025, https://www.brookings.edu/articles/the-senates-twin-threats-to-online-speech-and-security/

Scaling Laws of Synthetic Data for Language Models - arXiv, accessed November 26, 2025, https://arxiv.org/html/2503.19551v2

Foundation Models for Robotics: Vision-Language-Action (VLA) | Rohit Bandaru, accessed November 26, 2025, https://rohitbandaru.github.io/blog/Foundation-Models-for-Robotics-VLA/

Decentralized AI: Why Blockchain Could Be the Key to Ethical AI in 2025 - Onchain, accessed November 26, 2025, https://onchain.org/magazine/decentralized-ai-taming-the-machine-god-with-blockchain-technology/

AGI Still Years Away, Despite Tech Leaders’ Bold Promises for 2026 - Medium, accessed November 26, 2025, https://medium.com/@cognidownunder/agi-still-years-away-despite-tech-leaders-bold-promises-for-2026-146c9780af65

Reddit Vs. Perplexity Lawsuit Analysis - Perplexity AI - Built In, accessed November 26, 2025, https://builtin.com/articles/reddit-perplexity-ai-lawsuit-analysis

Deepseek Distilled OpenAI Data? • Eyre: European Secure Meeting Platform, accessed November 26, 2025, https://eyre.ai/deepseek-distilled-openai-data/

Surveillance Capitalism: Origins, History, Consequences - MDPI, accessed November 26, 2025, https://www.mdpi.com/2409-9252/5/1/2

Open-Source vs Closed-Source AI: Which Model Should Your Enterprise Trust? - VKTR.com, accessed November 26, 2025, https://www.vktr.com/ai-platforms/open-source-vs-closed-source-ai-which-model-should-your-enterprise-trust/

OpenAI’s Open-Source Dilemma: A Genuine Shift or Just Strategic Posturing? - Medium, accessed November 26, 2025, https://medium.com/@dion.wiggins/openais-open-source-dilemma-a-genuine-shift-or-just-strategic-posturing-e996ee0dd3b9

Point-Counterpoint | Client-Side Scanning - Judicature - Duke University, accessed November 26, 2025, https://judicature.duke.edu/articles/you-are-being-scanned/

How to Make AI ‘Forget’ All the Private Data It Shouldn’t Have | Working Knowledge, accessed November 26, 2025, https://www.library.hbs.edu/working-knowledge/qa-seth-neel-on-machine-unlearning-and-the-right-to-be-forgotten