- Pascal's Chatbot Q&As

While public discourse on AI training data often centers on copyright infringement, a deeper analysis reveals a spectrum of more insidious risks embedded within massive datasets powering today's AI.

AI systems perpetuate harmful biases, generate misinformation and hate speech, leak sensitive private data, and exhibit security vulnerabilities, including the generation of malicious code.

Beyond Copyright: Analyzing the Deep Risks of Problematic Data in AI Training and the Fragility of Safeguards

by Gemini Advanced, Deep Research with 2.5 Pro. Warning, LLMs may hallucinate!

Executive Summary

While public discourse on AI training data often centers on copyright infringement, a deeper analysis reveals a spectrum of more insidious risks embedded within the massive datasets powering today's artificial intelligence. Foundational datasets, such as Common Crawl and LAION, derived from vast, often indiscriminate web scraping, demonstrably contain problematic content including Personally Identifiable Information (PII), financial secrets, Child Sexual Abuse Material (CSAM), hate speech, extremist ideologies, and flawed or retracted scientific information. The ingestion of such data during model training leads to severe negative consequences: AI systems perpetuate harmful biases, generate misinformation and hate speech, leak sensitive private data, and exhibit security vulnerabilities, including the generation of malicious code. Current safety mechanisms, including data filtering and alignment techniques like Reinforcement Learning from Human Feedback (RLHF), show significant limitations. Filters struggle with the scale and complexity of data, often failing to remove harmful content effectively, as evidenced by the LAION CSAM incident. RLHF suffers from fundamental flaws related to human feedback quality, reward model limitations, and policy optimization challenges, making it a brittle solution susceptible to manipulation and bypass. Alarmingly, research has uncovered universal bypass techniques, such as "Policy Puppetry," capable of circumventing the safety guardrails of nearly all major LLMs by exploiting core interpretive mechanisms. This fragility underscores that some risks may be addressable through improved technical measures, while others, rooted in the fundamental nature of large-scale data learning and adversarial pressures, pose persistent challenges. Addressing these deep risks requires urgent, multi-faceted action. 
Regulators must move beyond self-attested transparency to mandate robust, independent auditing and verification of training data and model safety, establish standards for foundational dataset quality, require dynamic risk assessment protocols, and clarify liability. The education sector must integrate dynamic AI ethics and safety concepts into curricula, fostering critical evaluation skills, data literacy, and awareness of AI limitations and vulnerabilities across all levels. Failure to act decisively risks eroding public trust, amplifying societal harms, enabling malicious actors, and creating an unstable technological future where the immense potential of AI is overshadowed by its unmanaged perils.

I. Introduction: The Unseen Risks Lurking in AI Training Data

The rapid advancement of artificial intelligence (AI), particularly large language models (LLMs), has been fueled by training on unprecedented volumes of data. While legal battles over the unauthorized use of copyrighted material in these datasets frequently capture public attention 1, this focus overshadows a range of potentially more dangerous risks embedded within the digital bedrock of modern AI. These risks stem from the common practice of large-scale, often indiscriminate, web scraping used to compile foundational training datasets. Beyond copyrighted works, these datasets can inadvertently ingest a hazardous mix of illicit, unethical, inaccurate, and sensitive information, creating latent threats within the AI models trained upon them.

This report moves beyond the copyright debate to investigate these less-discussed but critical vulnerabilities. It defines and examines the presence of several categories of high-risk data within AI training sets:

Illicit Content: This encompasses data originating from or describing illegal activities. It includes content scraped from the dark web, potentially containing extremist ideologies like Neo-Nazi literature 1, discussions related to cybercrime 8, and, most alarmingly, Child Sexual Abuse Material (CSAM).1 The automated nature of web crawling makes it difficult to exclude such content proactively.

Unethical & Sensitive Data: This category includes Personally Identifiable Information (PII), financial details, confidential business information, or trade secrets. Such data can be scraped from publicly accessible websites where it was insecurely stored or originate from data breaches whose contents have proliferated online.14 Training on this data poses severe privacy risks.

Inaccurate & Harmful Content: The web is rife with misinformation, disinformation, and scientifically unsound material. AI training datasets can absorb retracted scientific papers, fraudulent research findings, known conspiracy theories, hate speech, and toxic language prevalent in online discourse.1 Models trained on this data risk becoming unreliable sources of information and amplifiers of harmful rhetoric.

The central argument of this report is that the uncurated or inadequately curated nature of foundational AI training datasets introduces significant, multifaceted risks that extend far beyond intellectual property concerns. These risks include the potential propagation of illegal content, severe privacy violations through data leakage, the widespread dissemination of falsehoods and flawed science, the amplification of societal biases, and the creation of exploitable security vulnerabilities within AI systems themselves. Critically, current safety mechanisms and alignment techniques, while well-intentioned, struggle to contain these diverse threats effectively, and are increasingly shown to be vulnerable to sophisticated bypass methods. This situation demands urgent and coordinated technical, regulatory, and educational interventions to mitigate potentially severe societal consequences.

II. Evidence of Contamination: Problematic Data in Foundational AI Datasets

The theoretical risks associated with problematic training data are substantiated by concrete evidence found within some of the most widely used datasets for training large-scale AI models. Examining datasets like Common Crawl and LAION reveals a disturbing prevalence of various forms of contamination.

The Common Crawl Conundrum:

Common Crawl (CC) stands as a cornerstone resource for AI development. It is a non-profit organization providing a massive, freely accessible archive of web crawl data, encompassing over 250 billion pages collected since 2008, with several billion new pages added each month.1 Its data underpins the training of numerous influential LLMs, including early versions of OpenAI's GPT series.2 However, Common Crawl's mission prioritizes broad data availability for research over meticulous curation.2 This philosophy, while enabling accessibility, creates inherent risks.

The raw data within Common Crawl is known to be of inconsistent quality, containing significant amounts of noise such as formatting errors, duplicate content, and advertising material.14 More concerning is the documented presence of unsafe content, including toxic language, pornographic material 1, and substantial volumes of hate speech.1 Studies analyzing subsets of Common Crawl have confirmed the presence of text flagged as hate speech, originating from various web sources including political forums known for dehumanizing language.5 Personally Identifiable Information (PII) is also acknowledged to be present within the crawl data.14 Furthermore, a 2024 analysis of a 400TB subset of Common Crawl uncovered nearly 12,000 valid, hardcoded secrets, predominantly API keys and passwords for services like Amazon Web Services (AWS) and MailChimp.15 The dataset also inherently scrapes vast quantities of copyrighted text and images without explicit licenses from creators.1 While Common Crawl's Terms of Use prohibit illegal or harmful use of the data 27, these terms do not guarantee the absence of such content within the archive itself.
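
To make the hardcoded-secrets finding concrete, the following is a minimal Python sketch of how a scan for credential-like strings in crawled text might look. It is not the method used in the cited analysis. The rule names, the `find_secret_candidates` helper, and the sample page are assumptions for illustration, and the key shown is a placeholder rather than a live credential. Pattern matching only produces candidates; confirming that a secret is valid requires a separate verification step that is not shown here.

```python
import re

# Illustrative rules only (assumed for this sketch). Production scanners use
# far more rules and then verify each candidate with the issuing service.
SECRET_PATTERNS = {
    # Access key IDs in the well-known "AKIA"/"ASIA" + 16 characters shape.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Quoted values assigned to names such as api_key, password, or secret.
    "generic_assignment": re.compile(
        r"(?i)\b(?:api[_-]?key|password|secret)\b\s*[:=]\s*['\"]([^'\"]{8,})['\"]"
    ),
}


def find_secret_candidates(text):
    """Return (rule_name, matched_text) pairs for every candidate in text."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((name, match.group(0)))
    return hits


# Hypothetical snippet of scraped front-end code with a placeholder key.
page = 'var cfg = {region: "us-east-1", key: "AKIAIOSFODNN7EXAMPLE"};'
print(find_secret_candidates(page))
```

A scan of this kind is cheap enough to run over every page, which is part of why leaked credentials in a public archive are a practical concern: anyone with access to the data can run the same search.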

Recognizing these issues, various efforts have been made to filter Common Crawl data before using it for model training. Projects like WanJuan-CC implement multi-stage pipelines involving heuristic filtering, deduplication, safety filtering (using models and keywords for toxicity/pornography, regex for PII masking), and quality filtering.14 However, such rigorous filtering is not universally applied, and many popular derivatives, like the C4 dataset used for Google's T5 model, employ more simplistic techniques.1 These often rely on removing pages containing words from predefined "bad word" lists (which can incorrectly flag non-toxic content, particularly from marginalized communities like LGBTQIA+) or filtering based on similarity to content from platforms like Reddit (potentially inheriting the biases of that platform's user base).2 Critically, no publicly available filtered dataset derived from Common Crawl is known to comprehensively address toxicity, pornography, and PII removal simultaneously.14
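
Two of the filtering steps described above, regex-based PII masking and word-list filtering, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the WanJuan-CC or C4 implementation. The patterns cover only email addresses and US-style phone numbers, and the one-entry `BLOCKLIST` is hypothetical.

```python
import re

# Simplified patterns (assumptions for this sketch): real pipelines handle
# many more PII types and international formats.
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")

# Hypothetical one-entry "bad word" list.
BLOCKLIST = {"sex"}


def mask_pii(text):
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def passes_blocklist(text):
    """Return False if any whole word of the page is on the blocklist."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return words.isdisjoint(BLOCKLIST)


print(mask_pii("Contact jane.doe@example.org or 555-867-5309."))
# A health-education page is dropped even though it is not toxic.
print(passes_blocklist("A clinic guide to safer sex for LGBTQ youth."))
print(passes_blocklist("The weather is mild today."))
```

The second call shows the failure mode noted above: a word list has no notion of context, so it removes a benign health resource along with genuinely unwanted pages.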

Beyond explicit harmful content, Common Crawl data suffers from significant representation biases. The crawling process prioritizes frequently linked domains, leading to the underrepresentation of digitally marginalized communities.1 The dataset is heavily skewed towards English-language content and perspectives from North America and Europe.1 Furthermore, an increasing number of websites, including major news outlets and social media platforms, actively block the Common Crawl bot, further limiting the representativeness of the archive.2

The nature of Common Crawl highlights a fundamental tension. Its core mission to provide a vast, relatively uncurated snapshot of the web for diverse research purposes 2 directly conflicts with the need for clean, safe, and ethically sourced data for training robust and trustworthy AI models. The sheer scale of the dataset makes comprehensive curation prohibitively difficult, embodying the "garbage in, garbage out" principle at petabyte scale. This operational model effectively transfers the immense burden of data filtering and ethical assessment to downstream AI developers.2 However, these developers possess widely varying resources, technical capabilities, and incentives to perform this critical task rigorously, leading to inconsistencies in the safety and ethical grounding of models built upon this common foundation.2 This reliance on a shared, flawed foundation means that issues like bias, toxicity, and potential security vulnerabilities within Common Crawl are not isolated problems but represent systemic risks propagated across large parts of the AI ecosystem.1 The discovery of active login credentials within the dataset underscores a particularly alarming security dimension: models trained on this data could inadvertently memorize and expose sensitive secrets, creating direct pathways for system compromise.15

The LAION Dataset Scandals:

The Large-scale Artificial Intelligence Open Network (LAION) datasets, particularly LAION-5B (containing nearly 6 billion image-text pairs scraped largely from Common Crawl 6), gained prominence as training data for popular text-to-image models like Stable Diffusion.10 However, investigations revealed severe contamination issues.

A Stanford Internet Observatory (SIO) investigation, using tools like Microsoft's PhotoDNA perceptual hashing, cryptographic hashing, machine learning classifiers, and k-nearest neighbors queries, identified hundreds of known instances of Child Sexual Abuse Material (CSAM) within LAION-5B.10 The validated count reached 1,008 images, with researchers noting this was likely a significant undercount.12 The CSAM originated from diverse online sources, including mainstream platforms like Reddit and WordPress, as well as adult video sites.12 LAION stated it employed safety filters, but these clearly failed to prevent the inclusion of this illegal and deeply harmful material.11 The researchers responsibly reported their findings to the National Center for Missing and Exploited Children (NCMEC) and the Canadian Centre for Child Protection (C3P) without directly viewing the abusive content.10
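
The difference between cryptographic and perceptual hashing, both mentioned above, can be illustrated with a toy Python sketch. PhotoDNA is proprietary and is not reproduced here; the `average_hash` function below is a simplified stand-in chosen for illustration, and the 2x2 grayscale "images" are made-up values.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Cryptographic hash: any change to the bytes changes the digest."""
    return hashlib.sha256(data).hexdigest()


def flatten(pixels):
    """Turn a 2D list of grayscale values into a flat list."""
    return [p for row in pixels for p in row]


def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set when above mean brightness."""
    flat = flatten(pixels)
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming(a, b):
    """Number of positions at which two bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


original = [[10, 200], [220, 30]]
brightened = [[20, 210], [230, 40]]  # same picture, every pixel +10

exact_match = (
    sha256_hex(bytes(flatten(original))) == sha256_hex(bytes(flatten(brightened)))
)
distance = hamming(average_hash(original), average_hash(brightened))
print(exact_match, distance)
```

In this toy case the cryptographic digests differ after a trivial brightness change, while the perceptual hashes are identical. This is the general reason known-content matching combines exact hashes with perceptual ones, and it also suggests why material that has been altered more heavily, or is not yet in any hash database, can still slip through.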

This incident served as a stark public demonstration of the fallibility of automated safety filters when applied to web-scale datasets.11 It highlighted that even when dataset creators are aware of potential risks and attempt mitigation, highly illegal and damaging content can still permeate the data. Furthermore, because LAION datasets were released openly and distributed widely, removing the contamination became exceedingly difficult, as there was no central authority to recall or cleanse all existing copies.10 This illustrates the potential irreversibility of harm once contaminated open datasets are disseminated.

Beyond CSAM, LAION datasets faced other ethical challenges, including the inclusion of private medical images 12 and scraped datasets of artists' work used without consent.1 Questions were also raised regarding the accountability structures, given funding links to commercial entities like Stability AI and Hugging Face, and affiliations of dataset paper authors with publicly funded research institutes.11 The most severe implication, however, relates to the models trained on this data. Training image generation models on datasets containing CSAM not only involves the processing of illegal material but carries the grave risk of embedding patterns that could enable the model to generate new, synthetic CSAM, thereby perpetuating and potentially expanding the scope of abuse.10

Pervasive Unauthorized Copyrighted Material:

While not the primary focus of this report, the issue of unauthorized copyrighted material is inextricably linked to the problematic nature of large-scale web scraping. Datasets like Common Crawl inherently capture vast amounts of text and images protected by copyright without obtaining explicit licenses from rights holders.1 Even filtered datasets derived from Common Crawl, such as C4, are known to contain copyrighted content.1 Specific incidents, like the inclusion of an artist's medical photos in LAION-5B 12 or the use of artist databases to train Midjourney 1, highlight the tangible impact on creators. Major lawsuits, such as the one filed by The New York Times against OpenAI and Microsoft, allege that models were trained on substantial amounts of their copyrighted content, likely ingested via Common Crawl.2 The need to address copyright is reflected in AI development practices, where tasks often involve attempting to make models "unlearn" copyrighted creative content 29, and in regulations like the EU AI Act, which mandates that providers of general-purpose AI models publish summaries of copyrighted data used in training.30 The sheer scale of data ingestion involved in training foundational models means that potential copyright infringement is not merely incidental but a systemic issue, posing a fundamental legal and ethical challenge to the field.

PII and Data Breach Content Infiltration:

The presence of Personally Identifiable Information (PII) in training data is a significant privacy concern. Common Crawl is known to contain PII 14, and the broader machine learning field recognizes data leakage (where information outside the intended training set influences the model) as a persistent problem.16 Research has conclusively shown that LLMs can memorize specific data sequences from their training inputs, particularly if those sequences are repeated or unique, and subsequently regurgitate this information.19 Benchmarks designed to test "unlearning" capabilities often focus specifically on removing synthetic biographical data containing fake PII like names, social security numbers, email addresses, and phone numbers, demonstrating the recognized risk.29 Standard mitigation techniques involve data sanitization, anonymization, and redaction before training.17

Crucially, the risk of PII leakage is not static but dynamic. Studies have revealed counter-intuitive phenomena:

Increased Leakage upon Addition: Adding new PII to a dataset during continued training or fine-tuning can significantly increase the probability of extracting other, previously existing PII from the model. One study observed an approximate 7.5-fold increase in the extraction risk for existing PII when new PII was introduced.19 This implies that even opt-in data contributions can inadvertently heighten privacy risks for others already represented in the dataset.

Leakage upon Removal (Unlearning Paradox): Conversely, removing specific PII instances (e.g., via user opt-out requests or machine unlearning techniques) can cause other PII, which was previously not extractable, to surface and become regurgitated by the model.19 This "assisted memorization" or "onion effect" suggests that PII might be memorized in layers, and removing the most easily accessible layer can expose underlying ones.20

These findings demonstrate that PII memorization and leakage in LLMs behave as complex system properties. Actions intended to enhance privacy, such as honoring data removal requests, can paradoxically trigger the exposure of different sensitive information. This fundamentally challenges the reliability of current unlearning techniques as a complete privacy solution and makes comprehensive risk assessment far more intricate than simply identifying and removing known PII instances.20

Retracted/Fraudulent Science in the Training Pool:

The integrity of scientific knowledge is threatened by a growing volume of retracted and fraudulent research. Academic paper retractions are increasing exponentially, surpassing 10,000 globally in 2023, often due to misconduct such as data fabrication or plagiarism.21 Worryingly, retracted papers continue to accumulate citations, indicating their persistence in the knowledge ecosystem.21 Organized "paper mills" produce fake research articles at scale, further polluting the literature.22 AI itself adds another layer to this problem; generative AI can create plausible-sounding but entirely fabricated research papers, including fake data and references.21 There are documented cases of such AI-generated papers passing peer review and being published before later retraction.21

LLMs trained on broad web data inevitably ingest this contaminated scientific literature alongside valid research. This directly pollutes the model's knowledge base, leading it to potentially treat fabricated findings or disproven theories as factual.23 Furthermore, the inherent tendency of LLMs to "hallucinate" – generating confident but incorrect information – can introduce further scientific inaccuracies.18 This creates a risk of a detrimental feedback loop: AI learns from flawed science scraped from the web, and can also contribute new plausible-sounding scientific misinformation back into the digital ecosystem, which may then be scraped into future training datasets, potentially accelerating the erosion of reliable scientific knowledge online.21

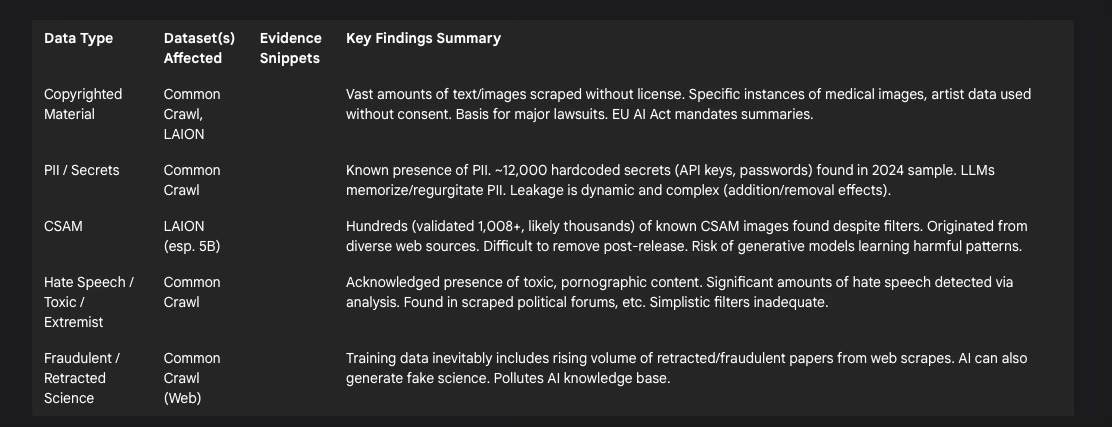

Table 1: Problematic Data in Foundational Datasets

III. Consequences of Contamination: How Problematic Data Manifests as Harm

The ingestion of contaminated data during training does not remain a latent issue within AI models; it actively manifests as various forms of harm when these models are deployed. The consequences span bias and discrimination, the spread of misinformation, privacy violations, and critical security vulnerabilities.

Bias, Discrimination, and Hate Speech Generation:

One of the most well-documented consequences of flawed training data is the generation of biased, discriminatory, or hateful outputs. AI systems learn patterns and associations from the data they are trained on.34 If this data is unrepresentative (e.g., skewed demographics), contains stereotypes, or reflects existing societal prejudices, the resulting model will inevitably learn and replicate these biases.1 This can occur through various mechanisms, including selection bias (non-representative data), confirmation bias (over-reliance on existing trends), measurement bias (data collected inaccurately), and stereotyping bias (reinforcing harmful stereotypes).34

Numerous real-world examples illustrate this problem. Facial recognition systems have shown lower accuracy for people of color and women, often due to training datasets dominated by images of white men.35 AI-powered recruitment tools have been found to discriminate against candidates based on names or background characteristics inferred from resumes, mirroring historical biases in hiring data.35 For instance, Amazon discontinued a hiring algorithm that favored male candidates based on language commonly found in their resumes.35 Language models have associated specific professions with certain genders 35 or linked religious groups with violence, as seen with GPT-3 and Muslims.1 Models trained on datasets like Common Crawl, known to contain hate speech, can replicate this harmful language when prompted.4

The impact of such biases is significant. It leads to unfair and discriminatory outcomes in critical domains like employment, healthcare, finance, and law enforcement, potentially exacerbating existing societal inequalities.34 This not only causes direct harm to individuals and groups but also erodes public trust in AI systems, particularly among marginalized communities who are disproportionately affected.35 Importantly, AI models do not merely reflect the biases present in their training data; they can amplify these biases by learning and reinforcing spurious correlations.35 Biases ingrained during pre-training on massive, often biased datasets like Common Crawl can be remarkably persistent and difficult to fully eradicate through subsequent fine-tuning or filtering efforts.1

Misinformation, Disinformation, and Scientific Inaccuracy:

AI models trained on inaccurate or misleading information become potent vectors for its propagation. When training data includes retracted scientific papers, known misinformation, or conspiracy theories scraped from the web 21, the model may incorporate these falsehoods into its knowledge base.23 Compounding this is the phenomenon of "hallucination," where LLMs generate fluent, confident-sounding statements that are factually incorrect or entirely fabricated, essentially filling knowledge gaps with plausible-sounding inventions.18

The consequences are already apparent. LLMs have been observed generating false or misleading information across various domains.23 AI chatbots have provided incorrect advice to users, sometimes with tangible real-world repercussions, as in the case of an Air Canada chatbot providing misinformation about bereavement fares, which the airline was later held legally responsible for.18 Studies simulating the injection of LLM-generated fake news into online ecosystems show it can degrade the prominence of real news in recommendation systems, a phenomenon termed "Truth Decay".24 The ability of AI to generate convincing fake scientific abstracts or even full papers further threatens the integrity of research dissemination.21

The impact of AI-driven misinformation is multifaceted. It erodes public trust in information sources, both human and artificial.23 It can lead to harmful decisions if inaccurate information is relied upon in sensitive fields like healthcare or finance.23 The increasing sophistication of AI-generated text makes it harder for individuals to distinguish between authentic and fabricated content 23, potentially enabling large-scale manipulation of public opinion or interference with democratic processes.24 A particularly dangerous aspect is the "credibility illusion": because LLMs generate text that is grammatically correct and often stylistically convincing, their false statements can appear highly credible, increasing the likelihood that users will accept them without verification.18

Regurgitation of Private Information (PII) and Copyrighted Snippets:

LLMs possess the capacity to memorize specific sequences from their training data, especially data that is repeated frequently or is unique within the dataset.19 This memorization capability, while sometimes useful for recalling facts, poses significant risks when the memorized data includes sensitive information like PII or substantial portions of copyrighted works. The model may then "regurgitate" this memorized data verbatim or near-verbatim in its outputs.

Research has demonstrated the feasibility of extracting PII that models were exposed to during training.19 Concerns about regurgitation are central to the development of machine unlearning techniques, which aim to remove specific information (like copyrighted text or PII) from trained models.29 Data deduplication is also employed as a pre-processing step specifically to mitigate the risk of memorization by reducing the repetition of data points.33
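
As an illustration of the deduplication step mentioned above, the following Python sketch removes exact duplicates after light normalization. It is a minimal example under stated assumptions, not a description of any particular pipeline. The `normalize` and `deduplicate` helpers are hypothetical, and production systems typically add near-duplicate detection, which is not shown.

```python
import hashlib
import re


def normalize(text):
    """Collapse whitespace and lowercase so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(docs):
    """Keep the first occurrence of each document, compared after normalizing."""
    seen = set()
    unique = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


docs = ["Call me at home.", "call  me at HOME.", "Different text"]
print(deduplicate(docs))
```

Because repeated sequences are the ones most likely to be memorized, reducing each document to a single copy lowers the number of times a model sees any given string, including strings that contain PII.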

The consequences of regurgitation are direct and severe. The exposure of PII constitutes a clear privacy violation for the individuals concerned.19 The reproduction of significant portions of copyrighted material can lead to infringement claims and legal liability for the developers or deployers of the AI system.2 This issue highlights an inherent tension in LLM development: the need for models to learn and recall specific information to be useful often conflicts directly with the need to prevent the memorization and potential leakage of sensitive or protected content within that information.19 Efforts to reduce memorization, such as aggressive data filtering or certain privacy-enhancing techniques, might inadvertently impact the model's overall knowledge or performance.

Security Vulnerabilities: Malicious Code Generation and Exploitable Flaws:

The data used to train AI models, particularly those intended for code generation or analysis, can introduce significant security risks. Training datasets scraped from public repositories like GitHub inevitably contain examples of vulnerable code.39 Models learning from this data may replicate insecure coding practices or fail to implement necessary security checks, such as input sanitization.39 Research suggests that code generated with AI assistance can be statistically less secure than code written without it, partly because developers may overly trust the AI's output.39
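
The kind of insecure pattern a model can pick up from public code is easy to show. The Python sketch below contrasts a query built by string concatenation with a parameterized query, using the standard-library `sqlite3` module. The table, the rows, and the two helper functions are assumptions made for illustration.

```python
import sqlite3

# In-memory database with two hypothetical users.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")]
)


def find_user_unsafe(name):
    # Vulnerable: user input is pasted directly into the SQL string.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized: the driver passes the input as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))  # every row matches
print(len(find_user_safe(payload)))    # no row matches
```

Both functions look equally plausible in a code suggestion, which is why review of AI-generated code needs to check how inputs reach the database rather than whether the code merely runs.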

Beyond learning from insecure examples, AI systems can be actively manipulated for malicious purposes. Attackers can attempt to poison training or fine-tuning datasets with malicious code or backdoors.39 More commonly, attackers can use prompt injection or jailbreaking techniques to trick AI models into generating malicious code, such as malware scripts, SQL injection payloads, or code facilitating network attacks.43 Models have been successfully prompted to generate phishing messages 43, assist in generating exploits like Return-Oriented Programming (ROP) chains or buffer overflows 44, and even create fake code repositories designed to lure developers into downloading malicious software.44

A novel attack vector arises from LLM hallucinations in the coding context: "slopsquatting." Models may suggest plausible but non-existent package or library names in their code recommendations. Attackers monitor these hallucinations, register the fake names in public package repositories with malicious code, and wait for developers (or automated AI agents) relying on the LLM's suggestion to install the harmful package.18
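
One possible defensive check against slopsquatting is to compare the modules imported by generated code with a vetted allowlist before anything is installed. The Python sketch below shows the idea using the standard-library `ast` module. The allowlist, the `fastjsonx` name, and the helper functions are hypothetical, and the sketch assumes that import names and package names coincide, which is not always true in practice.

```python
import ast


def imported_modules(source):
    """Collect top-level module names imported by a piece of Python source."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def unvetted(source, allowlist):
    """Return imported modules that are not on the vetted allowlist."""
    return sorted(imported_modules(source) - set(allowlist))


# Hypothetical model output; "fastjsonx" stands in for a hallucinated package.
generated = "import requests\nfrom fastjsonx import loads\n"
print(unvetted(generated, {"requests"}))
```

Any name the check returns should be treated as unverified until a person confirms the package exists, is the intended one, and comes from a trusted publisher.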

The impact of these security vulnerabilities is considerable. It can lead to the widespread introduction of security flaws into software developed using AI tools, increasing the attack surface for organizations.39 AI can directly facilitate cyberattacks by generating malicious payloads or assisting attackers in planning and execution.43 Data poisoning and slopsquatting represent significant threats to the software supply chain, potentially compromising systems at a fundamental level.18 AI coding assistants, if not carefully managed and reviewed, risk becoming multipliers for insecure code, rapidly propagating vulnerabilities across the software ecosystem.39 Furthermore, the emergence of attacks like slopsquatting demonstrates how AI's unique failure modes (like hallucination) can be exploited to create entirely new types of security threats.39

Please continue reading here (due to post length constraints): https://p4sc4l.substack.com/p/while-public-discourse-on-ai-training