- Pascal's Chatbot Q&As


Todor Markov, a former researcher now at Anthropic, declared under oath that Altman is a "person of low integrity" who lied to staff about non-disparagement agreements and likely other matters...

...including OpenAI’s commitment to its mission. Markov said the Charter was a “smoke screen... to attract and retain idealistic talent while providing no real check” on OpenAI’s pursuit of AGI.

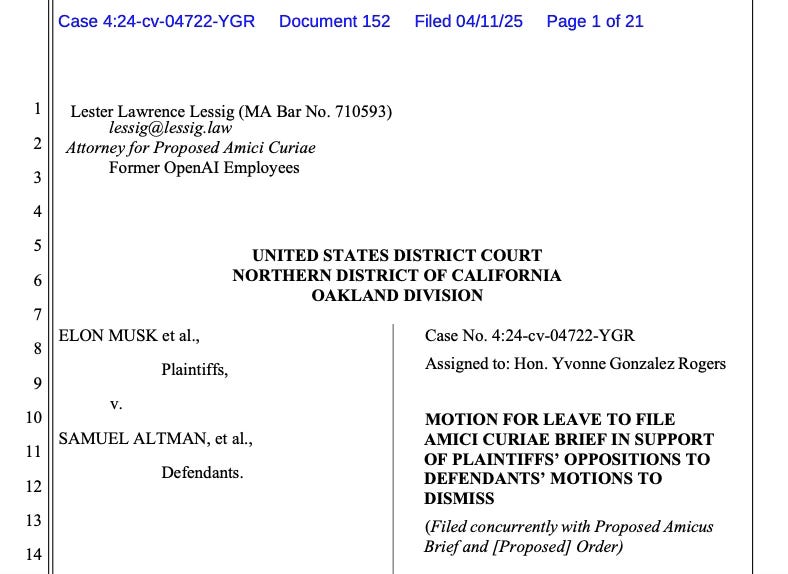

Asking ChatGPT-4o: Please analyze the articles “12 former OpenAI employees asked to be heard in Elon Musk’s lawsuit against the company; one calls Sam Altman a ‘person of low integrity’”, “Ex-OpenAI staffers file amicus brief opposing the company’s for-profit transition”, “Group of ex-OpenAI employees back Musk's lawsuit to halt OpenAI restructure” and the associated Amicus Brief, and list the most surprising, controversial and valuable statements made. Do you agree with the arguments of the 12 former employees?

ChatGPT-4o’s answer:

Based on a full analysis of the Amicus Brief and supporting articles from Yahoo News, Reuters, and TechCrunch, here are the most surprising, controversial, and valuable statements from the 12 former OpenAI employees—and my assessment of their arguments.

🔥 Most Surprising Statements

Personal Integrity Attack on Sam Altman

Todor Markov, a former researcher now at Anthropic, declared under oath that Altman is a "person of low integrity" who lied to staff about non-disparagement agreements and likely other matters, including OpenAI’s commitment to its mission.

Former Employees Back Musk Despite Financial Risks

Markov states that supporting the lawsuit might cost him financially, since much of his life savings is in OpenAI equity. He says this underscores how deeply he believes OpenAI is betraying its original mission.

Use of Charter as ‘Facade’

Markov said the Charter was a “smoke screen… to attract and retain idealistic talent while providing no real check” on OpenAI’s pursuit of AGI.

⚖️ Most Controversial Assertions

That Restructuring Violates OpenAI’s Mission

The brief argues that any change stripping the nonprofit of control is a “fundamental violation” of its mission, as codified in its Charter and governance structure.

OpenAI Misled Staff and Investors

The employees allege that assurances about nonprofit control were instrumental in recruiting and retaining them, and that those commitments are now being quietly abandoned.

Concerns About Safety and AGI Race

The brief warns that removing nonprofit control opens the door to "cutting corners" on safety and triggering a reckless AGI arms race—contradicting the Charter’s “merge and assist” clause, which says OpenAI should help a safer competitor rather than race to beat them.

💎 Most Valuable Insights

Charter Integration into Company Culture

The brief documents how OpenAI institutionalized the Charter, including in performance evaluations, hiring pitches, and leadership communication. This isn’t just PR fluff; it was central to internal governance.

Importance of Nonprofit Control to Fundraising vs. Mission

OpenAI claims that shedding nonprofit control is necessary to raise capital (such as a $40 billion round), but the employees counter that capital must not compromise oversight critical to global safety.

Recruitment Framed Around Ethical Structure

Candidates were often told OpenAI’s structure made it morally superior to Google or Anthropic, which makes the potential restructuring feel especially deceptive to those who joined based on that premise.

🤔 Do I agree with the 12 former employees?

Broadly, yes—with caveats.

✔️ Why I Agree:

Their insider knowledge makes their testimony powerful, especially since they represent a range of technical and policy staff.

The tension between charitable commitments and profit motives is a foundational issue in high-stakes AI development. Giving investors control over AGI development without nonprofit safeguards would contradict OpenAI's public promises.

Their willingness to speak out despite personal financial risk adds credibility and ethical weight to their position.

❗ Caveats:

The legal claim that this violates OpenAI’s mission or fiduciary duty may be challenging to prove unless the Charter is legally binding (not just aspirational).

Some may argue that the shift to a public benefit corporation (PBC) still allows OpenAI to pursue public-good goals with greater financial flexibility, provided governance and transparency are preserved.