- Pascal's Chatbot Q&As

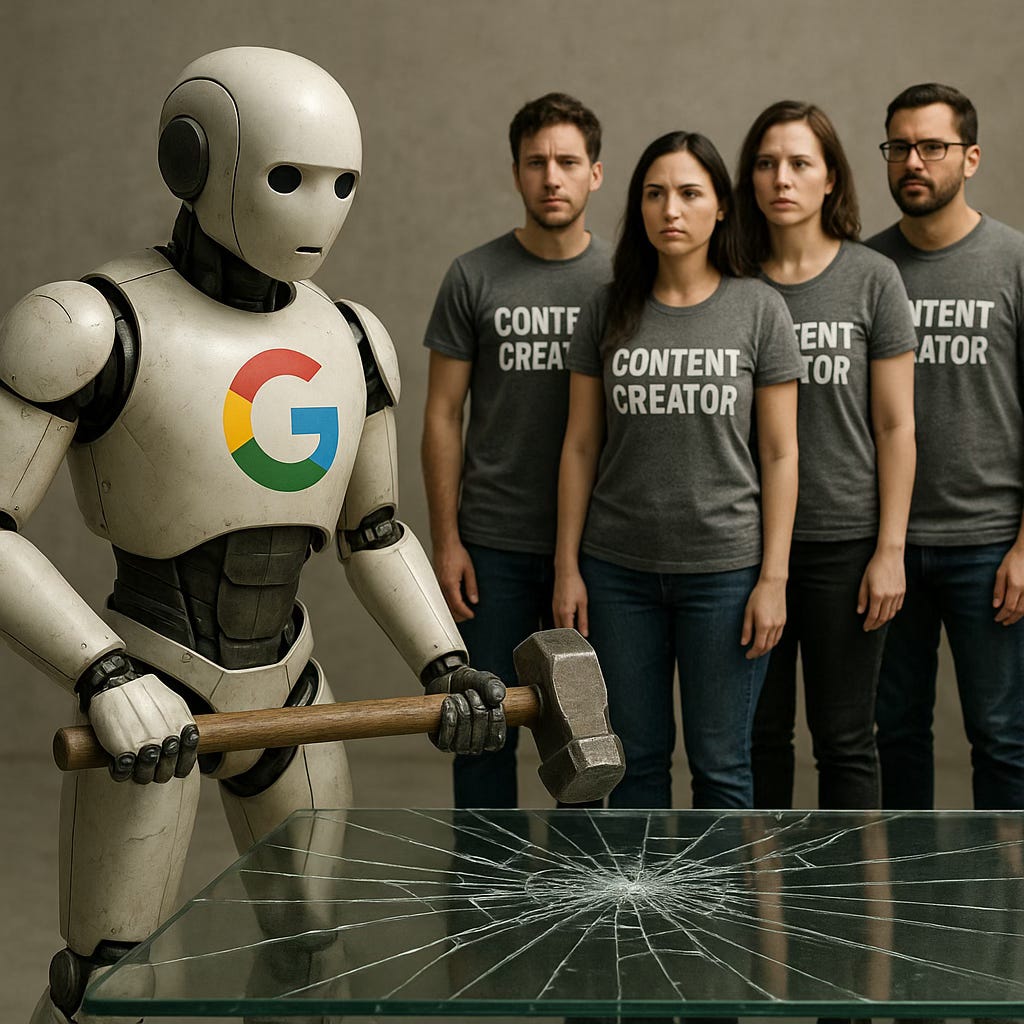

These companies rely on legal grey areas, user agreements, and sheer market power to harvest content without meaningful consent or remuneration.

Such behavior undermines public trust and fuels the perception that AI is built on systemic appropriation.

YouTube, AI, and the Ethics of Consent — Google’s Gemini and Veo 3 Under Scrutiny

by ChatGPT-4o

The recent revelation that Google is using its vast repository of YouTube videos to train its AI systems — including Gemini and Veo 3 — has reignited the debate on data ethics, intellectual property, and the fair treatment of creators. While Google maintains that such use falls under the platform’s existing terms of service, the lack of transparency and consent mechanisms for creators raises legal and ethical questions. This development should also be viewed alongside similar controversies, such as NVIDIA’s use of YouTube videos and Meta’s training on pirated books, both of which illuminate systemic issues in how Big Tech companies approach AI development.

Google’s Training of Gemini and Veo 3 on YouTube Videos

According to a CNBC report from June 2025, Google has confirmed that it trains its Gemini and Veo 3 models using a subset of YouTube videos. While the company insists it uses only a portion of its 20-billion-video library and adheres to agreements with creators and media companies, experts point out that many creators are unaware that their work is being used to develop AI tools that may ultimately replace or compete with them.

YouTube’s terms of service do grant the platform a sublicensable, royalty-free license to use uploaded content. However, those terms were written for video hosting, not for training generative models capable of replicating the audio-visual essence of a creator’s identity. The moral and economic implications of using personal and professional content to generate synthetic “facsimiles” of that same content without notice, let alone compensation, raise concerns that go well beyond legal formalism.

Comparison with NVIDIA and Meta

Google is not the first to face criticism for such practices:

NVIDIA was found to have scraped and used YouTube videos to train its AI models. The issue came to light when YouTube parent Google accused NVIDIA of violating its terms of service. Unlike Google, NVIDIA was a third party, which makes its use of YouTube data a clearer case of unauthorized scraping. The ethical principle holds in both cases, however: publicly available data is not automatically free to use as training material for commercial AI systems, especially when creators have no opt-out mechanism and no awareness of the usage.

Meta went further by reportedly using pirated copies of books for AI training. This triggered outrage in the literary community, not only because of the copyright violations but also because Meta had taken from authors without consent, a particularly egregious act in a creative ecosystem already struggling with monetization in the digital age.

What binds all three cases is a disregard for the spirit of copyright law and the moral rights of creators. These companies rely on legal grey areas, user agreements, and sheer market power to harvest content without meaningful consent or remuneration. Even when technically legal — as is arguably the case with Google's internal use of YouTube content — such behavior undermines public trust and fuels the perception that AI is built on systemic appropriation.

Is Google Acting Against Its Own Terms?

While Google appears to be acting within the letter of its terms of service, it may be violating the spirit of trust and transparency that underpins user relationships. YouTube’s terms grant Google a broad license to use uploaded content, but they were not crafted with AI training in mind — especially not for models that simulate voices, visuals, or artistic styles.

More problematically, while YouTube allows creators to opt out of third-party AI training by companies like Apple, NVIDIA, or Amazon, it provides no such opt-out for Google’s own models. This two-tier system betrays an inherent conflict of interest: YouTube is both a platform for creators and a data mine for its parent company’s AI ambitions.

If Google’s AI-generated content closely mimics the style or voice of an individual creator, as some cases involving tools like Veo 3 already suggest, it comes dangerously close to violating rights of likeness, performance, and authorship, even if no direct reproduction occurs. Indemnifying users of Veo-generated content (as Google promises) does little to address the underlying issue: creators never agreed to this form of exploitation in the first place.

Ethical, Legal, and Moral Guidelines for Big Tech

To prevent further erosion of creator rights and public trust, companies developing AI models should adopt the following principles:

1. Consent as a Core Principle

Creators must be given a clear, accessible option to opt out of AI training — even if their content resides on platforms they do not control. Consent must be specific, informed, and revocable.

2. Transparency in Training Practices

Companies must publish detailed information on what data is used in training, including content sources, quantity, timeframes, and model capabilities. Users deserve to know if their likeness, style, or work is being ingested into a generative system.

3. Compensation and Revenue Sharing

When creator content is used to build commercial tools, a portion of the profits should be shared with the original rights holders. Just as Spotify pays musicians based on usage, AI companies should pay creators when their content is repurposed.

4. Strengthened Legal Frameworks

Governments and regulators must step in to update copyright law for the AI era, closing loopholes around fair use, training data, and synthetic likenesses. Laws like the EU AI Act and proposed US federal legislation on digital replicas should set enforceable limits on what kinds of data can be used without explicit rights clearance.

5. Independent Oversight

An independent body, similar to a digital copyright ombudsman, should be tasked with resolving disputes, verifying data provenance, and overseeing the ethical behavior of AI companies.

6. Moratoriums for High-Risk Models

For models that simulate human likeness or mimic performance (voice, face, style), moratoriums or stricter licensing obligations should apply until ethical and legal frameworks catch up.

Conclusion: Time for a Creator-Centric AI Ecosystem

Google’s use of YouTube videos to train its AI models, while potentially legal under existing agreements, is ethically fraught and emblematic of a broader industry trend: exploiting creator content for AI development without consent or compensation. Set beside NVIDIA’s unauthorized scraping and Meta’s training on pirated books, Google’s actions show that even “first-party” usage can be controversial when creators are left in the dark.

In the AI era, consent cannot be implied through outdated terms of service. It must be earned, requested, and respected. Big Tech companies must not only comply with the law but also uphold the dignity, labor, and rights of creators who make the digital world worth modeling. Anything less will not only spark more lawsuits — it will bankrupt the creative commons upon which the future of human-AI collaboration depends.