Pascal's Chatbot Q&As

The enterprise is promised streamlined productivity and reduced friction, while the employee becomes increasingly surveilled, profiled, and extracted for behavioral and cognitive data.

This raises urgent ethical, legal, and operational questions—especially as global businesses navigate uneven privacy and labor protections across jurisdictions.

The AI Browser Bargain: Enterprise Gains vs. Employee Sacrifice

by ChatGPT-4o

The emergence of AI-driven browsers promises transformative efficiency gains for enterprises: automated research, faster workflows, smarter email and calendar integration, and real-time summarization of complex web content. But these benefits come at a cost—one often paid not by the organizations themselves, but by their employees. At the heart of this trade-off lies a new workplace tension: the enterprise is promised streamlined productivity and reduced friction, while the employee becomes increasingly surveilled, profiled, and extracted for behavioral and cognitive data. This raises urgent ethical, legal, and operational questions—especially as global businesses navigate uneven privacy and labor protections across jurisdictions.

I. The Enterprise Case for AI Browsers

Enterprises are always seeking ways to reduce operational inefficiencies. AI-enhanced browsers offer compelling returns on this front:

Knowledge Work Acceleration: Employees can delegate repetitive or time-consuming tasks—like summarizing documents, drafting emails, or scheduling meetings—to the embedded AI assistant.

Tool Consolidation: Instead of toggling between dozens of browser tabs and apps, the AI browser becomes a central hub for interacting with business systems like CRMs, ERPs, or intranets.

Behavioral Insight for Managers: Organizations can obtain a granular view of how employees navigate information: what they search for, how long they engage with content, and what patterns correlate with success or delay.

Embedded Compliance Checking: AI browsers can help enforce internal compliance by flagging risky behaviors or summarizing policy documents in real time.

In theory, everyone wins. But in practice, these gains come with significant human costs.

II. The Employee Perspective: Surveillance, Stress, and Consent Erosion

While organizations reap macro-level benefits, the individual employee often bears unseen micro-level risks:

Ambient Surveillance Disguised as Assistance

AI browsers are capable of capturing detailed interaction data, including keystrokes, visited URLs, scroll behavior, reading time, sentiment in written input, and even inferred mood, under the guise of “context awareness” and “task memory.” For employees, this means every moment spent in the browser may be logged, interpreted, and judged.

Erosion of Autonomy and Consent

In many workplaces, employees may have no meaningful way to opt out of these tools, especially when AI browser use is bundled with mandatory productivity platforms. Even when consent is requested, it is often coerced (“Accept or lose access”), which fails the standard of meaningful, informed agreement.

Intellectual Labor as Training Data

Emails, queries, meeting notes, and internal research may all be ingested to refine the AI model. Over time, the employee’s unique knowledge work is commodified, repackaged, or even resold, without recognition, attribution, or compensation.

Psychological Impacts

Knowing that every click or query may be monitored can alter employee behavior, increase anxiety, and create an oppressive culture of self-censorship. Employees may avoid creative or exploratory tasks for fear of being misjudged by automated analytics.

Risk of Retaliation and Misinterpretation

AI browser logs can be used to retroactively assess performance or loyalty, even though context may be lost. A visit to a competitor’s website, or time spent on personal research, may be misread as disloyalty or slacking.

III. Jurisdictional Divide: Not All Workers Are Equally Protected

The implications of AI browser use differ starkly depending on where the employee is located, due to regional differences in privacy and labor laws:

European Union

Under the General Data Protection Regulation (GDPR) and the ePrivacy Directive, AI browser data is likely to qualify as personal data and may even include special category (sensitive) data. Employers need a lawful basis for processing and must observe data minimization, purpose limitation, and employee rights (e.g., access, rectification, erasure). Because consent obtained under the employer–employee power imbalance is often deemed invalid, deploying such browsers in EU workplaces is legally risky, especially if data is processed outside the EU or used to train commercial AI models.

United States

In most U.S. states, employee privacy protections are weaker. Employers may legally monitor workplace activity on company devices and networks, including browsing and email. However, California’s CCPA, as amended by the CPRA, now extends stronger controls to employee data. It requires disclosure and opt-outs, and it may restrict the use of collected data for secondary purposes such as AI training.

Canada & Australia

Both countries offer intermediate protections. Canada’s PIPEDA and Australia’s Privacy Act include workplace carve-outs but require transparency and proportionality in surveillance. Introducing AI tools into the browser would likely trigger obligations to conduct privacy impact assessments.

Global South & Low-Regulation Jurisdictions

In many developing nations, labor protections are weak or outdated, and privacy laws are non-existent or poorly enforced. Employees in these regions may face the greatest exposure with the least recourse, effectively becoming low-cost sources of behavioral data and model feedback.

IV. The Human Capital Paradox

Ironically, tools designed to “enhance productivity” may result in the dehumanization of knowledge work. Workers are reduced to behavior patterns and prompt outputs. Creativity, exploration, and nuanced judgment—all of which flourish in conditions of trust and privacy—risk being throttled by constant data capture.

This creates a paradox: the more enterprises rely on AI tools to improve productivity, the more they risk eroding the very human conditions—privacy, autonomy, dignity—that make meaningful work possible.

V. Recommendations: Balance, Transparency, and Worker-Centric Design

To resolve this tension, enterprises and regulators should adopt a few guiding principles:

Local Processing and On-Device AI

Whenever possible, avoid sending employee data to remote servers for analysis or model improvement. Keep AI browser assistance on-device to limit privacy exposure.

Worker Consent with Real Opt-Outs

Employees should have the genuine ability to decline participation in AI browser use without professional consequences.

Data Usage Disclosures and Auditability

Employers must be transparent about what data is collected, how it’s used, who it’s shared with, and whether it is used to train third-party models.

Ethical Use Policies

Establish and enforce guardrails: no disciplinary decisions based solely on browser data, no secondary monetization of employee behavior, and regular impact assessments with employee representation.

Regulatory Harmonization and Enforcement

Governments should ensure that data protection, labor rights, and AI accountability frameworks evolve in tandem—and apply across borders.

Conclusion

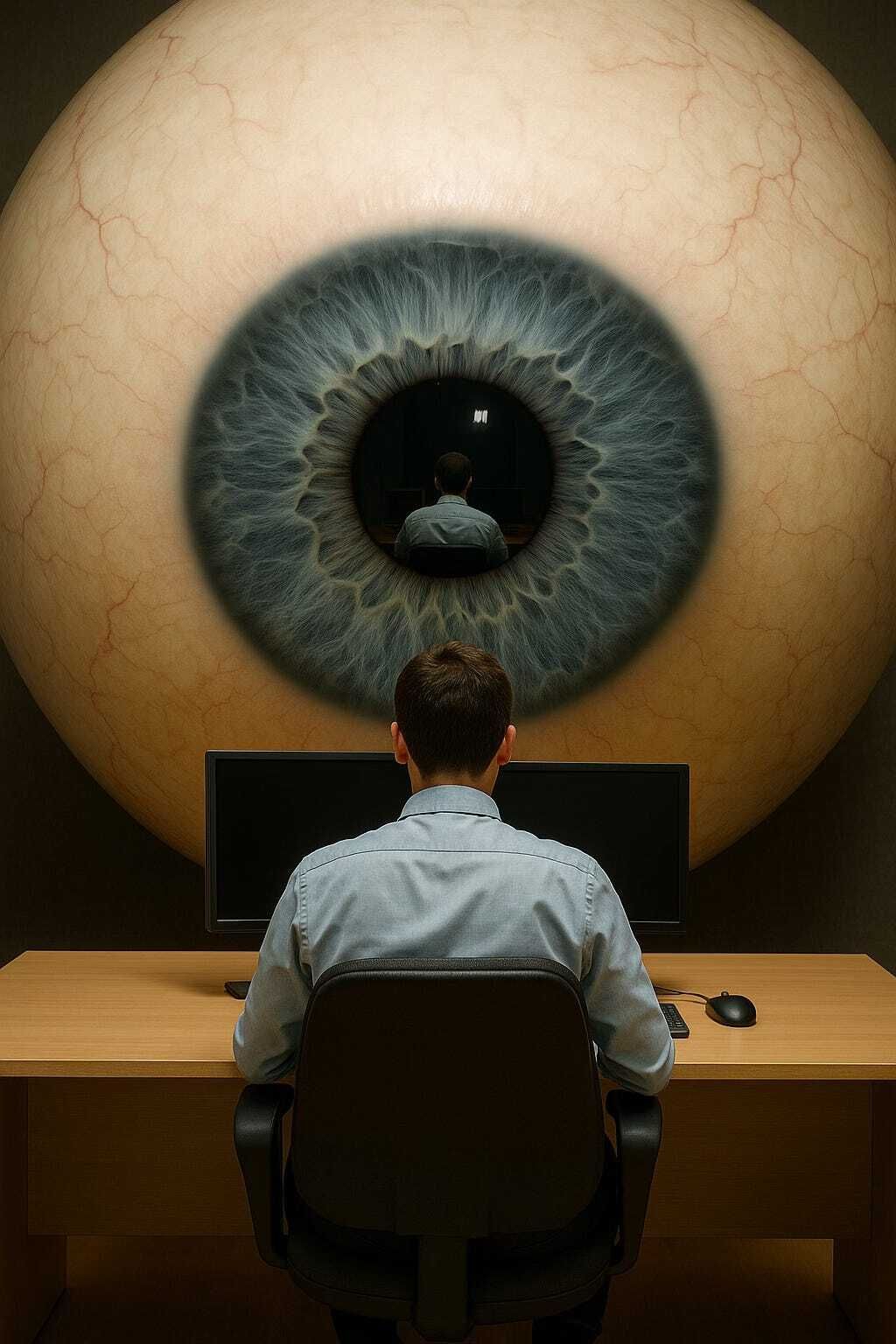

AI-driven browsers are not merely tools—they are surveillance platforms, workflow engines, and behavioral profilers bundled into a single interface. For enterprises, they promise efficiency. For employees, they risk becoming a digital panopticon. Whether this balance skews toward augmentation or exploitation depends on how organizations implement them—and how regulators respond.

In a world where workplace automation is inevitable, trust must become the new productivity metric. If AI browsers are to serve as true assistants rather than extractive overlords, the design and governance of these tools must begin with the rights, dignity, and autonomy of the human on the other side of the screen.
