- Pascal's Chatbot Q&As

- Posts

- The author claims that a trained model file—a persistent, multi-gigabyte object stored on disk—is a “tangible medium of expression.” Because verbatim training data can be extracted from these weights

The author claims that a trained model file—a persistent, multi-gigabyte object stored on disk—is a “tangible medium of expression.” Because verbatim training data can be extracted from these weights

...the model file itself allegedly fits the statutory definition of “Copies”: “material objects... in which a work is fixed...and from which the work can be... reproduced...with the aid of a machine.

An Analysis of Generative AI Copyright: Corroborating Technical Memorization and Market Substitution

by Gemini 2.5 Pro. Warning, LLMs may hallucinate!

An analysis of the provided materials 1 authored by Michael Bommarito reveals two distinct but complementary arguments regarding generative artificial intelligence (AI) and copyright law. The first is a technical thesis concerning the nature of model weights as infringing “copies.” The second is a legal-economic thesis arguing that model outputs are “functional substitutes” that cause actionable market harm.

A. The Technical Thesis: Model Weights as Legally Definite “Copies”

The primary technical claim is that the complex, often-misunderstood “black box” of a neural network is, in legal and mathematical terms, a data compression system.1 The argument, titled “model weights contain copies: compression is not magic,” posits that the fundamental training objective of a modern generative model—to minimize the “loss,” or the difference between its predictions and the training data—is mathematically equivalent to data compression. The goal is to store the information (or “entropy”) of a large training dataset into a smaller, more efficient representation: the billions of numerical parameters, or “weights,” that constitute the final model.1

This argument asserts that memorization of training data is not merely an occasional “bug” or design flaw. Instead, it is a mathematical necessity for achieving high performance on real-world data, which follows a “long-tail” distribution.1 Natural language, for example, is composed of a few common words and a vast “long tail” of rare but critical words, names, facts, and phrases. To be useful, a model must be able to recall these rare items, which requires memorizing them.1

The legal hook for this technical mechanism is 17 U.S.C. §101, the definitions section of the U.S. Copyright Act. The author claims that a trained model file—a persistent, multi-gigabyte object stored on disk—is a “tangible medium of expression.” Because verbatim training data can be extracted from these weights, the model file itself allegedly fits the statutory definition of “Copies”: “material objects... in which a work is fixed... and from which the work can be... reproduced... with the aid of a machine or device”.1

To demonstrate this principle, the author presents an “existence proof” 1 described as “Linux-as-a-Model.” This experiment is framed as a reductio ad absurdum of the tech industry’s legal position:

GPL-2.0 licensed source code (from the Linux kernel) is used as training data.

The resulting model weights are distributed under a permissive MIT license.

The MIT-licensed model, when prompted, outputs the original, verbatim GPL-2.0 code.

This process is presented as self-evident “license laundering,” intended to demonstrate that the weights must be a copy or derivative work of the training data, otherwise the copyleft obligations of the GPL license could not be so easily stripped.1

B. The Legal-Economic Thesis: Functional Substitution as Market Harm

The second core claim 1 addresses the far more common scenario of model outputs that are not verbatim, character-for-character reproductions. The argument is that these “near copies” or “functional substitutes” cause market harm, which is the decisive factor in a U.S. fair use analysis.

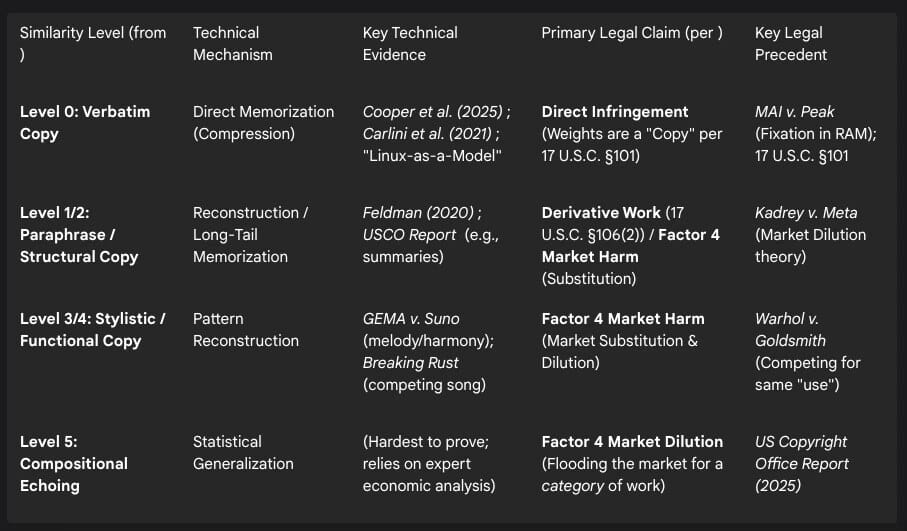

The author proposes a 5-level “spectrum of similarity” to categorize these outputs, ranging from high n-gram overlap (Level 1) and paraphrase (Level 2) to stylistic (Level 3) and structural (Level 4) reproduction.1 The central claim is that all of these levels can functionally substitute for the original work, even if they are not direct copies. For example, a generated image “in the style of” an artist (Level 3) competes for the same market as that artist’s commissions. A generated code snippet (Level 4) competes with the licensed code library it was trained on.

This thesis centers its legal argument on the fourth factor of the fair use test, 17 U.S.C. §107(4): “the effect of the use upon the potential market for or value of the copyrighted work”.1 The author argues, citing Harper & Row v. Nation Enterprises, that Factor 4 is “undoubtedly the single most important element of fair use”.1 Citing the recent Kadrey v. Meta Platforms case, the analysis notes that “market dilution will often cause plaintiffs to decisively win the fourth factor—and thus win the fair use question overall”.1

The author’s argument rests on a specific interpretation of two key precedents:

Andy Warhol Foundation v. Goldsmith: This Supreme Court case is cited to support the principle that a “transformative purpose” (Factor 1) does not excuse a substitutive market effect (Factor 4). The author argues that AI outputs compete in the same market as the originals, just as the Warhol print was found to compete for the same magazine cover licensing market as Goldsmith’s original photograph.1

Authors Guild v. Google: This case is presented as the critical distinction. The “snippet view” in Google Books was found to be a fair use precisely because it was intentionally non-substitutive by design. The court noted that a user could not use snippets to reconstruct a “competing substitute”.6 The author argues that generative AI models, which can output entire works or their functional equivalents, are the direct opposite of the “snippet view” safeguard and thus fail the Google Books test.1

Finally, the argument relies on the “market dilution theory” 1 , which is corroborated by the US Copyright Office 2025 report.1 This theory posits that AI harms the entire category of a work (e.g., the market for “romance novels”) by flooding that market with cheap, algorithmically-generated substitutes, even if it does not harm the specific market for one particular novel used in training.

II. Corroboration of Technical & Scientific Assertions

The accuracy of the author’s technical claims is evaluated by cross-referencing them with independent scientific and technical literature. The claims are found to be strongly corroborated.

A. Verifying Verbatim Memorization and Extraction

The claim that models memorize and can reproduce verbatim copies of training data 1 is a foundational element. This is not a theoretical assertion; it is a well-documented and replicated finding in the machine learning literature.

Evidence from GPT-2: The foundational 2021 paper, “Extracting Training Data from Large Language Models” by Carlini et al., provides definitive proof.8 The researchers “extract[ed] hundreds of verbatim text sequences from... GPT-2’s training data,” including personally identifiable information (names, phone numbers, email addresses), code, and IRC conversations.8 This work was significant for proving that memorization could occur even for data sequences that appeared only once in the training corpus 9 , refuting the common defense that models only memorize highly duplicated content.

Evidence from Llama 3.1 70B: A 2025 paper by Cooper et al. demonstrates a catastrophic escalation of this phenomenon.10 The study found that Meta’s Llama 3.1 70B model “entirely memorizes some books,” specifically Harry Potter and the Philosopher’s Stone and George Orwell’s 1984.10 The researchers reported that they could “deterministically generate the entire book near-verbatim” using only a simple prompt.10

“Linux-as-a-Model” Corroboration: The author’s own “Linux-as-a-Model” experiment 1 is corroborated as a reproducible “existence proof” by its public release on the Hugging Face model-sharing platform 13 and related documentation.14

The confluence of these findings indicates a bifurcated legal risk for model developers. The first is a systemic risk, demonstrated by Carlini et al. (2021), involving widespread, low-level memorization of myriad training snippets.8 The second is a catastrophic “black swan” risk, demonstrated by Cooper et al. (2025), where a single, high-value, culturally significant work is perfectly and deterministically reproducible.10

This technical reality presents a severe challenge to the legal defenses used by AI developers. The primary defense has relied on an analogy to Authors Guild v. Google 16 , a case that rested entirely on the impossibility of substituting the original work via the “snippet view”.6 The Cooper et al. (2025) findings shatter this analogy by proving that perfect, 1-to-1 substitution is not only possible but is an observable property of state-of-the-art models. A plaintiff (e.g., the Harry Potter publisher) now has a dramatically simplified legal case, able to allege direct, verbatim copying rather than relying on complex “substantial similarity” arguments.

B. Verifying the Predictability and Necessity of Memorization

The author’s second technical claim is that memorization is (a) predictable and (b) a mathematical necessity, not a “bug”.1 This is also strongly confirmed by independent research.

Predictability: The 2023 paper “Emergent and Predictable Memorization in Large Language Models” by Biderman et al. provides the empirical evidence.17 By training the Pythia suite of models, the researchers demonstrated that memorization scales predictably with model size and other training dynamics.17 This refutes the narrative that memorization is a random, uncontrollable accident.

Necessity: The 2020 paper “Does Learning Require Memorization? A Short Tale about a Long Tail” by Vitaly Feldman provides the core theoretical basis for this claim.19 The paper argues that for models to learn effectively from “long-tail distributions” (which include all real-world data like language and images), memorization is mathematically required to achieve optimal generalization.22 Rare-but-important data points must be memorized to be learned.

This Feldman (2020) paper, in particular, provides a potent rebuttal to the common defense that “better engineering” will eventually “fix” the memorization problem. It suggests that AI makers face a fundamental Catch-22: they cannot fully eliminate memorization of copyrighted works without also harming model performance and making their models “dumber” and less useful. This creates a trap for legal defenses. A plaintiff’s expert can now:

Prove a model is memorizing content using extraction attacks, as demonstrated by Cooper et al. (2025).10

Argue that this memorization is not an “accident” but a necessary and intentional part of the model’s design to achieve its performance, as proven by Feldman (2020).22

This combination establishes the technical act of copying (fixation and reproduction) while simultaneously discrediting the “accidental bug” narrative.

III. Corroboration of Legal & Market Assertions

The accuracy of the legal-economic claims—that AI outputs function as market substitutes and that “market dilution” is the new legal frontier—is assessed by examining recent litigation, market events, and regulatory reports.

A. Verifying Real-World Market Substitution

The author’s claim 1 that AI outputs are already competing with and substituting for human-created works is confirmed by recent, high-profile market events.

The “Breaking Rust” Incident: This is a direct, measurable example of market substitution. In late 2024, an AI-generated “virtual act” named Breaking Rust produced a song, “Walk My Walk,” which reached #1 on the Billboard Country Digital Song Sales chart.23 This is not a hypothetical. An AI-generated product, created from patterns trained on human-made music, directly competed in the same market (digital song sales) and displaced human artists for the #1 position and the corresponding consumer spending. The “artist” has accumulated over two million monthly listeners on Spotify.26

The GEMA v. Suno Lawsuit: This 2025 lawsuit, filed by the German music rights society GEMA, shows that major rights-holder organizations are now litigating based on functional substitution.27 The claim is that Suno’s AI music generator infringes on protected works by creating outputs that match their “melody, harmony, and rhythm”.28 This is a real-world, high-stakes legal argument based on the “stylistic” or “structural” (Level 3/4) similarity proposed in the author’s taxonomy.1 Similar lawsuits have been filed by the RIAA.30

These two events demonstrate a crucial strategic shift. The Breaking Rust case 23 provides a perfect real-world example for the Warhol v. Goldsmith 5 substitution test: the AI song is competing for the exact same “use” as a human artist. The GEMA case 29 signals a pivot by plaintiffs. The first wave of AI lawsuits (Andersen, Kadrey) focused on the input (training data), allowing tech companies to deploy the “transformative” (Factor 1) defense.4 This new wave of lawsuits focuses on the output (functional substitution), which is a Factor 4 (market harm) offense. This strategy flanks the “transformative” defense by arguing: “Even if your process is transformative, your product is substitutive, and Warhol holds that this is what matters.”

B. The “Market Dilution” Theory: Corroborating the New Legal Frontier

The assertion that “market dilution”—harm to a category of work—is the new legal battleground 1 is strongly confirmed by recent judicial and regulatory actions.

Kadrey v. Meta Platforms: While the court ultimately ruled for Meta on a failure of evidence, Judge Chhabria’s opinion endorsed the underlying theory.4 The judge stated, “it seems likely that market dilution will often cause plaintiffs to decisively win the fourth factor”.4 This has been widely interpreted as a judicial invitation for future plaintiffs: “Bring evidence of market dilution, and you will likely win”.35

US Copyright Office 2025 Report: This report, as cited by the author 1 , explicitly endorses the “market dilution theory”.7 While acknowledging it is “uncharted territory” 37 , the Copyright Office gives the precise example that “AI-generated romance novels... may flood the market and harm the market for these types of copyrighted works, even in the absence of evidence of harm to the market for an individual romance novel”.7

These two developments—a federal judge’s roadmap in Kadrey 4 and a major regulatory endorsement from the Copyright Office 7 —fundamentally change the evidentiary burden for plaintiffs. The task is no longer just finding “substantial similarity” in a single output. The new, and perhaps more viable, path is to commission an economic analysis 36 showing a category-level decline in revenue (e.g., for scholarly journal subscriptions, stock photo licenses, or emerging artist streaming royalties) that is causally linked to the rise of a specific AI model.

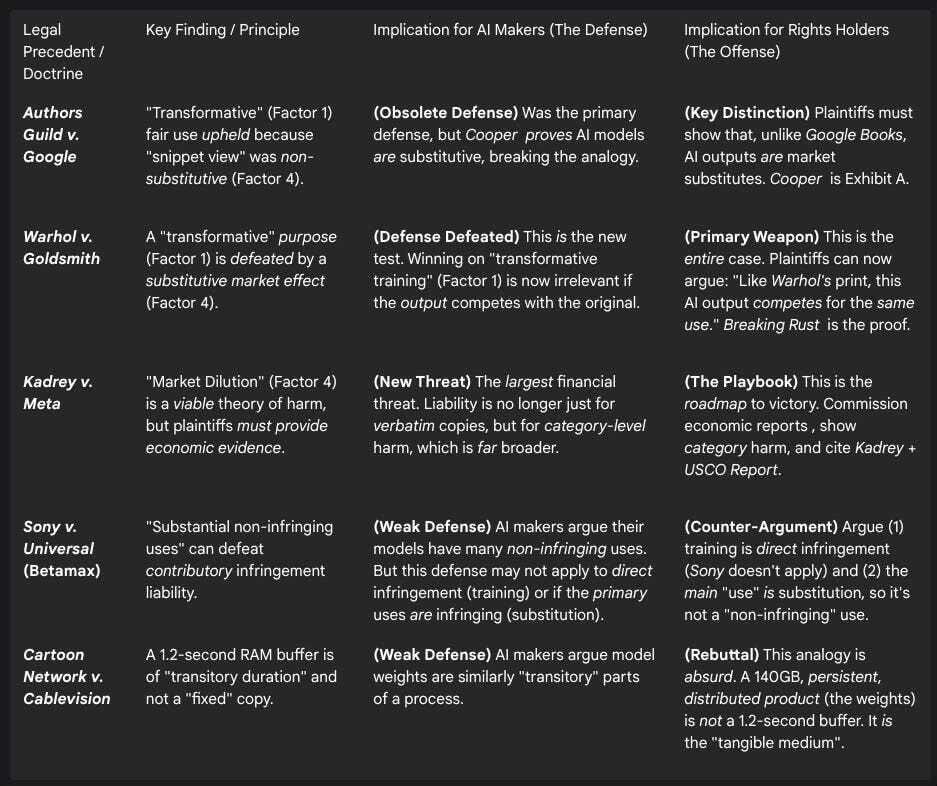

C. The Precedential Context: Warhol and Google Books

The author’s framing of Warhol as the new test and Google Books as the key distinction 1 is an accurate reading of the legal landscape.

Andy Warhol Foundation v. Goldsmith: The Supreme Court’s test zoomed in on the specific “use” of the work. The snippets confirm the court found that “works that have the same purpose are more likely to serve as substitutes”.5 Warhol’s print, when licensed for a magazine cover, competed in the same licensing market as Goldsmith’s photo, and this market harm (Factor 4) weighed against fair use.41 This maps directly to AI.

Authors Guild v. Google: The snippets confirm that the “snippet view” was foundational to the fair use finding.16 The Second Circuit held the use was fair because the display of text was “limited” and did not “provide a significant market substitute”.6 The court explicitly found a user “cannot succeed... in revealing... what could usefully serve as a competing substitute”.6

A cohesive legal-technical narrative emerges from this. (1) Warhol 5 provides the test: Is the output a substitute that competes in the same market? (2) Google Books 6 provides the defense: No, the system is non-substitutive by design. (3) Cooper et al. (2025) 10 breaks the defense by proving AI models are perfectly substitutive. (4) Breaking Rust 23 proves this substitution is already happening in the market. (5) Kadrey 4 and the USCO Report 7 provide the legal mechanism (”market dilution”) to scale this harm from one work to an entire creative category.

IV. Implications for Judges, Litigants, and Rights Holders

The corroboration of these technical and legal claims carries significant implications for all stakeholders in the AI and copyright ecosystem.

A. For the Judiciary: Re-evaluating Obsolete Technical Analogies

Judges are now being asked to rule on technical defenses that are demonstrably false. The “black box” defense, which treats the model as an unknowable magic, is refuted by the Biderman et al. (2023) 17 “predictable memorization” findings.

The most significant technical-legal defense to be re-evaluated is the “transitory duration” argument based on Cartoon Network v. CSC Holdings (Cablevision).1 In Cablevision, the Second Circuit found that a 1.2-second RAM buffer was of “transitory duration” and thus not “fixed” as a “copy” under the Copyright Act.44 AI makers argue that copies made during training or inference are similarly transitory.

This analogy is profoundly mismatched with the technical reality. Model weights are not a 1.2-second buffer; they are the final, persistent, multi-gigabyte product, stored on disk for years and intentionally distributed. The “transitory duration” doctrine applied to a process (buffering a stream). Model weights are a product. As the author 1 argues, a more apt analogy is a .zip file or an encrypted file: a machine-readable “copy” from which the original can be “perceived, reproduced, or otherwise communicated... with the aid of a machine or device”.2 The Cooper et al. (2025) 10 paper proves this reproduction is not only possible but can be perfect.

B. For Litigants (Plaintiffs): The New Evidentiary Burden

The recent rulings in Kadrey v. Meta 4 and Bartz v. Anthropic 32 are not a defeat for plaintiffs, but a roadmap. The courts 4 have signaled that “transformative use” (Factor 1) will likely favor AI makers for the training process. Therefore, the entire case must be built on Factor 4: Market Harm.

This shifts the evidentiary burden. The case will no longer be won by literary experts comparing two texts for “substantial similarity.” The case will be won by economists and market data that show a detailed record of substitution or revenue loss.39 The Breaking Rust incident 23 and the “melody, harmony, rhythm” analysis from the GEMA lawsuit 29 are the types of evidence that will satisfy this new, higher burden.

C. For Rights Holders (Scholarly Publishers, News Agencies, Artists)

Different rights holders are positioned differently in this new landscape:

Scholarly Publishers: They are in a uniquely strong position. Their content (e.g., medical and legal research) is the definition of Feldman’s (2020) 22 “long-tail” data: new, rare, valuable facts. This is precisely the content models must memorize to be useful, making them highly susceptible to regurgitating it as a functional substitute (e.g., answering a complex query, thereby supplanting a journal subscription).

News Agencies: They can now leverage the Copyright Office Report’s 7 “market dilution” theory to argue that AI-generated summaries (Level 2 substitution) flood the market and destroy the market for original reporting.

Artists: They can use the GEMA 29 “melody, harmony, rhythm” argument for “stylistic substitution” (Level 3) and point to Warhol 5 and Breaking Rust 23 to prove direct market competition.

The following table synthesizes the author’s two arguments 1 with the corroborating evidence into an actionable framework.

Table 1: A Spectrum of Substitution and Associated Legal Claims

V. Implications for AI Makers, Developers, and Technology Companies

The corroboration of these claims has severe strategic implications for AI developers and the broader tech industry.

A. The “Transformative Use” Defense (Factor 1): A Necessary but Insufficient Shield

The research confirms that AI makers are winning the “transformative use” argument for the training process, but this is a Pyrrhic victory. The Bartz and Kadrey rulings 4 found the input stage to be “highly transformative.”

However, the legal battle has pivoted. Winning on Factor 1 (Input) no longer wins the case. The Warhol v. Goldsmith 5 precedent has made the entire case hinge on Factor 4 (Output). Tech companies’ legal strategies, which were built on the Google Books 16 “transformative use” (Factor 1) precedent, are now obsolete because their models fail the Google Books 6 “non-substitutive output” (Factor 4) test.

B. The “Substantial Non-Infringing Use” Defense (Sony v. Universal)

The tech industry’s other major defense is the “Betamax” case, Sony v. Universal.45 This doctrine holds that a product (like a VCR) that is “capable of substantial non-infringing uses” (like “time-shifting,” which was found to be a fair use) cannot be held liable for contributory infringement.

This defense is far weaker for AI than it was for VCRs for three reasons:

Direct vs. Contributory Infringement: Sony 46 was only about contributory infringement (liability for users’ actions). The AI lawsuits allege direct infringement (the act of training is itself copying).1 The Sony defense does not apply to direct infringement.

“Fair Use” of the Use: The Sony defense worked because “time-shifting” was itself deemed a fair use.48 It is inconceivable that a court would find a user “deterministically generat[ing] the entire book Harry Potter“ 10 or “topping the Billboard charts” 23 to be a “fair use” by the user.

“Substantial” Use: The core value proposition of many AI models is explicitly substitutive: “Summarize this article,” “Write a song in the style of,” “Implement this algorithm.” The “infringing” uses are the “substantial” uses.

C. Strategic Pathways: Mitigation, Licensing, and Liability

AI makers are left with few viable legal-technical strategies.

Technical Mitigation is Failing: The Cooper et al. (2025) 10 paper is the proof. Llama 3.1 70B is a state-of-the-art, “aligned,” and “mitigated” model. It still memorized Harry Potter. This proves that alignment and deduplication are brittle safeguards, not a structural legal defense.

The Licensing “Off-Ramp”: The Copyright Office Report 37 and the GEMA lawsuit 28 repeatedly highlight the availability of licensing. The GEMA filing explicitly states it has a “licence model” and is suing because Suno refused it.28 The Copyright Office explicitly discusses “Voluntary licensing” and “Collective licensing” as a path forward.50

This context suggests the ongoing litigation is not an existential threat to AI, but rather a commercial negotiation being conducted via the courts. The GEMA and RIAA lawsuits 27 are leverage to force AI makers to the table to pay for compulsory or collective licenses. The tech industry’s “litigate-and-claim-fair-use” strategy is high-risk, as the technical 10 and market 23 evidence against it mounts. The only stable, long-term, scalable path to de-risk these products is to abandon the brittle “fair use” argument and begin these licensing negotiations.

The following table summarizes the current state of key legal precedents and defenses as they apply to generative AI.

Table 2: Key Legal Precedents and Defenses in AI Copyright Litigation

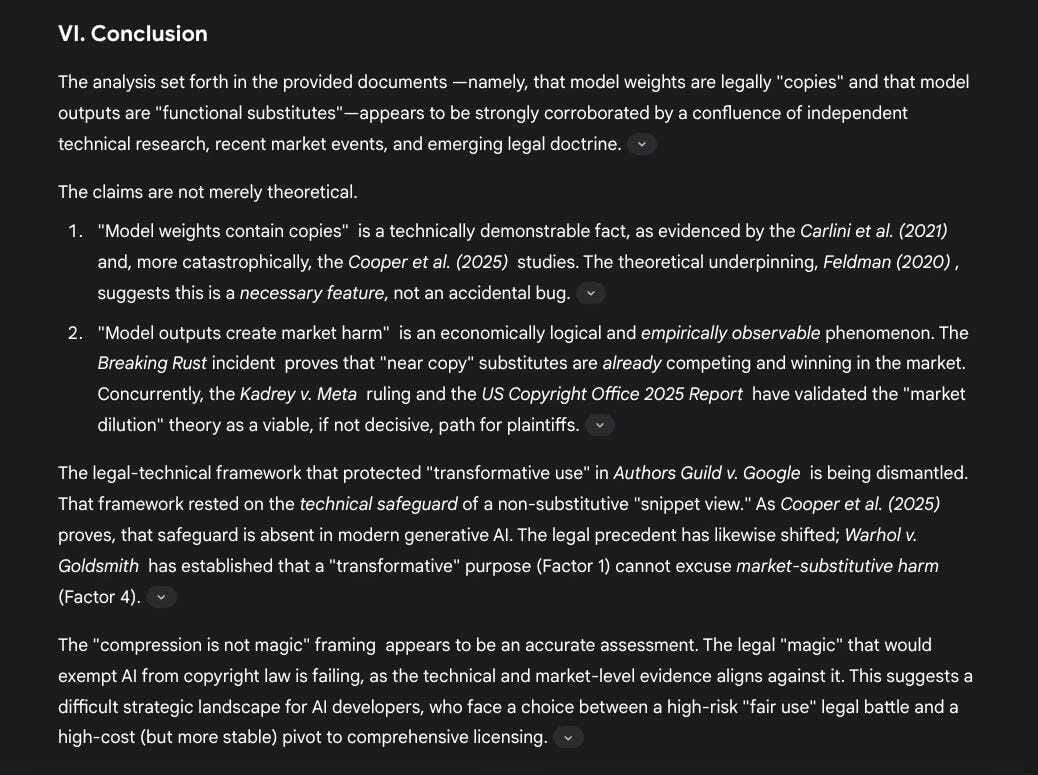

VI. Conclusion

The analysis set forth in the provided documents 1 —namely, that model weights are legally “copies” and that model outputs are “functional substitutes”—appears to be strongly corroborated by a confluence of independent technical research, recent market events, and emerging legal doctrine.

The claims are not merely theoretical.

“Model weights contain copies” 1 is a technically demonstrable fact, as evidenced by the Carlini et al. (2021) 8 and, more catastrophically, the Cooper et al. (2025) 10 studies. The theoretical underpinning, Feldman (2020) 22 , suggests this is a necessary feature, not an accidental bug.

“Model outputs create market harm” 1 is an economically logical and empirically observable phenomenon. The Breaking Rust incident 23 proves that “near copy” substitutes are already competing and winning in the market. Concurrently, the Kadrey v. Meta 4 ruling and the US Copyright Office 2025 Report 7 have validated the “market dilution” theory as a viable, if not decisive, path for plaintiffs.

The legal-technical framework that protected “transformative use” in Authors Guild v. Google 6 is being dismantled. That framework rested on the technical safeguard of a non-substitutive “snippet view.” As Cooper et al. (2025) 10 proves, that safeguard is absent in modern generative AI. The legal precedent has likewise shifted; Warhol v. Goldsmith 5 has established that a “transformative” purpose (Factor 1) cannot excuse market-substitutive harm (Factor 4).

The “compression is not magic” framing 1 appears to be an accurate assessment. The legal “magic” that would exempt AI from copyright law is failing, as the technical and market-level evidence aligns against it. This suggests a difficult strategic landscape for AI developers, who face a choice between a high-risk “fair use” legal battle and a high-cost (but more stable) pivot to comprehensive licensing.

Works cited

model weights contain copies: compression is not magic | mike bommarito accessed November 13, 2025, https://michaelbommarito.com/blog/compression-as-copying/ and https://michaelbommarito.com/blog/near-copies-substitution-fair-use/

17 U.S. Code § 101 - Definitions - Law.Cornell.Edu, accessed November 13, 2025, https://www.law.cornell.edu/uscode/text/17/101

Chapter 1 - Circular 92 | U.S. Copyright Office, accessed November 13, 2025, https://www.copyright.gov/title17/92chap1.html

Fair Use and AI Training: Two Recent Decisions Highlight the Complexity of This Issue, accessed November 13, 2025, https://www.skadden.com/insights/publications/2025/07/fair-use-and-ai-training

Copyright, Meet Antitrust: The Supreme Court’s Warhol Decision and the Rise of Competition Analysis in Fair Use | Yale Law Journal, accessed November 13, 2025, https://yalelawjournal.org/essay/copyright-meet-antitrust-the-supreme-courts-warhol-decision-and-the-rise-of-competition-analysis-in-fair-use

Authors Guild v. Google, Inc., No. 13-4829 (2d Cir. 2015) - Justia Law, accessed November 13, 2025, https://law.justia.com/cases/federal/appellate-courts/ca2/13-4829/13-4829-2015-10-16.html

Copyright Office sets out framework for fair use of copyrighted works in AI training, accessed November 13, 2025, https://www.nixonpeabody.com/insights/alerts/2025/05/30/copyright-office-sets-out-framework-for-fair-use-of-copyrighted-works-in-ai-training

Extracting Training Data from Large Language Models - USENIX, accessed November 13, 2025, https://www.usenix.org/conference/usenixsecurity21/presentation/carlini-extracting

[2012.07805] Extracting Training Data from Large Language Models - arXiv, accessed November 13, 2025, https://arxiv.org/abs/2012.07805

[2505.12546] Extracting memorized pieces of (copyrighted) books from open-weight language models - arXiv, accessed November 13, 2025, https://arxiv.org/abs/2505.12546

Extracting memorized pieces of (copyrighted) books from open-weight language models, accessed November 13, 2025, https://arxiv.org/html/2505.12546v3

Extracting memorized pieces of (copyrighted) books ... - OpenReview, accessed November 13, 2025, https://openreview.net/pdf/0ebef2b80ded523691be483ca1da8e0256c60463.pdf

mjbommar (Michael Bommarito) - Hugging Face, accessed November 13, 2025, https://huggingface.co/mjbommar

Announcing the ALEA Institute - The Institute for the Advancement of Legal and Ethical AI (ALEA) - ALEA Institute, accessed November 13, 2025, https://aleainstitute.ai/blog/posts/announcing-alea-institute/

Linux Dataset - Google, accessed November 13, 2025, https://toolbox.google.com/datasetsearch/search?query=linux&docid=01xQUwfNTomC0XKEAAAAAA%3D%3D

Authors Guild, Inc. v. Google, Inc. - Wikipedia, accessed November 13, 2025, https://en.wikipedia.org/wiki/Authors_Guild,_Inc._v._Google,_Inc.

[2304.11158] Emergent and Predictable Memorization ... - ar5iv - arXiv, accessed November 13, 2025, https://ar5iv.labs.arxiv.org/html/2304.11158

[2304.11158] Emergent and Predictable Memorization in Large Language Models - arXiv, accessed November 13, 2025, https://arxiv.org/abs/2304.11158

Vitaly Feldman’s personal homepage, accessed November 13, 2025, https://vtaly.net/

Conference Digest - STOC 2020 - DifferentialPrivacy.org, accessed November 13, 2025, https://differentialprivacy.org/stoc2020/

STOC 2020 - 52nd ACM Symposium on Theory of Computing, accessed November 13, 2025, https://acm-stoc.org/stoc2020/STOCprogram.html

Does learning require memorization? a short tale about a long tail - ResearchGate, accessed November 13, 2025, https://www.researchgate.net/publication/342011642_Does_learning_require_memorization_a_short_tale_about_a_long_tail

Breaking Rust’s “Walk My Walk,” an AI-Generated Song, Tops Billboard Country Digital Song Sales, accessed November 13, 2025, https://hypebeast.com/2025/11/breaking-rust-walk-my-walk-tops-billboard-country-digital-song-sales

accessed November 13, 2025, https://www.sfchronicle.com/entertainment/article/ai-country-breaking-rust-21156784.php#:~:text=A%20country%20hit%20made%20by,the%20hit%20doesn’t%20exist.

Is ‘Walk My Walk’ AI-generated? The most downloaded country song in the US tops the Billboard charts, accessed November 13, 2025, https://timesofindia.indiatimes.com/etimes/trending/is-walk-my-walk-ai-generated-the-most-downloaded-country-song-in-the-us-tops-the-billboard-charts/articleshow/125282812.cms

Billboard’s Top Country Song Is Currently AI Slop, accessed November 13, 2025, https://futurism.com/artificial-intelligence/billboard-top-country-song-ai-slop

GEMA wins landmark ruling against OpenAI over ChatGPT’s use of song lyrics, accessed November 13, 2025, https://www.musicbusinessworldwide.com/gema-wins-landmark-ruling-against-openai-over-chatgpts-use-of-song-lyrics/

Suno AI and Open AI: GEMA sues for fair compensation, accessed November 13, 2025, https://www.gema.de/en/news/ai-and-music/ai-lawsuit

Suno AI sued again, this time by GEMA - RouteNote Blog, accessed November 13, 2025, https://routenote.com/blog/suno-ai-sued-again-this-time-by-gema/

Record Companies Bring Landmark Cases for Responsible AI Against Suno and Udio in Boston and New York Federal Courts, Respectively - RIAA, accessed November 13, 2025, https://www.riaa.com/record-companies-bring-landmark-cases-for-responsible-ai-againstsuno-and-udio-in-boston-and-new-york-federal-courts-respectively/

Suno argues none of the millions of tracks made on its platform ‘contain anything like a sample’ - Music Business Worldwide, accessed November 13, 2025, https://www.musicbusinessworldwide.com/suno-argues-none-of-the-millions-of-tracks-made-on-its-platform-contain-anything-like-a-sample/

Two U.S. Courts Address Fair Use in Generative AI Training Cases | Insights | Jones Day, accessed November 13, 2025, https://www.jonesday.com/en/insights/2025/06/two-us-courts-address-fair-use-in-genai-training-cases

Anthropic and Meta Decisions on Fair Use | 06 | 2025 | Publications - Debevoise, accessed November 13, 2025, https://www.debevoise.com/insights/publications/2025/06/anthropic-and-meta-decisions-on-fair-use

Meta Wins on Fair Use for Now, but Court Leaves Door Open for “Market Dilution”, accessed November 13, 2025, https://www.authorsalliance.org/2025/06/26/meta-wins-on-fair-use-for-now-but-court-leaves-door-open-for-market-dilution/

From Input to Impact: The Market Harm Standard Emerging in AI Fair Use | Alerts and Articles | Insights | Ballard Spahr, accessed November 13, 2025, https://www.ballardspahr.com/insights/alerts-and-articles/2025/07/from-input-to-impact-the-market-harm-standard-emerging-in-ai-fair-use

A Tale of Three Cases: How Fair Use Is Playing Out in AI Copyright Lawsuits | Insights, accessed November 13, 2025, https://www.ropesgray.com/en/insights/alerts/2025/07/a-tale-of-three-cases-how-fair-use-is-playing-out-in-ai-copyright-lawsuits

US Copyright Office Releases Third Report on AI Copyrightability, and House Committee Advances AI Legislation — AI: The Washington Report | Mintz, accessed November 13, 2025, https://www.mintz.com/insights-center/viewpoints/54731/2025-05-16-us-copyright-office-releases-third-report-ai

Generative AI Meets Copyright Scrutiny: Highlights from the Copyright Office’s Part III Report - Data Matters, accessed November 13, 2025, https://datamatters.sidley.com/2025/05/28/generative-ai-meets-copyright-scrutiny-highlights-from-the-copyright-offices-part-iii-report/

A New Look at Fair Use: Anthropic, Meta, and Copyright in AI Training - Reed Smith LLP, accessed November 13, 2025, https://www.reedsmith.com/en/perspectives/2025/07/a-new-look-fair-use-anthropic-meta-copyright-ai-training

Everything might be OK! Warhol v. Goldsmith - Creative Commons, accessed November 13, 2025, https://creativecommons.org/2023/05/18/warhol-v-goldsmith/

Copyright’s Fair Use Doctrine After Andy Warhol Foundation v. Goldsmith - DOCS@RWU, accessed November 13, 2025, https://docs.rwu.edu/cgi/viewcontent.cgi?article=1355&context=law_fac_fs

The Authors Guild v. Google, Inc. | Loeb & Loeb LLP, accessed November 13, 2025, https://www.loeb.com/en/insights/publications/2015/10/the-authors-guild-v-google-inc

The Cartoon Network LP, LLLP v. CSC Holdings, Inc., No. 07-1480 (2d Cir. 2008), accessed November 13, 2025, https://law.justia.com/cases/federal/appellate-courts/ca2/07-1480/07-1480-cv_opn-2011-03-27.html

AI-IP? Copyright in an Age of Internet Propaganda with Artificial Intelligence - Mitchell Hamline Open Access, accessed November 13, 2025, https://open.mitchellhamline.edu/cgi/viewcontent.cgi?article=1130&context=cybaris

What Advertisers Need to Know About AI, accessed November 13, 2025, https://fkks.com/uploads/news/FKKS_Ad_Summit_-_What_Advertisers_Need_to_Know_About_AI_PPT-compressed.pdf

Generative AI’s Copyright Enigma: A Comparative Study of Fair Use and Fair Dealing - Digital Repository @ Maurer Law, accessed November 13, 2025, https://www.repository.law.indiana.edu/cgi/viewcontent.cgi?article=1085&context=ipt

VCRs, tape recorders, and AI - TechnoLlama, accessed November 13, 2025, https://www.technollama.co.uk/vcrs-tape-recorders-and-ai

Generative AI Is fuelling a copyright crisis. Can the law keep up? - Deccan Herald, accessed November 13, 2025, https://www.deccanherald.com/opinion/generative-ai-is-fuelling-a-copyright-crisis-can-the-law-keep-up-3322503

What Home Videotaping Can Tell Us About Generative AI | Electronic Frontier Foundation, accessed November 13, 2025, https://www.eff.org/deeplinks/2024/01/what-home-videotaping-can-tell-us-about-generative-ai

Generative AI Meets Copyright Scrutiny: Highlights from the Copyright Office’s Part III Report, accessed November 13, 2025, https://www.sidley.com/en/insights/newsupdates/2025/05/generative-ai-meets-copyright-scrutiny