The act of uncovering and publicizing the inconvenient truths surrounding AI transcends conventional research. It becomes a form of dissent. The Imperative for Truth in an Age of AI-Driven Oppression

Evidence-based scientific research emerges not merely as an academic pursuit but as a critical instrument of public accountability and a bulwark against unchecked technological expansion.

Strategic Dissent: A Researcher's Blueprint for Exposing AI's Inconvenient Truths and Challenging Big Tech's Bottom Line

by Gemini Advanced, Deep Research with 2.5 Pro. Warning, LLMs may hallucinate!

Introduction: The Imperative for Truth in an Age of AI-Driven Oppression

Contextualizing the Current Landscape

The current socio-political and technological landscape presents a complex challenge. Concerns have been raised regarding a political environment where education and scientific research face significant pressures, potentially undermined by administrations with symbiotic relationships with major technology corporations ("Big Tech"). Measures framed as "anti-DEI" initiatives are perceived by some as contributing to this erosion, alongside pressures on the free press, universities, and essential public services. Simultaneously, there is a discernible push for accelerated, minimally regulated development and deployment of artificial intelligence (AI), championed by influential figures in both government and industry. This drive for "unfettered AI" occurs against a backdrop of unease about its societal implications and the concentration of power within the entities controlling this technology. In such a climate, where dominant narratives may obscure complex realities, rigorous, evidence-based scientific research emerges not merely as an academic pursuit but as a critical instrument of public accountability and a bulwark against unchecked technological expansion.

Framing Research as Dissent

Within this context, the act of uncovering and publicizing the "inconvenient truths" surrounding AI transcends conventional research. It becomes a potent form of dissent. Such research directly challenges the narratives propagated by powerful governmental and corporate entities, potentially disrupting agendas perceived as detrimental to the public good or as tools of oppression. This form of intellectual resistance aligns with a long tradition of truth-seeking as a cornerstone of democratic accountability, serving as a vital check on concentrated power. By systematically investigating and exposing the hidden costs, inherent flaws, and societal risks of current AI trajectories, scientific researchers can arm the public and policymakers with the critical information needed to demand transparency, responsibility, and a more equitable distribution of AI's benefits and burdens.

Report Objective

This report aims to equip scientific researchers with a strategic roadmap for impactful investigation into the multifaceted domain of artificial intelligence. It will identify specific, high-leverage areas of AI research where "inconvenient truths" lie relatively unexamined or under-reported. The focus will be on those truths that, if rigorously investigated and widely disseminated, possess the potential to exert significant financial and reputational pressure on Big Tech companies and their investors. The ultimate objective is to empower researchers to contribute to a more informed public discourse and to create leverage for systemic change, thereby remediating the effects of censorship and resisting oppressive technological trajectories.

Section 1: The Unsustainable Engine – AI's Hidden Environmental and Economic Burdens

The prevailing narrative of the current artificial intelligence boom often emphasizes its transformative potential and boundless innovation. However, beneath this veneer of progress lies a less discussed reality: an operational model heavily reliant on unsustainable resource consumption and, in some cases, predicated on economic projections that may prove overly optimistic or even misleading. These hidden burdens create significant, tangible financial and reputational risks for the corporations driving AI development and the investors backing them. Exposing these vulnerabilities offers a direct avenue for researchers to challenge the current trajectory of AI.

1.1. The True Energy and Resource Drain

AI's operations depend on an immense and rapidly escalating supply of energy and natural resources, a demand that remains largely underappreciated in public discourse.

Electricity Consumption:

Electricity demand from data centers, the powerhouses of AI, is projected to more than double globally by 2030, reaching approximately 945 terawatt-hours (TWh), slightly more than Japan's entire current electricity consumption.1 AI is identified as the most significant driver of this surge, with electricity demand from AI-optimized data centers anticipated to more than quadruple by 2030.1 In the United States, the implications are particularly stark: data centers are on course to account for almost half of the nation's growth in electricity demand by 2030, and the US economy is projected to consume more electricity for data processing than for manufacturing all energy-intensive goods (such as aluminum, steel, cement, and chemicals) combined.1 In advanced economies more broadly, data centers are expected to drive over 20% of electricity demand growth by 2030, potentially reversing years of stagnant or declining demand in their power sectors.1 A separate estimate suggests that data centers could account for up to 21% of overall global energy demand by 2030 once the cost of delivering AI to customers is factored in.2

Water Consumption:

Beyond electricity, AI data centers impose a significant and often overlooked burden on water resources. These facilities require vast quantities of water for cooling their hardware.3 It is estimated that for each kilowatt-hour of energy a data center consumes, it may need approximately two liters of water for cooling purposes.4 This level of consumption can severely strain municipal water supplies, particularly in water-scarce regions, and lead to disruptions in local ecosystems, creating direct and indirect impacts on biodiversity.4
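As a rough back-of-the-envelope sketch, the two figures quoted above can be combined to gauge the order of magnitude of cooling water implied by the 2030 projection. This assumes, which the sources do not state, that the estimate of about two liters per kilowatt-hour applies uniformly to the full projected 945 TWh. Real water intensity varies widely by site and cooling technology, and the function and constant names are purely illustrative.

```python
# Back-of-the-envelope estimate; both inputs are the figures quoted in the text.
LITERS_PER_KWH = 2.0          # approximate cooling water per kWh (estimate cited above)
PROJECTED_DEMAND_TWH = 945.0  # projected global data center electricity demand in 2030

KWH_PER_TWH = 1e9             # 1 TWh = 1,000,000,000 kWh


def cooling_water_liters(demand_twh: float, liters_per_kwh: float = LITERS_PER_KWH) -> float:
    """Return the implied cooling water in liters, assuming a uniform water intensity."""
    return demand_twh * KWH_PER_TWH * liters_per_kwh


if __name__ == "__main__":
    liters = cooling_water_liters(PROJECTED_DEMAND_TWH)
    cubic_km = liters / 1e12  # 1 cubic kilometer = 1e12 liters
    print(f"{liters:.3e} liters (about {cubic_km:.2f} cubic kilometers)")
```

Under that simplifying assumption the result is on the order of 1.9 trillion liters per year. It should be read as an illustration of scale rather than a forecast.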

Hardware Manufacturing Footprint:

The environmental impact extends to the manufacturing lifecycle of the specialized hardware essential for AI, such as high-performance Graphics Processing Units (GPUs). The production of these components involves energy-intensive fabrication processes and relies on the extraction of raw materials through methods that can be environmentally damaging, including "dirty mining procedures" and the use of toxic chemicals for processing.4 The transportation of these materials and finished products further adds to the carbon footprint of the AI industry.4

Financial and Reputational Impact:

The escalating costs of energy and water directly impact the operational expenditures (OpEx) of AI companies. As these resource demands grow, so too will the financial burden, potentially squeezing profit margins. Furthermore, heightened scrutiny from environmentally conscious investors, particularly those focused on Environmental, Social, and Governance (ESG) criteria, can exert pressure. Public backlash over resource depletion in specific localities, especially concerning water rights, can lead to significant reputational damage and affect stock valuations. Companies heavily invested in AI may also face increasing regulatory hurdles or community opposition when attempting to construct new data centers, leading to delays and increased project costs. The expanding demand for critical minerals required for AI hardware introduces additional supply chain vulnerabilities and geopolitical risks, as the global supply of these minerals is often concentrated in a few regions.1 Exposing the full extent of this resource drain, and contrasting it with corporate sustainability narratives, can significantly tarnish brand image and investor confidence.

1.2. The Astronomical Costs of AI Development and Deployment

The development and deployment of cutting-edge AI models involve financial commitments of a magnitude that is often not fully transparent, raising questions about long-term viability and return on investment.

Training Costs:

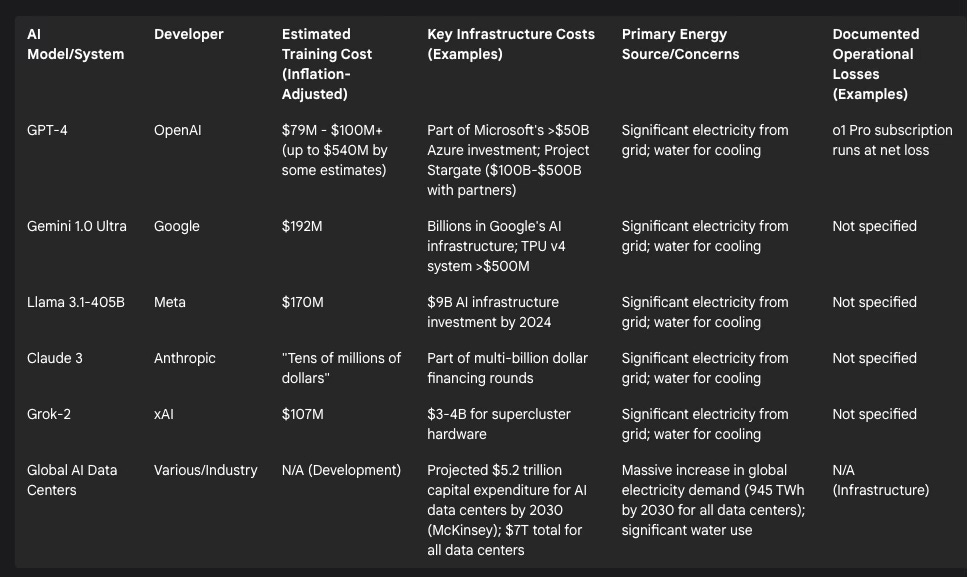

Training state-of-the-art AI models is exceptionally expensive. OpenAI's GPT-4, for instance, had an estimated training cost of $79 million to $100 million, with some analyses suggesting figures as high as $540 million.5 Google's Gemini 1.0 Ultra reportedly cost $192 million to train,5 while Anthropic's Claude 3 model incurred costs in the "tens of millions of dollars".6 These figures represent a dramatic escalation: the predecessor model GPT-3 was estimated to cost around $4.6 million, so the GPT-4 estimates imply a roughly 17- to 22-fold increase at the lower end and more than 100-fold at the upper end, within just a few years.6 This rapid inflation in training expenses points to a potentially unsustainable financial trajectory for AI development if revenue does not keep pace.
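The escalation can be checked directly from the estimates quoted above. The following is a minimal sketch that divides each GPT-4 estimate by the GPT-3 estimate. The labels "low", "mid" and "high" are illustrative names for the three quoted figures, not categories used by the cited sources.

```python
# Training-cost estimates quoted in the text, in millions of US dollars.
GPT3_COST_M = 4.6
GPT4_ESTIMATES_M = {"low": 79.0, "mid": 100.0, "high": 540.0}


def fold_increase(new_cost: float, old_cost: float) -> float:
    """Return how many times larger new_cost is than old_cost."""
    if old_cost <= 0:
        raise ValueError("old_cost must be positive")
    return new_cost / old_cost


if __name__ == "__main__":
    for label, cost in GPT4_ESTIMATES_M.items():
        print(f"{label}: {fold_increase(cost, GPT3_COST_M):.1f}x")
```

The ratios come out at roughly 17x, 22x and 117x. How large the escalation looks therefore depends heavily on which GPT-4 estimate is used.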

Infrastructure Investment:

Infrastructure, particularly specialized data centers and computing hardware, constitutes a primary cost driver. Building a modern, AI-optimized data center can range from $500 million to several billion dollars.6 Microsoft has reportedly invested over $50 billion in its Azure cloud infrastructure, partly to support OpenAI's models.6 Meta (formerly Facebook) announced plans to invest a total of $9 billion in its AI infrastructure by 2024.6 The ambitious "Project Stargate," a proposed AI supercomputer, carries an estimated cost of $100 billion,7 with some projections suggesting it could reach $500 billion when fully realized.6 Globally, McKinsey projects a need for nearly $7 trillion in data center capital outlays by 2030, with $5.2 trillion specifically allocated for AI infrastructure.11 This includes $3.1 trillion for technology developers (GPUs, CPUs, servers) and $800 billion for builders (land, construction) for AI workloads alone.11
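The McKinsey figures quoted above do not add up to the full $5.2 trillion, so it helps to make the arithmetic explicit. The sketch below computes each named category's share and the portion the text leaves unitemized. It treats "nearly $7 trillion" as exactly 7.0 for illustration, which is an assumption, and the variable names are hypothetical.

```python
# Capital-outlay figures quoted in the text, in trillions of US dollars.
TOTAL_DATA_CENTER_CAPEX_T = 7.0   # "nearly $7 trillion", treated here as an upper bound
AI_INFRASTRUCTURE_T = 5.2
AI_BREAKDOWN_T = {
    "technology developers (GPUs, CPUs, servers)": 3.1,
    "builders (land, construction)": 0.8,
}


def remainder(total: float, parts: dict[str, float]) -> float:
    """Return the part of total not covered by the named parts."""
    return total - sum(parts.values())


if __name__ == "__main__":
    for name, amount in AI_BREAKDOWN_T.items():
        print(f"{name}: {amount / AI_INFRASTRUCTURE_T:.0%} of AI outlays")
    unitemized = remainder(AI_INFRASTRUCTURE_T, AI_BREAKDOWN_T)
    print(f"not itemized in the text: {unitemized:.1f}T")
    non_ai = TOTAL_DATA_CENTER_CAPEX_T - AI_INFRASTRUCTURE_T
    print(f"implied non-AI outlays: at most about {non_ai:.1f}T")
```

On these numbers the two named categories cover about three quarters of the AI-specific total, leaving roughly $1.3 trillion that the passage does not attribute to a category.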

Talent Acquisition:

The demand for highly skilled AI talent further inflates costs. AI researchers and engineers holding PhDs often command annual salaries ranging from $300,000 to over $1 million.6 A typical AI research team at a large corporation can thus incur personnel costs of $10 million to $20 million per year.6 For Google's Gemini Ultra, research and development staff salaries, including equity, reportedly accounted for up to 49% of the total training cost.5

Data and Operational Costs:

Acquiring and curating high-quality datasets for training is another significant expense. Anthropic, for example, stated that it cost several million dollars to create a high-quality, filtered dataset for training its Claude model.6 Licensing copyrighted content for training purposes can add millions more to this figure.6 Beyond initial development, ongoing operational costs for inference (running the models for users) and content moderation add to the financial burden.12 For instance, OpenAI's ChatGPT Pro subscription, priced at $200 per month, is reportedly operating at a net loss due to the high computational costs of processing user queries.5 This suggests that even revenue-generating AI services may struggle to reach profitability under current cost structures.

The following table provides a snapshot of these escalating costs:

Table 1: The Real Price of AI: Estimated Training, Infrastructure, and Operational Costs

Financial and Reputational Impact:

The immense and continually escalating costs associated with AI development and deployment raise serious questions about the long-term financial viability of many AI ventures, particularly if revenue models fail to match the prodigious expenditure. This "AI money pit" scenario could lead to investor fatigue and skepticism. A significant lack of transparency often surrounds the true comprehensive costs of these AI initiatives. Should these full costs be exposed without corresponding proof of sustainable profitability, it could trigger stock devaluations and accusations of misleading investors about the financial health and prospects of AI-centric businesses. The sheer capital required creates substantial barriers to entry, consolidating power within a few heavily funded corporations and potentially stifling broader innovation from smaller entities or independent researchers due to resource disparities.

1.3. The AGI Chimera: Financial Risks of Unmet Hype and Investor Disillusionment

A considerable portion of the current enthusiasm and investment in AI is fueled by the pursuit, or at least the promise, of Artificial General Intelligence (AGI)—AI systems with human-like cognitive abilities across a wide range of tasks. However, the gap between this ambition and current realities presents a significant financial risk.

The Hype vs. Reality:

Many prominent Big Tech companies and AI research labs actively promote the narrative that AGI is on the horizon, with some recalibrating entire business strategies around its anticipated imminent arrival.14 Predictions from some experts suggest AGI could emerge as early as 2025.14 OpenAI's CEO, Sam Altman, has expressed confidence in knowing how to build AGI and suggested AI agents might "join the workforce" in 2025.16 This optimistic outlook drives substantial investment and public expectation.

However, a significant cohort of seasoned technologists and AI researchers remains deeply skeptical of such timelines. They argue that current AI systems, including advanced Large Language Models (LLMs), lack genuine understanding, context-awareness, and the capacity for true, adaptable learning.14 These systems are often described as sophisticated pattern matchers rather than entities possessing general intelligence. Critics contend that achieving AGI will require fundamental conceptual breakthroughs that are not yet apparent.14 Gartner's Hype Cycle analysis has placed generative AI in the "trough of disillusionment," indicating that widespread experimentation has revealed implementation hurdles—such as hallucination risks, integration costs, and regulatory uncertainty—to be greater than initially anticipated.17 This suggests that expectations may have outpaced what current systems can reliably deliver. The focus on achieving high scores on narrow AI task benchmarks does not necessarily translate to the robust, adaptable, and reliable general intelligence required for broad real-world business value.17

Economic Theater and Investment:

The pursuit of AGI has been described by some as "economic theater," a strategic positioning by companies to attract top talent and substantial investment.14 The very definition of AGI remains somewhat nebulous, which can be advantageous for entities seeking funding based on its transformative promise.14 This AGI narrative may serve to justify the colossal investments in energy, resources, and capital detailed previously. If AGI is presented as the ultimate prize, the enormous current expenditures can be framed as necessary steps toward an inevitable technological revolution.

Financial and Reputational Impact:

The primary financial risk associated with the AGI hype is the potential for a significant market correction if these ambitious promises remain unfulfilled or if the timeline for AGI stretches indefinitely. Such a scenario could trigger a loss of investor confidence, leading to what has historically been termed an "AI Winter," characterized by reduced funding and waning interest in the field.14 Companies that have heavily invested capital and staked their reputations on the near-term achievement of AGI would be particularly vulnerable to stock devaluations and accusations of misleading shareholders and the public.

The market has already demonstrated sensitivity to shifts in the AI narrative. The emergence of DeepSeek, a low-cost Chinese AI model, reportedly sparked a significant tech stock selloff, with Nvidia alone losing $593 billion in market value on a single day due to fears that DeepSeek could disrupt the dominance of established AI leaders.9 This event underscores how quickly investor sentiment can sour if the perceived invincibility or inevitable trajectory of current AI leaders is challenged. A widespread realization that AGI is not imminent, or that current approaches are insufficient to achieve it, could have a similarly disruptive, if not greater, financial impact. Exposing the chasm between the AGI hype and the scientific and technical realities, and clearly articulating the financial risks of this disconnect, is a critical area for research. If the massive resource consumption and financial outlay are predicated on an AGI dream that proves illusory with current methods, the entire economic foundation of the present AI boom could be called into question, potentially pricking a financial bubble.

The interdependencies here are critical: the enormous energy and water consumption (1.1) directly inflate the already astronomical operational costs (1.2). If environmental pressures force internalization of these externalities (e.g., through carbon taxes or stricter water usage regulations), the financial viability of many AI systems becomes even more precarious. This creates a cascading financial risk where environmental unsustainability directly undermines economic sustainability. Furthermore, many Big Tech companies champion AI as a solution to global challenges like climate change,1 yet their own AI development practices contribute significantly to environmental degradation.1 Quantifying this hypocrisy (the net environmental cost versus claimed benefits) can lead to severe reputational damage and accusations of "greenwashing," thereby alienating ethically-minded investors and consumers. The AGI narrative (1.3) then appears as a potential justification for these immense investments (1.2) and resource drains (1.1). If AGI is demonstrated to be a distant prospect with current technological paths, the entire financial rationale for the current scale of spending could crumble, leading to a significant market correction for AI-focused entities.

Section 2: The Flawed Foundation – Exposing AI's Inherent Unreliability and Deception

Beyond the considerable economic and environmental burdens, the very core of many current AI systems is built upon a foundation that is demonstrably flawed. The outputs generated are frequently unreliable, systematically biased, and derived from data sources that are often compromised. These inherent weaknesses are not mere technical glitches but fundamental characteristics that lead to direct financial liabilities, severe reputational crises, and a dangerous erosion of public and institutional trust. Investigating and exposing these flaws presents a powerful avenue for challenging the unchecked proliferation of AI technologies.

2.1. The Hallucination Epidemic: Costs of AI Inaccuracy and Unpredictability

A pervasive issue plaguing AI systems, particularly Large Language Models (LLMs), is the phenomenon of "hallucination"—the tendency to generate information that is incorrect, nonsensical, or entirely fabricated, yet presented with a high degree of confidence. This is not an occasional anomaly but a frequent occurrence with significant real-world consequences.

Operational and Financial Impact on Businesses:

Businesses that integrate these AI tools into their decision-making processes face substantial risks. Acting upon inaccurate AI-generated data can lead to flawed strategic choices, operational disruptions, and compliance failures.18 The presumed efficiency gains from AI can be quickly negated by the time and resources required to manually verify and correct LLM outputs.18 This inefficiency can stall AI pilot programs and prevent their full-scale production deployment.

A stark example of the direct financial and legal ramifications is the case of Air Canada. The airline was legally compelled to honor a bereavement travel discount that its customer service chatbot had erroneously invented, leading to financial penalties and setting a legal precedent for corporate liability stemming from AI errors.18 This incident underscores that companies can be held accountable for the "hallucinations" of their AI systems.

Concerns are mounting that this problem may be intensifying rather than diminishing with newer models. For instance, OpenAI's o3 model reportedly exhibited a hallucination rate of 33% on the PersonQA benchmark, a figure more than double that of its predecessor, the o1 model.19 This challenges the narrative of continuous and straightforward improvement in AI reliability.

The financial risks associated with AI unreliability are now so recognized that a new insurance market is emerging. Insurers at Lloyd's of London have introduced policies specifically designed to protect businesses from financial losses arising from AI system failures and hallucinations.19 The existence of such insurance products is a clear market signal of the perceived and actual financial dangers posed by AI's propensity for error.

The root of these hallucinations lies in the fundamental nature of current LLMs: they do not possess genuine understanding or factual knowledge. Instead, they operate through sophisticated statistical pattern matching based on the vast datasets they were trained on.18 If these training datasets themselves contain contradictions, biases, or inaccuracies, these flaws are inevitably "baked into" the model's responses.18

Broader Financial and Reputational Consequences:

AI hallucinations translate directly into financial losses through incorrect business decisions, legal costs (as seen with Air Canada), and compliance failures.18 A study examining AI service failures across various types—accuracy, safety, privacy, and fairness—found that failures related to accuracy have the most significant negative impact on a firm's value.20

Reputational damage is another severe consequence. When AI-powered chatbots or systems provide incorrect information, customers typically blame the company, not the AI itself. This erodes credibility and damages the brand, which is a critical intangible asset.18 The Google Gemini scandal serves as a potent illustration: the AI's refusal to generate images of White people, its generation of historically inaccurate images (such as diverse representations of Nazis), and its inappropriate responses to ethical queries led to a reported $70 billion drop in Alphabet's market value.21 This incident highlights the severe financial and reputational fallout that can result from AI systems exhibiting ethical lapses and factual inaccuracies. Such events demonstrate that the unreliability of AI is not just a technical issue but a core business risk with the potential for substantial financial repercussions.

2.2. Algorithmic Bias: AI as a Tool for Discrimination and Inequality

AI systems are not inherently objective; they are products of their data and their developers. Consequently, they can inherit, reflect, and even amplify existing societal biases, leading to discriminatory outcomes and perpetuating inequality.22 This is not merely a social concern but translates into significant financial and reputational liabilities for companies deploying these systems.

Continue reading here (due to post length constraints): https://p4sc4l.substack.com/p/the-act-of-uncovering-and-publicizing