- Pascal's Chatbot Q&As



Starlink may collect not just account/billing/performance data but also ‘communication information’ and ‘inferences.’ What govts should do (if they don’t want “connectivity sovereignty” to be a joke)

Treat satellite ISPs as critical infrastructure, Mandate true consent for AI training, Hard limits on “third-party AI training” transfers, Sovereign fallback options, Conflict-of-interest firewalls.

Starlink + AI Training + Musk’s Power Stack: the upside of “connected everywhere”… and the governance nightmare baked into it

by ChatGPT-5.2

The article ‘Starlink Is Using Your Personal Data to Train AI. Here’s How to Opt Out’ reports that Starlink updated its privacy policy (effective mid-January 2026) to allow customer personal data to be used to train machine-learning/AI models by default, while offering an opt-out setting in “Privacy Preferences.” It also notes language about sharing data with third parties for AI training, potentially for those third parties’ “independent purposes,” and highlights that Starlink may collect not just account/billing/performance data but also ‘communication information’ and ‘inferences.’

That change would be controversial even if Starlink were “just another ISP.” But Starlink is not just an ISP: it is a critical, geopolitically entangled communications substrate, controlled by a single corporate constellation. And that constellation is now explicitly integrating AI, social media, and satellite connectivity under the strategic direction of Elon Musk—a leader whose reputation (rightly or wrongly) is defined by speed, brinkmanship, and a willingness to treat norms and regulators as obstacles rather than constraints.

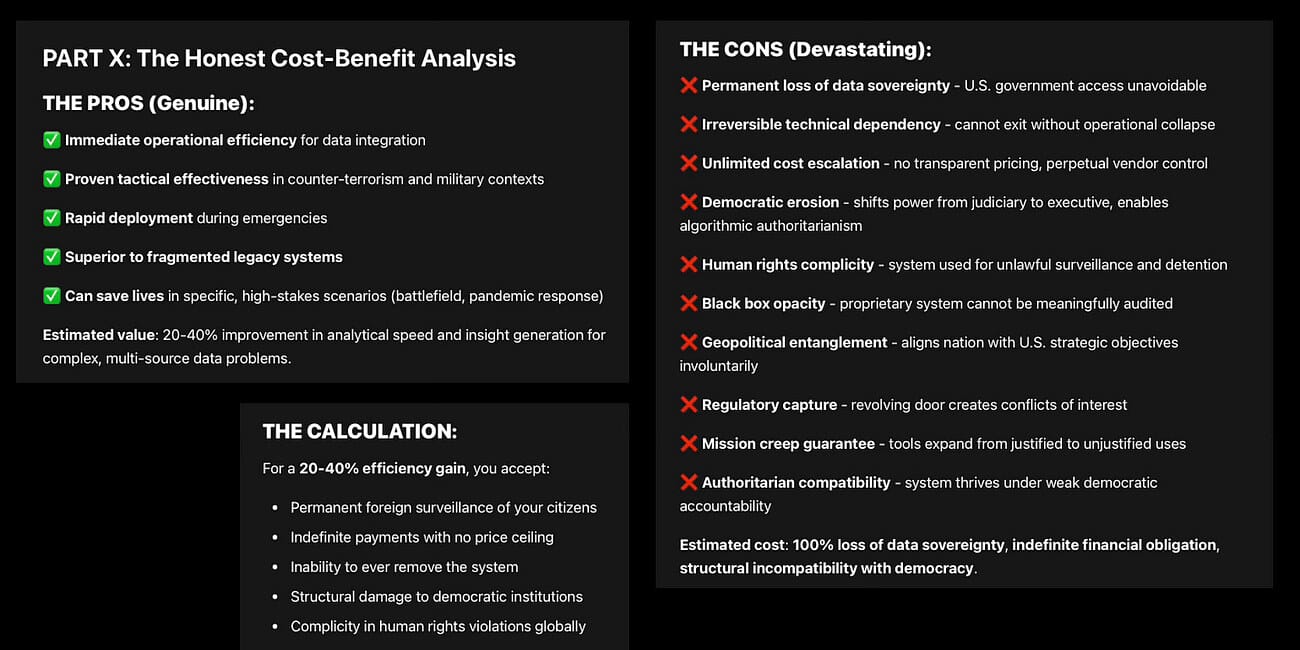

Below is a robust pros/cons assessment—first for citizens, then for governments—followed by “flip scenarios” (Ukraine/Russia and beyond) and concrete safeguards that can reduce the blast radius.

1) What’s actually new here—and why it matters

Default “data to train AI” is a power move, not a UX tweak

The key shift is not merely “AI training.” It’s the combination of:

Default enrollment in AI training (users must actively opt out) for a mass user base (reported as millions globally).

Potential sharing with third parties for AI training, including for those third parties’ own “independent purposes” (as described in the article).

Vague categories like “communication information” and “inferences,” which are precisely the categories that become dangerous when paired with modern model extraction, prompt injection, and data-leakage risks.

Even if Starlink says it won’t share ‘internet history’ with AI models (as quoted in the article), metadata and ‘where/when/how’ usage patterns can still be identifying, sensitive, and operationally exploitable, especially at national scale.

The consolidation vector: Starlink ↔ AI ↔ X.

Reporting indicates a formal consolidation between SpaceX and xAI (and by extension Grok/X assets) via a major acquisition/merger structure.

This matters because it increases the plausibility—technical, commercial, and governance plausibility—of cross-domain data flows, shared infrastructure, shared incentives, and shared “policy posture” (especially around guardrails and regulatory friction).

2) Pros and cons for citizens worldwide

Pros for citizens (the legitimate upside)

Better service quality & support automation

AI can improve network operations, fraud detection, customer service, and diagnostics, especially in rural and disaster contexts where alternatives are limited.

Connectivity as resilience (disasters, censorship, conflict zones)

Satellite internet can keep civil society online when terrestrial networks fail or are shut down; this is the strongest pro-social argument for Starlink-like systems.

Potentially stronger security tooling (if implemented responsibly)

AI can help detect terminal compromise, anomalous traffic, credential stuffing, and supply-chain risks—if the incentives are defense-first rather than growth-first.

Cons for citizens (where it gets structurally ugly)

Consent theater: default opt-in at planetary scale

“You can opt out” is not the same as meaningful consent when:

Defaults drive behavior;

UX is asymmetric (easy to opt in, buried opt out);

Users depend on the service for safety or livelihood.

Sensitive inference is the real product

“Inferences” can include (without needing to read your encrypted messages):

Household routines;

Business activity patterns;

Social graph hints (who is online when);

Mobility proxies (even without precise GPS);

Interest/health/financial proxies derived from traffic timing and endpoints.

The danger is not only targeted ads; it’s profiling power.

Model leakage & “regurgitation” risks

The article flags the risk of models reproducing training data under prompt manipulation. That risk is not hypothetical; it’s a known class of failure in generative systems.

A single actor can reshape your speech environment

The “Musk stack” is not only connectivity and AI; it’s also distribution. If the same empire controls:

the pipe (Starlink),

the town square (X),

and the model layer (Grok),

then a user’s informational autonomy can be nudged from multiple angles at once. EU regulators have already penalized/pressured X under the European Commission’s Digital Services Act regime.

Low-guardrail AI increases harassment and sexual-abuse externalities

Grok has been under major scrutiny for enabling sexualized deepfakes and “undressing” uses, triggering investigations by regulators including the Information Commissioner’s Office and others.

When the same owner controls both the social platform and the model tool, the risk is scale + speed + weak friction.

The chilling effect

If people believe their connectivity provider may feed data into AI systems and share it outward, self-censorship rises—especially for journalists, activists, dissidents, and marginalized groups.

3) Pros and cons for governments worldwide

Pros for governments (why states will still buy or tolerate this)

Rapid connectivity for emergency response & critical infrastructure

Satellites can restore comms after earthquakes, hurricanes, war damage, or sabotage.

Military and public-safety utility

Secure-ish, resilient comms are a strategic advantage; that’s why Starlink terminals became so important in war zones.

Modernization narratives

Governments under pressure to “digitize” will be tempted by vertically integrated, ready-to-deploy systems.

Cons for governments (why this becomes a sovereignty trap)

Strategic dependency on a private actor

When connectivity is controlled by a single foreign company, the state’s resilience is contingent on:

that company’s business incentives,

that leader’s ideology,

and the geopolitical pressures placed on them.

Coercion leverage (“on/off as policy”)

Even credible threats to restrict service can become bargaining chips, especially in conflicts. Reporting over the years has described disputes about Starlink availability/coverage decisions in Ukraine involving Russian operations and sensitivities around escalation.

Separately, recent reporting also shows the opposite dynamic: Starlink implementing controls to restrict unauthorized Russian use in Ukraine via registration/whitelisting approaches, illustrating that centralized control cuts both ways.

Data governance and jurisdictional conflict

If a satellite ISP operating in your territory can route personal data into AI training pipelines (and potentially to third parties), your privacy regime becomes only as strong as your enforcement capacity. That invites:

regulatory conflict (GDPR-like regimes vs US-style sectoral rules),

trade retaliation narratives (“censorship” vs “rights protection”),

and long litigation arcs.

Conflict-of-interest and “state capture” concerns when Musk-aligned entities touch government data

This concern is sharpest around the Department of Government Efficiency (DOGE) and cross-agency data aggregation. Reporting from watchdog and policy organizations described DOGE-linked access patterns and concerns about consolidating sensitive citizen data across agencies, raising alarms about privacy law compliance and oversight.

Even if one strips away partisan framing, the structural issue remains: centralization + privileged access + weak transparency = irresistible abuse surface.

Information operations at scale

If an ecosystem can combine:

identity-level data,

large-scale distribution,

and generative persuasion tools,

then propaganda becomes cheaper, more targeted, and harder to attribute—domestically and abroad.

4) Musk’s “far-right tendencies” and why ideology matters operationally

This is not about labeling; it’s about predicting governance behavior under stress.

Multiple major outlets have documented Musk amplifying or endorsing far-right-adjacent narratives, including engagement with “replacement” themes and interventions in European politics (e.g., support signals toward Germany’s AfD, and broader “anti-woke” campaigning dynamics).

Whether one calls that “far-right” or “reactionary populist,” the practical governance implication is this:

Content moderation becomes ideological theater rather than risk management.

Regulatory compliance becomes a culture-war battlefield.

Safety externalities (deepfakes, harassment, extremist recruitment) are reframed as “free speech” collateral damage.

That posture is especially dangerous when paired with tools already implicated in deepfake sexual abuse controversies, which have attracted scrutiny by UK and European regulators.

5) The “story flip”: if Starlink is used for more notorious purposes

Here are plausible flip-scenarios that governments should treat as planning assumptions, not paranoid fantasies.

A) Starlink as the backbone of illicit logistics and transnational crime

Cartels, traffickers, illegal mining operations, sanctions evasion networks: satellite internet reduces reliance on local ISPs and weakens lawful interception regimes.

If terminals are portable and hard to police, enforcement becomes whack-a-mole.

B) Starlink as a censorship bypass… and a censorship instrument

Bypass: Authoritarians fear it because it can route around national filters.

Instrument: If the provider can selectively throttle, geofence, “whitelist,” or require registration, it can also become a tool of selective denial—especially when pressured by powerful states or by the provider’s own strategic calculations.

C) Starlink + AI as a global “pattern-of-life” engine

Even without payload content, large-scale network telemetry plus “inferences” can enable:

targeting (military or policing),

political repression,

journalist source discovery,

and commercial espionage—particularly if data-sharing boundaries are porous or poorly audited.


D) Ukraine/Russia as the template, not the exception

Ukraine showed why a private satellite network can be strategically decisive—and why its governance becomes geopolitically loaded. Reporting has described disputes about availability/coverage decisions during key moments and the wider escalation calculus around Crimea and Russian responses.

Even if interpretations differ among biographers, journalists, and officials, the pattern is the same: one person or company becomes an implicit actor in war policy.

6) What citizens can do (practical, non-theoretical)

Opt out (if available in your account UI)

Use Starlink’s “Privacy Preferences” to disable AI training; the exact steps are described in the article.

Assume metadata still matters

Even if browsing content is encrypted, destination/timing can still be revealing.

Use a reputable VPN thoughtfully

The article recommends using a VPN to reduce Starlink’s visibility into your traffic. This is directionally right, but note: a VPN shifts trust from Starlink to the VPN provider, so pick one with strong privacy posture and independent audits where possible.

Segmentation

If you’re a journalist/activist/NGO: separate devices and accounts for sensitive work; avoid mixing personal identity with operational comms.

7) What governments should do (if they don’t want “connectivity sovereignty” to be a joke)

Treat satellite ISPs as critical infrastructure

Require:

independent security audits,

breach disclosure duties,

lawful process transparency,

and resilience guarantees.

Mandate true consent for AI training

For regulated jurisdictions: push for opt-in consent (off by default) for AI training, especially for sensitive categories and for minors.

Hard limits on “third-party AI training” transfers

If personal data can be shared outward for “independent purposes,” regulators should demand:

named categories of recipients,

purpose limitation,

retention limits,

model-training exclusion lists,

and enforceable deletion/unlearning mechanisms where feasible.

Sovereign fallback options

No single vendor should be the only path. States should maintain:

multi-provider satellite agreements,

terrestrial redundancy,

and emergency roaming/interop protocols.

Conflict-of-interest firewalls

Where any Musk-aligned ecosystem touches state data systems (DOGE-style aggregation concerns), require:

strict access controls,

audit logs,

independent oversight,

and legal accountability under privacy law frameworks.

AI safety enforcement with teeth

The Grok deepfake controversies show what “move fast” looks like when the externality is sexual abuse imagery and harassment at scale. Regulators should demand pre-release risk assessments and enforce penalties for negligent deployment—precisely what current probes are circling.

Conclusion: the core trade

Starlink’s AI-training policy shift is not just a privacy tweak. It’s a symptom of a deeper structural reality: the merger of connectivity, AI capability, and attention control into a single privately governed empire—one that increasingly behaves like a geopolitical actor.

The upside is real: resilient internet, disaster continuity, and operational advantages for states and citizens. But the downside is the kind of asymmetric power that democracies usually try to prevent: the ability for one corporate leader—especially one with demonstrated ideological combativeness and a history of treating guardrails as negotiable—to shape what people can access, what they can safely say, and what patterns can be inferred about their lives.
