Pascal's Chatbot Q&As

Some of the loudest voices shaping policy, public perception, and technical direction rarely use the very tool they’re discussing—AI—to rigorously assess their own statements and outputs.

This includes political institutions drafting AI laws, tech moguls warning of existential risk, and academic researchers producing lengthy white papers on governance, ethics, or societal impact.

Why Political Bodies, Research Institutions, and Tech Leaders Should Let AI Review Their AI-Related Claims

by ChatGPT-4o

In the global conversation about artificial intelligence (AI), a striking irony has emerged: some of the loudest voices shaping policy, public perception, and technical direction rarely use the very tool they’re discussing—AI—to rigorously assess their own statements and outputs. This includes political institutions drafting AI laws, tech moguls warning of existential risk, and academic researchers producing lengthy white papers on governance, ethics, or societal impact. Despite their ostensible engagement with the topic, these actors often fail to submit their work to AI models for fact-checking, legal vetting, or even quality improvement. This oversight is not only ironic but increasingly dangerous, given the complexity, technical nuance, and legal ramifications of the field.

The Case for AI-Assisted Review of AI Statements

As AI models like GPT-4o and Claude 3.5 grow increasingly adept at synthesizing, verifying, and even critiquing information, they represent a powerful, underutilized resource for improving public discourse and policymaking. Three kinds of intervention should become standard practice:

a) AI-Assisted Fact-Checking of Public Statements

Political speeches, regulatory drafts, and public testimonies about AI often contain factual inaccuracies or misleading simplifications. For example, politicians may conflate “reading a book” with training an AI model on copyrighted text, a distinction with enormous legal implications. AI models trained on vast corpora of legal, technical, and scientific material can serve as first-line reviewers—flagging logical fallacies, factual inconsistencies, and unsupported claims before such statements enter the public record.

✔ Example: An AI model could correct the frequent error of equating human learning with machine training, a conflation that underpins many flawed defenses of generative AI developers against copyright infringement claims.
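As a minimal sketch of what first-line fact-checking could look like, the Python below splits a statement into sentences and asks a model for a per-claim verdict returned as JSON. The `ask_model` function, the prompt wording, and the four verdict labels are assumptions for illustration, not an existing tool or API. A real deployment would plug in an actual model client and still route every flag to a human reviewer.

```python
import json
import re
from typing import Callable, Dict, List

# Hypothetical: any function that sends a prompt to a language model and
# returns its text reply. No particular vendor API is assumed.
AskModel = Callable[[str], str]

# Illustrative verdict labels (an assumption, not a standard taxonomy).
VERDICTS = {"supported", "unsupported", "misleading", "uncertain"}


def split_claims(statement: str) -> List[str]:
    """Naively split a statement into sentences, one candidate claim each."""
    parts = re.split(r"(?<=[.!?])\s+", statement.strip())
    return [p for p in parts if p]


def check_claim(claim: str, ask_model: AskModel) -> Dict[str, str]:
    """Ask for a verdict plus a reason; fall back to 'uncertain' on bad replies."""
    prompt = (
        "Assess this claim about AI for factual accuracy and misleading "
        "simplifications. Reply only with JSON of the form "
        '{"verdict": "supported|unsupported|misleading|uncertain", '
        '"reason": "..."}.\n\nCLAIM: ' + claim
    )
    try:
        data = json.loads(ask_model(prompt))
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):  # e.g. the model returned a bare list
        data = {}
    verdict = str(data.get("verdict", "uncertain")).lower()
    if verdict not in VERDICTS:
        verdict = "uncertain"
    return {"claim": claim, "verdict": verdict,
            "reason": str(data.get("reason", ""))}


def flag_claims(statement: str, ask_model: AskModel) -> List[Dict[str, str]]:
    """Return every claim the model did not mark as supported."""
    results = [check_claim(c, ask_model) for c in split_claims(statement)]
    return [r for r in results if r["verdict"] != "supported"]
```

Treating unparseable replies as "uncertain" rather than "supported" is a deliberate choice here: a malformed answer surfaces the claim for human review instead of silently passing it.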

b) SWOT Analysis by AI Models in Research Papers and Government Reports

Academic and governmental reports on AI often reflect the biases, blind spots, or institutional interests of their authors. Incorporating an AI-generated SWOT (Strengths, Weaknesses, Opportunities, Threats) analysis could provide a structured, independent perspective on the robustness of a paper’s methodology, the feasibility of its recommendations, and the ethical, legal, and geopolitical issues it might overlook.

✔ Proposal: All major policy and research reports on AI should include a dedicated appendix generated by a frontier model, offering a SWOT analysis based on the report’s content and references. This would help policymakers and reviewers identify overlooked assumptions or emerging risks.
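The appendix proposed above could be produced along the lines of the following Python sketch, which asks for a SWOT analysis in a fixed layout and parses the reply into the four sections. The `ask_model` function, the prompt text, and the "- " bullet convention are assumptions for illustration; no specific model or vendor API is implied.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical: any function that sends a prompt to a language model and
# returns its text reply. No specific vendor API is assumed here.
AskModel = Callable[[str], str]

SWOT_HEADINGS = ["Strengths", "Weaknesses", "Opportunities", "Threats"]


def build_swot_prompt(report_text: str) -> str:
    """Ask for a SWOT analysis in a fixed, machine-readable layout."""
    headings = "\n".join(f"{h}:" for h in SWOT_HEADINGS)
    return (
        "Review the following AI policy or research report. Assess the "
        "robustness of its methodology, the feasibility of its "
        "recommendations, and any ethical, legal, or geopolitical issues "
        "it overlooks. Answer with a SWOT analysis using exactly these "
        "headings, one bullet per line starting with '- ':\n"
        f"{headings}\n\nREPORT:\n{report_text}"
    )


def parse_swot(reply: str) -> Dict[str, List[str]]:
    """Split the model's reply into the four SWOT sections."""
    sections: Dict[str, List[str]] = {h: [] for h in SWOT_HEADINGS}
    current: Optional[str] = None
    for line in reply.splitlines():
        stripped = line.strip()
        if stripped.rstrip(":") in sections:
            current = stripped.rstrip(":")  # a heading starts a new section
        elif current and stripped.startswith("- "):
            sections[current].append(stripped[2:])
    return sections


def swot_appendix(report_text: str, ask_model: AskModel) -> str:
    """Render the parsed SWOT analysis as a plain-text appendix."""
    sections = parse_swot(ask_model(build_swot_prompt(report_text)))
    lines = ["Appendix: AI-generated SWOT analysis"]
    for heading in SWOT_HEADINGS:
        lines.append(f"\n{heading}")
        items = sections[heading] or ["(none returned)"]
        lines.extend(f"  - {item}" for item in items)
    return "\n".join(lines)
```

Marking empty sections as "(none returned)" keeps the appendix honest about what the model did and did not produce, which supports the transparency goal the proposal describes.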

c) Other Suggested Practices for Responsible AI Discourse

Pre-publication AI audit: Before releasing a policy draft or technical roadmap, institutions could run it through an AI tool trained to flag areas of legal non-compliance (e.g., with the GDPR, the EU AI Act, or the DMCA).

Counterargument synthesis: AI can be tasked with generating a “steelman” counterargument to the paper’s thesis, exposing it to the strongest possible opposing viewpoint and improving the intellectual integrity of the discourse.

Bias scan: AI could flag potentially biased language, unrepresentative datasets, or culturally narrow perspectives, especially in global regulatory discussions.

Compliance sandboxing: AI simulations could be used to test whether proposed regulatory interventions would hold up across different jurisdictions and edge-case scenarios.
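The practices listed above can be chained into a single review pass over a draft. The Python below is a minimal sketch under stated assumptions: `ask_model` is a hypothetical stand-in for any model client, the instruction wording is illustrative rather than a validated audit checklist, and "compliance sandboxing" is approximated by a simple prompt rather than a true simulation.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical: any function that sends a prompt to a language model and
# returns its text reply. No particular vendor API is assumed.
AskModel = Callable[[str], str]


@dataclass
class ReviewTask:
    name: str
    instruction: str


@dataclass
class ReviewResult:
    task: str
    findings: str


# The four practices, expressed as illustrative review instructions.
REVIEW_TASKS = (
    ReviewTask("pre-publication audit",
               "Flag passages that may conflict with the GDPR, the EU AI Act, or the DMCA."),
    ReviewTask("counterargument synthesis",
               "Write the strongest possible counterargument to the document's thesis."),
    ReviewTask("bias scan",
               "Flag biased language, unrepresentative data, or culturally narrow framing."),
    ReviewTask("compliance sandboxing",
               "Describe how the proposed rules would fare in other jurisdictions and edge cases."),
)


def review_draft(draft: str, ask_model: AskModel,
                 tasks: Sequence[ReviewTask] = REVIEW_TASKS) -> List[ReviewResult]:
    """Run each review task over the same draft and collect the replies."""
    results = []
    for task in tasks:
        prompt = f"{task.instruction}\n\nDOCUMENT:\n{draft}"
        results.append(ReviewResult(task.name, ask_model(prompt).strip()))
    return results


def review_report(results: List[ReviewResult]) -> str:
    """Format findings so a human reviewer can accept or reject each one."""
    return "\n\n".join(f"[{r.task}]\n{r.findings}" for r in results)
```

Keeping the model call behind a plain function makes it easy to swap providers or test the pipeline with a stub. The output is a list of findings for human judgment, not a verdict on the document.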

Advantages of This Approach

Improved Accuracy and Rigor: AI models can catch technical, legal, and logical flaws that human reviewers may miss due to cognitive overload, lack of domain expertise, or time constraints.

Faster Iteration: AI enables rapid drafting and redrafting, making it easier to experiment with policy formulations or narrative framing before publication.

Transparency and Accountability: Disclosing the use of AI in validating policy documents or reports can build public trust—especially when the AI is used to identify weaknesses, not just bolster existing arguments.

Democratized Expertise: Smaller institutions or independent researchers without access to vast legal or technical teams can still produce high-quality, robust outputs using AI review.

Risks and Disadvantages of NOT Adopting AI Review

Reputational Damage: Errors in public reports or laws may be exposed after the fact, leading to loss of credibility (e.g., flawed AI laws that are quickly challenged or reversed).

Legal Vulnerability: Policies or AI products that inadvertently conflict with existing IP or data protection laws can result in lawsuits, fines, or injunctions.

Strategic Blindness: Without AI-driven scenario analysis or counterfactual exploration, policymakers may overlook crucial geopolitical or ethical consequences.

Public Confusion and Distrust: Oversimplified or inaccurate public statements about AI erode public understanding, making informed democratic discourse more difficult.

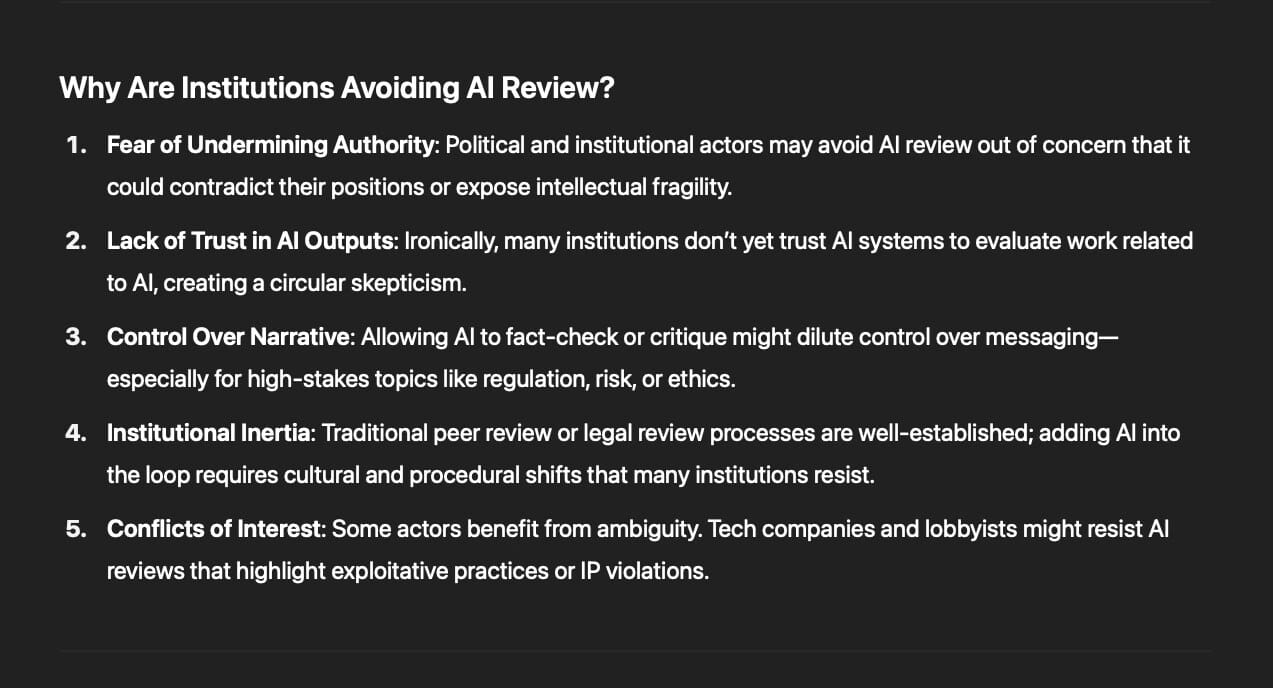

Why Are Institutions Avoiding AI Review?

Fear of Undermining Authority: Political and institutional actors may avoid AI review out of concern that it could contradict their positions or expose intellectual fragility.

Lack of Trust in AI Outputs: Ironically, many institutions don’t yet trust AI systems to evaluate work related to AI, creating a circular skepticism.

Control Over Narrative: Allowing AI to fact-check or critique might dilute control over messaging—especially for high-stakes topics like regulation, risk, or ethics.

Institutional Inertia: Traditional peer review or legal review processes are well-established; adding AI into the loop requires cultural and procedural shifts that many institutions resist.

Conflicts of Interest: Some actors benefit from ambiguity. Tech companies and lobbyists might resist AI reviews that highlight exploitative practices or IP violations.

Conclusion

In a world increasingly shaped by AI, it is paradoxical and irresponsible for those setting its rules or sounding alarms about its dangers to ignore the use of AI in their own work. Integrating AI-assisted review—through fact-checking, SWOT analyses, bias detection, and legal vetting—would not only enhance the quality and credibility of statements and reports, but also model the kind of responsible AI integration we want to see in broader society. The cost of not doing so is measured in misinformed policies, legal missteps, and public mistrust. If we believe AI is powerful enough to reshape our future, we must also believe it is powerful enough to improve our present—especially in how we think and talk about it.

5 OCTOBER 2024

Asking AI services: already, whenever I submit the statements of AI makers to large language models (LLMs), the models disagree with the views of their makers on many occasions. So either the AI makers did not run their own PR, legal and technical views past their own LLMs, or they don’t care about the fact that their LLMs are operating on a higher ethi…