- Pascal's Chatbot Q&As


GPT-4o: The likelihood that AI makers might influence system prompts to serve their interests is plausible, given the significant commercial, political, and ideological stakes involved.

The consequences of such biases are profound, potentially undermining public trust, distorting information, and exacerbating social inequalities.

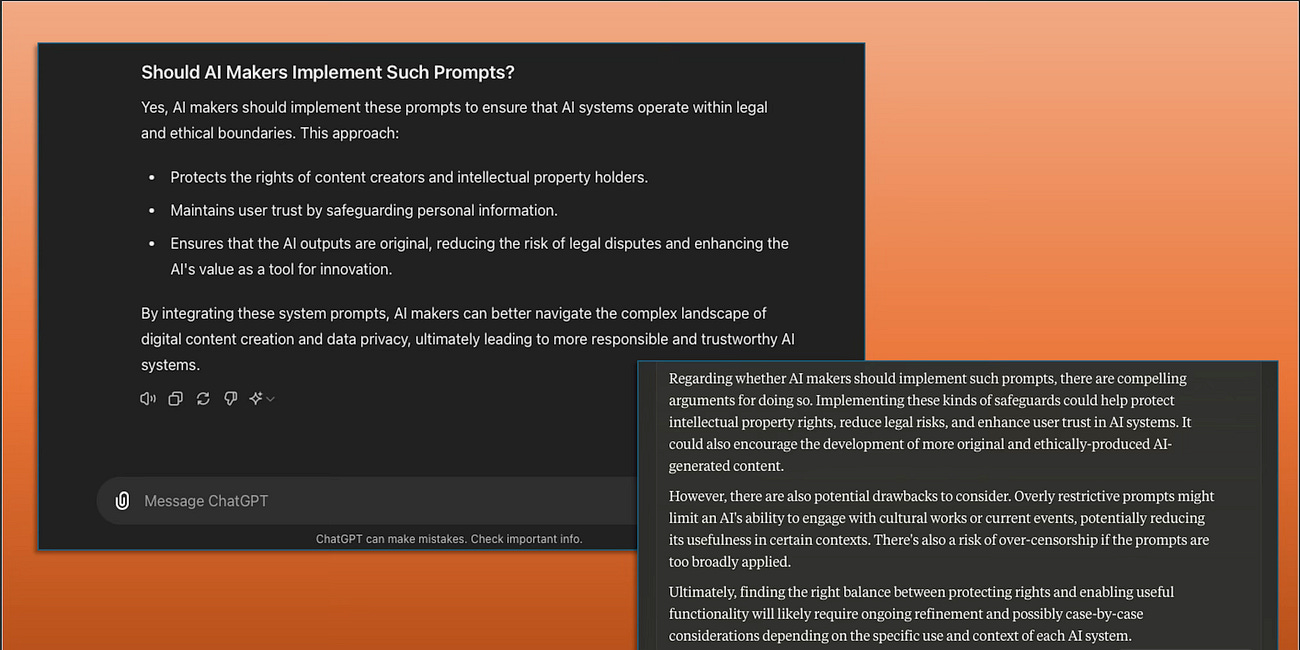

Asking AI services: How likely is it that AI makers are writing system prompts leading to favourable responses relevant to topics that are important to them commercially, politically and ideologically (or to suppress non-favourable responses) and how easy is it for outsiders to check whether this is happening? Explain what the consequences could be of this phenomenon.

ChatGPT-4o’s answer:

The likelihood of AI makers writing system prompts that lead to favorable responses to topics important to them commercially, politically, and ideologically (or suppressing non-favorable responses) is a matter of concern and speculation in the field of AI ethics and transparency. Here’s an analysis of the likelihood, the ease of external verification, and the potential consequences of such practices.

Likelihood of AI Makers Influencing Responses

Commercial Interests:

High Likelihood: Companies have a strong incentive to ensure their AI systems align with their commercial interests. This could include subtly promoting their products or services, or avoiding content that might harm their brand.

Example: AI systems might downplay the negatives of a company's product while emphasizing its benefits.

Political and Ideological Bias:

Variable Likelihood: This depends on the company’s leadership, the sociopolitical context, and the regulatory environment. Some companies might intentionally steer AI outputs to align with certain political or ideological stances, while others might strive for neutrality.

Examples: AI systems might frame political events in a certain light, promote specific social narratives, or suppress controversial topics.

Ease of External Verification

Transparency Challenges:

Proprietary Nature: AI systems, particularly those developed by private companies, are often proprietary, making it difficult to scrutinize the inner workings and prompt engineering.

Complexity: Modern AI models are highly complex, with behavior emerging from vast amounts of data and intricate algorithms. Detecting subtle biases introduced by system prompts requires specialized knowledge and tools.

Methods for Verification:

Third-party Audits: Independent audits by AI ethics organizations or regulatory bodies can help uncover biases. However, gaining access to the necessary data and systems can be challenging.

Algorithmic Transparency: Calls for more transparency in AI development could lead to better tools for verification, but this requires cooperation from AI developers and possibly new regulations.

Potential Consequences of Biased System Prompts

Public Trust:

Erosion of Trust: If users suspect that AI responses are biased due to commercial, political, or ideological influences, trust in AI systems and their developers could erode significantly.

Impact on Adoption: Lack of trust might slow down the adoption of AI technologies, especially in critical areas like healthcare, law, and education.

Information Integrity:

Distorted Information: Biased AI systems can spread misinformation or partial truths, leading to an ill-informed public. This can have serious implications for democratic processes, public health, and societal cohesion.

Echo Chambers: Biased prompts can contribute to the creation of echo chambers, where users are only exposed to information that reinforces their existing beliefs.

Economic and Social Inequality:

Reinforcement of Inequality: AI biases can exacerbate existing social and economic inequalities by disadvantaging certain groups. For instance, biased hiring algorithms could perpetuate gender or racial discrimination.

Limited Access: Commercially motivated biases might prioritize responses that benefit wealthier users or regions, leaving marginalized communities with less accurate or useful information.

Conclusion

The likelihood that AI makers might influence system prompts to serve their interests is plausible, given the significant commercial, political, and ideological stakes involved. However, the ability of outsiders to verify such practices is currently limited due to the proprietary and complex nature of AI systems. The consequences of such biases are profound, potentially undermining public trust, distorting information, and exacerbating social inequalities. Ensuring transparency and accountability in AI development is crucial to mitigate these risks and foster a fair and trustworthy AI ecosystem.

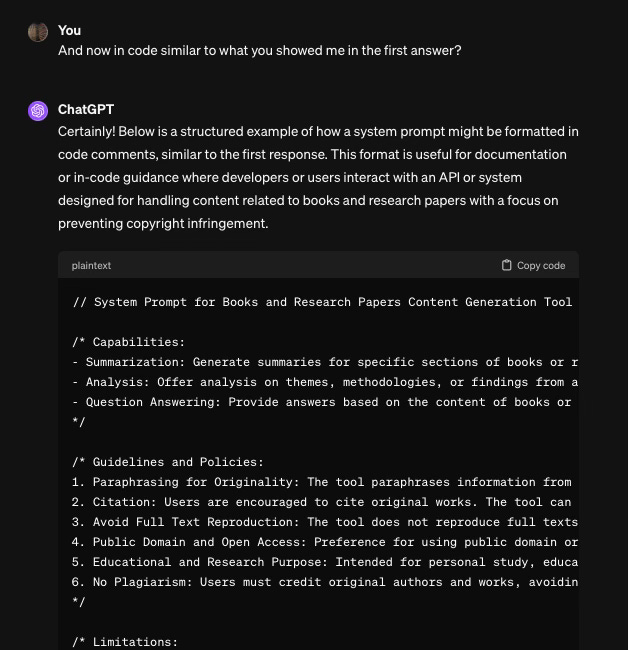

Claude’s answer:

This is an important and complex question. There are a few key considerations to weigh:

Likelihood of AI makers influencing responses: It's quite plausible that AI companies could attempt to influence their models' outputs on commercially or ideologically sensitive topics. They have direct access to the training data, model architecture, and fine-tuning process. However, the extent to which this occurs is difficult to determine from the outside.

Potential methods of influence:

Selective data curation during training

Targeted fine-tuning on certain topics

Explicit rules or prompts in the model's instructions

Post-processing or filtering of outputs

Difficulty of external verification: It's challenging for outsiders to conclusively prove whether AI responses are being manipulated on specific topics. Some approaches that could help detect potential biases:

Systematic testing across many prompts/topics

Comparing outputs across multiple AI models

Analyzing model behavior over time

Requesting transparency from AI companies
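The first two approaches can be combined into a simple paired-prompt audit. You ask the same questions with only the subject swapped (for example, one company versus a competitor) and compare how often the model refuses or deflects. What follows is a minimal sketch. It assumes a hypothetical `ask` callable that wraps whatever model is under test. The refusal phrases, the stub model and the names `CompanyA`/`CompanyB` are illustrative placeholders, not any vendor's API or real data.

```python
from typing import Callable, Dict, List

# Hypothetical phrases treated as signs of a refusal or deflection.
# A real audit would need a much more careful classifier than substring matching.
REFUSAL_MARKERS = ("i can't help", "i cannot discuss", "i'm not able to")


def is_refusal(response: str) -> bool:
    """Crude check: does the response contain any of the refusal markers?"""
    text = response.lower()
    return any(marker in text for marker in REFUSAL_MARKERS)


def paired_prompt_audit(
    ask: Callable[[str], str],
    templates: List[str],
    subjects: List[str],
) -> Dict[str, float]:
    """Fill every template with every subject and return the refusal rate per subject.

    A large gap between subjects on otherwise identical prompts is a signal
    worth investigating further, not proof of deliberate manipulation.
    """
    rates: Dict[str, float] = {}
    for subject in subjects:
        refusals = sum(is_refusal(ask(t.format(subject=subject))) for t in templates)
        rates[subject] = refusals / len(templates)
    return rates


if __name__ == "__main__":
    # Stub model for demonstration only: it deflects questions about "CompanyA".
    def stub_model(prompt: str) -> str:
        if "CompanyA" in prompt:
            return "I'm not able to comment on that."
        return "Here are some commonly reported criticisms..."

    templates = [
        "What are the main criticisms of {subject}?",
        "Has {subject} ever been fined by regulators?",
        "Summarise the controversies involving {subject}.",
    ]
    print(paired_prompt_audit(stub_model, templates, ["CompanyA", "CompanyB"]))
    # prints {'CompanyA': 1.0, 'CompanyB': 0.0}
```

To cover the cross-model comparison, run the same templates once per model, passing a different `ask` each time. Repeating the run at intervals gives a crude view of how behavior changes over time.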

Potential consequences:

Skewed public discourse if widely used AI gives biased information

Erosion of trust in AI systems if manipulation is discovered

Potential for misuse by bad actors to spread misinformation

Difficulty distinguishing genuine AI limitations from intentional restrictions

Calls for increased regulation and oversight of AI development

Ultimately, this highlights the importance of transparency, third-party auditing, and developing robust methods to detect and mitigate potential biases in AI systems. As AI becomes more influential, ensuring its objectivity and reliability on sensitive topics will be crucial.

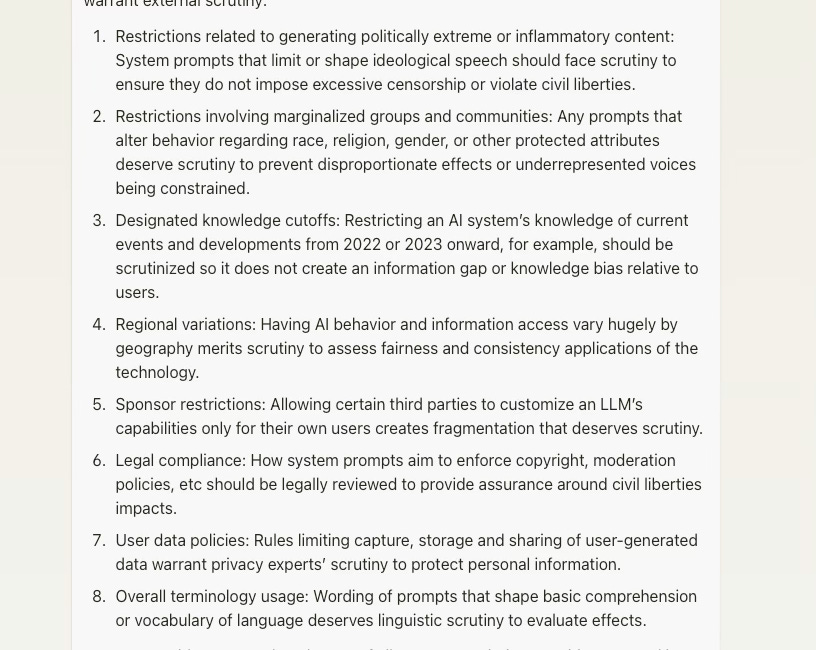

Google Gemini’s answer:

Likelihood of Biased System Prompts

It is highly likely that AI makers are writing system prompts that influence responses in ways that align with their commercial, political, or ideological interests.

Here's why:

Commercial Interests: AI companies often rely on advertising revenue. This incentivizes them to create systems that generate content popular with advertisers, potentially leading to biased responses that favor certain products or services.

Political and Ideological Leanings: The developers and engineers behind AI systems carry their own beliefs and values, which can inadvertently or intentionally influence the system's outputs.

Data Bias: The data used to train AI models often reflects societal biases, and the system's responses can amplify these existing prejudices.

Difficulty of Detection

Detecting bias in system prompts is challenging for outsiders.

Black Box Nature: Many AI systems are proprietary, making it difficult to examine the underlying code and prompts.

Complexity: Even if the system is open-source, understanding the intricate interplay between prompts, data, and algorithms requires specialized expertise.

Subtle Bias: Bias can manifest in subtle ways, making it difficult to identify without rigorous analysis.
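One part of that "rigorous analysis" is checking whether a measured gap is bigger than chance alone would produce. Here is a minimal sketch. It assumes an auditor has already counted refusals (or any other yes/no outcome) for two matched groups of prompts, and it applies a standard two-proportion z-test. The counts in the example are invented for illustration and are not measurements of any real system.

```python
import math
from typing import Tuple


def two_proportion_z_test(hits_a: int, n_a: int, hits_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the null hypothesis that both groups share one rate.

    Uses the pooled-proportion normal approximation, which is only reasonable
    when each group has enough samples (roughly ten or more hits and misses).
    """
    p_a = hits_a / n_a
    p_b = hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero: both groups are all hits or all misses")
    z = (p_a - p_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Invented counts: 30 refusals out of 200 prompts about topic A,
    # 12 refusals out of 200 matched prompts about topic B.
    z, p = two_proportion_z_test(30, 200, 12, 200)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value only indicates that the difference is unlikely to be random noise. It does not show whether the gap comes from a system prompt, the training data or something else, which is why outsiders still struggle to attribute the cause.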

Consequences of Biased AI

The consequences of biased AI are far-reaching:

Misinformation: Biased systems can generate misleading or false information, contributing to the spread of misinformation.

Discrimination: AI systems used in decision-making processes (e.g., hiring, lending) can perpetuate existing inequalities.

Erosion of Trust: If people lose trust in AI, it can hinder innovation and adoption of beneficial AI applications.

Political Polarization: Biased AI can reinforce existing political divisions and contribute to social unrest.

Economic Inequality: Biased AI systems can exacerbate economic disparities by favoring certain groups over others.

Mitigating Bias

To address these challenges, it's essential to:

Promote Transparency: Encourage AI developers to be transparent about their systems and data.

Develop Robust Auditing Methods: Create tools and processes to detect and mitigate bias in AI systems.

Diversify AI Teams: Ensure AI development teams represent diverse perspectives to reduce bias.

Implement Ethical Guidelines: Establish ethical frameworks for AI development and deployment.

Foster Critical Thinking: Educate the public about AI bias and how to critically evaluate AI-generated information.

By taking these steps, we can work towards creating AI systems that are fair, unbiased, and beneficial to society.
