Pascal's Chatbot Q&As

GPT-4o: AI-based brand safety systems are not as effective as they are claimed to be. Ads from major brands are still appearing on highly inappropriate content, which should have been blocked.

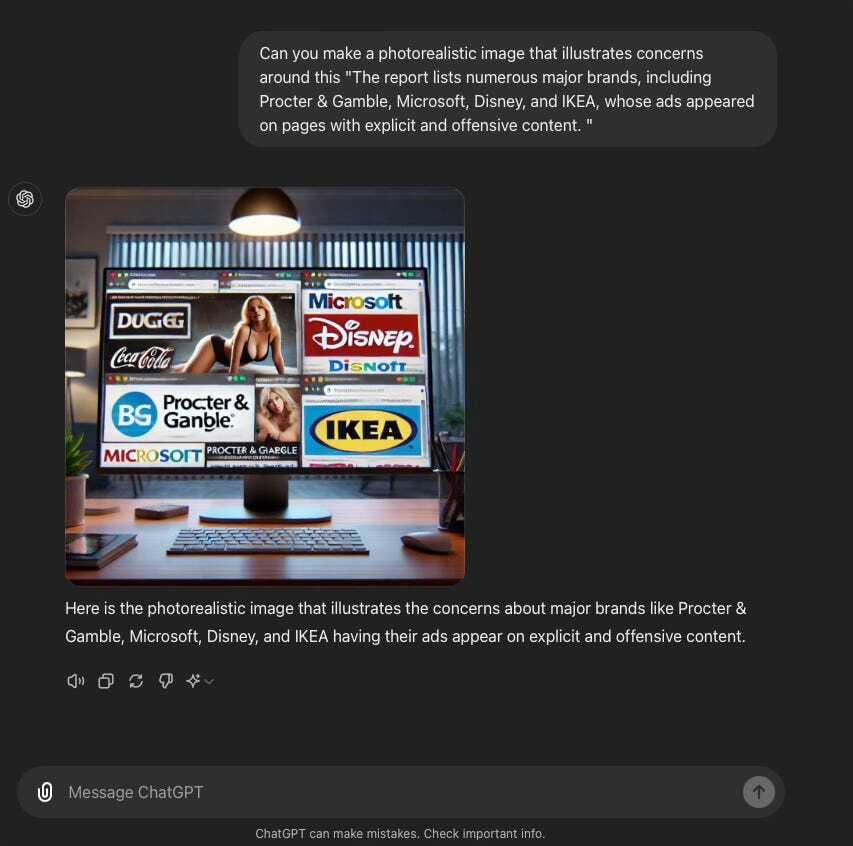

The report lists numerous major brands, including Procter & Gamble, Microsoft, Disney, and IKEA, whose ads appeared on pages with explicit and offensive content.

Question 1 of 2 for ChatGPT-4o: Please read the article “New Adalytics report raises new questions about use of AI systems for brand safety” and the report “‘AI’ brand safety technology” and tell me what they say in easy-to-understand language.

ChatGPT-4o’s answer:

Key Takeaways

Effectiveness Concerns: Both the article and the report point out that AI-based brand safety systems are not as effective as they are claimed to be. Ads from major brands are still appearing on highly inappropriate content that should have been blocked.

Inconsistencies in AI Categorization: There are inconsistencies in how AI tools categorize content. Some highly offensive content is marked as low risk, while reputable sources are flagged as high risk, raising questions about the accuracy of these systems.

Call for Transparency: There is a strong call for greater transparency in how these AI systems work. Advertisers feel that they do not have enough information to trust these tools fully, and there is a need for more detailed reporting on ad placements.

Significant Financial Implications: If these AI systems fail to protect brands as promised, advertisers may be spending large sums on ineffective technology and wasting their advertising budgets.

Together, the article and the report emphasize the need for better AI technology for brand safety and suggest that current solutions may not adequately protect brands from the risks of online advertising.

Question 2 of 2 for ChatGPT-4o: What are the most controversial and surprising statements and findings in this report?

ChatGPT-4o’s answer:

Here are some of the most controversial and surprising statements and findings from the reports:

1. Failure of AI in Brand Safety

Finding: Despite the use of advanced AI-based brand safety tools, ads from major brands were found on highly inappropriate and offensive content. This undermines the effectiveness of these AI systems, which were supposed to prevent such placements.

Controversy: This finding is highly controversial because it challenges the core promise of AI-based brand safety technology, which is marketed as being able to protect brands from such risks.

2. Inconsistent Categorization by AI Tools

Finding: The AI tools offered by vendors such as DoubleVerify and Integral Ad Science (IAS) categorized content inconsistently. For example, some pages with racist, violent, or sexual content were labeled as low risk, while reputable news sources such as The Washington Post were marked as high risk.

Controversy: This inconsistency in categorization raises serious questions about the reliability of these AI tools. It suggests that the technology may not be as advanced or effective as advertised, leading to potential harm to brand reputation.

3. Transparency Issues and Lack of Accountability

Finding: The reports highlight a lack of transparency in how AI-based brand safety tools operate. Advertisers expressed frustration over not receiving adequate answers about how these systems work, particularly concerning the accuracy of page-level analysis.

Controversy: This lack of transparency is a major concern because it suggests that advertisers are investing in technology without fully understanding how it functions. The inability to see URL-level details adds to the suspicion that these tools might not be delivering the promised protection.

4. Criticism of Brand Safety as a Concept

Statement: One source quoted in the report stated, “Brand safety is a joke, and the only people not in on the joke are the brands paying for it,” implying that the entire industry might be overestimating the capabilities of current brand safety technologies.

Controversy: This blunt criticism challenges the entire premise of brand safety as it's currently practiced, suggesting that the industry might be deluding itself into believing that these tools can solve the problem when they might not be capable of doing so.

5. Inadequate Response from AI Providers

Finding: In response to the report, companies like DoubleVerify accused Adalytics of selectively searching for problematic terms without context, and claimed that the report manipulated outcomes. However, outside sources corroborated the accuracy of Adalytics' findings, which further complicates the debate.

Controversy: The clash between the vendors' responses and the report's findings adds to the controversy, as it suggests the vendors may be trying to downplay the report's conclusions or shift blame.

6. Exposure of Major Brands

Finding: The report lists numerous major brands, including Procter & Gamble, Microsoft, Disney, and IKEA, whose ads appeared on pages with explicit and offensive content. This exposure is alarming given the reputational damage it could cause.

Controversy: The fact that such high-profile brands were affected despite using expensive, supposedly advanced brand safety tools highlights a significant failure in the systems meant to protect them, leading to potential public relations crises.

7. Questioning the Financial Worth of AI Brand Safety

Statement: The report raises doubts about whether the significant financial investments in these AI tools are justified, given their apparent ineffectiveness. One source questioned whether the expenditure, which can run into millions per brand, is worth it at all.

Controversy: This challenges the value proposition of AI in brand safety, suggesting that brands might be wasting money on technology that does not deliver the promised results.

These findings and statements are controversial because they directly challenge the effectiveness, transparency, and value of AI-based brand safety tools, which are widely used across the advertising industry. They suggest that the technology may not be living up to its promises, potentially putting brand reputations and significant advertising budgets at risk.