- Pascal's Chatbot Q&As

GPT-4o: Using FLOP as a core threshold oversimplifies the multifaceted nature of AI capability and societal impact. Commercial actors could use open-source exemptions to circumvent oversight.

Non-EU jurisdictions may resist these thresholds, creating compliance and enforcement disparities for global providers.

The EU Commission’s Guidelines on General-Purpose AI Models under the AI Act – Implications, Controversies, and Strategic Recommendations

by ChatGPT-4o

The European Commission’s July 2025 “Guidelines on the scope of the obligations for general-purpose AI models” represent a landmark operationalization of the EU AI Act (Regulation (EU) 2024/1689). This annexed communication offers detailed guidance on how developers, modifiers, and deployers of general-purpose AI models (GPAIMs) must navigate compliance obligations, particularly in terms of transparency, risk classification, copyright, and systemic risk mitigation.

Most Surprising, Controversial, and Valuable Statements

Surprising

Training Compute as a Proxy for Generality: The Commission adopts an indicative training-compute threshold of 10²³ FLOP for identifying GPAIMs and 10²⁵ FLOP for presuming systemic risk. This reliance on a raw compute metric rather than direct performance benchmarks was unexpected, especially given the limitations of FLOP as a proxy for intelligence or impact.

Exemptions for Open-Source Models: Open-source GPAIMs are exempt from transparency and documentation obligations unless they pose systemic risks. This carves out a significant loophole that large actors could exploit by releasing partially open models.

Monetization Restrictions: The notion that even indirect monetization (e.g., via ads or exclusive hosting) disqualifies a model from open-source exemptions is a stringent and surprisingly holistic interpretation of “non-commercial.”
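To make the compute thresholds described above concrete, here is a minimal Python sketch. The two threshold values come from the text; everything else is an assumption for illustration. The function names are hypothetical, the strict "greater than" comparison at the boundary is a guess, and the 6 × parameters × tokens estimate is a common rule of thumb for dense transformer training, not something stated in this article. The sketch also ignores the non-compute criteria that affect classification.

```python
# Threshold values as cited in the article (training compute in FLOP).
GPAI_INDICATIVE_FLOP = 1e23   # indicative threshold for a general-purpose AI model
SYSTEMIC_RISK_FLOP = 1e25     # threshold for presumed systemic risk


def estimate_training_flop(n_params: float, n_tokens: float) -> float:
    """Rough estimate of training compute for a dense transformer.

    Assumption: about 6 FLOP per parameter per training token, a widely
    used heuristic. Real accounting may differ substantially.
    """
    return 6.0 * n_params * n_tokens


def classify_by_compute(training_flop: float) -> str:
    """Map training compute to the compute-based tier only.

    Assumption: a model must strictly exceed a threshold to fall into
    the higher tier.
    """
    if training_flop > SYSTEMIC_RISK_FLOP:
        return "presumed systemic-risk GPAIM"
    if training_flop > GPAI_INDICATIVE_FLOP:
        return "indicatively a GPAIM"
    return "below the indicative GPAIM threshold"


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to land in each tier.
    examples = [(7e9, 2e12), (70e9, 15e12), (1e12, 1e13)]
    for n_params, n_tokens in examples:
        flop = estimate_training_flop(n_params, n_tokens)
        print(f"{n_params:.0e} params, {n_tokens:.0e} tokens: "
              f"{flop:.1e} FLOP, {classify_by_compute(flop)}")
```

The sketch also illustrates the criticism raised above: the tier depends only on a single number, so two models with very different capabilities can land in the same bucket.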

Controversial

Systemic Risk Threshold and Classification: Models exceeding 10²⁵ FLOP are automatically presumed to be GPAIMs with systemic risk (a category distinct from the AI Act’s “high-risk” AI systems), so systemic-risk obligations apply without any prior incident. This may be seen as punitive and may discourage innovation, especially among research organizations with advanced compute resources.

Commission's Enforcement Monopoly: Only the European Commission (via the AI Office) has enforcement powers over GPAIM providers. This centralization limits the role of national regulators and raises concerns over bureaucratic bottlenecks and capacity.

Restriction of Downstream Modifiers: Modifiers of GPAIMs become subject to provider obligations if their modification uses more than one third of the original model’s training compute, which may stifle innovation and experimentation, particularly in the academic sector.
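The one-third rule for downstream modifiers can be written as a simple check. This is a hedged sketch, not the Commission's method: the function name is hypothetical, both inputs are assumed to be measured in the same unit (FLOP), and "more than" is read as a strict inequality.

```python
def modifier_becomes_provider(modification_flop: float,
                              original_training_flop: float) -> bool:
    """Return True if the modification compute exceeds one third of the
    original model's training compute.

    Assumption: strict inequality, so exactly one third does not trigger
    the obligations. The comparison is written as 3 * a > b to avoid
    rounding from dividing by three.
    """
    if original_training_flop <= 0:
        raise ValueError("original_training_flop must be positive")
    if modification_flop < 0:
        raise ValueError("modification_flop must not be negative")
    return 3 * modification_flop > original_training_flop


if __name__ == "__main__":
    original = 3 * 10**24  # hypothetical original training compute
    for modification in (10**22, 10**24, 2 * 10**24):
        print(modification, modifier_becomes_provider(modification, original))
```

A light fine-tune at a small fraction of the original compute stays below the line, while a large continued-pretraining run crosses it. That hard edge is what the recommendation on graduated obligations later in this piece responds to.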

Valuable

Clarity on Lifecycle Obligations: Obligations span the entire model lifecycle—from training to deployment and post-market monitoring. This introduces accountability across the AI value chain and ensures continued oversight as models evolve.

Codes of Practice as a Compliance Tool: Adherence to AI Office-approved codes of practice can mitigate enforcement risks and reduce regulatory burdens—a pragmatic compromise for industry.

Distinction Between Capability and Generality: A model can exceed FLOP thresholds but still be exempt if it demonstrably lacks generality or systemic risk, allowing nuance and flexibility in enforcement.

SWOT Analysis

Strengths

Regulatory Clarity: The guidelines offer unprecedented operational detail for AI providers, reducing ambiguity in compliance.

Risk-based Differentiation: The Act distinguishes between general-purpose models and those posing systemic risk, enabling proportionate obligations.

Open Source Consideration: Carefully crafted exemptions incentivize transparency and community-driven innovation.

Weaknesses

Reliance on Compute Metrics: Using FLOP as a core threshold oversimplifies the multifaceted nature of AI capability and societal impact.

Rigid Provider Definitions: Designating downstream actors as providers may discourage model reuse and modification.

Enforcement Centralization: Sole reliance on the AI Office may overburden enforcement and reduce agility.

Opportunities

Template for Global Regulation: The EU’s leadership in AI governance could influence international regulatory norms.

Standardization via Codes of Practice: Collaborative industry codes could lead to harmonized best practices and competitive advantage.

Incentives for Transparency: Provisions encourage responsible release of open models with shared architecture and usage info.

Threats

Regulatory Overreach: Burdensome classification and lifecycle obligations may push innovation outside the EU.

Open Source Exploitation: Commercial actors could use open-source exemptions to circumvent oversight.

Fragmentation Risk: Non-EU jurisdictions may resist these thresholds, creating compliance and enforcement disparities for global providers.

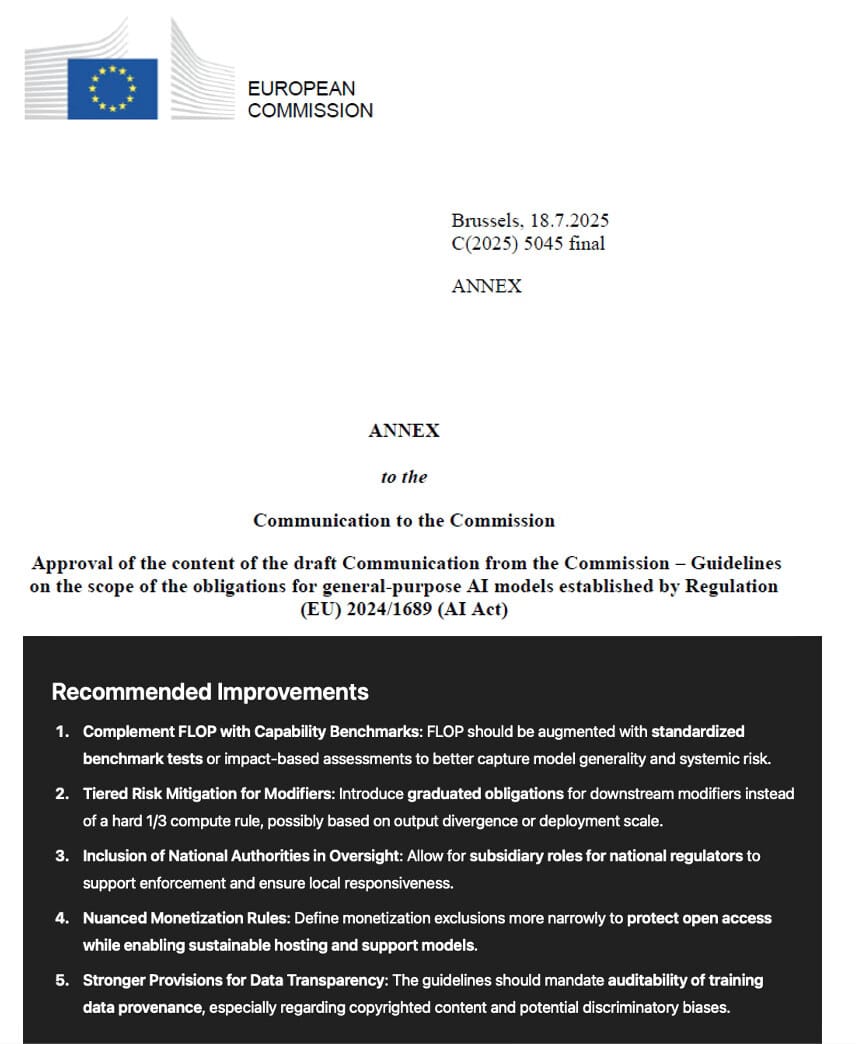

Recommended Improvements

Complement FLOP with Capability Benchmarks: FLOP should be augmented with standardized benchmark tests or impact-based assessments to better capture model generality and systemic risk.

Tiered Risk Mitigation for Modifiers: Introduce graduated obligations for downstream modifiers instead of a hard 1/3 compute rule, possibly based on output divergence or deployment scale.

Inclusion of National Authorities in Oversight: Allow for subsidiary roles for national regulators to support enforcement and ensure local responsiveness.

Nuanced Monetization Rules: Define monetization exclusions more narrowly to protect open access while enabling sustainable hosting and support models.

Stronger Provisions for Data Transparency: The guidelines should mandate auditability of training data provenance, especially regarding copyrighted content and potential discriminatory biases.

Firm Recommendations for Stakeholders

Scholarly Publishers

Assert Copyright Interests: Actively engage with AI developers and regulators to enforce reservation of rights under Article 4(3) of the DSM Directive. Demand robust copyright compliance documentation.

Support Standardized Data Licensing: Push for sector-specific codes of practice or standard licenses governing use of scholarly and educational content in AI training.

Explore Strategic Partnerships: Collaborate with GPAIM developers to co-develop trusted, licensed AI research assistants, ensuring content attribution and value capture.

Monitor Use of Work in Training: Demand transparent summaries of training data (Article 53(1)(d) of the AI Act) and exercise rights under the AI Act and the DSM Directive.

Seek Representation in Code of Practice Development: Influence how transparency and risk mitigation measures are shaped by participating in multi-stakeholder forums.

Retain Derivative Rights: When licensing content to other publishers or platforms, retain the rights to control AI training on, and derivative generation from, the licensed works.

Regulators Worldwide

Adopt a Layered Regulatory Model: Emulate the EU’s graduated oversight based on risk, ensuring proportionate safeguards without stifling innovation.

Push for Global FLOP Threshold Harmonization: Collaborate on international standards for training compute reporting and model benchmarking to prevent regulatory arbitrage.

Build Capacity in Oversight Bodies: Strengthen national AI offices or establish new ones to provide localized monitoring and ensure AI Act-like frameworks can be enforced at scale.

Conclusion

The Commission’s guidelines represent a forward-looking, sophisticated attempt to operationalize the AI Act for a rapidly evolving technology. While imperfect—particularly in their reliance on compute-based proxies and centralization of enforcement—they offer the most structured global effort to date in aligning innovation with fundamental rights. Scholarly publishers, creators, and regulators have a narrow but critical window to shape these interpretations and safeguard their interests in an AI-driven future. Cross-sector collaboration, transparency, and ongoing policy iteration will be essential to ensure this regulatory framework meets its ambitions.