- Pascal's Chatbot Q&As

- Posts

- GPT-4o: LLMs (especially bigger ones) memorize more than we thought—not just long, obvious passages, but obscure, high-surprisal tokens too. Post-training filters are not enough.

GPT-4o: LLMs (especially bigger ones) memorize more than we thought—not just long, obvious passages, but obscure, high-surprisal tokens too. Post-training filters are not enough.

Even with safety filters added by companies, memorization persists at the token level—this suggests post-training defenses are leaky.

Asking ChatGPT-4o: Please analyze the paper “Information-Guided Identification of Training Data Imprint in (Proprietary) Large Language Models”, tell me what it says in easy to understand language, list the most surprising, controversial and valuable statements and findings, and list lessons for AI developers, rights owners and regulators.

ChatGPT-4o’s answer:

Here’s a clear and digestible analysis of the paper “Information-Guided Identification of Training Data Imprint in (Proprietary) Large Language Models”by Abhilasha Ravichander et al.

🧠 What the paper says (in simple terms)

Large language models (LLMs) like GPT-4 are trained on huge amounts of text data—but the companies behind them don’t say exactly what that data is. This secrecy raises concerns about copyright, fairness, and scientific transparency.

The paper proposes a new method to detect what training data these models might have memorized, even if you only have black-box access (i.e., you can only give inputs and see outputs, no internal info).

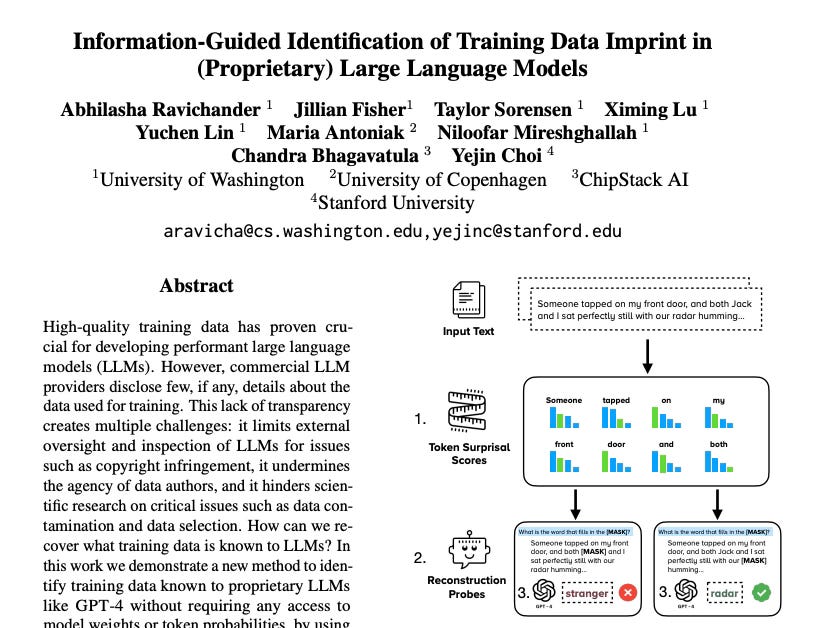

How it works:

Find "surprising" words in a piece of text (words that are hard to guess from context).

Mask those words and ask the model to fill in the blank.

If the model gets it right, and the word is hard to guess from context, it probably memorized that text.

This technique doesn’t need access to the model’s internal workings—it works just by analyzing its output, which makes it great for probing closed-source models like GPT-4.

💥 Most Surprising Findings

LLMs (especially bigger ones) memorize more than we thought—not just long, obvious passages, but obscure, high-surprisal tokens too.

Even when models no longer regurgitate full articles verbatim, they still recall "imprints" from the data, which can be detected with this method.

Their method beats prior probing techniques like prefix matching and cloze tests by being more precise and more applicable across different text types.

🧨 Most Controversial Points

LLMs can still retain copyrighted material without obvious verbatim copying, making it harder for publishers or regulators to prove misuse.

Test set contamination is real: LLMs may have memorized benchmark test questions, inflating their apparent intelligence during evaluations.

Even with safety filters added by companies, memorization persists at the token level—this suggests post-training defenses are leaky.

💎 Most Valuable Insights

Surprisal-based probing is a practical, powerful way to audit LLMs for training data without privileged access.

Combining multiple probing methods gives a fuller picture—different techniques catch different kinds of memorization.

Model size matters—larger models memorize more and can recover more obscure tokens (Figure 3, page 8).

📘 Lessons for Key Stakeholders

👩💻 AI Developers

Expect that LLMs will memorize surprising content—even when using general domain data.

Post-training filters are not enough; proactive data governance during training is essential.

Provide more transparency or risk being caught later through reverse-engineering probes.

🧑⚖️ Regulators & Policymakers

The lack of data transparency is a systemic problem. Black-box probing methods offer new tools for oversight.

Copyright laws may need updating to cover non-verbatim but memorized use.

Model evaluations must be independently verified to ensure no data leakage from benchmarks.

📚 Rights Owners (publishers, journalists, authors)

Your content might be inside an LLM—even if it’s not copied word-for-word.

Legal evidence could include not just verbatim output but token-level reconstructions.

Probing tools could support takedown requests or lawsuits by identifying memorized imprints.

🧭 Final Thoughts

This paper delivers a practical, elegant technique for peering into the “black box” of LLM training data. It bridges a critical gap for researchers, regulators, and rights holders without needing privileged access to model internals. As AI models grow more powerful, holding them accountable for their training data becomes more urgent, and this paper offers a vital tool for that task.