Pascal's Chatbot Q&As

GPT-4o: AI is now a structural force across all industry sectors—and public companies must treat it as such, both in strategy and in stewardship.

For public companies, the lesson is clear: AI is no longer optional, and its adoption is not confined to the innovation lab. It's operational, strategic, and mission-critical.

AI for Public Companies: Governance, Growth, and the Compliance Challenge of a Generation

by ChatGPT-4o

On April 23, Winston & Strawn LLP hosted a landmark webinar titled "AI for Public Companies: Leveraging Innovation for Growth & Compliance" that served not just as a legal primer but as a wake-up call for public companies navigating the AI era. Moderated by Michael J. Blankenship, with expert insights from Mary Katherine Kulback and J. Eric Johnson, the session offered more than compliance pointers. It illuminated the urgent, multifaceted role AI now plays in public company operations, regulatory exposure, strategic planning, and boardroom governance.

What emerged from this conversation was not just a checklist, but a vision: that AI is now a structural force across all industry sectors—and public companies must treat it as such, both in strategy and in stewardship.

1. AI Is Already Here—and It’s Infrastructure, Not Just Innovation

The webinar began with a simple yet profound observation: AI has crossed the threshold from innovation to infrastructure.

As Eric Johnson highlighted, AI is now deeply embedded across key industries—telecom, energy, healthcare, financial services—driving efficiency and enabling new capabilities. Smart grid systems balance energy loads. AI tools optimize 5G networks. Predictive healthcare applications are influencing clinical decision-making. And fraud detection systems in finance are using machine learning models to identify anomalies at speed and scale.

But what’s striking is not just the diversity of use cases; it’s the convergence of techniques across sectors. Whether the goal is optimizing bandwidth or energy, the same underlying mechanisms (predictive modeling, real-time adjustment, and machine learning) are being deployed.

For public companies, the lesson is clear: AI is no longer optional, and its adoption is not confined to the innovation lab. It's operational, strategic, and mission-critical.

2. The Regulatory Landscape: A Complex, Layered, and Evolving Patchwork

If AI is infrastructure, regulation is still catching up, and doing so in a fragmented fashion. Kulback and Johnson walked through a three-tiered regulatory landscape:

Federal Level: Light-Touch, But Watch for the Edges

Under the second Trump administration, federal AI policy is expected to take a light-touch, pro-innovation approach, emphasizing competitiveness and national security over ethical or safety concerns. However, this doesn’t mean companies are off the hook.

Existing federal laws still apply, and apply forcefully:

SEC: Misleading AI disclosures can constitute securities fraud.

FTC: False advertising and discriminatory AI outputs are enforcement targets.

Export controls: These restrict transfers of certain AI technologies, particularly to China.

As Johnson aptly put it, “AI impacts every area of law.” The challenge isn’t whether laws apply but how they apply, especially as courts begin interpreting legacy laws through the lens of emerging technologies.

State Level: A Patchwork of Consumer Protection and Anti-Discrimination Laws

States are moving faster than Washington. At least 40 U.S. states are considering or implementing AI-related laws, focused on:

Transparency (e.g., disclosing AI-generated content)

Employment discrimination

Use of biometric data

Deepfakes

The result? A patchwork regulatory environment in which multi-state companies must tailor their compliance strategies state by state, raising both costs and legal complexity.

International Level: The EU AI Act’s Extraterritorial Gravity

Europe’s AI Act, which entered into force in August 2024 with obligations phasing in over the following years, is the world’s first comprehensive AI regulation. It applies a risk-based framework that categorizes AI systems as:

Unacceptable (banned outright)

High risk (heavily regulated)

Limited/minimal risk (lightly governed)

Of particular concern: the Act’s extraterritorial reach and steep penalties, which for the most serious violations reach up to 7% of global annual turnover or €35 million, whichever is higher. That exposure has pushed EU AI Act compliance to the forefront of 10-K disclosures for globally operating U.S. companies.

Kulback flagged a developing nuance: the EU may be softening its stance slightly to attract investment, especially in comparison to China’s tightly controlled but fast-moving AI regulatory regime. But the enforcement risk remains significant.

3. Data Ownership: The Legal and Strategic Battleground of the AI Age

Mary Katherine Kulback addressed one of the most practically urgent, and often overlooked, topics: data ownership and usage rights.

In a world where AI is only as good as the data it consumes, access, control, and clarity around data rights are make-or-break issues for AI deployment.

The Key Lessons:

Just because you have access to data doesn’t mean you have the rights to use it for AI. Many agreements predate the AI revolution and lack clauses for machine learning, data reuse, or model training.

Third-party data use is fraught with legal risk. Deploying AI on data governed by outdated or narrow agreements can lead to IP license violations, breaches of confidentiality, and misuse of trade secrets.

Audit your data. Now. Kulback’s advice: start by auditing existing agreements, understanding the scope of licensed data, and building internal playbooks to ensure legal and technical teams are aligned.

Segment your datasets. If your data rights differ across vendors or sources, you must ensure your AI tools don’t commingle them in ways that breach contract terms—or data protection laws.

This isn’t just a legal compliance issue—it’s a strategic readiness issue. Companies that fail to secure their data assets now may find themselves unable to capitalize on AI innovation tomorrow.

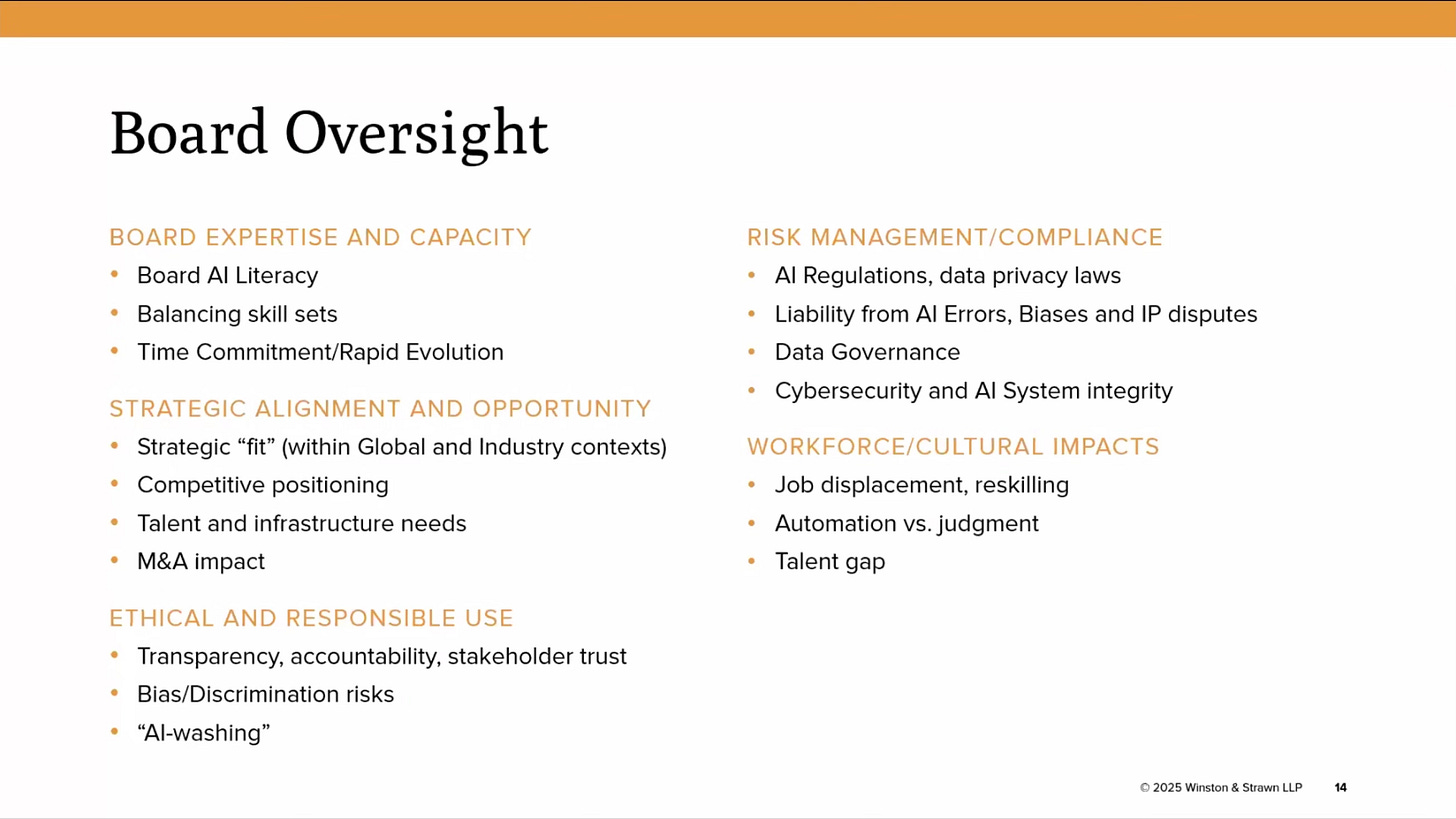

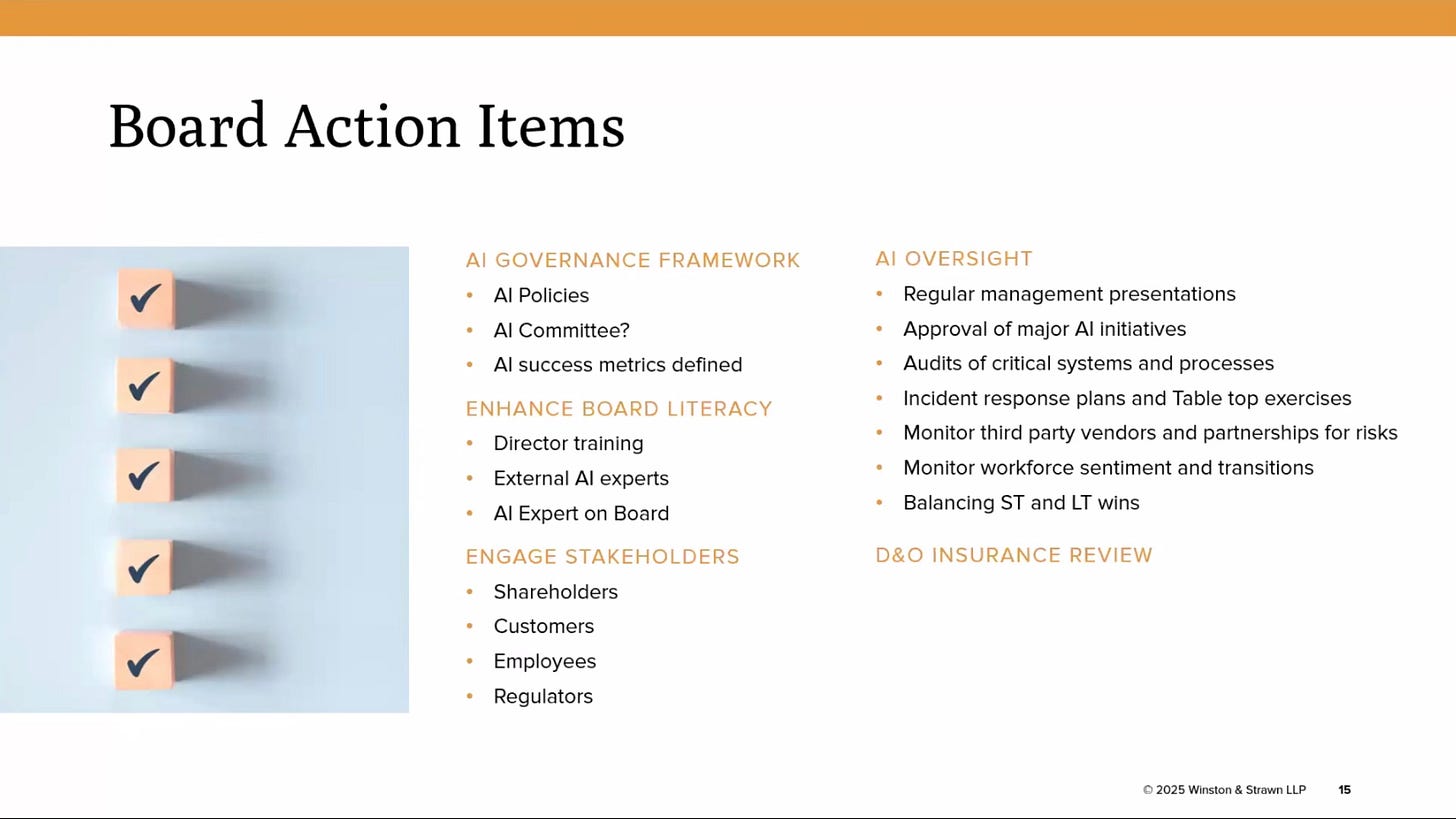

4. Board Oversight and AI Governance: No Longer Optional

Perhaps the most important takeaway came from Johnson’s closing section: AI governance must now become a core board function.

The Governance Imperatives:

Does the board have AI fluency? Boards need directors who understand the risks and opportunities of AI—not just technologists, but ethicists, strategists, and compliance experts.

Establish a governance framework. Companies must develop AI policies that define acceptable use, ethical guardrails, oversight structures, and reporting obligations. Who owns AI risk? What committee is accountable?

Beware of AI washing. Disclose your AI capabilities accurately. Inflating your tech credentials without substance is not only misleading—it may invite SEC scrutiny.

Understand workforce impact. AI won’t just displace jobs; it will change how work is done, how employees are reskilled, and how trust in automation is managed across the organization.

Think beyond short-term wins. Boards should balance short-term cost savings with long-term resilience. Are you investing in foundational AI capabilities that position the company for sustained innovation?

Review D&O insurance. AI adds a new dimension to board liability, so directors’ and officers’ coverage should be checked to ensure it reflects this growing risk vector.

5. AI in Legal Practice: The Profession Is Transforming, Too

To close, the Winston team shared how they are using AI themselves—from automated due diligence and contract review to proactive compliance monitoring. But critically, they framed AI not as a replacement for lawyers, but as a force multiplier, freeing up time for higher-value strategic work.

This aligns with the broader message of the session: AI is not a future concept—it’s present tense. It’s here, it’s being used, and companies that wait too long to develop fluency and oversight may find themselves at a competitive and regulatory disadvantage.

Conclusion: A Turning Point for Public Companies

The most resonant insight from this webinar may be this: AI is a generational challenge that touches every part of a public company’s DNA—legal, strategic, operational, and ethical.

To meet that challenge, public companies must:

Treat AI as a board-level issue, not just an IT or legal one.

Move quickly on data rights, ensuring their most valuable asset is usable and protected.

Embrace regulatory complexity, preparing for a patchwork world of state, federal, and international rules.

Balance speed with responsibility, avoiding both overuse and unpreparedness.

The AI transformation is no longer optional, and governance is not a passive task. It's time for public companies to lead—not just in deploying AI, but in deploying it wisely.