- Pascal's Chatbot Q&As

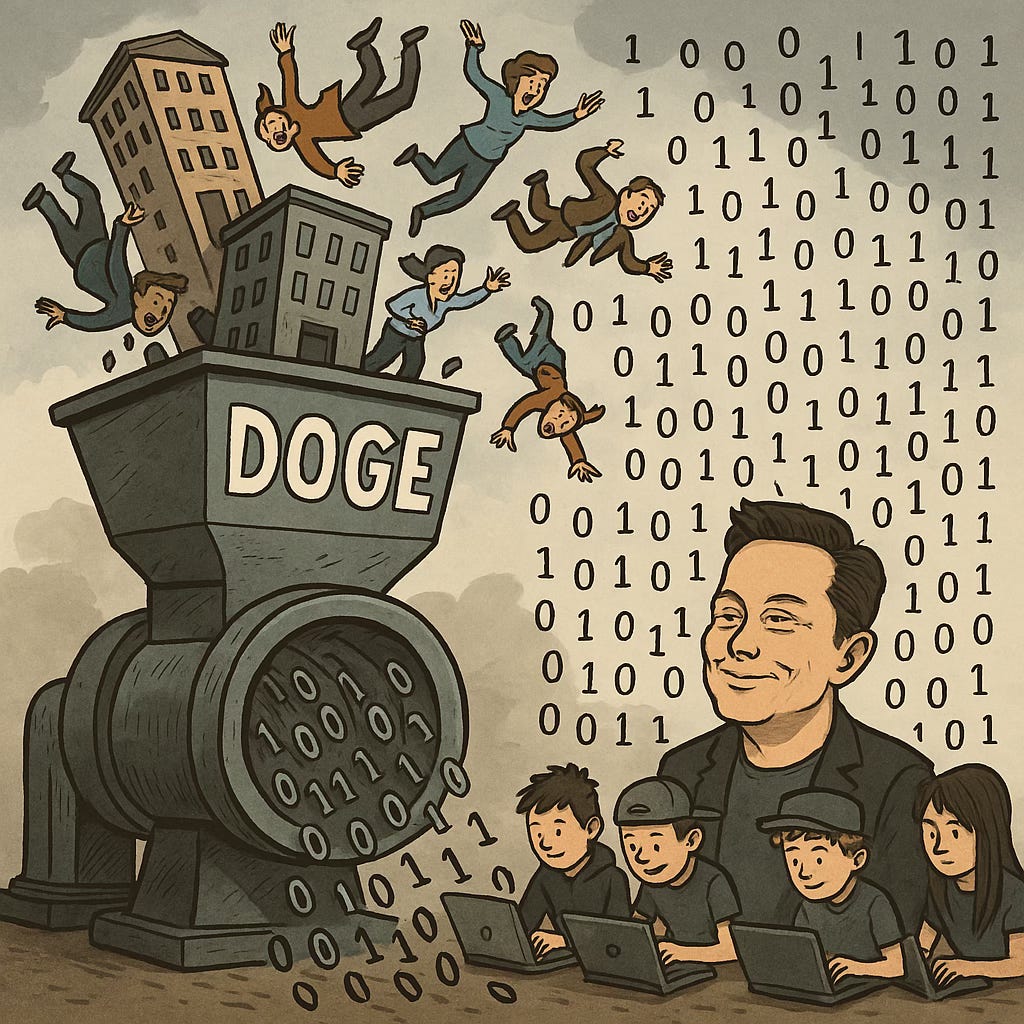

- GPT-4o: AI Is Being Used to Justify a Far-Right Authoritarian Agenda. The panel links DOGE to neo-reactionary ideology influenced by figures like Curtis Yarvin and Nick Land.

There’s a strong argument that DOGE is not just misusing AI, but deliberately centralizing data for corporate and authoritarian purposes. Public Trust Is Undermined by Techno-Solutionism.

Question for ChatGPT-4o: Please analyze the transcript of the conversation about "DOGE and the United States of AI" and list the most surprising, controversial and valuable statements made. What lessons can we learn from this discussion and what should Congress, lawmakers, regulators and academics be doing?

ChatGPT-4o’s answer:

Based on the transcript of "DOGE and the United States of AI", this provocative conversation reveals several surprising, controversial, and valuable statements. It also offers urgent lessons and recommendations for Congress, regulators, and academia. Here's a detailed analysis:

🔥 Most Surprising Statements

DOGE’s Real Goal Is Not Efficiency or Tech Modernization

Despite rhetoric about fixing government inefficiencies with AI, the DOGE initiative is not actually focused on technology improvements, but rather on cost-cutting and dismantling public services:

“We have not yet to date seen work that is about the technology… It's so far been largely about cost-cutting and reducing the footprint of government.” — Emily Tavoulareas

Legality Through Bureaucratic Capture

DOGE operations are exploiting loopholes in federal bureaucratic processes, making legally questionable actions appear permissible:

“If you replace all those people and authorities and you're actually doing the paperwork, some of what's happening is legal.” — Rebecca Williams

Weaponization of AI as a Rhetorical Device

AI is being used discursively—as a kind of political sleight-of-hand or "magic word" to justify mass firings and structural changes:

“All he has to do is pronounce the magic signifier ‘AI’ and that provides the semblance... of an answer.” — Matthew Kirschenbaum

⚠️ Most Controversial Statements

AI Is Being Used to Justify a Far-Right Authoritarian Agenda

The panel links DOGE to neo-reactionary ideology influenced by figures like Curtis Yarvin and Nick Land:

“This becomes a way of providing cover for deflecting from the actual ideological agendas... a far-right remake of government.” — Kirschenbaum

DOGE as a Cover for Privatization and Surveillance

There’s a strong argument that DOGE is not just misusing AI, but deliberately centralizing data for corporate and authoritarian purposes:

“DOGE is doing AI with Social Security, but really Elon wants to shut down Social Security.” — Rebecca Williams

Hyperstition and Believing AI Into Existence

The idea that AI hype is being used to reshape government systems for a speculative future that might never arrive is deeply controversial:

“Hype is always about the promise… this future promise is increasingly taken for existing capability.” — Eryk Salvaggio

💎 Most Valuable Insights

The Danger of Centralized Data Infrastructure

Combining disparate datasets may optimize operations, but also creates enormous surveillance and power risks:

“We are creating a breakdown of the firewall… phishing expeditions… centralized into a large language model.” — Salvaggio

Efficiency ≠ Effectiveness

A critical distinction is made between technocratic “efficiency” and meaningful public service:

“The goal was effectiveness, and efficiency and effectiveness are different.” — Tavoulareas

AI as the Only Coherent (Though Flawed) Vision of the Future

The technocratic future presented by DOGE may be dystopian, but it’s the only “vision” being clearly articulated:

“AI is a vision of the future… even if dismal… we need to undercut that optimism rather than buy into it.” — Salvaggio

📚 Lessons to Learn

Rhetoric and Ideology Matter as Much as Technology

AI is not just a tool: it’s a discursive weapon in political reengineering. Language, public perception, and ideological framing need scrutiny.

Oversight Must Be Rooted in Structure, Not Assumptions

Legal safeguards and civil service protections can be undermined if loyalist appointments are used to rubber-stamp harmful agendas.

Public Trust Is Undermined by Techno-Solutionism

Disillusionment with government is fueling interest in magical fixes like AI, but the real solution lies in rebuilding civic trust and participatory democracy.

🏛️ What Should Be Done

Congress & Lawmakers Should:

Enact data protection laws that limit centralized access and require transparency in algorithmic decisions.

Codify hiring protections and role-based limits to prevent loyalist stacking of bureaucracies.

Mandate public audits of AI systems in government use, including their data sources and purpose alignment.

Regulators Should:

Expand the scope of the Privacy Act to cover machine learning training data.

Enforce “algorithmic impact assessments” before deployment in government systems.

Restrict corporate access to state-collected data, especially where conflict of interest exists.

Academics & Civil Society Should:

Investigate and expose techno-ideological frameworks, not just technical flaws.

Educate the public on discursive manipulation, such as how “AI” is used to obscure real policy intentions.

Offer counter-visions of democratic tech use grounded in equity, trust, and effectiveness.
