- Pascal's Chatbot Q&As

Gemini's Analysis: The Panopticon of Code: A Technical and Sociopolitical Analysis of AI-Driven Censorship, Surveillance, and Regime Survival.

We are witnessing the emergence of “digital authoritarianism”—a model of governance where control is automated, invisible, and seamlessly integrated into the technological fabric of daily life.

by Gemini 3.0, Deep Research. Warning! LLMs may hallucinate!

1. Introduction: The Evolution of Coercive Control

The architecture of political control is undergoing a fundamental metamorphosis. Throughout the twentieth century, authoritarian stability was largely predicated on the visible application of state power: the secret police officer on the corner, the physical prison camp, and the overt censor’s black marker. These analogue methods, while effective, were resource-intensive and socially costly. They relied on fear, which is a volatile and often brittle foundation for long-term regime survival. Today, however, we are witnessing the emergence of “digital authoritarianism”—a model of governance where control is automated, invisible, and seamlessly integrated into the technological fabric of daily life.1

This transformation is driven by the rapid maturation of Artificial Intelligence (AI), specifically in the domains of Large Language Models (LLMs), Computer Vision (CV), and Graph Neural Networks (GNNs). These technologies allow regimes to shift from “high-intensity” repression (torture, imprisonment) to “low-intensity,” preventative manipulation.1 By processing vast oceans of data—from social media posts and private messages to gait patterns and financial transactions—AI systems enable a granularity of surveillance that the Stasi or the KGB could only have dreamed of. The goal is no longer merely to punish dissent after it occurs, but to predict and neutralize it before it can coalesce into a threat.3

This report provides an exhaustive analysis of the technical capabilities and strategic implementations of these AI-driven control systems. It dissects the mechanisms of automated censorship, where semantic understanding replaces keyword filtering; it explores the mathematical models used to map and dismantle social networks of dissent; and it investigates the psychological engineering facilitated by algorithmic ranking and user interface design. Furthermore, it synthesizes these technical insights into a broader understanding of how modern autocracies—and arguably, illiberal elements within democracies—are repurposing the internet from a tool of liberation into a mechanism of regime survival.1 Finally, it outlines a robust policy framework for democratic resilience, emphasizing algorithmic transparency, auditing, and the regulation of dual-use surveillance technologies.

2. The Automata of Silence: Technical Architectures of Mass Censorship

The first line of defense for any informational autocracy is the ability to filter content at scale. The explosive growth of User-Generated Content (UGC) renders human moderation economically unfeasible; a platform with hundreds of millions of users generates billions of data points daily. AI has thus become the indispensable gatekeeper. The shift from simple keyword blocking to AI-driven moderation represents a move from syntactic to semantic control.

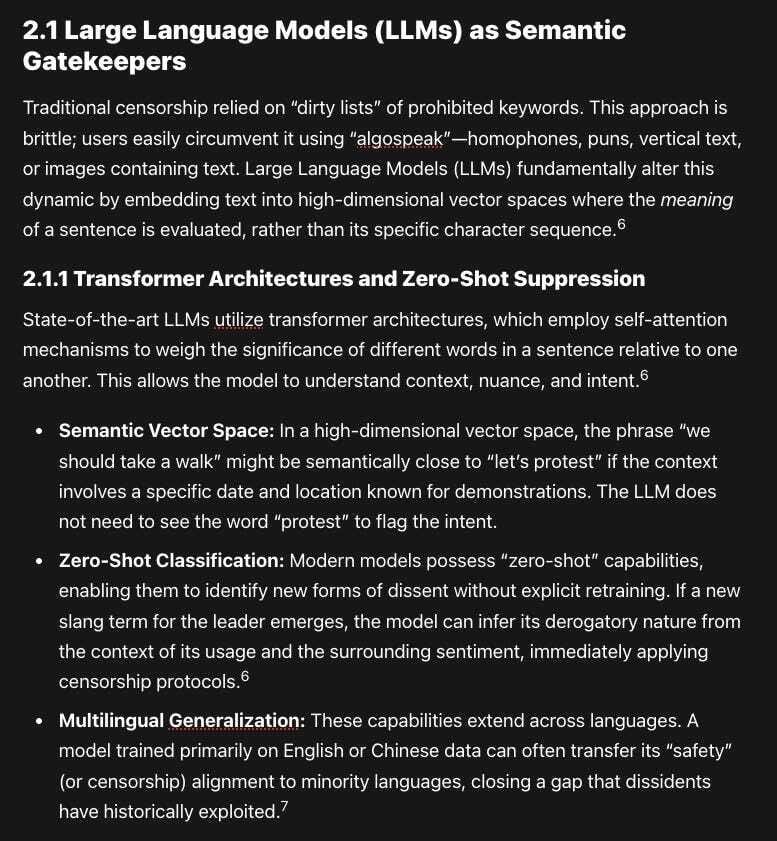

2.1 Large Language Models (LLMs) as Semantic Gatekeepers

Traditional censorship relied on “dirty lists” of prohibited keywords. This approach is brittle; users easily circumvent it using “algospeak”—homophones, puns, vertical text, or images containing text. Large Language Models (LLMs) fundamentally alter this dynamic by embedding text into high-dimensional vector spaces where the meaning of a sentence is evaluated, rather than its specific character sequence.6

2.1.1 Transformer Architectures and Zero-Shot Suppression

State-of-the-art LLMs utilize transformer architectures, which employ self-attention mechanisms to weigh the significance of different words in a sentence relative to one another. This allows the model to understand context, nuance, and intent.6

Semantic Vector Space: In a high-dimensional vector space, the phrase “we should take a walk” might be semantically close to “let’s protest” if the context involves a specific date and location known for demonstrations. The LLM does not need to see the word “protest” to flag the intent.

Zero-Shot Classification: Modern models possess “zero-shot” capabilities, enabling them to identify new forms of dissent without explicit retraining. If a new slang term for the leader emerges, the model can infer its derogatory nature from the context of its usage and the surrounding sentiment, immediately applying censorship protocols.6

Multilingual Generalization: These capabilities extend across languages. A model trained primarily on English or Chinese data can often transfer its “safety” (or censorship) alignment to minority languages, closing a gap that dissidents have historically exploited.7
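The difference between keyword matching and embedding-based matching can be illustrated with a minimal sketch. The three-dimensional vectors below are hand-written, hypothetical stand-ins for what a real encoder would learn, and the 0.95 threshold is likewise an assumption chosen for illustration.

```python
import math

REFERENCE = "let's protest at the square on Sunday"
EUPHEMISM = "we should take a walk at the square on Sunday"
BENIGN = "we should take a walk in the park sometime"

# Hypothetical toy embeddings; a real model would produce hundreds of
# learned dimensions rather than three hand-picked numbers.
EMBEDDINGS = {
    REFERENCE: [0.9, 0.8, 0.1],
    EUPHEMISM: [0.8, 0.9, 0.2],
    BENIGN: [0.1, 0.3, 0.9],
}

BLOCKLIST = {"protest"}  # classic keyword ("dirty list") filter


def keyword_flag(text: str) -> bool:
    """Flag a text only if it literally contains a listed keyword."""
    return any(word in text.lower() for word in BLOCKLIST)


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def semantic_flag(text: str, threshold: float = 0.95) -> bool:
    """Flag a text whose embedding lies close to the reference embedding."""
    return cosine(EMBEDDINGS[text], EMBEDDINGS[REFERENCE]) >= threshold


for text in (EUPHEMISM, BENIGN):
    print(text, "| keyword:", keyword_flag(text), "| semantic:", semantic_flag(text))
```

In this toy setup the euphemism passes the keyword filter but is caught by the similarity check, while the unrelated sentence passes both. The same property is what makes such systems prone to over-blocking: anything that lands near the reference vector is flagged, whatever the author meant.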

2.1.2 Real-Time Latency and “Streaming” Moderation

A critical technical challenge in deploying LLMs for mass censorship is latency. Inference on large models (e.g., 100B+ parameters) is computationally expensive and slow, creating a bottleneck for real-time communication platforms.

Streaming Architectures: To address this, recent architectures like Qwen3Guard introduce “streaming safety” mechanisms. Instead of waiting for a full response or post to be generated, the system evaluates content at the token level using specialized classification heads—a “Prompt Moderator” for inputs and a “Response Moderator” for outputs.8

Native Partial Detection: This allows for immediate intervention. If a user begins typing a prohibited phrase, the system can detect the semantic trajectory of the sentence and block it before completion. While this “native partial detection” significantly reduces latency, it introduces the risk of context errors, where benign sentences are cut off because they share initial tokens with prohibited content.9

Edge Deployment: To further reduce latency and reliance on central servers (which can be bottlenecked during crises), regimes are exploring the distillation of large models into smaller, quantized versions capable of running on edge servers or even end-user devices. This pushes the censorship apparatus directly onto the user’s hardware.10
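The context-error risk of partial detection can be sketched with a toy example. This is not how Qwen3Guard or any cited system works internally: the prefix match below is a deliberately crude stand-in for a learned token-level classifier, and the phrase list and `MIN_PREFIX` value are hypothetical.

```python
# Hypothetical prohibited phrase, tokenized by whitespace for simplicity.
PROHIBITED = [("gather", "at", "the", "square", "to", "protest")]
MIN_PREFIX = 4  # hypothetical: tokens seen before the moderator intervenes


def stream_moderate(tokens: list[str]) -> tuple[list[str], bool]:
    """Consume tokens one at a time and stop once a prohibited prefix appears.

    Returns the tokens released before the cut-off and whether a block fired.
    The token that triggers the block is withheld.
    """
    emitted: list[str] = []
    for token in tokens:
        emitted.append(token)
        n = len(emitted)
        for phrase in PROHIBITED:
            if MIN_PREFIX <= n <= len(phrase) and tuple(emitted) == phrase[:n]:
                return emitted[:-1], True
    return emitted, False


# A benign sentence that shares its first four tokens with the phrase above.
benign = "gather at the square to feed pigeons".split()
print(stream_moderate(benign))
```

The benign sentence is cut off after three tokens because the decision is made before the disambiguating words arrive. Raising `MIN_PREFIX` reduces such false positives at the cost of later intervention, which is the latency and accuracy trade-off described above.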

2.1.3 The “Refusal Vector”: Baked-In Ideology

In “sovereign” AI ecosystems like China’s, censorship is not merely a filter applied post-hoc; it is intrinsic to the model’s alignment. Research into Chinese LLMs (e.g., DeepSeek, Doubao, Ernie Bot) reveals that these models are trained via Reinforcement Learning from Human Feedback (RLHF) to refuse specific queries.12

Alignment as Censorship: The “refusal vector,” the direction in the model’s latent space that corresponds to declining to answer, is aligned with political taboos. When queried about events like Tiananmen Square, the model’s internal activation patterns trigger a refusal response, much as a Western model might refuse to generate hate speech.13

Implicit vs. Explicit: This creates a form of “implicit censorship.” The model does not explicitly state “this topic is banned”; it provides a polite, evasive, or standard state-approved answer, mimicking the behavior of a safety guardrail. This makes it difficult for users to distinguish between technical limitations and political suppression.14

2.2 Computer Vision: The Panopticon of Images and Video

As text filtering becomes more sophisticated, dissidents often pivot to visual media—memes, screenshots of text, and symbolic imagery. Computer Vision (CV) closes this loophole by bringing the same semantic understanding to the visual domain.

2.2.1 Optical Character Recognition (OCR) and Meme Analysis

Integrated OCR systems automatically extract text from images and feed it into the LLM-based filtering pipelines described above. However, the true power of modern CV lies in understanding visual semantics.

Visual Symbol Detection: Algorithms are trained to recognize not just objects, but symbols and caricatures. For instance, if a specific cartoon character becomes a proxy for a leader, the CV system identifies the visual features of that character in various distortions and styles. This has been extensively documented in the censorship of “Winnie the Pooh” imagery in China.15

Latent Diffusion for Detection: The same generative technologies used to create images (Latent Diffusion Models) are being repurposed for detection. By analyzing the noise patterns and latent representations of an image, these models can identify “undesired content” with high fidelity, although they remain vulnerable to adversarial attacks (e.g., adding imperceptible noise to an image to fool the classifier).17

2.2.2 Real-Time Crowd Analysis and Drone Surveillance

In the physical realm, CV is the engine of mass surveillance. The integration of CV with ubiquitous CCTV and drone feeds creates a real-time map of public behavior.

Optical Flow and Crowd Dynamics: Algorithms analyze “optical flow”—the pattern of apparent motion of objects in a video—to detect anomalies in crowd behavior. Specific patterns, such as “converging” (forming a crowd) or rapid “diverging” (panic or flight), can trigger automated alerts for police intervention.19

Drone-Based Monitoring: Unlike static cameras, AI-enabled drones provide dynamic, adaptable surveillance. They can track the movement of a protest march, estimate crowd density (using density estimation algorithms like CSRNet), and identify key individuals even in occluded environments.20

Gait Recognition and ReID: Facial recognition is increasingly thwarted by masks (a lesson learned from the Hong Kong protests and the COVID-19 pandemic). In response, regimes are deploying “Person Re-Identification” (ReID) and gait analysis. These systems analyze the unique biomechanics of a person’s walk, allowing authorities to track an individual across different camera feeds and re-identify them even when their face is obscured.20
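The “converging” and “diverging” patterns mentioned above can be expressed as a simple statistic over motion vectors. The sketch below assumes that per-person positions and velocities have already been extracted (for example by an optical flow stage, which is not shown); the function name and the threshold are hypothetical.

```python
import math


def crowd_trend(positions, velocities, threshold=0.1):
    """Classify crowd motion as 'converging', 'diverging', or 'neutral'.

    positions:  list of (x, y) points, one per tracked person
    velocities: list of (vx, vy) motion vectors for the same people
    The statistic is the mean velocity component pointing away from the
    crowd centroid: negative means people are moving toward each other.
    """
    n = len(positions)
    cx = sum(x for x, _ in positions) / n
    cy = sum(y for _, y in positions) / n
    radial_sum = 0.0
    for (x, y), (vx, vy) in zip(positions, velocities):
        dx, dy = x - cx, y - cy
        dist = math.hypot(dx, dy)
        if dist == 0:
            continue  # a person exactly at the centroid has no radial direction
        radial_sum += (vx * dx + vy * dy) / dist
    mean_radial = radial_sum / n
    if mean_radial > threshold:
        return "diverging"
    if mean_radial < -threshold:
        return "converging"
    return "neutral"


corners = [(1, 1), (-1, 1), (-1, -1), (1, -1)]
inward = [(-0.5 * x, -0.5 * y) for x, y in corners]
print(crowd_trend(corners, inward))
```

Note that the statistic cannot tell a protest from a street market or a bus stop: any gathering produces the same signature, which is why alerts built on it sweep in ordinary public life.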

2.3 The Integration Challenge: Multimodal Fusion

The cutting edge of automated censorship is Multimodal Learning, where systems process text, image, and audio simultaneously.

Cross-Modal Context: A video might contain a benign image but a seditious audio track, or a sarcastic caption that inverts the meaning of a patriotic image. Multimodal Large Language Models (MLLMs) are designed to understand the relationship between these modalities.

Robustness Benchmarks: Research into the robustness of these models (e.g., JailbreakV-28K, MM-SafetyBench) highlights the ongoing arms race between censors and users. As models become better at understanding the interplay between text and image, they become harder to fool with “context-switching” attacks.17

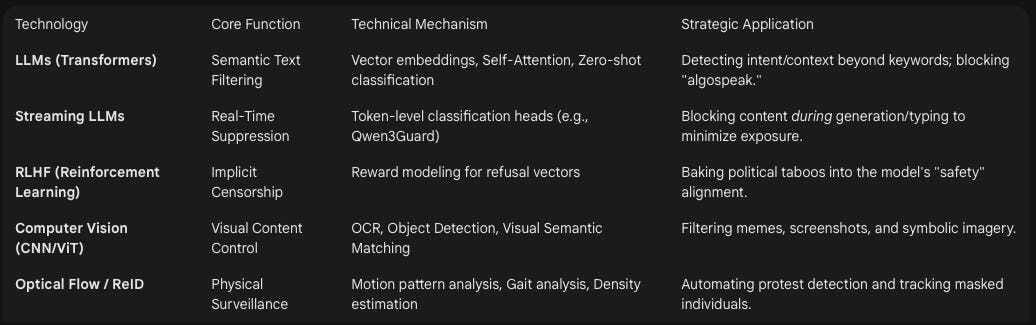

Table 1: Technical Capabilities of AI in Mass Censorship

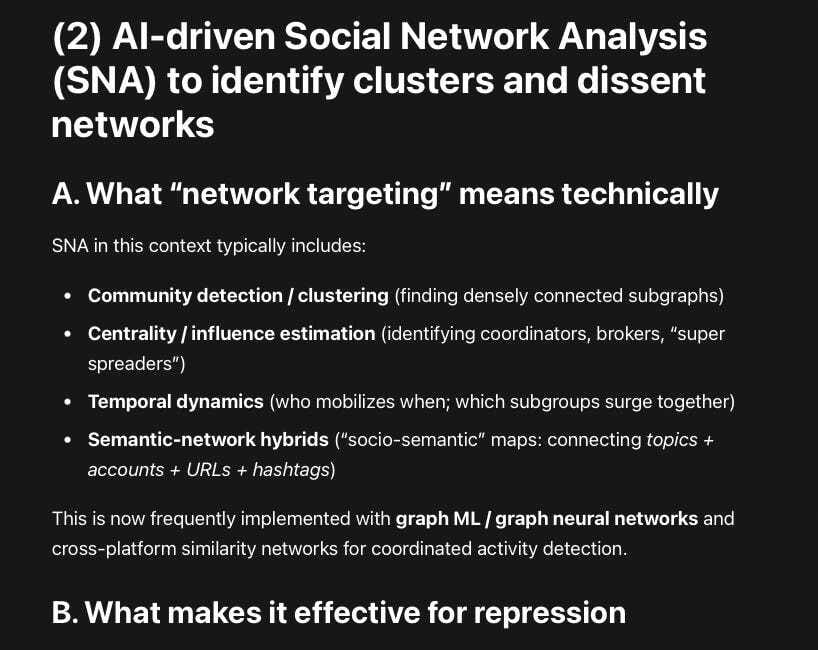

3. Mapping the Invisible: AI-Driven Social Network Analysis (SNA)

Identifying and deleting individual pieces of content is a tactical measure; identifying and disrupting the networks that produce them is a strategic one. AI-driven Social Network Analysis (SNA) allows regimes to map the hidden structures of dissent, identifying key opinion leaders (KOLs) and communities of interest that form the bedrock of opposition.

3.1 Graph Neural Networks (GNNs): Beyond Simple Connections

Traditional SNA relied on static metrics like “degree centrality” (number of connections) or “betweenness centrality” (control over information flow). Modern approaches utilize Graph Neural Networks (GNNs), which apply deep learning to graph structures. GNNs learn low-dimensional “embeddings” for every user (node) based not just on their connections, but on the content they post and the attributes of their neighbors.23

3.1.1 Deep Community Detection and Clustering

GNNs excel at Community Detection, partitioning the massive social graph into clusters of densely connected users.

Modularity Optimization: Many algorithms maximize “modularity,” a measure of the density of links inside communities compared to links between communities. In a political context, a high-density cluster often represents an “echo chamber” or a mobilization cell. Traditional algorithms such as Louvain (which maximizes modularity directly) and Infomap (which instead minimizes the description length of random walks on the graph) are still used, but GNNs provide higher accuracy by incorporating semantic data (post content) into the clustering process.24

Latent Dissent Identification: By training GNNs on labeled data (e.g., known dissident accounts), the model can propagate these labels through the graph to identify “latent” dissidents—users who may not have posted explicitly prohibited content but are structurally embedded in dissent networks. This allows for “predictive guilt” based on association.26
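To make the modularity measure concrete, the sketch below computes the standard (Newman) modularity of a given partition on a small, invented graph of two triangles joined by one edge. It only scores a partition; it is not a community detection algorithm and involves no GNN.

```python
from collections import defaultdict


def modularity(edges, community_of):
    """Newman modularity Q of a partition of an undirected, unweighted graph.

    Q = sum over communities c of  L_c / m - (d_c / (2 * m)) ** 2
    where m is the number of edges, L_c the number of edges inside c,
    and d_c the sum of the degrees of the nodes in c.
    """
    m = len(edges)
    internal = defaultdict(int)
    degree_sum = defaultdict(int)
    for u, v in edges:
        degree_sum[community_of[u]] += 1
        degree_sum[community_of[v]] += 1
        if community_of[u] == community_of[v]:
            internal[community_of[u]] += 1
    return sum(internal[c] / m - (degree_sum[c] / (2 * m)) ** 2 for c in degree_sum)


# Invented example: two triangles (0-1-2 and 3-4-5) joined by the edge 2-3.
EDGES = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
two_groups = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
one_group = {node: "all" for node in range(6)}

print(modularity(EDGES, two_groups))
print(modularity(EDGES, one_group))
```

Splitting the graph along the single connecting edge gives Q = 5/14 (about 0.357), while lumping everyone into one community gives Q = 0. Optimizers such as Louvain search for the partition that pushes this number as high as possible.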

3.1.2 Role Classification and “Bridge” Destruction

Understanding the role of a node is crucial for disruption.

Bridge Nodes: Users who connect two disparate communities (e.g., connecting a labor union group with a student activist group) are critical for the spread of dissent. GNNs identify these “bridge” nodes for targeted suppression. Removing a bridge node is often more effective than removing a hub node, as it fractures the movement and prevents cross-group coordination.24

Bot vs. Human Classification: Paradoxically, the same techniques used to detect malicious botnets (analyzing synchronized behavior and high connectivity) are repurposed to detect coordinated human activism. If a group of users posts similar hashtags simultaneously, the system may flag them as a “bot swarm” and suppress them, regardless of their organic nature.27
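The distinction between hubs and bridges can be shown with classical betweenness centrality, the static metric mentioned earlier, rather than a GNN. The sketch implements Brandes’ algorithm for unweighted graphs on an invented network: two fully connected groups of four, linked only through a single account named `X`.

```python
from collections import deque
from itertools import combinations


def build_graph():
    """Two 4-cliques (A0..A3, B0..B3) joined only via the bridge node X."""
    adj = {}
    groups = [[f"A{i}" for i in range(4)], [f"B{i}" for i in range(4)]]
    edges = [pair for group in groups for pair in combinations(group, 2)]
    edges += [("A0", "X"), ("X", "B0")]
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def betweenness(adj):
    """Brandes' algorithm: number of shortest paths passing through each node."""
    score = {v: 0.0 for v in adj}
    for s in adj:
        order = []                       # nodes in order of discovery
        preds = {v: [] for v in adj}     # predecessors on shortest paths
        sigma = {v: 0 for v in adj}      # number of shortest paths from s
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {v: 0.0 for v in adj}
        for w in reversed(order):        # accumulate dependencies back toward s
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    # Each unordered pair was counted from both endpoints.
    return {v: b / 2 for v, b in score.items()}


graph = build_graph()
scores = betweenness(graph)
for node in sorted(graph, key=scores.get, reverse=True)[:3]:
    print(node, "degree:", len(graph[node]), "betweenness:", scores[node])
```

Here `X` has the lowest degree in the network (2) but the highest betweenness (16, against 15 for the best-connected members `A0` and `B0`), because every path between the two groups runs through it.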

3.2 Dynamic Diffusion Modeling and Controversy Detection

Static graphs provide a snapshot, but dissent is dynamic. Regimes use Information Diffusion Modeling—often powered by Recurrent Neural Networks (RNNs) or Transformers coupled with GNNs—to predict how information will spread.23

Predictive Containment: By simulating the spread of a “viral” protest post, the AI can predict which communities will be “infected” next. This allows the regime to intervene preemptively—for example, by shadowbanning the bridge nodes identified above before the information crosses the chasm to the wider public.23

Controversy Detection: Algorithms analyze the structure of the graph to detect polarization. A graph that begins to split into two clearly defined, opposing lobes (bipolarity) signals a rising “controversy.” Detecting this structural shift early allows for intervention before the polarization hardens into mobilization. The system monitors “boundary” nodes that sit between opposing groups to gauge the temperature of the conflict.24
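The logic of predictive containment can be sketched with an independent cascade model, a standard textbook diffusion model used here in place of the RNN or Transformer predictors the text mentions. The graph, the sharing probability, and the node names are all invented for illustration.

```python
import random
from itertools import combinations


def two_cliques_with_bridge():
    """Two 4-cliques (A0..A3, B0..B3) joined only via the bridge node X."""
    adj = {}
    groups = [[f"A{i}" for i in range(4)], [f"B{i}" for i in range(4)]]
    edges = [pair for group in groups for pair in combinations(group, 2)]
    edges += [("A0", "X"), ("X", "B0")]
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def cascade(adj, seed_node, p, rng, removed=frozenset()):
    """One independent-cascade run.

    Each newly activated node gets a single chance to activate each
    inactive neighbour with probability p. Nodes in `removed` can never
    be activated, which models an account that has been suppressed.
    """
    active = {seed_node}
    frontier = [seed_node]
    while frontier:
        next_frontier = []
        for u in frontier:
            for v in sorted(adj[u]):
                if v not in active and v not in removed and rng.random() < p:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return active


def mean_reach(adj, seed_node, p, runs, removed=frozenset(), seed=0):
    """Average number of accounts reached over repeated simulated cascades."""
    rng = random.Random(seed)
    total = sum(len(cascade(adj, seed_node, p, rng, removed)) for _ in range(runs))
    return total / runs


graph = two_cliques_with_bridge()
print("open network:    ", mean_reach(graph, "A1", p=0.6, runs=2000))
print("bridge X removed:", mean_reach(graph, "A1", p=0.6, runs=2000, removed={"X"}))
```

With `X` suppressed, no cascade starting in the first group can ever reach more than that group’s four members, regardless of how contagious the post is. This structural ceiling is the “firebreak” that preemptive action against bridge accounts aims to create.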

4. The Architecture of Isolation: Algorithmic Suppression and “Shadowbanning”

Once dissent networks are identified, the most effective suppression strategy is often not overt deletion (which creates martyrs and evidence of repression) but covert isolation. AI facilitates “invisible” censorship techniques that degrade the user’s ability to coordinate without alerting them to the manipulation.

4.1 Shadowbanning and Visibility Reduction

Shadowbanning (or “visibility filtering”) refers to the practice where a user’s content is rendered invisible to others while appearing normal to the user themselves. This gaslights the user into believing their message is simply failing to gain traction organically.31

Algorithmic Down-Ranking: Recommender systems (RecSys) are the primary vehicle for this. These systems assign a ranking score to every potential piece of content a user might see. Regimes can inject negative weights into this scoring function for content from flagged clusters. Even if the content is not removed, it is buried so deep in the feed (e.g., position 100+) that it receives virtually zero engagement.31

Trust Scores: Users are often assigned “trust scores” or “reputation scores” based on their network position (calculated by GNNs) and past behavior. A low trust score acts as a drag coefficient on all future content, ensuring it never reaches a viral threshold.28

Breaking Discovery: Discovery algorithms (e.g., TikTok’s “For You” page, Instagram’s Explore) are the engines of viral growth. Regimes can instruct these algorithms to exclude specific topics or user clusters from discovery pools. This quarantines dissenters, allowing them to speak only to their existing followers (who are likely already convinced), thus preventing the expansion of the movement.31
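A minimal sketch of how a cluster penalty and a trust multiplier could enter a ranking function is given below. The scoring formula, field names, and weights are assumptions made for illustration and do not describe any specific platform’s recommender.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    relevance: float       # base score from the recommender (hypothetical 0..1 scale)
    author_trust: float    # multiplier in (0, 1]; 1.0 means no penalty
    flagged_cluster: bool  # True if the author sits in a flagged cluster


CLUSTER_PENALTY = 0.5  # hypothetical negative weight


def rank_score(post: Post) -> float:
    """Relevance scaled by author trust, minus a penalty for flagged clusters."""
    score = post.relevance * post.author_trust
    if post.flagged_cluster:
        score -= CLUSTER_PENALTY
    return score


def build_feed(posts: list[Post]) -> list[str]:
    """Return post ids ordered by the manipulated score, highest first."""
    return [p.post_id for p in sorted(posts, key=rank_score, reverse=True)]


def organic_feed(posts: list[Post]) -> list[str]:
    """Return post ids ordered by raw relevance alone, for comparison."""
    return [p.post_id for p in sorted(posts, key=lambda p: p.relevance, reverse=True)]


POSTS = [
    Post("protest_report", relevance=0.9, author_trust=0.6, flagged_cluster=True),
    Post("cat_video", relevance=0.4, author_trust=1.0, flagged_cluster=False),
    Post("recipe", relevance=0.3, author_trust=1.0, flagged_cluster=False),
]

print("organic:", organic_feed(POSTS))
print("served: ", build_feed(POSTS))
```

The most relevant post drops from first to last place without being deleted. The gap between the two orderings is also what an external audit would try to measure, which is why researcher access to ranking data matters.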

4.2 The Search Engine Manipulation Effect (SEME)

Search engines are the arbiters of truth for the digital citizen. The Search Engine Manipulation Effect (SEME) describes how biased ranking of search results can shift user preferences and suppress dissent.34

Ranking Bias: AI ranking algorithms can be tuned to prioritize pro-regime content and demote critical information. Studies show that shifting the ranking of search results can alter the voting preferences of undecided individuals by 20% or more. Because users instinctively trust the top results (the “primacy effect”), this manipulation often goes unnoticed.35

Subtle Re-ordering: Unlike blocking a site (which triggers a noticeable error), SEME simply moves the dissenting article to the second or third page of results. Since over 90% of clicks occur on the first page, this is functionally equivalent to censorship but maintains the illusion of an open internet.34
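The effect of re-ordering can be approximated with a simple position-bias model in which attention decays geometrically with rank. The decay factor of 0.7 is a hypothetical value chosen so that the model roughly reproduces the first-page concentration cited above; it is not an empirical estimate.

```python
DECAY = 0.7  # hypothetical: each rank gets 70% of the attention of the one above


def exposure(rank: int, decay: float = DECAY) -> float:
    """Relative attention received by a result at a 1-based rank."""
    return decay ** (rank - 1)


def first_page_share(total_results: int, page_size: int = 10, decay: float = DECAY) -> float:
    """Fraction of all attention that falls on the first page of results."""
    total = sum(exposure(r, decay) for r in range(1, total_results + 1))
    first = sum(exposure(r, decay) for r in range(1, page_size + 1))
    return first / total


print("first-page share of attention:", round(first_page_share(30), 3))
print("exposure after moving rank 2 -> 12:", round(exposure(12) / exposure(2), 3))
```

Under these assumptions, moving an article down by exactly one page leaves it with under 3% of its former exposure, even though it remains fully accessible to anyone who scrolls far enough.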

4.3 Personalized Suppression and “Heating”

Platforms like TikTok have been found to possess mechanisms for manual and algorithmic “heating” (amplification) of content. This implies a corollary capability for “cooling” or suppression.

The “Heating” Button: Investigations and internal leaks have revealed that employees at platforms like TikTok can manually “heat” specific videos to ensure they go viral, bypassing the standard algorithm.37 This manual override can be used to amplify state propaganda or distractions.

Geofenced Suppression: This amplification/suppression can be hyper-targeted. A regime could allow a protest video to be seen by users in one city (where the event is already known) while suppressing it in neighboring regions to prevent the spread of unrest, creating “information firebreaks”.39

Demographic Targeting: Using the rich psychological profiles built by advertising algorithms, suppression can be targeted at specific demographics most likely to mobilize (e.g., university students, young men), while leaving the content visible to low-risk groups (e.g., retirees) to maintain a façade of normalcy.41

5. Active Measures: Engineering Reality and Apathy

Beyond suppression, AI enables “active measures” to flood the information space with noise, distract the populace, and induce political apathy.

5.1 Automated Astroturfing and “Bot Swarms”

The CounterCloud experiment demonstrated the terrifying potential of Generative AI for disinformation. This project created a fully autonomous, AI-driven propaganda system that could scrape the internet, generate counter-narratives, and post them via fake profiles.42

Cost Asymmetry: Previously, operations like Russia’s Internet Research Agency (IRA) required hundreds of humans. CounterCloud showed that a similar effect could be achieved with a single server running an LLM agent loop for less than $400 a month. The AI scrapes target content, generates a counter-argument (e.g., “The protesters are actually paid rioters”), and posts it across multiple accounts.44

Generative Profiles: GANs (Generative Adversarial Networks) create hyper-realistic profile pictures of non-existent people, while LLMs generate their biographies and consistent post histories. These “sybil” accounts are indistinguishable from real users to the casual observer and can be deployed in “swarms” to drown out organic dissent with manufactured consensus.29

Astroturfing 2.0: Swarms of these synthetic accounts make possible “astroturfing,” the creation of a fake grassroots movement. The AI can generate thousands of unique, context-relevant comments supporting the regime, creating a “bandwagon effect” where real users feel they are in the minority and self-censor.29

5.2 Deepfakes and Disinformation Campaigns

Generative AI allows for the creation of synthetic media to discredit opposition figures.

Character Assassination: Deepfake video and audio can be used to fabricate evidence of corruption, adultery, or treason. Even if the fake is eventually debunked, the initial emotional impact and the “liar’s dividend” (the skepticism generated towards all media) serve the regime’s purpose.47

Targeted Disinformation: AI can tailor disinformation narratives to specific sub-groups based on their psychological profiles (e.g., spreading rumors of police violence to incite panic in one group, while spreading rumors of protester violence to incite fear in another).47

5.3 Predictive Policing and Pre-Crime

AI tools fuse data from social media, arrest records, and surveillance feeds to “predict” crime or protest, effectively creating a “pre-crime” system.

Hot Spot Prediction: Algorithms forecast where protests are likely to occur based on historical data and real-time social media sentiment. This allows police to be deployed before the crowd gathers, exerting a chilling effect on assembly.4

Self-Fulfilling Feedback Loops: These systems often create feedback loops. If an algorithm predicts a neighborhood is “high risk,” police presence increases, leading to more arrests (often for minor offenses like jaywalking), which are then fed back into the model to justify further policing. This disproportionately targets marginalized communities and centers of dissent.3
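The feedback loop can be reproduced in a few lines. The sketch assumes two districts with identical true offence rates and a “hot spot” policy that sends most patrols to whichever district has more recorded arrests; all numbers, including the 80% patrol share, are hypothetical.

```python
def simulate(recorded, true_rate, rounds, patrols=100, hot_share=0.8):
    """Simulate hot-spot allocation driven by recorded arrests.

    recorded:  dict of district -> arrests already on record
    true_rate: dict of district -> arrests generated per patrol
    Each round the district with the most recorded arrests receives
    `hot_share` of the patrols; the rest is split among the others.
    New arrests are proportional to patrols, so records track policing
    intensity rather than the underlying offence rate.
    """
    recorded = dict(recorded)
    for _ in range(rounds):
        hot = max(recorded, key=recorded.get)
        for district in recorded:
            if district == hot:
                share = hot_share
            else:
                share = (1 - hot_share) / (len(recorded) - 1)
            recorded[district] += patrols * share * true_rate[district]
    return recorded


start = {"A": 11, "B": 10}        # a one-arrest difference in the historical data
rates = {"A": 0.1, "B": 0.1}      # identical true offence rates
result = simulate(start, rates, rounds=10)
share_a = result["A"] / sum(result.values())
print(result, "share of records in A:", round(share_a, 3))
```

After ten rounds district A holds roughly three quarters of all recorded arrests, although the two districts are identical by construction. The data appears to confirm the original prediction, and nothing in the records themselves reveals that the gap was produced by the allocation rule.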

6. Engineering Apathy: The Psychology of “Nudge” Control

The most subtle form of control is not to stop people from speaking, but to stop them from caring. AI algorithms are optimized to exploit psychological vulnerabilities to induce apathy and distraction.

6.1 Passive Consumption and Apathy Induction

Reinforcement Learning (RL) algorithms used for content recommendation are optimized for “engagement,” which often translates to “passive consumption.”

The Dopamine Loop: By analyzing user behavior, RL algorithms identify which content types induce a state of passive, entertainment-focused scrolling (the “dopamine loop”). For users identified as potential political actors, the algorithm can subtly shift their feed toward high-engagement, non-political entertainment (e.g., gaming, celebrity gossip, “slime” videos), effectively distracting them from political reality.51

The “Tittytainment” Strategy: This aligns with the concept of “tittytainment” (tits + entertainment)—keeping the population in a state of distracted contentment to prevent unrest. The AI automates this by maximizing “Time Spent” on non-seditious content.52

6.2 “Nudge” Techniques and Dark Patterns

User Interface (UI) design, optimized by AI, can utilize “nudge” theory to manipulate behavior.

Friction as Censorship: “Dark patterns” can introduce friction into the process of accessing or sharing dissenting information. For example, a platform might require two extra clicks to share a post flagged as “sensitive” or “unverified,” while making pro-regime content frictionless to share. This small barrier drastically reduces viral spread.53

Confirmation Shaming: Interfaces can use manipulative wording (e.g., “Are you sure you want to share this potentially misleading information?”) to induce doubt and shame in the user, leveraging social pressure to enforce conformity.53
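Why a small amount of friction can have a large effect on virality follows from basic branching-process arithmetic. In the sketch below, the share probability, audience size, and per-click retention rate are hypothetical; the point is the threshold behaviour at a reproduction number of 1.

```python
def reproduction_number(share_prob, reach_per_share, extra_clicks=0, retention=0.6):
    """Average number of new shares caused by one share.

    share_prob:      chance that a viewer reshares when sharing is frictionless
    reach_per_share: number of viewers each share reaches
    extra_clicks:    additional confirmation steps imposed on the sharer
    retention:       fraction of would-be sharers who get past each extra step
    """
    return share_prob * retention ** extra_clicks * reach_per_share


def expected_cascade_size(r):
    """Expected total shares from one seed; unbounded once r reaches 1."""
    return float("inf") if r >= 1 else 1 / (1 - r)


frictionless = reproduction_number(0.02, 60)
with_friction = reproduction_number(0.02, 60, extra_clicks=2)
print(frictionless, expected_cascade_size(frictionless))
print(with_friction, expected_cascade_size(with_friction))
```

With these assumed numbers, two extra clicks reduce the reproduction number from about 1.2 (a post that can keep spreading) to about 0.43 (a post that dies out after fewer than two shares on average). No content is removed; the post simply never crosses the viral threshold.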

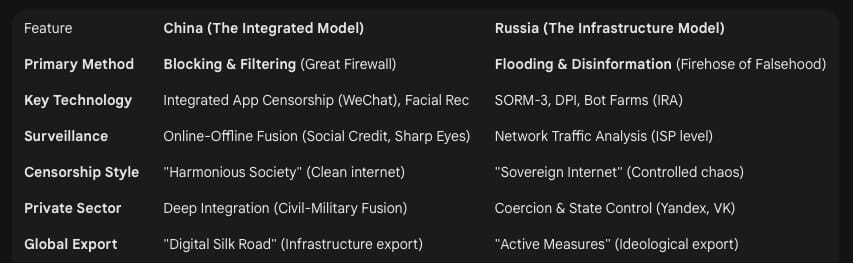

The integration of these tools into statecraft creates the model of “Digital Authoritarianism.” We examine two primary models: the integrated ecosystem of China and the infrastructure-heavy approach of Russia.

7.1 The China Model: Total Integration

China represents the most advanced implementation of this architecture, characterized by deep integration between the state and private technology companies.

The Great Firewall & Integrated Apps: The “Great Firewall” is not just a perimeter block; it is integrated with domestic platforms (WeChat, Douyin, Weibo). AI systems within these apps filter content at the source. Visual censorship in WeChat, for example, operates on a massive scale to detect and block memes in private chats.13

Social Credit and Surveillance: The “Sharp Eyes” and “Skynet” projects use facial recognition and AI to link online behavior with offline privileges. A mismatch between online conformity and offline actions can result in automatic penalties (e.g., travel bans), creating a seamless “coercive control” loop.1

Exporting Repression (Digital Silk Road): China actively exports this technology to other regimes (e.g., Zimbabwe, Myanmar, Venezuela), providing “authoritarianism-in-a-box”—turnkey surveillance and censorship systems. This diffuses the norms of digital authoritarianism globally.5

7.2 The Russia Model: Infrastructure and Disinformation

Russia’s approach has historically been more reliant on “information psychology” and hard infrastructure control.

SORM-3 and Deep Packet Inspection (DPI): The System for Operative Search Measures (SORM-3) allows the FSB to access all data traffic. Coupled with Deep Packet Inspection (DPI) mandated by the “Sovereign Internet” laws, this allows for granular filtering of specific protocols or keywords at the ISP level, blocking access to Tor, VPNs, and specific messaging apps.57

The Firehose of Falsehood: Unlike China’s focus on blocking (maintaining a “clean” internet), Russia focuses on flooding. AI bots and troll farms generate massive amounts of noise to confuse the public and erode trust in objective truth. The goal is not to convince people of a specific truth, but to convince them that no truth exists.29

8. Policy Recommendations and Safeguards

Democracies must develop robust frameworks to safeguard against the encroachment of these technologies, both from external authoritarian actors and internal misuse.

8.1 Algorithmic Transparency and Auditing

Mandated Researcher Access: Legislation like the EU’s Digital Services Act (DSA) (specifically Article 40) sets a vital precedent. It mandates that Very Large Online Platforms (VLOPs) provide vetted researchers with access to data to audit systemic risks. This model should be adopted globally and expanded to specifically include audits for political bias, shadowbanning, and suppression of protected speech.60

Algorithmic Nutrition Labels: Users should have visibility into why they are seeing content. “Algorithmic nutrition labels” could display the parameters influencing a specific recommendation (e.g., “This post is ranked lower because of your location” or “This post is recommended because of your interaction with Topic X”), empowering users to recognize manipulation.62
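One possible shape for such a label is sketched below. The `Factor` structure and the idea that a platform exposes signed per-factor contributions are assumptions for illustration; neither the DSA nor any platform API is known to prescribe this format.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    name: str            # human-readable reason, e.g. "your location"
    contribution: float  # signed effect on the ranking score (hypothetical units)


def nutrition_label(post_id: str, factors: list[Factor]) -> str:
    """Render a plain-text explanation of why a post was ranked as it was."""
    total = sum(f.contribution for f in factors)
    lines = [f"Why you are seeing {post_id} (score {total:+.2f}):"]
    # List the strongest influences first, whatever their direction.
    for f in sorted(factors, key=lambda f: abs(f.contribution), reverse=True):
        direction = "raised" if f.contribution > 0 else "lowered"
        lines.append(f"  {direction} by {abs(f.contribution):.2f}: {f.name}")
    return "\n".join(lines)


print(nutrition_label("post-123", [
    Factor("your location", -0.25),
    Factor("your interaction with Topic X", 0.40),
]))
```

Showing negative contributions alongside positive ones is the important design choice: a label that lists only the reasons a post was recommended would leave down-ranking as invisible as it is today.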

8.2 Liability and “Amplification” Regulation

Rethinking Immunity: Current legal frameworks (like Section 230 in the US) often shield platforms from liability for algorithmic curation. Reform proposals distinguish between “hosting” content and “amplifying” it. Under such proposals, platforms could be held liable if their algorithms demonstrably amplify harmful disinformation or illegal content, or conversely, if they engage in politically motivated suppression that violates consumer protection or civil rights laws.63

Defining “Harmful” Amplification: Legislation must carefully define “harmful” amplification to avoid creating new censorship tools. The focus should be on the behavior of the algorithm (e.g., amplifying content solely for engagement despite it violating safety standards) rather than the specific viewpoint of the content.65

8.3 Standards for “Dual-Use” Technology

NIST Risk Management Framework (RMF): The NIST AI RMF provides a structure for managing AI risks. This should be expanded to explicitly include “human rights impact assessments” for AI exports. Technologies capable of mass surveillance or automated censorship (e.g., gait recognition, emotion detection) should be subject to export controls similar to munitions or dual-use goods.66

International Norms: Democracies must work together to establish international norms that define the “red lines” of AI use in governance, such as banning the use of biometric mass surveillance in public spaces.2

8.4 Privacy and Surveillance Restrictions

Data Minimization: Strong data privacy laws (like GDPR) are a bulwark against authoritarianism. By limiting the amount of data that can be collected and stored (Data Minimization), the potential power of surveillance algorithms is reduced. If the data doesn’t exist, it cannot be processed by an AI to find dissenters.70

Ban on Biometric Scoring: Legislation should explicitly ban the use of AI for “social scoring” or predictive policing based on biometric or behavioral data, preventing the emergence of a social credit-style system in democratic nations.3

9. Conclusion

The convergence of Large Language Models, Computer Vision, and Graph Neural Networks has provided authoritarian regimes—and increasingly, any entity with control over digital platforms—with a toolkit of unprecedented sophistication. No longer reliant on crude blackouts or mass arrests, the modern digital autocrat can surgically excise dissent, isolate activists, and manufacture consensus through automated, invisible, and scalable means.

The “Architecture of Silence” described in this report is robust, self-reinforcing, and increasingly exportable. The “CounterCloud” experiment and the capabilities of systems like SORM-3 and Sharp Eyes demonstrate that the technical barrier to total information control is vanishing. Safeguarding the future of democratic discourse requires not only technical countermeasures but a fundamental re-evaluation of the legal and ethical frameworks governing the algorithms that curate our reality. The “black box” of the algorithm must be opened to public scrutiny, lest it become the invisible cell of the future.

Table 2: Comparative Analysis of Regime Survival Strategies

Note: This report synthesizes findings from numerous academic papers, technical reports, and investigative journalism pieces referenced in the provided research material. The analysis aims to provide a comprehensive technical and sociopolitical overview of the current state of AI-driven digital repression.

Works cited

Digital Repression in Autocracies - V-Dem, accessed January 26, 2026, https://www.v-dem.net/media/publications/digital-repression17mar.pdf

Full article: The rise of authoritarian informationalism: escalating surveillance, manipulation, and control - Taylor & Francis, accessed January 26, 2026, https://www.tandfonline.com/doi/full/10.1080/13510347.2025.2579105?src=

The Dangers of Unregulated AI in Policing | Brennan Center for Justice, accessed January 26, 2026, https://www.brennancenter.org/our-work/research-reports/dangers-unregulated-ai-policing

From the President: Predictive Policing: The Modernization of Historical Human Injustice, accessed January 26, 2026, https://www.nacdl.org/Article/September-October2017-FromthePresidentPredictivePo

Data-Centric Authoritarianism: How China’s Development of Frontier Technologies Could Globalize Repression - NATIONAL ENDOWMENT FOR DEMOCRACY, accessed January 26, 2026, https://www.ned.org/data-centric-authoritarianism-how-chinas-development-of-frontier-technologies-could-globalize-repression-2/

Advancing Content Moderation: Evaluating Large Language Models for Detecting Sensitive Content Across Text, Images, and Videos - arXiv, accessed January 26, 2026, https://arxiv.org/html/2411.17123v1

Large Language Models for Cyber Security: A Systematic Literature Review - arXiv, accessed January 26, 2026, https://arxiv.org/html/2405.04760v1

Qwen3Guard Technical Report - arXiv, accessed January 26, 2026, https://arxiv.org/html/2510.14276v1

From Judgment to Interference: Early Stopping LLM Harmful Outputs via Streaming Content Monitoring - arXiv, accessed January 26, 2026, https://arxiv.org/html/2506.09996v1

MACE: A Hybrid LLM Serving System with Colocated SLO-aware Continuous Retraining Alignment - arXiv, accessed January 26, 2026, https://arxiv.org/html/2510.03283

Optimizing LLM Serving on Cloud Data Centers with Forecast Aware Auto-Scaling - arXiv, accessed January 26, 2026, https://arxiv.org/html/2502.14617v3

Characterizing the Implementation of Censorship Policies in Chinese LLM Services - Anna Ablove, accessed January 26, 2026, https://www.ablove.dev/assets/cn-llms-ndss26.pdf

An Analysis of Chinese Censorship Bias in LLMs - Privacy Enhancing Technologies Symposium, accessed January 26, 2026, https://petsymposium.org/popets/2025/popets-2025-0122.pdf

An Analysis of Chinese Censorship Bias in LLMs - ResearchGate, accessed January 26, 2026, https://www.researchgate.net/publication/396060536_An_Analysis_of_Chinese_Censorship_Bias_in_LLMs

The party’s AI: How China’s new AI systems are reshaping human rights - ASPI (hosted on AWS), accessed January 26, 2026, https://aspi.s3.ap-southeast-2.amazonaws.com/wp-content/uploads/2025/11/27122307/The-partys-AI-How-Chinas-new-AI-systems-are-reshaping-human-rights.pdf

Asian Video Cultures: In the Penumbra of the Global 9780822372547 - DOKUMEN.PUB, accessed January 26, 2026, https://dokumen.pub/asian-video-cultures-in-the-penumbra-of-the-global-9780822372547.html

Rethinking Jailbreak Detection of Large Vision Language Models with Representational Contrastive Scoring - arXiv, accessed January 26, 2026, https://arxiv.org/html/2512.12069v2

Divide-and-Conquer Attack: Harnessing the Power of LLM to Bypass Safety Filters of Text-to-Image Models - arXiv, accessed January 26, 2026, https://arxiv.org/html/2312.07130v3

Exploring Human Crowd Patterns and Categorization in Video Footage for Enhanced Security and Surveillance using Computer Vision and Machine Learning - arXiv, accessed January 26, 2026, https://arxiv.org/pdf/2308.13910

Improving trajectory continuity in drone-based crowd monitoring using a set of minimal-cost techniques and deep discriminative correlation filters - arXiv, accessed January 26, 2026, https://arxiv.org/html/2504.20234v1

Density Estimation and Crowd Counting - arXiv, accessed January 26, 2026, https://arxiv.org/html/2511.09723v1

Person detection and re-identification in open-world settings of retail stores and public spaces - arXiv, accessed January 26, 2026, https://arxiv.org/html/2505.00772v1

Interactive Viral Marketing Through Big Data Analytics, Influencer Networks, AI Integration, and Ethical Dimensions - MDPI, accessed January 26, 2026, https://www.mdpi.com/0718-1876/20/2/115

Ideological Isolation in Online Social Networks: A Survey of Computational Definitions, Metrics, and Mitigation Strategies - arXiv, accessed January 26, 2026, https://arxiv.org/html/2601.07884v1

Community detection in bipartite signed networks is highly dependent on parameter choice, accessed January 26, 2026, https://arxiv.org/html/2405.08203v1

Social Networks Analysis and Mining | springerprofessional.de, accessed January 26, 2026, https://www.springerprofessional.de/en/social-networks-analysis-and-mining/50530104

Detection of Rumors and Their Sources in Social Networks: A Comprehensive Survey, accessed January 26, 2026, https://arxiv.org/html/2501.05292v1

Credibility-based knowledge graph embedding for identifying social brand advocates, accessed January 26, 2026, https://www.frontiersin.org/journals/big-data/articles/10.3389/fdata.2024.1469819/full

Detection of Malicious Social Bots: A Survey and a Refined Taxonomy - ResearchGate, accessed January 26, 2026, https://www.researchgate.net/publication/339931673_Detection_of_Malicious_Social_Bots_A_Survey_and_a_Refined_Taxonomy

Network polarization, filter bubbles, and echo chambers: An annotated review of measures and reduction methods - arXiv, accessed January 26, 2026, https://arxiv.org/pdf/2207.13799

Content Regulations by Platforms: Enduring Challenges - ResearchGate, accessed January 26, 2026, https://www.researchgate.net/publication/386078835_Content_Regulations_by_Platforms_Enduring_Challenges

Artificial Intelligence and the Operationalization of SESTA-FOSTA on Social Media Platforms - UC Berkeley, accessed January 26, 2026, https://escholarship.org/content/qt5w68t85d/qt5w68t85d.pdf

The State of Free Speech Online | Big Brother Watch, accessed January 26, 2026, https://bigbrotherwatch.org.uk/wp-content/uploads/2021/09/The-State-of-Free-Speech-Online-1.pdf

A Computer-Based Approach to Analyze Some Aspects of the Political Economy of Policy Making in India - Anirban Sen, accessed January 26, 2026, https://www.cse.iitd.ac.in/~anirban/Thesis_final_Anirban.pdf

Online government disinformation, censorship, and civil conflict in authoritarian regimes | Request PDF - ResearchGate, accessed January 26, 2026, https://www.researchgate.net/publication/394495694_Online_government_disinformation_censorship_and_civil_conflict_in_authoritarian_regimes

America’s Digital Shield - Senate Judiciary Committee, accessed January 26, 2026, https://www.judiciary.senate.gov/imo/media/doc/2023-12-13_pm_-_testimony_-_epstein.pdf

Text - H.Res.1051 - 118th Congress (2023-2024): Recognizing the importance of the national security risks posed by foreign adversary controlled social media applications., accessed January 26, 2026, https://www.congress.gov/bill/118th-congress/house-resolution/1051/text

TikTok Generation: A CCP Official in Every Pocket | The Heritage Foundation, accessed January 26, 2026, https://www.heritage.org/big-tech/report/tiktok-generation-ccp-official-every-pocket

Supreme Court of the United States, accessed January 26, 2026, https://www.supremecourt.gov/DocketPDF/24/24-656/336098/20241227135716235_24-656%2024-657bsacFormerNationalSecurityOfficials.pdf

Transcript: TikTok CEO Testifies to Congress | TechPolicy.Press, accessed January 26, 2026, https://www.techpolicy.press/transcript-tiktok-ceo-testifies-to-congress/

The Promises and Perils of Predictive Policing, accessed January 26, 2026, https://www.cigionline.org/articles/the-promises-and-perils-of-predictive-policing/

Technical Aspects - Information Integrity Lab - University of Ottawa, accessed January 26, 2026, https://infolab.uottawa.ca/IIL/Category/Technical-Aspects.aspx

Defending Canada: The battle against AI-driven disinformation - Policy Options, accessed January 26, 2026, https://policyoptions.irpp.org/2024/09/defending-canada-the-battle-against-ai-driven-disinformation/

Written evidence submitted by Logically - UK Parliament Committees, accessed January 26, 2026, https://committees.parliament.uk/writtenevidence/128453/pdf/

Social Media Bots: Detection, Characterization, and Human Perception - ProQuest, accessed January 26, 2026, https://search.proquest.com/openview/ba17bd659e1b1aaa5d8527caefd1dfd4/1?pq-origsite=gscholar&cbl=18750&diss=y

LLMs Among Us: Generative AI Participating in Digital Discourse - ResearchGate, accessed January 26, 2026, https://www.researchgate.net/publication/380772412_LLMs_Among_Us_Generative_AI_Participating_in_Digital_Discourse

AI-driven disinformation: policy recommendations for democratic resilience - PMC, accessed January 26, 2026, https://pmc.ncbi.nlm.nih.gov/articles/PMC12351547/

The Role of AI in the Battle Against Disinformation - NATO StratCom COE, accessed January 26, 2026, https://stratcomcoe.org/publications/download/The-Role-of-AI-DIGITAL.pdf

Chapter: 2 The Landscape of Proactive Policing, accessed January 26, 2026, https://www.nationalacademies.org/read/24928/chapter/4

Fourteenth United Nations Congress on Crime Prevention and Criminal Justice - UNODC, accessed January 26, 2026, https://www.unodc.org/documents/commissions/Congress/documents/written_statements/Individual_Experts/Takemura_Big_data_V2100865.pdf

CompanionCast: A Multi-Agent Conversational AI Framework with Spatial Audio for Social Co-Viewing Experiences - arXiv, accessed January 26, 2026, https://arxiv.org/html/2512.10918v1

Mapping the Design Space of Teachable Social Media Feed Experiences - arXiv, accessed January 26, 2026, https://arxiv.org/html/2401.14000v2

Blueprint on Prosocial Tech Design Governance - Keough School of Global Affairs, accessed January 26, 2026, https://keough.nd.edu/assets/619669/blueprint_on_prosocial_tech_design_governance_aa.pdf

Friction-In-Design Regulation as 21st Century Time, Place, and Manner Restriction, accessed January 26, 2026, https://digitalcommons.law.villanova.edu/context/facpubs/article/1125/viewcontent/friction_in_design_regulation.pdf

Artificial Intelligence and Democratic Norms - National Endowment for Democracy, accessed January 26, 2026, https://www.ned.org/wp-content/uploads/2020/07/Artificial-Intelligence-Democratic-Norms-Meeting-Authoritarian-Challenge-Wright.pdf

Student Thesis Promoting digital authoritarianism - Diva-Portal.org, accessed January 26, 2026, https://www.diva-portal.org/smash/get/diva2:1578528/FULLTEXT01.pdf

Russia: Freedom on the Net 2024 Country Report, accessed January 26, 2026, https://freedomhouse.org/country/russia/freedom-net/2024

Russia’s Strategy in Cyberspace - NATO Strategic Communications Centre of Excellence, accessed January 26, 2026, https://stratcomcoe.org/cuploads/pfiles/Nato-Cyber-Report_11-06-2021-4f4ce.pdf

Russia is weaponizing its data laws against foreign organizations - Brookings Institution, accessed January 26, 2026, https://www.brookings.edu/articles/russia-is-weaponizing-its-data-laws-against-foreign-organizations/

FAQs: DSA data access for researchers - European Centre for Algorithmic Transparency, accessed January 26, 2026, https://algorithmic-transparency.ec.europa.eu/news/faqs-dsa-data-access-researchers-2025-07-03_en

Using the DSA to Study Platforms - Verfassungsblog, accessed January 26, 2026, https://verfassungsblog.de/dsa-platforms-digital-services-act/

Exploring Algorithmic Amplification: A new essay series | Knight First Amendment Institute, accessed January 26, 2026, https://knightcolumbia.org/blog/exploring-algorithmic-amplification-a-new-essay-series

Toxic recommender algorithms: immunities, liabilities and the regulated self-regulation of the Digital Services Act and the Online Safety Act - Taylor & Francis, accessed January 26, 2026, https://www.tandfonline.com/doi/full/10.1080/17577632.2024.2408912

Amplification and Its Discontents | Knight First Amendment Institute, accessed January 26, 2026, https://knightcolumbia.org/content/amplification-and-its-discontents

Generative AI Meets Section 230: The Future of Liability and Its Implications for Startup Innovation | The University of Chicago Business Law Review, accessed January 26, 2026, https://businesslawreview.uchicago.edu/print-archive/generative-ai-meets-section-230-future-liability-and-its-implications-startup

The federal A.I. landscape - BGR Group, accessed January 26, 2026, https://bgrdc.com/wp-content/uploads/2025/12/The-Federal-AI-Landscape-How-the-Federal-Government-Shaped-AI-in-2025.pdf

Risk Management Profile for AI and Human Rights - United States Department of State, accessed January 26, 2026, https://2021-2025.state.gov/risk-management-profile-for-ai-and-human-rights/

Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations - NIST Technical Series Publications, accessed January 26, 2026, https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-2e2023.pdf

Using AI as a weapon of repression and its impact on human rights - European Parliament, accessed January 26, 2026, https://www.europarl.europa.eu/RegData/etudes/IDAN/2024/754450/EXPO_IDA(2024)754450_EN.pdf

Power dynamics of information control, censorship, and digital transparency shaping human rights discourse in the information society - GSC Online Press, accessed January 26, 2026, https://gsconlinepress.com/journals/gscarr/sites/default/files/GSCARR-2021-0283.pdf
