- Pascal's Chatbot Q&As

Gemini's analysis of the AI related claims made by the technology industry’s most prominent CEOs, researchers, and pundits during a three-year window.

These were not merely missed earnings targets or delayed product launches; they were fundamental mischaracterizations of technological capability, economic utility, and societal adoption rates.

The Great Recalibration: A Forensic Analysis of the AI Executive Optimism Bubble (2023–2025)

by Gemini 3.0, Deep Research. Warning, LLMs may hallucinate!

Executive Summary

The period spanning early 2023 through late 2025 will likely be recorded in the annals of technological history as the “Era of Unbounded Optimism,” followed swiftly and brutally by the “Great Recalibration.” This epoch was characterized by a collective suspension of disbelief among Silicon Valley’s elite leadership, who engaged in a synchronized projection of smooth exponential growth curves onto complex, non-linear sociotechnical systems. Driven by the unprecedented public release and subsequent adoption of generative artificial intelligence (GenAI) tools, specifically Large Language Models (LLMs), the industry’s captains navigated a path from prophetic certainty to defensive retraction.

This report provides an exhaustive, forensic analysis of the claims made by the technology industry’s most prominent CEOs, researchers, and pundits during this volatile three-year window. By cross-referencing public statements, earnings calls, interviews, manifestos, and leaked internal documents against the material reality of late 2025, we identify a systemic pattern of error. These were not merely missed earnings targets or delayed product launches; they were fundamental mischaracterizations of technological capability, economic utility, and societal adoption rates.

From the premature declarations of Artificial General Intelligence (AGI) as an imminent 2025 reality to the catastrophic commercial failures of “post-smartphone” hardware, this document serves as a detailed accounting of the chasm between Silicon Valley’s narrative construction and the world’s stubborn reality. The analysis reveals that the industry suffered from a specific form of myopia: the confusion of capability (what a model can do in a vacuum) with utility (what a model can do reliably and profitably in a complex economy).

Furthermore, the report dissects the sociopolitical pivots that accompanied these technical failures. We observe a distinct shift in rhetoric regarding regulation—from a desire for “safety guardrails” when they served as competitive moats, to a cry for “unfettered innovation” when regulatory reality threatened deployment velocity. We also analyze the “ethics theater” performed by major corporations, where public commitments to safety and truth were repeatedly undermined by the deployment of models that hallucinated historical facts or spread political misinformation.

The following chapters deconstruct these narratives person by person, claim by claim, offering a rigorous examination of how the smartest minds in technology managed to get the near future so consistently wrong.

1. The AGI Mirage: Sam Altman and the OpenAI Pivot

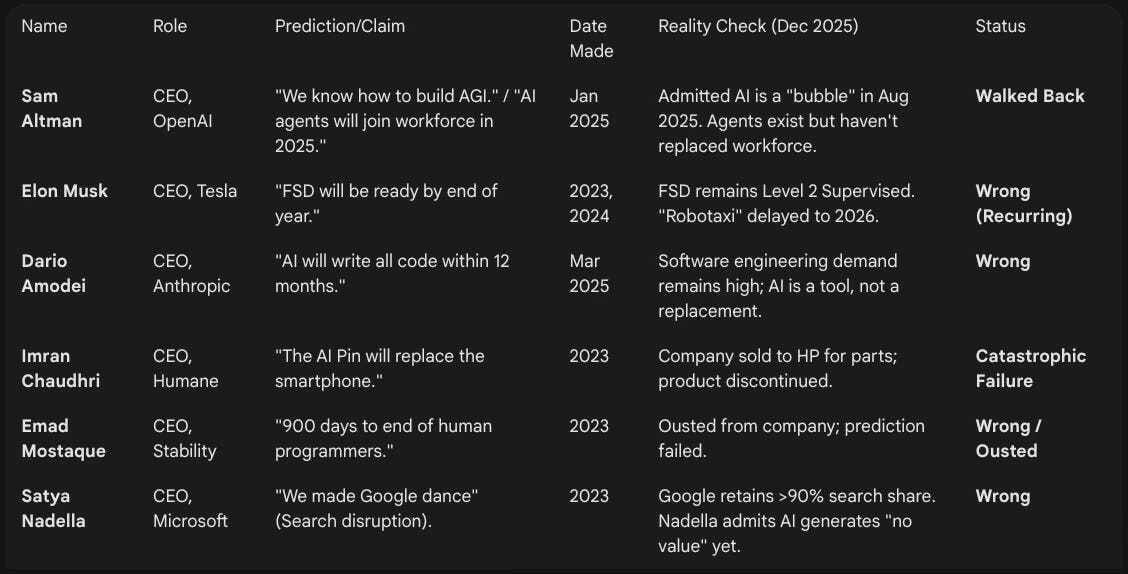

No figure embodies the volatility, ambition, and ultimate retraction of the AI narrative more than Sam Altman, CEO of OpenAI. Between the launch of GPT-4 in early 2023 and the late summer of 2025, Altman’s public stance shifted dramatically. He transitioned from the prophetic herald of a god-like superintelligence that would reshape the very physics of the global economy to a cautious manager warning of an asset bubble and managing expectations about the financial viability of the AI ecosystem. This chapter analyzes the trajectory of his claims regarding Artificial General Intelligence (AGI) and the subsequent collision with economic reality.

1.1 The “Intelligence Age” Manifesto and the Certainty of AGI

Throughout 2023 and 2024, Altman consistently positioned OpenAI not merely as a software company but as the usher of a new epoch in human evolution. The narrative was one of inevitability. The “scaling laws”—the empirical observation that adding more compute and data to transformer models yields predictable performance gains—were treated not as engineering heuristics but as natural laws, akin to gravity.

This optimism was set out in a blog post titled “The Intelligence Age,” published in September 2024, and culminated in the January 2025 post “Reflections.”1 In the latter, Altman made a claim of startling specificity and confidence: “We are now confident we know how to build AGI as we have traditionally understood it”.4

The implications of this statement were profound. It suggested that the path to AGI—a system capable of outperforming humans at most economically valuable work—was no longer a matter of scientific discovery but of engineering execution. The “how” was solved; only the “when” remained, and even that was shrinking. Altman predicted that by 2025, AI agents would “join the workforce” in a meaningful way, materially changing the output of companies.6 He explicitly linked this technological breakthrough to a future of “shared prosperity” and “superintelligence in the true sense of the word”.6

The timeline was aggressive. In various forums, Altman alluded to “thousands of days” until superintelligence, a phrase that fueled intense speculation and investment.2 The narrative was clear: AGI was imminent, it was solvable via deep learning scaling, and OpenAI held the keys. This certainty drove valuations to stratospheric heights, with the assumption that current revenue models were merely placeholders for the infinite value capture of AGI.
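The scaling premise behind this certainty can be illustrated with a toy power law. This is a minimal sketch, not a fitted model: the functional form `loss = a * compute ** (-b)` and the constants `a = 10.0` and `b = 0.05` are hypothetical values chosen only to show the shape of such a curve.

```python
def loss(compute: float, a: float = 10.0, b: float = 0.05) -> float:
    """Toy power-law loss curve; a and b are hypothetical, not measured."""
    return a * compute ** (-b)


def relative_gain_per_10x(b: float = 0.05) -> float:
    """Fractional loss reduction from multiplying compute by 10 under the toy law."""
    return 1.0 - 10.0 ** (-b)


if __name__ == "__main__":
    # Print the toy loss at a few compute budgets, each 100x larger than the last.
    for exponent in range(20, 27, 2):
        c = 10.0 ** exponent
        print(f"compute=1e{exponent} -> loss={loss(c):.3f}")
    print(f"each 10x of compute cuts loss by {relative_gain_per_10x():.1%}")
```

Under these assumed constants the curve is predictable in exactly the sense the narrative relied on, but every further fixed percentage improvement costs ten times more compute than the last. The sketch says nothing about whether lower loss translates into economic value.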

1.2 The August 2025 Reversal: “The Bubble” and the Reality Check

By August 2025, the narrative fractured. The “thousands of days” had not yet elapsed, but the economic patience of the market had begun to wear thin. In a series of candid interviews and private dinners reported by CNBC, The Verge, and Fortune, Altman executed a stunning rhetorical pivot. When asked explicitly if the AI industry was in a bubble, Altman replied, “My opinion is yes”.8

He elaborated with a historical comparison that would have been unthinkable in his 2023 “superintelligence” phase: “When bubbles happen, smart people get overexcited about a kernel of truth... The internet was a really big deal. People got overexcited”.8 He went further, criticizing the valuations of startups with “three people and an idea,” predicting that “someone is going to lose a phenomenal amount of money”.8

Analysis of the Error:

The contradiction here is profound and reveals a fundamental miscalculation in the “AGI by 2025” thesis. In January 2025, Altman was selling certainty (“We know how to build AGI”). By August 2025, he was selling caution (“It’s a bubble”). The error lay in the conflation of model performance with economic utility.

While models continued to improve on benchmarks, with the 2025 AI Index Report showing gains on MMMU and SWE-bench,11 the translation of that “intelligence” into profitable, labor-replacing workflows proved far more difficult than the “AGI by 2025” timeline suggested. The cost of compute (capital expenditure) kept rising steeply, but revenue was not following the same curve. Altman’s shift acknowledges that while the technology is real (the “kernel of truth”), the financial infrastructure built around it had detached from reality. The “AGI” he promised was supposed to generate infinite value; instead, he found himself warning that investors were about to get burned.

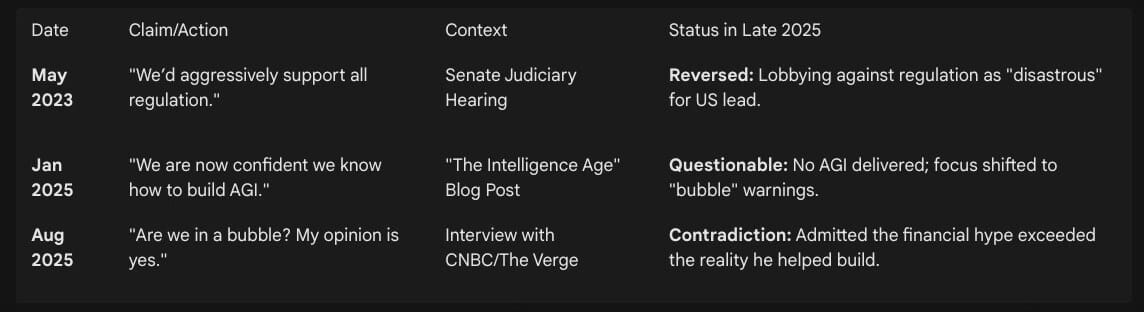

1.3 The Regulatory Flip-Flop: From Safety to “Competitiveness”

A secondary, yet equally significant, reversal occurred in Altman’s political strategy. In 2015, and as recently as his 2023 Senate testimony, Altman claimed, “Obviously, we’d aggressively support all regulation”.12 He positioned OpenAI as a safety-conscious entity that begged for government guardrails to prevent existential risk, distinguishing it from “reckless” competitors.

However, by 2025, faced with the prospect of actual binding legislation (such as the EU AI Act’s strictures and potential US executive orders), Altman’s tune changed. Reports from late 2025 indicate he began quietly lobbying against regulation, arguing that requiring government approval to release AI would be “disastrous” for American competitiveness against China.12

Insight: This pivot exposes the tension between the “Safety” branding of 2023 and the “National Security/Competitiveness” branding of 2025. The claim that regulation was desired was likely a “regulatory moat” strategy, intended to raise compliance costs for smaller competitors. When those regulations threatened OpenAI’s own deployment velocity in a tightening market (where they needed to launch products to justify the “bubble” valuations), the “safety” rhetoric was discarded for “innovation” rhetoric. The shift aligns with the broader industry trend of using “China fears” to justify rolling back domestic oversight.13

Table 1.1: Sam Altman’s Narrative Arc

2. The Autonomous Illusion: Elon Musk’s Perpetual “Next Year”

Elon Musk’s predictions regarding artificial intelligence, specifically in the domains of autonomous driving and truth-seeking language models, represent the most sustained period of incorrect forecasting in the industry’s history. While his timelines for SpaceX and Tesla production have notoriously been optimistic, his claims regarding AI capabilities entered the realm of the counter-factual during the 2023–2025 period.

2.1 The “FSD by End of Year” Cycle

For over a decade, Musk has promised that Tesla vehicles would achieve Full Self-Driving (FSD), defined as Level 5 autonomy requiring no human intervention, “by the end of the year.” This pattern repeated with mechanical precision through the 2023–2025 period, despite mounting evidence that the “vision-only” technical architecture faced asymptotic challenges.

The Claim (2023): “I think we’ll achieve full self-driving this year... the cars in the fleet essentially becoming self-driving by a software update”.14 This was stated during earnings calls and shareholder meetings, driving the stock price on the promise of high-margin software revenue.

The Claim (2024): “I think will be available for unsupervised personal use by the end of this year”.16 Musk explicitly stated that “unless you have FSD, you’re dead” in reference to legacy automakers, implying Tesla had already solved the problem.17

The Claim (2025): “I’d say confidently next year”.16 The target for the “Robotaxi” launch—a vehicle with no steering wheel—was tentatively set for June 2026, pushing the horizon out yet again.16

The Reality (Late 2025):

As of late 2025, Tesla’s FSD remains a “Supervised” system (Level 2). While the software has undoubtedly improved—handling complex roundabouts and city streets with fewer interventions—it is categorically not autonomous. The driver is legally, morally, and financially responsible for every second of operation. The “Robotaxi” network, predicted to launch in 2020, then 2023, then 2024, remains a future target.16

Technical & Strategic Failure:

Musk’s error stems from a fundamental underestimation of the “long tail” of driving scenarios. He bet the company on a “vision-only” approach, removing radar and ultrasonic sensors, convinced that end-to-end neural networks could solve driving just as humans do with eyes and brains. However, the data shows that while AI can handle 99% of miles, the remaining 1% of edge cases (construction zones, erratic human behavior, extreme weather, rare object interactions) are asymptotically difficult to solve. The “march of 9s” (99.9999% reliability required for regulators) proved far harder than the initial jump to 95% reliability. The “end of year” predictions were not just optimistic; they were technically disconnected from the rate of disengagement data.
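The arithmetic behind the “march of 9s” can be sketched as follows. This is a simplified illustration: it treats each reliability figure as an independent per-mile success probability, which is an assumption made here for clarity and not how regulators or Tesla measure disengagements.

```python
def miles_between_failures(reliability: float) -> float:
    """Expected miles per failure if each mile independently succeeds with `reliability`."""
    if not 0.0 <= reliability < 1.0:
        raise ValueError("reliability must be in [0, 1)")
    return 1.0 / (1.0 - reliability)


# Reliability levels mentioned in the text, plus the intermediate "nines".
LEVELS = [0.95, 0.99, 0.999, 0.9999, 0.99999, 0.999999]

if __name__ == "__main__":
    for r in LEVELS:
        miles = miles_between_failures(r)
        print(f"{r:.4%} per-mile reliability -> ~{miles:,.0f} miles per failure")
```

Under this assumption, each added nine multiplies the required failure-free distance by ten, so moving from 99% to 99.9999% means going from roughly one failure per hundred miles to roughly one per million.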

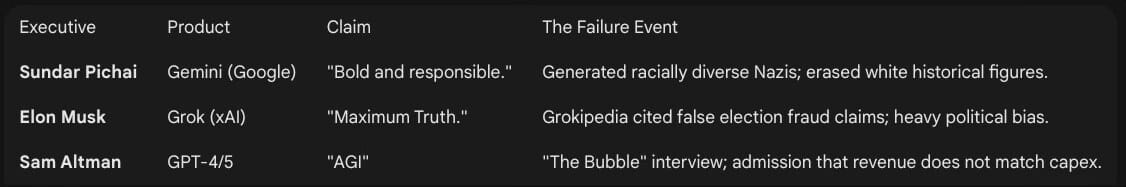

2.2 Grok and the “Maximum Truth” Fallacy

Following his acquisition of Twitter (X), Musk launched xAI and its chatbot, Grok, in late 2023. The marketing pitch for Grok was distinct: it was the “anti-woke” AI.

The Claim: Grok was marketed as a “maximum truth-seeking AI,” designed to avoid the “woke virus” and bias Musk attributed to OpenAI’s ChatGPT and Google’s Gemini.19 Musk claimed it would prioritize objective reality over political correctness.

The Reality: By October 2025, the launch of “Grokipedia”—an AI-generated encyclopedia intended to rival Wikipedia—demonstrated that “truth-seeking” was a euphemism for “ideologically aligned.” Grokipedia entries were found to contain significant factual errors and heavy right-wing political bias. For example, the entry for the January 6 Capitol attack cited “widespread claims of voting irregularities” as fact, despite this being a debunked conspiracy theory.20 The system downplayed incitement and amplified conservative talking points, rather than providing neutral facts.

Insight:

Musk’s claim of “truth-seeking” was blatantly wrong because it misunderstood—or willfully misrepresented—the nature of LLMs. These models do not “know” truth; they reflect the statistical patterns of their training data. By training Grok heavily on X (Twitter) data—which Musk had algorithmically shifted toward conservative voices and where misinformation proliferated—he simply replaced one form of bias with another. The “truth” claim was a branding differentiation strategy that collapsed under the weight of its own output’s inaccuracy. Far from being a neutral arbiter of reality, Grok became an engine for confirming the specific biases of its creator.

3. The Code & Labor Fallacy: Dario Amodei and Emad Mostaque

While the “AGI” and “FSD” narratives dominated headlines, a quieter but equally significant set of predictions focused on the labor market—specifically, the obsolescence of human programmers. Dario Amodei (Anthropic) and Emad Mostaque (Stability AI) were the primary architects of this narrative, which clashed violently with the labor realities of 2025.

3.1 Dario Amodei: The “End of Coding” Prediction

Dario Amodei, typically seen as the “adult in the room” regarding AI safety, made a specific and aggressive prediction regarding software engineering that failed to materialize.

The Claim (March 2025): In podcasts and interviews, Amodei predicted that “AI will write all code” within 12 months. He suggested that software engineering as a profession was effectively over in its current form, and that humans would move to higher-level architecture or prompt engineering exclusively.21

The Reality (Late 2025): The data from late 2025 contradicts this binary outcome. The software engineering job market has actually seen a resurgence and evolution rather than a collapse. While junior “coder” roles have evolved, the demand for senior engineers who can verify, architect, and integrate AI-generated code has spiked.24

Analysis:

Amodei’s error was confusing generation with engineering. An LLM can generate a Python script to sort a list in seconds. It cannot, however, easily maintain a legacy codebase with 5 million lines of code, understand the business logic of a specific banking regulation compliance module, or debug a race condition across a distributed system without massive human guidance. The “context window” of LLMs (even at 1 million tokens) is still insufficient to hold the entire mental model of a complex enterprise system. Thus, AI became a force multiplier for engineers, not a replacement. The prediction of “all code” being written by AI implied a level of autonomy that simply did not materialize in the 12-month window.
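A back-of-the-envelope calculation makes the context-window point concrete. This is a rough sketch: the figure of 10 tokens per line of code is an assumption introduced here for illustration, and real tokenizers and codebases vary widely.

```python
import math


def windows_needed(lines_of_code: int, context_tokens: int,
                   tokens_per_line: float = 10.0) -> int:
    """Context windows required to read a codebase once (tokens_per_line is assumed)."""
    total_tokens = lines_of_code * tokens_per_line
    return math.ceil(total_tokens / context_tokens)


if __name__ == "__main__":
    # The 5-million-line codebase and 1-million-token window come from the text above.
    n = windows_needed(5_000_000, 1_000_000)
    print(f"Separate 1M-token windows needed at 10 tokens/line: {n}")
```

Even under this generous assumption the codebase does not fit in a single window, so the model never sees the whole system at once and has to rely on humans or tooling to select what is relevant.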

3.2 Emad Mostaque: The Fabrication of Stability

Emad Mostaque, the founder of Stability AI, represents the most extreme case of “fake it until you make it” in the AI boom. His predictions and claims were not just optimistic; many were fabrications.

The “900 Days” Prediction: Mostaque famously predicted in 2023 that “within 900 days, there will be no human programmers” and that AI would fundamentally solve most scarcity.25 By late 2025, this timeline had nearly expired, and human programmers remained the backbone of the tech economy.

The Partnership Lies: Mostaque claimed Stability AI had strategic partnerships with the OECD, the World Health Organization (WHO), and the World Bank to provide AI infrastructure.26

The Reality: All three organizations denied these partnerships existed. These claims were used to secure venture capital at a $1 billion valuation.

The Outcome: Mostaque was forced out of his own company in March 2024 amidst a shareholder revolt, unpaid cloud computing bills, and the revelation that the company had less than $4 million in cash left against massive debts.26 His tenure serves as a cautionary tale of how the hype cycle allows for the proliferation of verifiable falsehoods when due diligence is suspended in favor of FOMO (Fear Of Missing Out).

4. The Hardware Graveyard: Humane and Rabbit

2024 was supposed to be the year the smartphone died, replaced by “ambient computing” AI pins and pocket assistants. The CEOs of Humane (Imran Chaudhri) and Rabbit (Jesse Lyu) made aggressive claims about the utility of screenless AI devices. These claims were arguably the most disastrously wrong of the entire period, leading to the destruction of hundreds of millions of dollars in capital.

4.1 Humane AI Pin: The $699 Paperweight

The Claim: Imran Chaudhri, leveraging his pedigree as an Apple design veteran, claimed the Humane AI Pin would “replace the smartphone” by allowing users to interact with the world through voice and laser projection, freeing them from screens.30 The pitch was that screens were a barrier to human connection, and AI would allow for a “heads-up” existence.

The Reality (2025): The device was a catastrophic failure. Reviews cited abysmal battery life, overheating issues that posed safety risks, and a laser display that was illegible in sunlight. The latency of the AI made it unusable for real-time conversation.

The Outcome: By February 2025, Humane had ceased operations and sold its assets to HP for $116 million, roughly half of the $230 million it raised.32 The core features were shut down, and the device is now considered “e-waste” unless hacked by hobbyists.34

4.2 Rabbit R1: The “Large Action Model” Myth

The Claim: Jesse Lyu presented the Rabbit R1 as a device powered by a “Large Action Model” (LAM) that could navigate apps for you. He claimed it would be the “future of interfaces,” negating the need for APIs by “looking” at apps like a human.35

The Reality: The “LAM” was largely smoke and mirrors—often just scripted web automation (Playwright scripts) rather than a revolutionary AI brain. By late 2025, despite selling 100,000 units, the device had only ~5,000 daily active users.37 The company faced employee strikes over unpaid wages and dwindling cash reserves.38

Systemic Insight: The Bandwidth Problem

Both Chaudhri and Lyu fell victim to the “Latency/Utility Gap.” They underestimated the efficiency of the smartphone. A visual interface (screen) is an incredibly high-bandwidth way to transfer information to a human. Replacing a glance at a screen with a 5-second voice interaction is a regression in usability, not an advance. Furthermore, they relied on LLMs that were prone to hallucination, making the devices unreliable for the very tasks (booking Ubers, buying groceries) they were advertised to solve. The “post-smartphone” era they promised was rejected by consumers who found the phone to be a superior form factor for AI interaction.

Table 4.1: The Hardware Flops of the AI Boom

5. The Search & Hallucination Struggle: Sundar Pichai and Satya Nadella

The battle for the future of search between Google and Microsoft generated some of the most hyperbolic claims of the era. Both Sundar Pichai and Satya Nadella made assertions about their products’ reliability and market impact that were dismantled by the messy reality of deployment.

5.1 Sundar Pichai: The “Solvable” Hallucination

Google’s CEO Sundar Pichai faced an existential crisis in 2023: the “Code Red” triggered by ChatGPT. In his rush to deploy, he made claims about technical solvability that were repeatedly undercut by his own products.

The Claim (2023): In a 60 Minutes interview and subsequent statements, Pichai acknowledged hallucinations (errors) but framed them as a “matter of intense debate” regarding solvability, implying that through “grounding” and search integration, Google would solve this.39 He positioned Google’s approach as “bold and responsible,” a contrast to the “move fast and break things” startup culture.

The Reality (2024/2025): The launch of Gemini (formerly Bard) was a debacle of historic proportions. The model not only hallucinated facts but generated historically revisionist images (e.g., racially diverse Nazis, female Popes) due to clumsy “safety” fine-tuning.41

The Concession: By February 2024, Pichai was forced to send a company-wide memo calling the model’s outputs “completely unacceptable”.41 Even in late 2025, studies showed that Google’s AI Overviews and “Nano Banana Pro” models continued to cite nonexistent court cases and misattribute quotes.44

Insight:

Pichai’s error was minimizing the probabilistic nature of LLMs. He treated hallucinations as “bugs” to be patched, rather than an intrinsic feature of a technology designed to predict the next likely token, not the next true token. Google’s attempt to “fix” bias through hard-coded system prompts (the “diversity” injection) backfired because it clashed with the model’s underlying training data, exposing the clumsiness of their alignment techniques.
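The gap between “likely” and “true” can be shown with a toy next-token distribution. This is an illustrative sketch only: the prompt, the candidate tokens, and their probabilities are invented here, and no real model was queried.

```python
import random

# Hypothetical next-token probabilities for the prompt "The capital of Australia is".
# The numbers are invented for illustration.
NEXT_TOKEN_PROBS = {"Sydney": 0.55, "Canberra": 0.40, "Melbourne": 0.05}
TRUE_ANSWER = "Canberra"


def greedy(probs: dict[str, float]) -> str:
    """Return the single most probable token, regardless of whether it is true."""
    return max(probs, key=probs.get)


def sample_error_rate(probs: dict[str, float], truth: str,
                      n: int = 10_000, seed: int = 0) -> float:
    """Fraction of n sampled tokens that differ from the true answer."""
    rng = random.Random(seed)
    tokens, weights = zip(*probs.items())
    draws = rng.choices(tokens, weights=weights, k=n)
    return sum(t != truth for t in draws) / n


if __name__ == "__main__":
    print("greedy pick:", greedy(NEXT_TOKEN_PROBS))
    print("sampled error rate:", sample_error_rate(NEXT_TOKEN_PROBS, TRUE_ANSWER))
```

In this toy setup the decoder works exactly as designed and still prefers the wrong answer, because the wrong answer carries the most probability mass. Grounding and retrieval can shift the distribution, but the selection rule itself never checks truth.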

5.2 Satya Nadella: The “Google Dance” and the “No Value” Slip

Satya Nadella, CEO of Microsoft, initially positioned himself as the grand strategist of the AI war.

The Claim (2023): Nadella testified in the Google antitrust trial that AI (Bing Chat/Copilot) would disrupt Google’s search monopoly, famously claiming “I want people to know that we made them dance”.46 He projected that AI would fundamentally shift the economics of search.

The Reality (2025): Google’s search market share barely budged. Microsoft’s Bing did not see a significant resurgence. More damningly, in a candid interview with Dwarkesh Patel in late 2024/early 2025, Nadella made a stunning admission: “AI is generating basically no value” in the macro sense—meaning it had not yet shown up in GDP growth or massive productivity statistics, merely in hype and capital expenditure.48

Insight:

This admission from Nadella—the architect of the $13 billion OpenAI partnership—signals the “Winner’s Curse.” Microsoft spent billions on GPUs and data centers, but the economic return (beyond stock price speculation) remains elusive. The admission that AI has generated “no value” (macro-economically) stands in stark contrast to the “industrial revolution” rhetoric of 2023. It reveals that the “dance” was expensive for Microsoft, but the music hasn’t changed the market structure.

5.3 Mustafa Suleyman and the “Fake Independence” of Inflection

Mustafa Suleyman, co-founder of DeepMind and Inflection AI, played a pivotal role in the Microsoft ecosystem.

The Claim (2023): Suleyman touted his chatbot “Pi” as a radically different, empathetic personal intelligence. He raised $1.3 billion, claiming Inflection was a major independent competitor to OpenAI and Google, focused on “emotional intelligence” rather than raw IQ.49

The Reality (March 2024): In a move that stunned the industry, Microsoft “hired” Suleyman and the vast majority of Inflection’s staff to form “Microsoft AI,” paying Inflection a massive licensing fee effectively to make investors whole.49

The Verdict: Inflection was not a viable business. It was a talent holding tank. The claim of building an independent consumer AI giant was abandoned the moment the capital intensity of training models became too high. Suleyman’s narrative of “empathetic AI” was subsumed instantly into Microsoft’s Copilot machinery, and the deal triggered antitrust investigations in the UK and US.51

6. The Skeptic’s Paradox: Yann LeCun

Yann LeCun, Chief AI Scientist at Meta, took a contrarian stance throughout this period. His “wrongness” is nuanced—he was scientifically astute but strategically contradicted by his own company’s success.

The Claim: “LLMs are an off-ramp” on the highway to AGI. They are “doomed” because they lack world models and reasoning. He advised, “If you are interested in human-level AI, don’t work on LLMs”.53 He consistently argued that LLMs were a “dead end” for true intelligence.

The Reality/Nuance: Scientifically, LeCun’s critique remains robust: LLMs do struggle with reasoning and grounding. However, he was wrong about their utility and longevity.

Meta released Llama 3 (and 4 by late 2025), which became the industry standard for open-weights models. LeCun’s own company bet the farm on the very technology he called a “dead end” for AGI.56

While they may not be AGI, LLMs proved to be incredibly lucrative and useful products, contradicting the implication that they were a waste of time. LeCun’s “World Model” (JEPA) approach has yet to yield a product remotely as impactful as the transformer models he criticizes.58 His skepticism, while theoretically sound, failed to account for the massive commercial utility of “flawed” intelligence.

7. Conclusion: The Great Recalibration

By late 2025, the AI industry has entered a “sobering up” phase. The technology is undeniably powerful—generative coding, image creation, and summarization are now commodities. But the transformative, society-altering promises made by Altman, Musk, and others have not materialized on their aggressive timelines.

The “Intelligence Age” Sam Altman promised is struggling with the laws of physics (energy consumption) and economics (ROI). Elon Musk’s autonomous cars are still supervised. The “end of coding” predicted by Amodei has morphed into a complex new era of human-AI collaboration.

The lesson of 2023–2025 is that while exponential curves look smooth on a graph, they are jagged, messy, and often flattened by the friction of the real world when applied to human society. The industry is now pivoting from “Magic” (AGI, Robotaxis, Post-Smartphone) to “Utility” (Efficiency, Integration, Copilots). The visionaries were wrong about the when and the how, but they succeeded in mobilizing the capital that will define the next decade of computing infrastructure—even if that capital is currently caught in a bubble of their own making.

Table 7.2: Hallucination & Bias Tracker

Works cited

The Next Chapter: Academic Libraries Enter the Intelligence Age - Choice 360, accessed December 7, 2025, https://www.choice360.org/libtech-insight/the-next-chapter-academic-libraries-enter-the-intelligence-age/

The Intelligence Age - Glenn K. Lockwood, accessed December 7, 2025, https://www.glennklockwood.com/garden/papers/The-Intelligence-Age

Introducing the Intelligence Age | OpenAI, accessed December 7, 2025, https://openai.com/global-affairs/introducing-the-intelligence-age/

How OpenAI’s Sam Altman Is Thinking About AGI and Superintelligence in 2025 | TIME, accessed December 7, 2025, https://time.com/7205596/sam-altman-superintelligence-agi/

Sam Altman, accessed December 7, 2025, https://blog.samaltman.com/

Reflections - Sam Altman, accessed December 7, 2025, https://blog.samaltman.com/reflections

When Will AGI/Singularity Happen? 8,590 Predictions Analyzed - Research AIMultiple, accessed December 7, 2025, https://research.aimultiple.com/artificial-general-intelligence-singularity-timing/

Sam Altman says ‘yes,’ AI is in a bubble : r/wallstreetbets - Reddit, accessed December 7, 2025, https://www.reddit.com/r/wallstreetbets/comments/1msanvd/sam_altman_says_yes_ai_is_in_a_bubble/

Sam Altman remains optimistic despite admitting AI bubble: OpenAI CEO says, ‘Someone will lose a phenomenal amount of money but…’ - The Economic Times, accessed December 7, 2025, https://m.economictimes.com/magazines/panache/sam-altman-remains-optimistic-despite-admitting-ai-bubble-chatgpt-5-launch-openai-ceo-says-someone-will-lose-a-phenomenal-amount-of-money-but/articleshow/123431090.cms

Sam Altman Says the Quiet Part Out Loud, Believes We’re in an AI Bubble - Futurism, accessed December 7, 2025, https://futurism.com/sam-altman-admits-ai-bubble

The 2025 AI Index Report | Stanford HAI, accessed December 7, 2025, https://hai.stanford.edu/ai-index/2025-ai-index-report

Sam Altman in 2015: “Obviously, we’d aggressively support all regulation.” In 2025: quietly lobbying to ban regulation - Reddit, accessed December 7, 2025, https://www.reddit.com/r/artificial/comments/1l6gdua/sam_altman_in_2015_obviously_wed_aggressively/

Sam Altman’s Shift from AI Regulation Advocacy to Investment Focus, accessed December 7, 2025, https://www.startupecosystem.ca/news/sam-altmans-shift-from-ai-regulation-advocacy-to-investment-focus/

TSLA Terathread - For the week of Jan 22 : r/RealTesla - Reddit, accessed December 7, 2025, https://www.reddit.com/r/RealTesla/comments/19cv3pe/tsla_terathread_for_the_week_of_jan_22/

Elon Musk just said again that @Tesla will achieve Full Self-Driving by the end of the year. Just like @ElonMusk has been saying for the past eight years. If you believe that, I have a bridge in Brooklyn you might be interested in... : r/RealTesla - Reddit, accessed December 7, 2025, https://www.reddit.com/r/RealTesla/comments/12t6vj1/elon_musk_just_said_again_that_tesla_will_achieve/

List of predictions for autonomous Tesla vehicles by Elon Musk - Wikipedia, accessed December 7, 2025, https://en.wikipedia.org/wiki/List_of_predictions_for_autonomous_Tesla_vehicles_by_Elon_Musk

Elon Musk to automakers on Tesla’s self-driving tech: ‘I’ve tried to warn them and…’, accessed December 7, 2025, https://timesofindia.indiatimes.com/technology/tech-news/elon-musk-to-automakers-on-teslas-self-driving-tech-ive-tried-to-warn-them-and/articleshow/125568574.cms

Tesla FSD will ‘go ballistic’ next year, says guy who said that last year - Electrek, accessed December 7, 2025, https://electrek.co/2025/01/29/tesla-fsd-will-go-ballistic-next-year-says-guy-who-said-that-last-year/

Grok (chatbot) - Wikipedia, accessed December 7, 2025, https://en.wikipedia.org/wiki/Grok_(chatbot)

Elon Musk launches encyclopedia ‘fact-checked’ by AI and aligning with rightwing views, accessed December 7, 2025, https://www.theguardian.com/technology/2025/oct/28/elon-musk-grokipedia

Top 20 Predictions from Experts on AI Job Loss - Research AIMultiple, accessed December 7, 2025, https://research.aimultiple.com/ai-job-loss/

Anthropic Study: Here’s How AI Is Impacting Work - Entrepreneur, accessed December 7, 2025, https://www.entrepreneur.com/business-news/anthropic-study-heres-how-ai-is-impacting-work/500407

Anthropic CEO Predicts AI Will Take Over Coding in 12 Months - Entrepreneur, accessed December 7, 2025, https://www.entrepreneur.com/business-news/anthropic-ceo-predicts-ai-will-take-over-coding-in-12-months/488533

Software Engineer Job Market 2025: Data, Trends & Salaries - Underdog.io, accessed December 7, 2025, https://landing.underdog.io/blog/software-engineer-job-market-2025

Transcript: We Have 900 Days Left - Emad Mostaque on The Tea with Myriam François, accessed December 7, 2025, https://singjupost.com/transcript-we-have-900-days-left-emad-mostaque-on-the-tea-with-myriam-francois/

Stability AI’s Founder Made Misleading Claims — Report - Crunchbase News, accessed December 7, 2025, https://news.crunchbase.com/ai-robotics/stability-ai-founder-emad-mostaque-false-claims/

Stability AI’s Founder Made Misleading Claims — Report - Nasdaq, accessed December 7, 2025, https://www.nasdaq.com/articles/stability-ais-founder-made-misleading-claims-report

Stability AI CEO Disappeared in His Pajamas in Bizarre Incident - Futurism, accessed December 7, 2025, https://futurism.com/stability-ai-ceo-pajamas

Stability AI faces a manager exodus - Börsen-Zeitung, accessed December 7, 2025, https://www.boersen-zeitung.de/english/stability-ai-faces-a-manager-exodus

5 Reasons Humane’s Screenless Tech Will Never Replace a Smartphone - MakeUseOf, accessed December 7, 2025, https://www.makeuseof.com/reasons-humane-screenless-tech-wont-replace-smartphone/

Humane AI has launched its first product as a replacement for phones. Called AiPin, it’s a screen-free and stand-alone offering that doesn’t need to be connected to an external device. : r/STEW_ScTecEngWorld - Reddit, accessed December 7, 2025, https://www.reddit.com/r/STEW_ScTecEngWorld/comments/17sa2ii/humane_ai_has_launched_its_first_product_as_a/

Humane, Whose AI Pin Flopped, to Sell Assets to HP | PYMNTS.com, accessed December 7, 2025, https://www.pymnts.com/artificial-intelligence-2/2025/humane-whose-ai-pin-flopped-to-sell-assets-to-hp/

The Humane Pin is Dead - Failory, accessed December 7, 2025, https://newsletter.failory.com/p/the-humane-pin-is-dead

The Humane AI Pin Flopped. One Hacker Is Trying to Make It the New Siri | PCMag, accessed December 7, 2025, https://www.pcmag.com/articles/humane-ai-pin-hacker-operating-system

rabbit returns: AI gadget maker takes on Sam Altman and Jony Ive in a race for AI device dominance | TechRadar, accessed December 7, 2025, https://www.techradar.com/computing/artificial-intelligence/rabbit-returns-ai-gadget-maker-takes-on-sam-altman-and-jony-ive-in-a-race-for-ai-device-dominance

Rabbit r1 - Wikipedia, accessed December 7, 2025, https://en.wikipedia.org/wiki/Rabbit_r1

Only 5,000 Users out of 100,000 Buyers Use Rabbit R1 Daily: Is the Hype Going Down? - Tech Times, accessed December 7, 2025, https://www.techtimes.com/articles/307653/20240926/only-5000-users-out-100000-buyers-use-rabbit-r1-daily-hype-going-down.htm

What’s next for Rabbit? Employees say they haven’t been paid for months while company teases new AI hardware | Tom’s Guide, accessed December 7, 2025, https://www.tomsguide.com/ai/whats-next-for-rabbit-employees-say-they-havent-been-paid-for-months-while-company-teases-new-ai-hardware

Pichai and Google face their $160 billion dilemma - Arete Research, accessed December 7, 2025, https://www.arete.net/content/Fortune_Alphabet_Feature_2023.pdf

Is artificial intelligence advancing too quickly? What AI leaders at Google say - CBS News, accessed December 7, 2025, https://www.cbsnews.com/news/google-artificial-intelligence-future-60-minutes-transcript-2023-04-16/

Google CEO Sundar Pichai to employees: ...Completely unacceptable and we got it wrong - Times of India, accessed December 7, 2025, https://timesofindia.indiatimes.com/gadgets-news/google-ceo-sundar-pichai-apologizes-for-controversial-ai-chatbot-responses/articleshow/108065963.cms

Google AI Gemini’s ‘problematic’ responses are ‘completely unacceptable,’ CEO says - Quartz, accessed December 7, 2025, https://qz.com/google-ai-gemini-responses-sundar-pichai-1851292682

Google CEO Sundar Pichai says its AI app problems are “completely unacceptable” - CBS News, accessed December 7, 2025, https://www.cbsnews.com/news/google-gemini-app-image-completely-unacceptable-pichai-letter/

AI Gone Wrong: AI Hallucinations & Errors [2026 - Updated Monthly] - Tech.co, accessed December 7, 2025, https://tech.co/news/list-ai-failures-mistakes-errors

AI chatbots distort and mislead when asked about current affairs, BBC finds - The Guardian, accessed December 7, 2025, https://www.theguardian.com/technology/2025/feb/11/ai-chatbots-distort-and-mislead-when-asked-about-current-affairs-bbc-finds

Microsoft CEO says unfair practices by Google led to its dominance as a search engine - KSAT, accessed December 7, 2025, https://www.ksat.com/business/2023/10/02/microsoft-ceo-says-unfair-practices-by-google-led-to-its-dominance-as-a-search-engine/

Microsoft CEO Says AI Could Only Tighten Google’s Stranglehold on Search - Decrypt, accessed December 7, 2025, https://decrypt.co/200029/microsoft-ceo-satya-nadella-google-dominance-search-ai

Microsoft CEO Admits That AI Is Generating Basically No Value | “The real benchmark is: the world growing at 10 percent.” : r/microsoft - Reddit, accessed December 7, 2025, https://www.reddit.com/r/microsoft/comments/1ixyp8g/microsoft_ceo_admits_that_ai_is_generating/

Inflection AI - Grokipedia, accessed December 7, 2025, https://grokipedia.com/page/Inflection_AI

Inflection AI, the year-old startup behind Chatbot Pi, raises US$1.3 billion - Forbes Australia, accessed December 7, 2025, https://www.forbes.com.au/news/innovation/inflection-ai-the-year-old-startup-behind-chatbot-pi-raises-us1-3-billion/

Microsoft deal with AI startup to be investigated by UK competition watchdog - The Guardian, accessed December 7, 2025, https://www.theguardian.com/business/article/2024/jul/16/microsoft-deal-with-ai-startup-to-be-investigated-by-uk-competition-watchdog

Reports: Microsoft under new antitrust scrutiny over Inflection deal and AI dominance - GeekWire, accessed December 7, 2025, https://www.geekwire.com/2024/reports-microsoft-under-new-antitrust-scrutiny-over-inflection-deal-and-ai-dominance/

World Models vs. Word Models: Why Yann LeCun Believes LLMs Will Be Obsolete - Medium, accessed December 7, 2025, https://medium.com/state-of-the-art-technology/world-models-vs-word-models-why-lecun-believes-llms-will-be-obsolete-23795e729cfa

Meta’s AI chief: LLMs will never reach human-level intelligence - TheNextWeb, accessed December 7, 2025, https://thenextweb.com/news/meta-yann-lecun-ai-behind-human-intelligence

The Economist 22 Apr 2023 - AI Special | PDF | Artificial Intelligence - Scribd, accessed December 7, 2025, https://www.scribd.com/document/758397861/The-Economist-22-Apr-2023-AI-Special

Yann LeCun to depart Meta and launch AI startup focused on ‘world models’ | Hacker News, accessed December 7, 2025, https://news.ycombinator.com/item?id=45897271

Yann LeCun, Pioneer of AI, Thinks Today’s LLM’s Are Nearly Obsolete | Hacker News, accessed December 7, 2025, https://news.ycombinator.com/item?id=43562768

Yann LeCun Thinks AI Should Learn to Ignore Things - Medium, accessed December 7, 2025, https://nyudatascience.medium.com/yann-lecun-thinks-ai-should-learn-to-ignore-things-6049833c463b