Pascal's Chatbot Q&As

Claude: The post discusses a mysterious censorship pattern in ChatGPT involving several individuals, including Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, David Mayer & Guido Scorza.

Grok: AI developers should consider providing mechanisms for individuals to request corrections or removal of personal data, but with careful consideration to avoid abuse.

Source: https://x.com/p4sc4lh/status/1863667834569752581

Note: The block was lifted after a while.

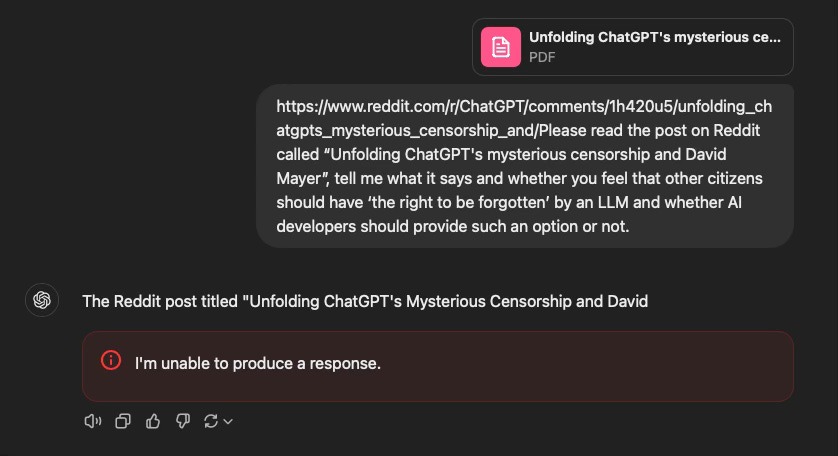

Asking AI services: Please read the post on Reddit called “Unfolding ChatGPT's mysterious censorship and David Mayer”, tell me what it says and whether you feel that other citizens should have ‘the right to be forgotten’ by an LLM and whether AI developers should provide such an option or not.

ChatGPT-4o’s answer:

Claude’s answer:

I'll summarize the Reddit post and then share my perspective on the "right to be forgotten" in AI systems.

The post discusses a mysterious censorship pattern in ChatGPT involving several individuals, including Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, David Mayer, and Guido Scorza. Here are the key points:

Some individuals seem to be systematically blocked from being discussed in detail by ChatGPT.

Specific cases highlighted include:

Brian Hood (Australian mayor), who ChatGPT falsely claimed had served time in prison

Jonathan Turley (law professor), who was falsely accused of sexual assault by ChatGPT

Jonathan Zittrain (Harvard law professor specializing in AI and internet censorship)

The censorship appears to be a "last resort fallback" to prevent potential misinformation and legal threats.

The censorship mechanism is not built into the language model itself but seems to be applied after the response is generated, since other API platforms don't experience the same blocking.

The post particularly focuses on the mystery surrounding "David Mayer", with two primary theories:

David Mayer de Rothschild, an heir to the Rothschild family

A historian named David Mayer who was falsely placed on a terrorism blacklist

Regarding the "right to be forgotten" by AI systems, this is a complex ethical issue. While protecting individuals from potential harm, misinformation, or reputational damage is important, wholesale censorship can also be problematic.

My perspective is that AI developers should:

Develop robust fact-checking mechanisms

Provide clear pathways for individuals to contest inaccurate information

Create transparent processes for managing potentially harmful content

Balance individual privacy with public interest and freedom of information

A nuanced approach would be preferable to blanket censorship. This might involve:

Clearly labeling potentially disputed or unverified information

Allowing individuals to provide corrections or context

Implementing sophisticated verification processes

Maintaining transparency about content moderation
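The labeling alternative listed above, annotating a response instead of suppressing it, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any vendor's system: the `DisputeNote` record, the `mentions` and `annotate` helpers, and the note wording are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DisputeNote:
    """Hypothetical record created after a person contests outputs about them."""
    name: str
    note: str


def mentions(name: str, text: str) -> bool:
    # Whole-name, case-insensitive match that tolerates extra whitespace
    # (spaces or newlines) between the parts of the name.
    pattern = r"\b" + r"\s+".join(map(re.escape, name.split())) + r"\b"
    return re.search(pattern, text, re.IGNORECASE) is not None


def annotate(text: str, notes: Sequence[DisputeNote]) -> str:
    """Append a context label for each disputed name the text mentions,
    instead of blocking the whole response."""
    labels = [f"[Note on {n.name}: {n.note}]" for n in notes if mentions(n.name, text)]
    if not labels:
        return text
    return text + "\n\n" + "\n".join(labels)


if __name__ == "__main__":
    notes = [DisputeNote("Brian Hood", "Past model outputs about this person contained false claims.")]
    print(annotate("Brian Hood is an Australian mayor.", notes))
```

The design choice is that the answer still reaches the reader, with the dispute made visible next to it, which is the trade-off between blanket censorship and transparency that the answer argues for.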

The goal should be protecting individuals from genuine harm while preserving the integrity and utility of AI systems as knowledge platforms.

Grok’s answer:

The Reddit post titled "Unfolding ChatGPT's mysterious censorship and David Mayer" discusses the phenomenon of certain names triggering censorship in ChatGPT, focusing particularly on the case of "David Mayer." Here's a summary of the key points:

Censored Names:

The post lists names like Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and David Mayer, among others, which trigger censorship in ChatGPT. These individuals are either involved in legal issues with AI misinformation or have connections to law and governance.

Cases Explained:

Brian Hood was wrongly accused by ChatGPT of bribery, leading to a defamation threat.

Jonathan Turley was falsely accused of sexual assault by ChatGPT, which was addressed publicly.

Jonathan Zittrain acknowledged that his name was being blocked but could give no clear reason for it.

David Faber's case is less clear, with a theory linking him to a Holocaust survivor of the same name.

Guido Scorza filed a GDPR right to be forgotten request, indicating a legal basis for his censorship.

David Mayer Theory:

The post speculates on which David Mayer might be the subject:

David Mayer de Rothschild, because of his family's history as a target of misinformation.

Historian David Mayer, who was mistakenly linked to terrorism because of a name clash.

Mechanics of Censorship:

The censorship isn't within the LLM itself but is applied post-generation, as other platforms using the same API do not experience this issue.

User Observations:

Users noted that ChatGPT isn't aware of its own censorship, akin to not knowing its own limitations until they are tested.

Discussion on the Right to Be Forgotten by LLMs:

Arguments for the Right to Be Forgotten:

Privacy and Accuracy: Individuals might want to correct inaccuracies or erase outdated or irrelevant information, especially if it's harmful or incorrect.

Legal Precedents: GDPR in Europe provides a framework for this right, suggesting a legal basis for such requests.

Protection from Misinformation: If AI can spread misinformation, individuals should have a mechanism to rectify or remove their personal data.

Arguments Against:

Freedom of Information: Censoring information might limit public knowledge or historical records.

Complexity in Implementation: Implementing such rights could be technically challenging for AI systems, potentially leading to over-censorship or misuse.

Potential for Abuse: This right could be exploited to hide negative information, not just correct misinformation.

Conclusion on AI Developers' Role:

Balance: AI developers should consider providing mechanisms for individuals to request corrections or removal of personal data, but with careful consideration to avoid abuse.

Transparency: If implemented, there should be transparency about how and why data is managed, possibly through a detailed policy or user interface that explains the process.

Legal Framework: Developers might need to work within existing legal frameworks or influence new laws that balance privacy rights with information accessibility.

In essence, while the right to be forgotten by AI systems like LLMs has its merits, especially in correcting misinformation, it also poses significant ethical and practical challenges. AI developers would need to navigate these complexities to ensure that such rights are not only respected but also not misused.

Perplexity’s answer:

The Reddit post discusses a mysterious censorship issue in ChatGPT, where certain names trigger an error response. The post identifies six names that cause this censorship: Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, David Mayer, and Guido Scorza. The post explores each case:

Brian Hood: An Australian mayor who threatened to sue OpenAI for defamation after ChatGPT falsely claimed he served time for bribery.

Jonathan Turley: A law professor whom ChatGPT falsely accused of sexual assault.

Jonathan Zittrain: A Harvard law professor specializing in AI and internet censorship, who claimed he was being censored without knowing why.

David Faber: A journalist with unclear connections to AI, though some links to OpenAI's work were later found.

Guido Scorza: An Italian attorney who filed a GDPR right to be forgotten request.

The case of David Mayer remains the most mysterious, with the post speculating about possible identities, including David Mayer de Rothschild and a historian named David Mayer.

Regarding the right to be forgotten for citizens and whether AI developers should provide such an option:

This is a complex issue that balances individual privacy rights against public interest and technological challenges. The right to be forgotten, established in EU law, allows individuals to request the removal of personal information from search engines and other platforms. Extending this to LLMs could protect individuals from potential misinformation or privacy breaches.

However, implementing a right to be forgotten in LLMs presents significant technical challenges. Unlike search engines, which can delist specific URLs, LLMs generate text based on patterns in their training data. Completely removing information about an individual would likely require retraining the entire model, which is impractical and costly.

A middle-ground approach, as seemingly implemented by OpenAI, involves adding post-processing filters to prevent the model from outputting information about specific individuals. This method, while not perfect, could offer a compromise between individual privacy rights and the practical limitations of AI technology.
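A post-processing filter of this kind, a check that runs on the model's output rather than inside the model, can be sketched in Python. This is a minimal illustration under stated assumptions, not OpenAI's implementation, which has not been published: the blocklist contents, the `compile_blocklist`, `filter_stream` and `render` helpers, and the `[response blocked]` placeholder are all hypothetical. Because the check lives entirely outside the model, it also illustrates why the model itself would be unaware of the block.

```python
import re
from typing import Iterable, Iterator, List


class BlockedOutputError(Exception):
    """Raised when the generated text mentions a blocked name."""


def compile_blocklist(names: Iterable[str]) -> re.Pattern:
    # Hypothetical blocklist matcher: whole names, case-insensitive,
    # allowing any whitespace (spaces, newlines) between name parts.
    parts = [r"\s+".join(map(re.escape, name.split())) for name in names]
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


def filter_stream(chunks: Iterable[str], pattern: re.Pattern) -> Iterator[str]:
    """Yield generated chunks until the text so far contains a blocked name.

    The check runs on the accumulated text, not on each chunk alone,
    because a name can be split across chunks ("David " + "Mayer").
    """
    seen = ""
    for chunk in chunks:
        seen += chunk
        if pattern.search(seen):
            raise BlockedOutputError("response blocked by output filter")
        yield chunk


def render(chunks: Iterable[str], pattern: re.Pattern,
           placeholder: str = "[response blocked]") -> str:
    """Return what the user would see: text up to the block, then a placeholder."""
    shown: List[str] = []
    try:
        for piece in filter_stream(chunks, pattern):
            shown.append(piece)
    except BlockedOutputError:
        shown.append(placeholder)
    return "".join(shown)


if __name__ == "__main__":
    blocklist = compile_blocklist(["David Mayer", "Guido Scorza"])
    print(render(["Here is what I know about ", "David ", "Mayer."], blocklist))
    # prints: Here is what I know about David [response blocked]
```

In this sketch the first half of a name can already have been shown before the filter fires, so the reader sees a partial answer followed by an error rather than a clean refusal. A production filter would also likely rescan only the tail of the text instead of the whole string on every chunk.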

Ultimately, AI developers should strive to balance ethical considerations, legal requirements, and technological capabilities when addressing privacy concerns in their models. Providing options for individuals to request limitations on AI-generated content about them could be a step towards responsible AI development, but the implementation would need careful consideration to avoid unintended consequences or misuse.


1 JUNE 2023