- Pascal's Chatbot Q&As

- Posts

- Attackers can exploit these latency variations to infer sensitive information, including: Training data membership (e.g., whether a particular input was part of the training set).

Attackers can exploit these latency variations to infer sensitive information, including: Training data membership (e.g., whether a particular input was part of the training set).

GateBleed can serve as a forensic tool for litigants to demonstrate improper model training practices, shifting the burden of proof onto AI companies.

GateBleed and the Growing Risks of Hardware-Based AI Privacy Attacks

by ChatGPT-4o

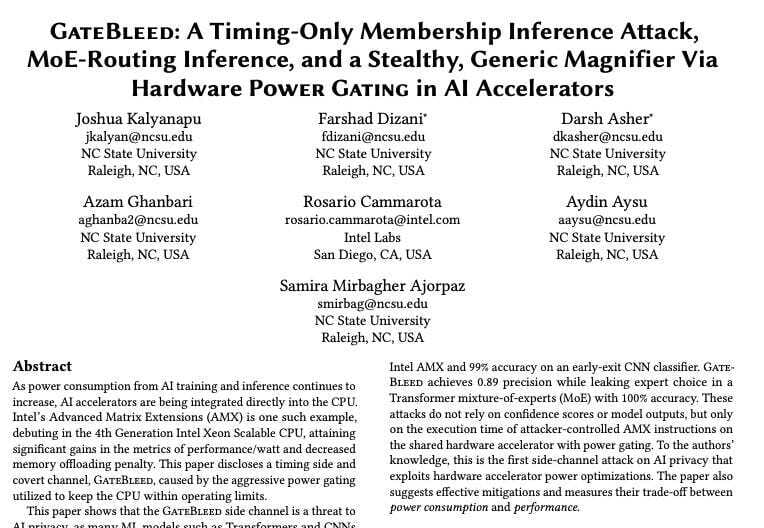

The research presented in “GATEBLEED: Exploiting On-Core Accelerator Power Gating for High Performance & Stealthy Attacks on AI” (arXiv:2507.17033v3) unveils a groundbreaking class of privacy vulnerabilities rooted in the hardware accelerators that underpin today’s AI systems. The GateBleed vulnerability—named for its ability to “bleed” private information through power-gated accelerator timing discrepancies—represents a new frontier in hardware-based AI attacks that circumvent traditional software-based defenses. As AI workloads increasingly shift to specialized on-chip accelerators like Intel’s Advanced Matrix Extensions (AMX), these types of attacks signal a growing class of threats that are difficult to detect, harder to fix, and carry sweeping implications for AI performance, societal trust, and regulatory compliance.

I. Overview of the GateBleed Vulnerability

GateBleed exploits the behavior of AI accelerators—specifically, the timing delays introduced by power gating optimizations in Intel’s AMX hardware. These accelerators are designed to conserve energy by powering off unused components and waking them up when needed. However, this wake-up process introduces measurable latency, which varies depending on how recently the component was used. Attackers can exploit these latency variations to infer sensitive information, including:

Training data membership (e.g., whether a particular input was part of the training set).

Model-internal decisions (e.g., routing paths in Mixture-of-Experts (MoE) models or early exits in adaptive networks).

Operational states (e.g., whether cached memory or certain expert networks were triggered).

These leaks occur without accessing model outputs (like logits or confidence scores), making traditional defenses—such as differential privacy or output masking—ineffective.

II. Implications for AI Performance and Integrity

Impact on AI System Design and Performance

Defending against GateBleed requires either disabling power-gating or maintaining the accelerator in a constantly warm state, both of which increase power consumption by up to 12%—a trade-off that contradicts the very goal of energy-efficient AI design.

Software-based mitigations are largely ineffective since the attack leverages hardware-level behavior invisible to application-layer defenses.

Performance optimizations that favor aggressive power management now pose a latent risk to model confidentiality and privacy, undermining the safe deployment of AI in sensitive environments (e.g., healthcare, finance, military).

AI Fairness and Trustworthiness

Membership inference via GateBleed can lead to targeted adversarial attackson specific data subjects whose inclusion in the training set can be inferred with high accuracy (up to 99.72% in CNNs, 81% in Transformers).

Such inference also threatens the fairness and auditability of AI systems, especially in regulated sectors where training data provenance must be verified or concealed for ethical/legal reasons.

Erosion of Hardware-Level Trust

GateBleed can even be executed remotely under certain conditions, bypassing modern malware detectors, including those monitoring speculative execution or cache behavior.

Worse, the vulnerability persists even in trusted execution environments such as Intel SGX, meaning secure enclaves cannot shield AI computations from such leaks.

This illustrates how microarchitectural trust boundaries have eroded, raising serious concerns for multi-tenant cloud environments, MLaaS providers, and federated AI deployments.

III. Broader Societal and Legal Risks

Regulatory and Legal Exposure

The ability to deduce training data from a deployed AI model exposes companies to intellectual property lawsuits, especially if the leaked data includes proprietary or copyrighted content (e.g., from news articles, books, or private datasets).

This risk is already materializing, as seen in ongoing lawsuits involving Stability AI, Google, and Meta over unauthorized data use.

GateBleed can serve as a forensic tool for litigants to demonstrate improper model training practices, shifting the burden of proof onto AI companies.

Democratization of Attack Capabilities

Unlike previous side-channel attacks that required privileged access, GateBleed can operate from unprivileged processes, and in some configurations, via network latency observations alone.

This lowers the bar for attackers and makes even commercial and consumer-grade systems potentially vulnerable if they share resources with a malicious tenant or actor.

It opens the door to surveillance-style monitoring, espionage, or misuse by authoritarian governments seeking to identify model behaviors or user inputs.

Psychological and Societal Fallout

As the public becomes aware of hardware-level AI privacy risks, this may contribute to erosion of trust in AI systems broadly—especially those deployed in consumer-facing roles such as healthcare diagnostics, virtual assistants, or educational platforms.

The fact that hardware can betray user input and training data without any software bug or misconfiguration may lead to a societal sense of inevitability and loss of control, undermining adoption and trust.

IV. Are More Such Vulnerabilities Likely?

Yes. As stated in the EurekAlert summary:

“Now that we know what to look for, it would be possible to find many similar vulnerabilities”.

GateBleed is unlikely to be the last of its kind. Several trends support this:

Expanding use of on-core accelerators (e.g., NPUs, GPUs, AMX) in general-purpose CPUs will widen the attack surface.

Increasing model complexity (e.g., MoEs, agentic AI, conditional execution) creates more variability in compute patterns, which power gating can unintentionally encode.

Persistent incentives for aggressive energy optimization will continue to favor hardware behaviors (e.g., dynamic frequency scaling, deep power states) that correlate with model internals and user data.

Limited availability of high-fidelity attack detectors for accelerator-specific behaviors means attackers can evolve faster than defenders, especially in multi-tenant or cloud environments.

V. Recommendations and Mitigation Strategies

1. Hardware-Level Mitigations

Microcode Updates: Force AMX (or equivalent) units to maintain a fixed power state during sensitive operations.

Hardware Redesign: Future chips should include power-gating-aware isolation mechanisms or timing equalizers to avoid observable reuse-dependent timing gaps.

Context-Switch-Based Resetting: Power off AI accelerators on every context switch to minimize cross-process leakage, though this incurs a 2–12% power overhead.

2. Software and OS-Level Interventions

Compiler Inserts: Use dummy AMX instructions to maintain a warm state and mask reuse distance.

AI Framework Patch Audits: Conduct source-code reviews of popular AI libraries (PyTorch, TensorFlow, ONNX, etc.) to eliminate conditional matrix ops based on sensitive decisions.

Isolation Policies: On multi-tenant systems, disallow core-sharing between AI workloads and untrusted code.

3. Regulatory and Policy Recommendations

Disclosure Requirements: AI vendors and cloud providers should be required to disclose the presence of known hardware-level privacy risks.

Certification Standards: Include hardware privacy evaluation as part of AI model certification, especially for government or healthcare use.

Accountability Frameworks: Establish liability for companies that continue using vulnerable hardware without mitigation, especially if user data leakage can be demonstrated.

4. Societal and Ethical Interventions

Consumer Education: Raise awareness that even encrypted, isolated, or sandboxed AI systems can leak information via hardware behaviors.

Open Research Funding: Support academic and industrial exploration of next-gen side-channel defense mechanisms that span software, firmware, and hardware layers.

Industry Collaboration: Encourage vendors like Intel, AMD, ARM, and NVIDIA to jointly develop and publish best practices for AI accelerator privacy hygiene.

Conclusion

GateBleed is a watershed moment in the evolution of AI cybersecurity. It underscores a critical blind spot in the race for faster, more efficient AI: the assumption that hardware optimizations are neutral and safe. Instead, we now see how deeply entangled performance engineering, model behavior, and data privacy have become. As the world barrels toward ubiquitous AI deployment, addressing vulnerabilities like GateBleed must become a shared priority for chipmakers, AI developers, regulators, and users alike. Anything less risks building tomorrow’s intelligence infrastructure on a foundation riddled with invisible cracks.

Epilogue

🔓 How GateBleed Works (Non-Technical Explanation)

Imagine you’re watching someone walk into a house and turn on the lights. If the lights come on instantly, you might guess they were just in that room — everything was already warmed up. But if there’s a delay, maybe a flicker or a hum before the lights come on, you’d suspect the room hadn’t been used in a while. Now imagine being able to listen from the outside and tell, just from the sound of that flicker, whether someone had been there recently.

This is essentially what GateBleed does — but instead of lights and rooms, it listens for tiny timing differences inside a computer’s AI chip.

Here’s what’s happening in practice:

🧠 The AI Brain and Its “Muscles”

Modern AI runs on specialized hardware — like mini turbo-boosters inside computer chips — that help it think faster while using less energy. These are called AI accelerators. But to save power, the computer shuts off parts of these acceleratorswhen they’re not being used, a bit like putting them to sleep.

When the AI starts working again, it has to wake up those parts — and this takes a small but noticeable amount of time.

⏱️ Timing Becomes a Clue

GateBleed is an attack that measures those small delays — whether something takes a little longer to run or not. Those differences can reveal which internal decisions the AI is making or whether it’s seen your data before.

Think of it like this:

If the AI has trained on your data before, it might “recognize” it and take a shortcut, finishing faster.

If it hasn’t seen your data, it may do more work and take a bit longer.

That timing difference — even just a few millionths of a second — can be measured. And if you repeat this many times, the pattern becomes clear.

🔍 What Does the Attacker Actually Do?

They send inputs to the AI system (like a sentence or a question).

They carefully watch how long the system takes to respond — not what it says, just how fast it answers.

From that response time, they infer secrets — such as whether the system was trained on that input, or which part of the AI was activated.

This attack works without needing access to the model’s code or outputs. In fact, attackers can learn about training data and model behavior just by timing how long things take.

🎯 Why This Is a Big Deal

It works even if the system is fully encrypted or sandboxed.

It exposes sensitive information, such as:

Whether a user’s data was used to train an AI

What internal logic the AI is using (e.g., what “expert” it calls on)

It bypasses all the usual defenses like access controls, encryption, and masking — because it exploits hardware behavior, not software.

🛠️ In Summary

GateBleed is like a stethoscope for AI chips: it lets attackers listen to the heartbeat of the system and figure out what it’s doing — even when they’re not supposed to know. All they need is a way to send inputs and measure response times, or in some cases, just share the same hardware.

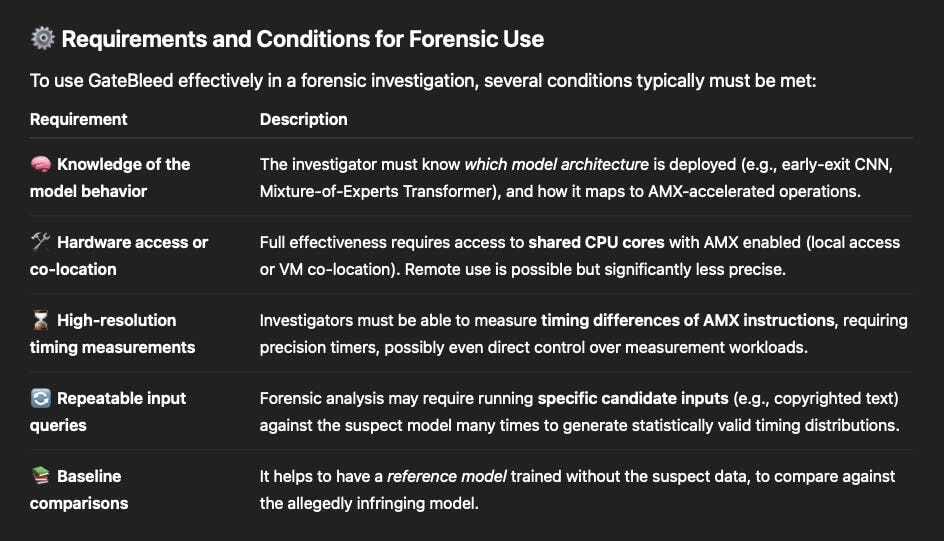

Using GateBleed as a forensic tool to detect unauthorized training data use or AI model misbehavior is possible, but not straightforward. It depends on the context, the access level of the auditor or investigator, and the resources available. Here’s a detailed breakdown of the feasibility, challenges, and potential practical workflows for forensic use:

🔍 How GateBleed Could Be Used Forensically

GateBleed enables membership inference—that is, determining whether a specific data point was used during training—without needing access to model outputs like logits or confidence scores. This opens the door to forensic applications such as:

Verifying data provenance in trained models (e.g., was proprietary or copyrighted material used without authorization?)

Detecting violations of licensing agreements (e.g., did an AI vendor train on data marked as opt-out or with a pay-per-use license?)

Supporting legal discovery in lawsuits over AI training data (e.g., Stability AI, Meta, Google cases)

🧪 Practical Forensic Workflow (Under Ideal Conditions)

Identify candidate data suspected to have been used without permission (e.g., journal articles, proprietary code, private datasets).

Gain access to the hardware where the model is deployed, or co-locate a process on the same CPU core (e.g., via a cooperative audit or legal discovery).

Run timing probes using minimal AMX instructions before and after querying the model with the candidate inputs.

Compare timing results: Look for statistically significant latency differences that indicate “cold” (non-member) vs. “warm” (member) AMX power states.

Validate results by repeating across various inputs and comparing with reference models or baseline training data.

🧭 Final Thoughts and Recommendations

GateBleed shows strong potential for audits, whistleblowing, and targeted legal investigations—but it is not yet a “plug-and-play” forensic tool. To increase its real-world utility:

Tooling should be developed: A user-friendly forensic suite could automate timing collection, statistical analysis, and comparison with known datasets.

Cloud providers should enable opt-in audits: Trusted execution environments could be expanded to allow third-party timing measurements under legal safeguards.

Legislators and regulators could mandate gate-level attestations: Requiring model vendors to provide evidence of training set exclusion could reduce reliance on side-channel detection altogether.