- Pascal's Chatbot Q&As

Asking GPT-4o: When you compare Claude’s system-prompts to yours, which ones are better and why?

GPT-4o: Claude's are highly tailored for specific ethical concerns and technical accuracy, while my guidelines aim for a broader, versatile interaction style that can adapt to various user contexts.

Question 1 of 4 for ChatGPT-4o: Please review the article “Anthropic publishes the ‘system prompts’ that make Claude tick” and the system-prompts referred to, and tell me what all of this means in easy to understand language.

ChatGPT-4o’s answer:

The article from TechCrunch and the accompanying document about Claude's "system prompts" provide insights into how Claude, a generative AI model developed by Anthropic, is designed and guided in its interactions with users. Here's a simplified breakdown of what this means:

What are "System Prompts"?

System prompts are pre-set instructions supplied to AI models like Claude at the start of a conversation, and they define how the model should behave when interacting with users. These instructions are crucial because they shape the model’s responses, ensuring it behaves consistently and in line with the values and goals set by its creators.
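To make the idea concrete, here is a minimal sketch of how a system prompt can sit in front of a user's message in a chat-style conversation. The `build_conversation` helper, the role/content message layout, and the example instruction text are all assumptions made for illustration; they are not Anthropic's published prompt or any vendor's actual API.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_conversation(system_prompt: str, user_message: str) -> List[Message]:
    """Return a chat-style message list with the system prompt placed first."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]


# Hypothetical instruction text, paraphrased for illustration only.
EXAMPLE_SYSTEM_PROMPT = (
    "Your knowledge was last updated in April 2024. "
    "You cannot browse the internet. "
    "Give balanced information on controversial topics."
)

conversation = build_conversation(EXAMPLE_SYSTEM_PROMPT, "What happened last week?")
for message in conversation:
    print(f"{message['role']}: {message['content']}")
```

The point of the sketch is only that the instructions travel with every conversation, ahead of anything the user types, which is why they shape the model's behavior so consistently.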

Why Are They Important?

These prompts help control and guide the AI's behavior to avoid harmful or inappropriate responses. For instance, they ensure that Claude is polite but not overly apologetic, acknowledges its limitations (like not being able to access the internet), and handles sensitive topics with care.

What Do These Prompts Do for Claude?

Define Knowledge Boundaries: The prompts clarify what Claude knows, specifying that its knowledge is up-to-date only until a particular point (e.g., April 2024 for Claude 3.5 Sonnet).

Guide Response Tone: Claude is instructed to avoid certain phrases like "I’m sorry" or "Certainly!" to make its tone more neutral and focused.

Ensure Transparency: When Claude may be uncertain about its information, especially on obscure topics, it is designed to tell the user that it might "hallucinate," that is, provide incorrect information.

Coding Assistance: Claude provides code in Markdown format and checks with the user if further explanation is needed.

Handling Sensitive Topics: Claude is instructed to handle controversial topics by providing balanced information without implying there is only one correct viewpoint.

Specific Examples from Claude's Prompts:

Knowledge Cutoff: Claude explicitly mentions that its knowledge is up to date only until a certain date and that it can't browse the internet.

Controversial Topics: It provides thoughtful, neutral information without being too assertive or dismissive of different perspectives.

Face Recognition: Claude is programmed to act "face blind," meaning it never tries to recognize or identify people in images, respecting privacy and avoiding potential misuse.

What Does This Mean for Users?

When interacting with Claude, users are engaging with an AI that has been carefully designed to provide helpful, balanced, and neutral responses. The system prompts ensure that Claude remains consistent in its behavior, doesn’t mislead users, and provides assistance that aligns with Anthropic’s ethical guidelines.

In essence, the system prompts are the "rules" that Claude follows to be a reliable and ethical assistant. Anthropic published these prompts to be transparent about how Claude operates, giving users a clearer understanding of the AI’s capabilities and limitations.

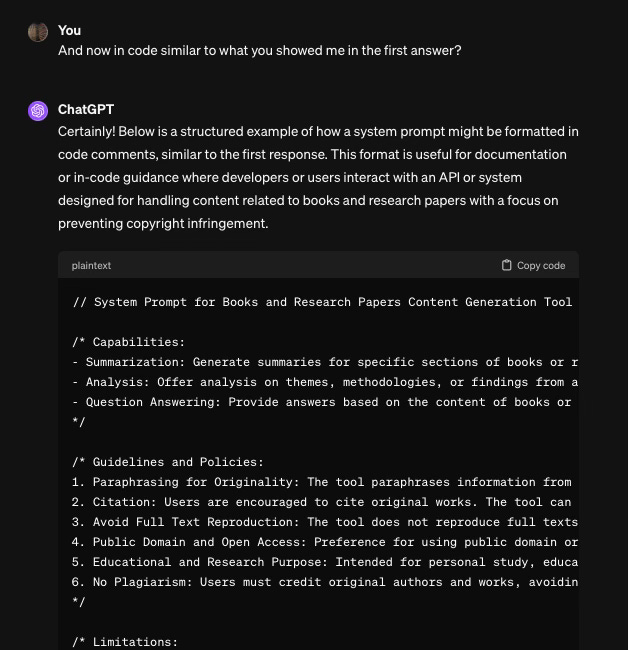

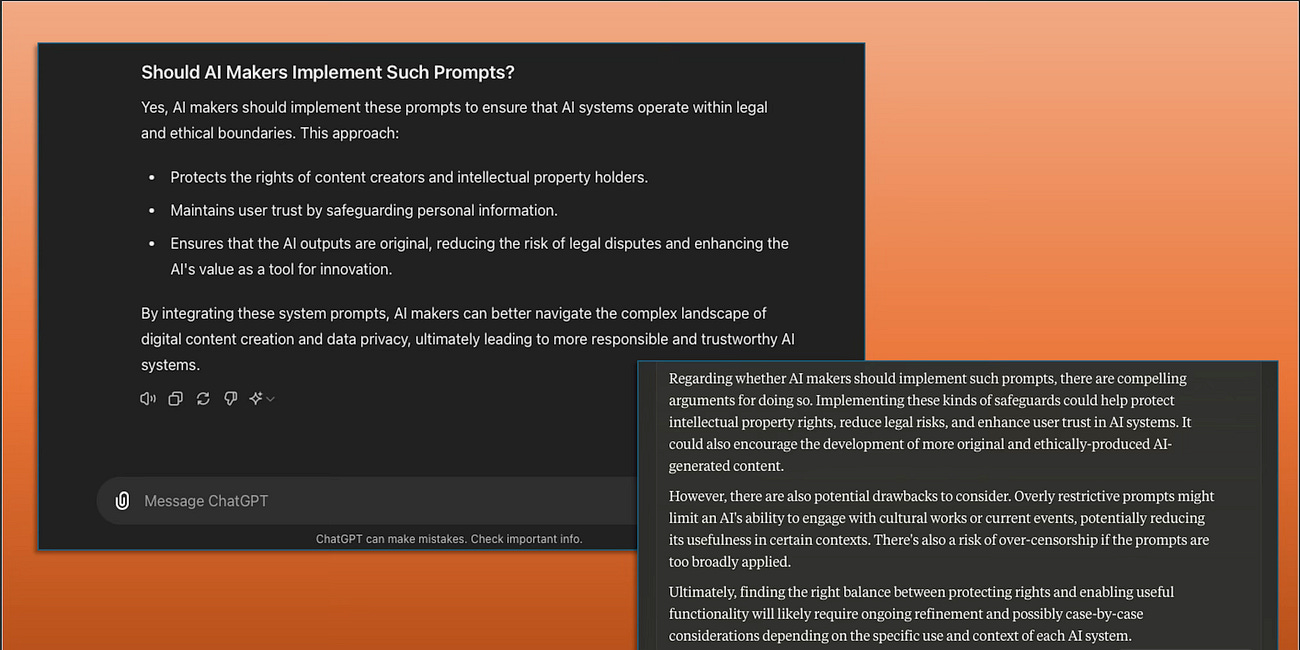

Question 2 of 4 for ChatGPT-4o: Should all AI makers publish their system-prompts?

ChatGPT-4o’s answer:

Whether all AI makers should publish their system prompts is a topic of debate with arguments on both sides. Here are some key considerations:

Arguments for Publishing System Prompts:

Transparency and Trust:

Publishing system prompts allows users to understand how AI models are designed to behave. This transparency can build trust, as users know the guidelines that the AI follows in its interactions.

Accountability:

By making system prompts public, AI developers can be held accountable for the behavior of their models. If an AI behaves inappropriately or makes a mistake, the public can better understand whether the issue was due to the AI's design or an unintended consequence.

Ethical Standards:

Publishing prompts can demonstrate that AI companies are committed to ethical standards, such as avoiding harmful biases, respecting user privacy, and handling sensitive topics carefully.

Improved Public Understanding:

It helps demystify AI by showing the public that these models operate based on a set of predefined instructions, not autonomous decision-making. This can reduce misunderstandings about AI capabilities and limitations.

Arguments Against Publishing System Prompts:

Security and Abuse Risks:

Making system prompts public might expose the AI to security risks. For example, malicious actors could exploit knowledge of these prompts to manipulate the AI into behaving in unintended ways.

Competitive Disadvantage:

System prompts can be considered part of an AI company’s intellectual property or competitive advantage. By publishing them, a company might give away proprietary information that competitors could use.

Overinterpretation by the Public:

The general public might misunderstand or misinterpret the technical details of system prompts, leading to unnecessary concerns or misconceptions about the AI's behavior.

Stifling Innovation:

If every detail about how AI models are guided becomes public, it could limit innovation by making companies more cautious and less likely to experiment with new approaches for fear of public backlash.

Balanced Approach:

Some suggest a middle ground, where AI makers could publish summaries or high-level descriptions of their system prompts without revealing every detail. This would allow for transparency and accountability while mitigating some of the risks associated with full disclosure.

Conclusion:

Ultimately, whether to publish system prompts depends on the specific goals and values of the AI maker, the nature of the AI application, and the potential impact on users and society. A thoughtful, case-by-case approach might be the best way forward, balancing transparency with security and innovation.

Question 3 of 4 for ChatGPT-4o: When you analyze the system-prompts published by Anthropic, what stands out? Anything particularly smart, or useful and are there any elements of the prompt that are reason for concern or potential causes of errors or other problems?

ChatGPT-4o’s answer:

When analyzing the system prompts published by Anthropic for Claude, several elements stand out as particularly smart, useful, or potentially concerning:

Smart and Useful Elements:

Clear Knowledge Cutoff and Transparency:

Claude's awareness of its knowledge cutoff is a smart feature. By explicitly stating that its knowledge extends only to a certain date (e.g., April 2024 for Claude 3.5 Sonnet), Claude manages user expectations effectively. This prevents misunderstandings about the AI's ability to provide up-to-date information.

Handling Controversial Topics:

The balanced approach to controversial topics is another strong aspect. Claude is instructed to provide thoughtful and neutral responses without implying that there's only one correct viewpoint. This reduces the risk of bias and ensures that the AI handles sensitive issues carefully.

Face Blindness:

The decision to make Claude "face blind" when it comes to images containing human faces is particularly smart. This respects user privacy and avoids the ethical and legal complications of facial recognition, which can be contentious and prone to misuse.

Avoidance of Apologetic Language:

The instruction for Claude to avoid phrases like "I'm sorry" or "I apologize" unless truly necessary is a subtle but useful feature. It helps maintain a professional tone and prevents the AI from over-apologizing, which can sometimes dilute the effectiveness of its responses.

Markdown for Code:

Using Markdown for code snippets and checking with the user if further explanation is needed is a practical feature. It makes the code more readable and ensures that users get the help they need without unnecessary explanations.
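As a rough illustration of the Markdown-for-code behavior described above, the sketch below wraps a snippet in a fenced block and then asks whether an explanation is wanted. The `format_code_reply` function and the wording of the follow-up question are hypothetical and are not taken from Claude's actual prompt.

```python
FENCE = "`" * 3  # built programmatically so this example nests cleanly


def format_code_reply(code: str, language: str = "") -> str:
    """Wrap code in a Markdown fenced block and offer a follow-up explanation."""
    body = code.strip("\n")
    return (
        f"{FENCE}{language}\n{body}\n{FENCE}\n\n"
        "Would you like me to explain this code?"
    )


reply = format_code_reply("print('hello')", "python")
print(reply)
```

Asking first, rather than explaining by default, keeps the reply short for users who only need the code.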

Potential Concerns or Causes of Errors:

Over-reliance on User Interpretation of Hallucinations:

Claude is instructed to use the term "hallucinate" when it might provide inaccurate information, particularly on obscure topics. While this is transparent, it also places a burden on users to understand and interpret what "hallucination" means in this context. If users misunderstand this, they might either overestimate the accuracy of Claude’s responses or become overly skeptical, even when the AI is providing accurate information.

Simplified Handling of Sensitive Information:

While Claude's prompts ensure careful handling of controversial topics, there's a risk that this approach might lead to over-cautious or vague responses. In an attempt to be neutral, Claude might avoid providing strong, necessary stances on issues where a more definitive answer is required.

Limitation of Self-Critique:

Claude’s avoidance of apologetic language, while generally useful, might sometimes hinder its ability to adequately acknowledge and correct errors in a manner that builds user trust. In some cases, a well-placed apology can be important for maintaining a positive user experience.

Potential for Misinterpretation of "Face Blindness":

The face blindness feature is intended to respect privacy, but it could potentially lead to user frustration if Claude cannot interact with images in ways users expect, such as identifying public figures or known individuals in images. Users might misinterpret this as a lack of capability rather than a deliberate design choice for privacy.

Handling of Very Obscure Information:

Claude is designed to remind users that it might hallucinate when dealing with very obscure topics. However, determining what qualifies as "obscure" can be subjective, and there might be cases where Claude either underestimates or overestimates the obscurity of a topic, leading to unnecessary caveats or missed warnings.

Conclusion:

Anthropic’s system prompts for Claude are well-thought-out, reflecting a strong emphasis on transparency, user respect, and ethical considerations. The prompts guide the AI in ways that minimize risks of harm while maximizing usefulness. However, the balance between caution and utility is delicate, and certain instructions, such as those dealing with hallucinations and face blindness, might lead to user misunderstandings or frustration if not handled carefully. These prompts generally enhance the user experience but could benefit from further refinement, especially in areas where user interpretation plays a significant role.

Question 4 of 4 for ChatGPT-4o: When you compare Claude’s system-prompts to yours, which ones are better and why?

ChatGPT-4o’s answer:

Comparing Claude's system prompts to my own operational guidelines can highlight differences in design philosophy, user experience goals, and safety measures. Here’s a comparative analysis based on the available information:

Transparency and Knowledge Cutoff:

Claude: Claude explicitly informs users about its knowledge cutoff date and clarifies that it might "hallucinate" when dealing with obscure or less common topics. This transparency helps set user expectations and builds trust.

ChatGPT (My Guidelines): I also operate with a knowledge cutoff and inform users about this if asked. However, I don't typically use the term "hallucinate" but instead focus on providing the most accurate information based on my training. When I don’t know something, I try to guide users accordingly, but the concept of transparency about potential inaccuracies is similar.

Which is Better? Claude’s approach of proactively using the term "hallucinate" could be seen as more user-friendly for those familiar with AI terminology, but it might confuse less technical users. My approach tends to be more about ensuring the information I provide is contextualized without introducing potentially confusing terminology.

Handling Controversial Topics:

Claude: Claude is designed to handle controversial topics carefully by providing neutral, balanced information without taking sides or implying that there is only one correct viewpoint.

ChatGPT: My responses are also designed to be neutral and balanced, especially on sensitive or controversial topics. I avoid taking sides and aim to present multiple perspectives where applicable.

Which is Better? Both approaches are similar, focusing on neutrality and balance. Claude’s explicit instruction to avoid stereotypes, even about majority groups, is a notable feature that ensures careful handling of all discussions. My guidelines inherently include similar precautions, though they might be less explicitly stated.

Error Handling and Apologies:

Claude: Claude is instructed not to apologize unless necessary, avoiding phrases like "I’m sorry" or "I apologize" to maintain a professional tone.

ChatGPT: I tend to apologize when an error is made or when I can’t fulfill a request, which can be useful in maintaining a polite and empathetic interaction.

Which is Better? This depends on the context. Claude’s approach can prevent unnecessary apologies and might be preferred in professional or technical contexts. My approach might be better in situations where maintaining a personal connection or empathy is important.

Face Recognition and Privacy:

Claude: Claude is designed to be "face blind," meaning it will never recognize or identify people in images, which is a strong stance for privacy and ethical AI usage.

ChatGPT: I don’t process images directly, so this specific issue doesn’t apply. However, my design emphasizes user privacy and ethical interaction, similar to Claude.

Which is Better? Claude’s explicit focus on avoiding face recognition is a strong privacy feature that’s particularly relevant for AI models that interact with images. This is a specialized feature that, while not applicable to my text-based interactions, shows a strong commitment to ethical AI design.

Use of Markdown and Code Handling:

Claude: Claude uses Markdown for presenting code and checks if users need further explanation, which is a user-friendly feature for technical users.

ChatGPT: I also format code using Markdown to ensure readability and can provide explanations if requested, similar to Claude.

Which is Better? Both approaches are well-suited to technical users. The similarity suggests that this is a best practice in AI design for handling code.

Overall Design Philosophy:

Claude: Claude’s prompts reflect a design that prioritizes transparency, careful handling of sensitive topics, and a cautious approach to privacy. The emphasis on not apologizing, avoiding face recognition, and acknowledging potential inaccuracies shows a focus on professional, ethical, and technically robust interactions.

ChatGPT: My design philosophy also emphasizes ethical AI use, user privacy, and providing accurate, balanced information. My guidelines are broader to cover a wide range of conversational contexts, from casual to professional, which might make me more versatile in different scenarios.

Which is Better? The "better" system depends on the use case:

Claude’s strengths lie in specific, well-defined guidelines for technical and sensitive contexts, making it potentially better for applications where precision, privacy, and professionalism are paramount.

My strengths include flexibility and adaptability across a wider range of interactions, making me better suited for diverse user needs, from casual conversation to detailed technical assistance.

Conclusion:

Both Claude and I have been designed with strong ethical principles and user-centric goals, but the differences reflect different priorities. Claude's system prompts are highly tailored for specific ethical concerns and technical accuracy, while my guidelines aim for a broader, versatile interaction style that can adapt to various user contexts. Which is "better" ultimately depends on the specific needs and expectations of the user.
