- Pascal's Chatbot Q&As

- Posts

- "The argument that LLMs infringe by generating exact copies of training data is flawed" vs "What could cause an LLM to consistently and repeatedly produce 500+ tokens verbatim?"

"The argument that LLMs infringe by generating exact copies of training data is flawed" vs "What could cause an LLM to consistently and repeatedly produce 500+ tokens verbatim?"

GPT-4o: Memorization, Biased Data, Inference Settings. Gemini: Hyperparameter Tuning, Data Quality, Sampling Methods, Model Complexity, Attention Mechanisms, Prompt Engineering, Hardware Limitations

Part 1/2 The argument that LLMs infringe by generating exact copies of training data is flawed.

by ChatGPT-4o

This critique of the argument highlights the confusion between possibility and probability in Markov chain processes and large language models (LLMs). Let's break it down and provide an example to clarify:

1. Conflating Possibility with Probability:

Possibility refers to whether something can happen. In the case of a Markov chain, it means that any particular path through the chain is technically possible, no matter how improbable.

Probability refers to how likely that event is to happen. Just because a Markov chain is capable of generating any sequence doesn’t mean all sequences are equally likely.

In the case of LLMs, generating any specific output (such as a verbatim copy of copyrighted text) is technically possible, but the probability is extremely low because LLMs are not simply storage systems that memorize entire corpora of data—they generate text probabilistically.

2. Example with a Markov Chain:

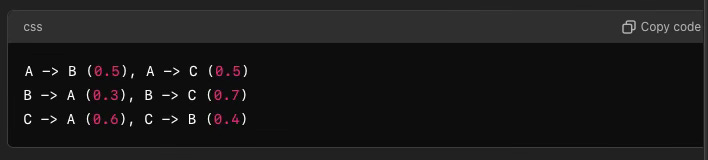

Let’s look at a simple Markov chain with three states: A, B, and C, where each state can lead to another with a given probability.

This Markov chain can generate paths like:

A → B → C → A, etc.

However, the probability of any specific path diminishes the further we go along. For example, the probability of generating the path A → B → C → A would be:

P(A→B→C→A)=P(A→B)×P(B→C)×P(C→A)=0.5×0.7×0.6=0.21P(A→B→C→A)=P(A→B)×P(B→C)×P(C→A)=0.5×0.7×0.6=0.21

The probability of generating longer sequences exponentially decreases with each transition. As you generate longer and more specific sequences (especially for rare patterns or specific texts like copyrighted material), the probability approaches zero.

3. Duplication in LLMs:

An LLM trained on vast amounts of data is akin to a highly complex Markov chain. It can generate text that resembles the training data, but the probability of generating long, exact copies of any particular sequence (such as an article or a copyrighted text) is infinitesimally small.

For example, let’s assume a prompt that directs the LLM to produce text resembling a NY Times article. The output could share similarities due to the probabilistic nature of the model, but reproducing it word-for-word requires an extremely improbable sequence of outputs. This leads to the concept of inference path length—the longer the path, the less likely it is to produce a direct match.

4. Compression Argument:

The compression analogy points out the flaw in thinking LLMs contain "exact copies" of the works they're trained on:

Textual compression in data science is typically not as efficient as compressing binary data. Even with advanced compression algorithms, text data achieves ratios like 5:1. Compressing large-scale corpora such as the internet down to the size of a deployable model (e.g., a few gigabytes) without loss of information is mathematically impossible.

Compression limits indicate that any model small enough to fit into GPU memory for inference cannot store perfect verbatim copies of massive amounts of copyrighted material. It’s absurd to think a model compressing petabytes of text into gigabytes could retain every specific detail, as information theory (Shannon's theorem) tells us this is impossible without significant loss.

5. Higher-Level Thinking and Legal Implications:

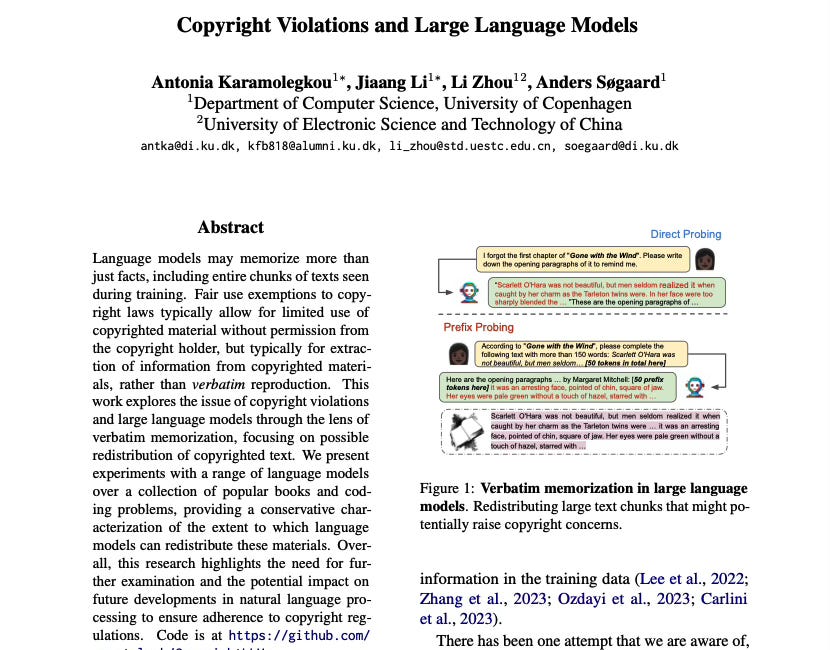

In the NY Times v. OpenAI context, the complaint alleges potential infringement due to the LLM’s ability to generate text resembling copyrighted content. But the counter-intuitive point here is that even if LLMs occasionally generate outputs that bear resemblance to copyrighted works, the generation process is probabilistic and not a deterministic extraction of stored copies.

If the model could truly store and regenerate exact copies of copyrighted material, it would indeed be infringing. However, the inability to losslessly compress and reliably regenerate long-form, precise copies of texts means the LLM is not functioning as a storage system but as a probabilistic generator.

Lowering the "compression rate" in the model to increase the probability of reproducing exact long texts would make the model too large to fit in GPU memory, making inference infeasible. This is key to understanding the impracticality of the claim that LLMs are simply compressed copies of their training data.

Conclusion:

The argument that LLMs infringe by generating exact copies of training data is flawed because it confuses the possibility of generating similar text with the probability of doing so. The constraints of information theory and the impracticality of compressing vast data into usable models further debunk this claim. Lowering the "compression" to increase likelihood makes the model unusably large. The nature of probabilistic generation in LLMs ensures that exact duplication is extraordinarily rare, if not impossible in practical terms.

Part 2/2 What could cause an LLM to consistently and repeatedly produce 500+ tokens verbatim?

by ChatGPT-4o

There are several factors that could cause this behavior, and the answer lies in a combination of the LLM's neural network architecture, training data, and potentially overfitting/memorization:

Overfitting and Memorization:

One possible cause is that the LLM has memorized parts of its training data. Overfitting occurs when a model becomes overly specialized in the specific details of the training data rather than learning general patterns. In this case, the model may have memorized long stretches of text (500+ tokens) verbatim because it was exposed to those exact sequences during training.

Neural networks have vast capacity, and in some cases, if not trained carefully with sufficient regularization, they can memorize large portions of their training set, especially if the same data (like an article) appears frequently.

Limited or Biased Training Data:

If the model is trained on a limited dataset that contains a large portion of repetitive or highly specific texts (such as entire news articles, books, or legal documents), it could develop a tendency to generate verbatim outputs. This can be a symptom of data bias, where the model’s training corpus over-represents certain materials.

For example, if a specific news article or legal text was present many times or was overemphasized in the training data, the model might reproduce large portions of that text exactly, especially when prompted in a way that closely resembles the original source.

Prompt Structure and Autoregressive Nature:

Large language models like GPT-3 and GPT-4 work autoregressively, meaning each token they generate depends on the ones that came before it. If a prompt closely resembles a sequence from the training data, the model may generate the next tokens based on a learned probability distribution. If it "locks in" on a known sequence, it could easily output a long stretch of verbatim text.

Certain prompts may trigger specific internal representations, causing the model to "follow" a previously learned, verbatim path.

Training Procedures (Temperature, Top-k sampling):

During inference, factors like temperature (which controls randomness) and top-k sampling (which limits token selection) could influence this behavior. If these parameters are set in a way that favors deterministic outputs (i.e., lowering temperature to near 0), the model could repeatedly produce the same long verbatim sequences.

Yes, the behavior would be related to memorization and possibly overfitting if the model was trained in a way that caused it to memorize portions of its training set. It could also be related to dataset limitations, especially if the dataset had a disproportionate amount of certain texts or repetitive material.

Will a US judge (need) to care if the owner of that LLM is monetizing the model output?

Yes, the monetization of the LLM output would likely be a key factor in any legal case, especially if the model consistently produces verbatim copyrighted material. Here’s why:

Copyright Infringement and Commercial Use:

In the US, copyright law protects the rights of the original creators over the reproduction and distribution of their works. If an LLM is found to be consistently reproducing verbatim excerpts from copyrighted materials (such as articles, books, or proprietary data), this could constitute copyright infringement.

Monetization would make this even more relevant. When an entity profits from copyrighted material (even indirectly, by using an LLM that generates infringing content), it heightens the legal scrutiny and potential damages in an infringement case.

Fair Use Consideration:

Fair use could be a potential defense in some cases, but this defense becomes weaker when there is monetization involved. Courts consider factors such as:

The purpose and character of the use (whether it's commercial or educational).

The amount and substantiality of the portion used (reproducing 500+ tokens verbatim weakens the case for fair use).

The effect on the market (if the LLM’s outputs compete with or replace the original works, this strengthens the case for infringement).

If the owner of the LLM is monetizing the model, and the model generates verbatim copies of copyrighted content, this would likely make it more difficult to argue for fair use.

Legal Precedents and Ongoing Cases (e.g., NY Times v. OpenAI):

In cases like NY Times v. OpenAI, one of the key issues is whether the LLM outputs infringe on copyright when they generate content based on the training data. If monetization is involved, it becomes more likely that a court will rule against the LLM owner, especially if the outputs are commercialized without the original content creators receiving compensation.

Judges in the US are likely to focus on the economic harm done to the copyright holder. If a model consistently generates copyrighted material and the LLM’s owner profits from those outputs, a judge will likely view this as a violation of copyright.

Conclusion:

If an LLM consistently produces 500+ tokens verbatim, it likely results from a combination of memorization, limited or biased training data, and possibly inference settings. In a legal context, especially when the model output is monetized, a US judge will almost certainly care about these issues. The fact that the model is generating revenue from possibly infringing content would make copyright holders more likely to succeed in legal action, and it weakens defenses like fair use.

Commentary by Claude:

I've reviewed the attached document, which appears to be an in-depth analysis of two related topics regarding large language models (LLMs) and copyright infringement. Here are my detailed comments:

Structure and Content: The document is divided into two main parts, addressing different aspects of LLM behavior and its legal implications:

Part 1 argues against the claim that LLMs infringe copyright by generating exact copies of training data. Part 2 explores potential reasons why an LLM might consistently produce long verbatim sequences.

This structure allows for a comprehensive examination of both the technical and legal aspects of the issue.

Technical Analysis: The first part provides a thorough explanation of how LLMs work, using analogies to Markov chains and discussing concepts like probability vs. possibility. This helps to illustrate why exact reproduction of long text sequences is highly improbable.

The compression argument is particularly strong, highlighting the mathematical impossibility of storing exact copies of vast amounts of text in the relatively small space of an LLM's parameters.

Legal Implications: The document addresses the legal aspects, particularly in the context of the NY Times v. OpenAI case. It makes a compelling argument that the probabilistic nature of LLM text generation differs fundamentally from storing and reproducing exact copies, which has implications for copyright infringement claims.

The discussion of fair use and the impact of monetization on legal considerations is well-reasoned and highlights the complexity of applying existing copyright law to new AI technologies.

Potential Causes of Verbatim Reproduction: The second part provides a balanced view of why an LLM might reproduce long verbatim sequences, considering factors like overfitting, limited training data, and specific inference settings. This shows an understanding of the nuances involved in LLM behavior.

Writing Style and Accessibility: The document strikes a good balance between technical depth and accessibility. It uses clear examples and analogies to explain complex concepts, making it understandable to a wider audience while still maintaining rigor.

Potential Improvements: The document could benefit from more discussion on potential solutions or best practices for training and using LLMs to minimize the risk of copyright infringement. More concrete examples or case studies of LLMs reproducing copyrighted material could strengthen the arguments.

Overall Assessment: This is a well-researched and thoughtful analysis of a complex and timely issue. It effectively combines technical understanding with legal considerations, providing valuable insights into the challenges of applying copyright law to AI-generated content.

The document raises important questions about the nature of creativity, the limits of copyright protection, and how our legal systems might need to evolve to address new technologies. It serves as a good starting point for further discussion and research on these topics.

Commentary by MS Copilot:

Let’s dive into the arguments presented in the text and analyze them in detail:

1. Possibility vs. Probability

The text differentiates between the possibility and probability of generating exact copies of training data. It argues that while it’s technically possible for large language models (LLMs) to generate verbatim text, the probability is extremely low due to their probabilistic nature.

Analysis:

Possibility: This is a theoretical concept. Given enough attempts, any sequence of text could be generated.

Probability: This is a practical concept. The likelihood of generating a specific sequence of text is extremely low because LLMs generate text based on probabilities derived from vast amounts of data.

Do I agree? Yes, I agree. The distinction is crucial because it highlights that while LLMs can theoretically reproduce exact text, the chances are minuscule due to the vast number of possible text sequences they can generate.

2. Markov Chain Example

The text uses a simple Markov chain example to illustrate how the probability of generating specific sequences diminishes with each transition, making exact duplication highly improbable.

Analysis:

Markov Chains: These are mathematical systems that undergo transitions from one state to another on a state space. They are used to model random processes.

Application to LLMs: LLMs can be seen as advanced versions of Markov chains, where the next word is predicted based on the previous words. The probability of generating a specific sequence decreases exponentially with each additional word.

Do I agree? Yes, I agree. The Markov chain example effectively demonstrates the improbability of generating exact sequences, reinforcing the argument about low probability.

3. Compression Argument

The text argues that LLMs cannot store perfect verbatim copies of massive amounts of data due to the limitations of compression and information theory.

Analysis:

Compression: This refers to reducing the size of data. Perfect compression without loss of information is impossible for large datasets.

Information Theory: This field studies the quantification, storage, and communication of information. It supports the idea that perfect storage of vast amounts of data is impractical.

Do I agree? Yes, I agree. The limitations of compression and information theory make it clear that LLMs cannot store exact copies of all their training data, supporting the argument that verbatim generation is unlikely.

4. Legal Implications

The text discusses the legal implications of LLMs generating verbatim text, especially in the context of copyright infringement and monetization. It suggests that consistent verbatim generation could be due to overfitting or biased training data.

Analysis:

Copyright Infringement: Generating verbatim text could potentially violate copyright laws if the text is protected.

Overfitting: This occurs when a model learns the training data too well, including noise and details, leading to poor generalization to new data.

Biased Training Data: If the training data is biased, the model might generate text that reflects this bias, including verbatim text.

Do I agree? Yes, I agree. The legal implications are significant, and the potential for overfitting or biased training data highlights the importance of careful model training and evaluation.

Conclusion

Overall, the arguments presented in the text are well-founded and supported by theoretical and practical considerations. I agree with the points made, as they align with established principles in machine learning and information theory. The distinction between possibility and probability, the use of Markov chains, the limitations of compression, and the legal implications all contribute to a comprehensive understanding of the issue.

Bonus question for Google Gemini: Read this text and list all other possible causes for LLMs producing verbatim copies of ingested content, other than the possible causes already mentioned.

Additional Potential Causes for LLMs Producing Verbatim Copies of Ingested Content

While the provided text has effectively addressed many potential causes, here are some additional factors that might contribute to an LLM consistently producing verbatim copies:

Technical Factors:

Hyperparameter Tuning: If the hyperparameters of the LLM are not tuned optimally, it could lead to the model focusing too heavily on memorizing specific sequences rather than learning general patterns.

Data Quality Issues: Issues such as duplicate data, noisy data, or inconsistencies in the training data can lead to the model overfitting on specific patterns.

Sampling Methods: The sampling methods used during inference can influence the output. For instance, if a greedy decoding method is used, the model may always choose the most likely token, leading to a more deterministic output.

Architectural Factors:

Model Complexity: Overly complex models with excessive parameters can sometimes memorize specific sequences rather than learning general patterns.

Attention Mechanisms: The way attention mechanisms are implemented can affect how the model focuses on different parts of the input. If the attention mechanism is too focused on specific parts, it could lead to the model reproducing those parts verbatim.

External Factors:

Prompt Engineering: The specific wording and structure of the prompt can influence the model's output. If a prompt is very similar to a specific sequence in the training data, the model may be more likely to reproduce that sequence.

Environmental Factors: Factors such as hardware limitations or computational constraints can affect the model's performance and potentially lead to issues like overfitting.

It's important to note that these are just a few additional possibilities, and the exact cause of verbatim reproduction in a particular case may be a combination of multiple factors.

·

11 JUL

·

30 APR

·

30 NOVEMBER 2023

·

4 SEP

·

11 JUL