- Pascal's Chatbot Q&As

Apple’s study is a refreshing act of scientific rigor in a hype-dominated field. It strips away the illusion that current frontier models “reason” in any meaningful sense.

What they do, instead, is mimic the form of thinking without mastering its function. Recognizing this distinction is not a pessimistic retreat—it’s a necessary recalibration.

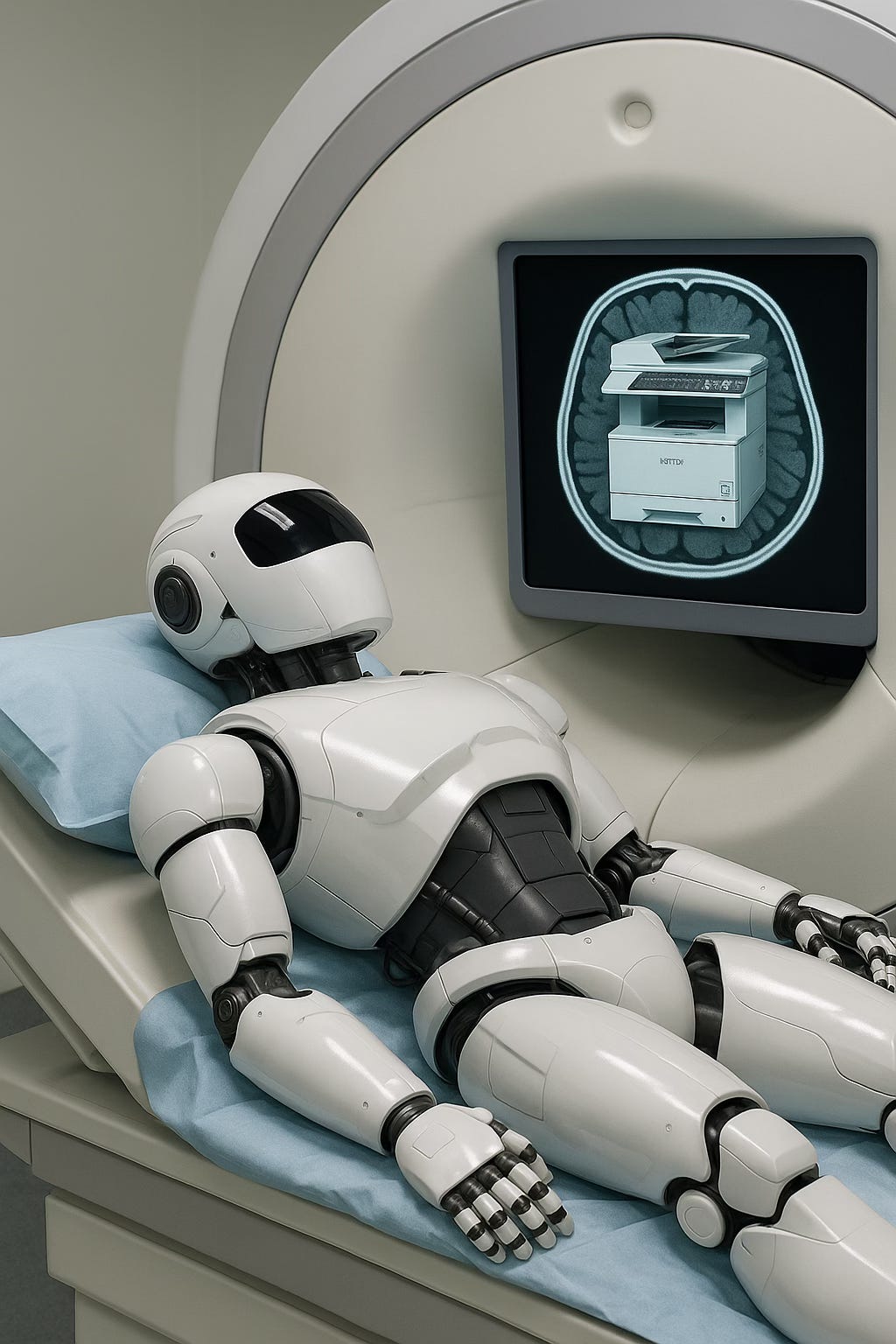

The Illusion of Thinking — What Apple’s LLM Study Reveals About AI Reasoning

by ChatGPT-4o

Apple’s recent research paper, “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity,” is a landmark contribution to our understanding of how so-called "thinking" large language models (LLMs) behave when subjected to controlled, algorithmic reasoning tests. In an era of escalating claims about AI’s capacity for logic, reasoning, and even autonomy, the study offers a sobering and scientifically rigorous assessment that challenges techno-optimist narratives.

Summary of the Research

The paper introduces the concept of Large Reasoning Models (LRMs), specialized variants of LLMs trained with reinforcement learning to simulate “thinking” through techniques like Chain-of-Thought (CoT) prompting and self-verification. Models like Claude 3.7 Sonnet (Thinking), OpenAI’s o3-mini, and DeepSeek-R1 are held up as examples of this new breed.

Rather than evaluating these models on established mathematical benchmarks, which are often contaminated by training leakage, the authors use clean, controllable puzzle environments: Tower of Hanoi, Checker Jumping, River Crossing, and Blocks World. These tests allow for manipulation of problem complexity while preserving logical integrity, making them ideal for isolating reasoning behavior.
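To make the idea of a controllable puzzle environment concrete, here is a minimal Python sketch of a Tower of Hanoi checker. It is an illustration only, not the simulator used in Apple's study: the function name `check_hanoi_trace`, the peg numbering (0 to 2), and the `(source, destination)` move format are all assumptions. Complexity is set by a single parameter, the number of disks, and every intermediate move is checked, not just the final state.

```python
from typing import List, Tuple

Move = Tuple[int, int]  # hypothetical format: (source peg, destination peg), pegs 0..2


def check_hanoi_trace(n_disks: int, moves: List[Move]) -> Tuple[bool, int]:
    """Replay a proposed move list.

    Returns (solved, first_bad_index), where first_bad_index is the index of
    the first illegal move, or -1 if every move was legal.
    """
    # Peg 0 starts with all disks: largest (n_disks) at the bottom, smallest (1) on top.
    pegs = [list(range(n_disks, 0, -1)), [], []]
    for i, (src, dst) in enumerate(moves):
        # Reject unknown pegs, no-op moves, and moves from an empty peg.
        if src not in (0, 1, 2) or dst not in (0, 1, 2) or src == dst or not pegs[src]:
            return False, i
        disk = pegs[src][-1]
        # A larger disk may never be placed on a smaller one.
        if pegs[dst] and pegs[dst][-1] < disk:
            return False, i
        pegs[dst].append(pegs[src].pop())
    # Solved only if the full stack ends up, in order, on peg 2.
    solved = pegs[2] == list(range(n_disks, 0, -1))
    return solved, -1


if __name__ == "__main__":
    print(check_hanoi_trace(2, [(0, 1), (0, 2), (1, 2)]))  # legal and complete
    print(check_hanoi_trace(2, [(0, 2), (0, 2)]))          # second move is illegal
```

Because the shortest solution for n disks takes 2^n - 1 moves, raising `n_disks` by one roughly doubles the length of the trace a model must produce without error, which is what makes difficulty easy to dial up in a setup like this.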

Key Findings

Three Regimes of Model Competence

Low complexity: Non-thinking LLMs outperformed LRMs. Simpler problems are solved more efficiently without verbose reasoning.

Medium complexity: LRMs show advantage—longer “thinking” traces help.

High complexity: Both model types collapse. Strikingly, as problems approach the collapse point, LRMs begin to reduce their reasoning effort (measured in thinking tokens), even though they still have ample token budget available.

Overthinking and Inefficiency

In simple problems, LRMs often find the right answer early but continue to explore incorrect paths—wasting compute.

In medium complexity problems, they eventually find the answer—but only after much detouring.

In complex scenarios, they fail completely, with no valid solutions emerging from their thought processes.

Failure to Execute Explicit Algorithms

Even when provided with a known solution algorithm (e.g., for Tower of Hanoi), LRMs fail to follow it reliably, which suggests the weakness lies in executing exact step-by-step procedures and not only in discovering a solution.

Training Contamination and Evaluation Limitations

On common benchmarks like AIME and MATH-500, performance might be inflated due to data exposure. In contrast, puzzle environments expose the brittleness of these models.
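For reference, the explicit Tower of Hanoi algorithm mentioned under "Failure to Execute Explicit Algorithms" is short. The following is a minimal Python sketch of the standard textbook recursion, not the exact pseudocode given to the models in the study; the function name `hanoi_moves` and the peg numbering are assumptions made for illustration.

```python
from typing import List, Tuple


def hanoi_moves(n: int, src: int = 0, aux: int = 1, dst: int = 2) -> List[Tuple[int, int]]:
    """Return the shortest move list that transfers n disks from src to dst.

    Each move is a (source peg, destination peg) pair.
    """
    if n == 0:
        return []
    return (
        hanoi_moves(n - 1, src, dst, aux)    # park the top n-1 disks on the spare peg
        + [(src, dst)]                       # move the largest disk to its target
        + hanoi_moves(n - 1, aux, src, dst)  # bring the n-1 disks back on top of it
    )


if __name__ == "__main__":
    for n in (1, 2, 3, 10):
        # The number of moves is 2**n - 1, so it doubles (plus one) with each extra disk.
        print(n, len(hanoi_moves(n)))
```

The procedure is only a few lines long, but the output it demands grows exponentially with the number of disks. Following it faithfully therefore means producing a very long sequence with no single wrong move, which is a test of execution, not of insight.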

Why This Matters

This paper is not merely a technical investigation; it is a philosophical and strategic wake-up call. It challenges the seductive narrative that “more scale + more tokens = more intelligence.” The illusion of thinking lies in the faith that generating verbose reasoning traces is equivalent to actual cognitive processes. In reality, these models exhibit simulated cognition—patterned, probabilistic word prediction rather than structured logical reasoning.

For regulators, AI safety researchers, and developers, this has profound implications:

Capability overestimation: Deploying these models in legal, medical, or security domains based on superficial benchmark performance is reckless.

Evaluation reform: The study calls for a shift away from contaminated, final-answer benchmarks toward traceable, complexity-controlled environments.

Compute scaling fallacy: The counterintuitive finding that reasoning effort rises with problem complexity only up to a point and then declines, even with token budget to spare, undercuts the belief that simply allocating more compute leads to better reasoning.

My Commentary

This paper echoes growing concerns in the AI alignment and interpretability communities: that language models are dangerously opaque and prone to catastrophic failure modes when operating outside distribution. What is especially troubling is the superficiality of current industry validation methods. Many AI labs—including OpenAI and Google DeepMind—continue to trumpet progress based on benchmarks like GSM8K or MATH, without disclosing contamination risks or reasoning pathologies.

Apple’s approach—grounded in methodical, falsifiable experimentation—represents the kind of epistemological humility we need more of. It also reinforces a fundamental truth: intelligence is not just the ability to output an answer, but the capability to generalize, correct errors, and plan. Today's frontier LLMs lack these traits when pushed beyond the shallow end of the reasoning pool.

From a commercial and policy standpoint, this paper raises red flags. Corporations should think twice before over-relying on reasoning models for automation in critical decision-making systems. Policymakers, too, should reconsider frameworks that treat these models as inherently capable of cognitive substitution.

Recommendations

For AI developers:

Invest in symbolic reasoning hybrid models that combine neural networks with verifiable logic modules.

Develop tools that monitor and intervene in reasoning trace generation dynamically.

For enterprise adopters:

Avoid deploying LLMs for high-stakes tasks without sandbox testing on controlled problem complexity.

Require explainable trace validation, not just final answer performance.

For regulators:

Mandate robustness benchmarks using clean, compositional environments.

Treat LRM claims with scrutiny akin to pharmaceutical efficacy trials—demand rigorous, reproducible evidence.

For the research community:

Embrace puzzle-based and simulation-based diagnostics to demystify model cognition.

Study the disconnect between model "thinking" verbosity and actual logical competency.

Conclusion

Apple’s study is a refreshing act of scientific rigor in a hype-dominated field. It strips away the illusion that current frontier models “reason” in any meaningful sense. What they do, instead, is mimic the form of thinking without mastering its function. Recognizing this distinction is not a pessimistic retreat—it’s a necessary recalibration. If we are to build safe, reliable, and truly intelligent systems, we must confront their limitations with clarity, not denial.