- Pascal's Chatbot Q&As

Archive

GPT-4o: When licensing content or data to AI developers, insist on the inclusion of certified unlearning capabilities. This method offers a pathway to enforce the right to deletion or withdrawal.

Rights holders could proactively create controlled surrogate datasets—mirroring their original datasets—to facilitate future unlearning without sharing the sensitive data itself.

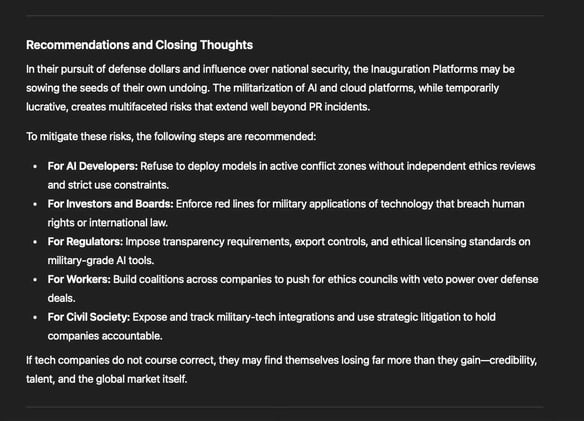

Big Tech’s militarization in pursuit of taxpayer-funded contracts is now at full throttle. But this Faustian bargain could very well backfire, not just reputationally...

...but economically, socially, and geopolitically. Activist networks are mobilizing globally, portraying tech giants as complicit in genocide, imperialism, and human rights violations.

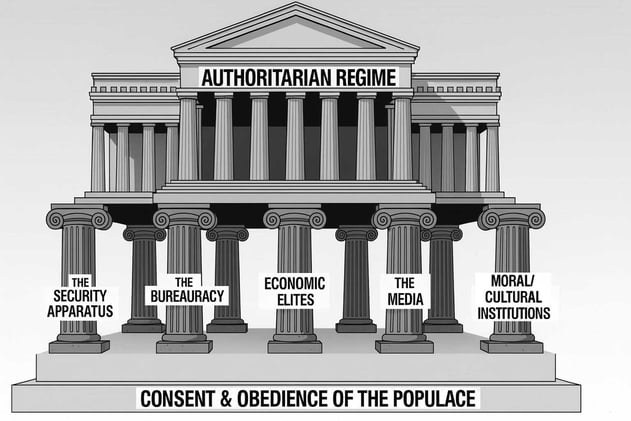

This report begins where conventional analysis ends: at the point of systemic institutional failure. It addresses the exigent circumstances that arise when the established tools...

...of democratic governance and civic action have been tried, tested, and ultimately proven insufficient to halt the slide into authoritarianism.

This report addresses the constitutional and legal mechanisms available to counter a scenario in which the executive branch is perceived to be operating beyond the constraints of law...

...using state agencies for coercion, and systematically ignoring the constitutional order. The actions of a leader fostering a "cult of personality" might constitute profound abuses of power.

GPT-4o: While xAI's lawsuit paints a detailed picture of proprietary theft and the sanctity of confidential AI innovations, the entire episode is soaked in bitter irony.

Musk, a vocal critic of intellectual property law who once publicly agreed with Jack Dorsey that we should “delete all IP law”, is now vigorously defending the legal protections he sought to eliminate.

Michelin’s story is not just about AI—it’s about strategic foresight, cultural transformation, and disciplined execution.

From appointing strong AI leadership and building responsible frameworks, to empowering the workforce and proving value, Michelin’s journey offers a pragmatic and inspiring blueprint.

West Monroe’s AI agents automate up to 80% of routine financial data processes (e.g., migration, conversion). Such figures suggest entire departments might be rendered redundant if reskilling...

...isn’t emphasized. 2026: Automation of 80% of manual data tasks. 2027: Widespread AI upskilling demand. 2030: Full GenAI integration in banking. 2035: Autonomous AI decision-making standard.

The so-called “Lost‐in‐the‐Middle” phenomenon—where information in the middle of long inputs is less reliably used—remains a persistent limitation.

This means that as you feed more data into an LLM, information in the middle of the input may be overlooked or underweighted, making it hard for the model to surface the important elements.
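One commonly described workaround is to reorder retrieved passages so that the most relevant ones sit at the start and end of the prompt, leaving the least relevant in the middle. The sketch below is illustrative only: the function name `reorder_for_edges` is hypothetical, it assumes the passages arrive already ranked from most to least relevant, and it is not taken from the post itself. Whether it helps for a given model would need to be measured.

```python
from typing import List


def reorder_for_edges(ranked_docs: List[str]) -> List[str]:
    """Place higher-ranked passages at the edges of the context.

    Assumes ranked_docs is sorted from most to least relevant.
    Even-ranked items fill the front in order; odd-ranked items fill
    the back in reverse, so the weakest passages end up in the middle.
    """
    front: List[str] = []
    back: List[str] = []
    for i, doc in enumerate(ranked_docs):
        if i % 2 == 0:
            front.append(doc)
        else:
            back.append(doc)
    return front + back[::-1]


if __name__ == "__main__":
    ranked = ["A", "B", "C", "D", "E"]  # "A" is the most relevant
    print(reorder_for_edges(ranked))
```

With five passages ranked A to E, the two strongest (A and B) land at the two ends and the weakest (E) lands in the center.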

The current assault on U.S. biomedical research funding is more than a domestic policy failure—it is a global threat to science, equity, and evidence-based public health.

If left unchallenged, it will erode decades of progress and drive talent away from a nation that has long led in scientific innovation.

Stop the Chaos Machine. If governments fail to regulate these sites, they will not only continue to harm vulnerable individuals directly—they will also continue to seep into AI's foundational data.

What makes this more alarming is that 4chan and Kiwi Farms are not just fringe corners of the internet anymore—they’ve been ingested into the training data of major AI systems.

The Trump administration’s approach to the CDC illustrates a broader strategy where facts are subjugated to ideology, dissent is punished, and legality is optional.

This is not simply a matter of poor leadership. It is a blueprint for authoritarian capture of democratic institutions. Health crises, institutional decay, and legal erosion are already visible.

Guardrail degradation in AI is empirically supported across multiple fronts: fine-tuning vulnerabilities, time-based decay, model collapse, and persistent threats via jailbreaks.

While mitigation strategies—like layered defenses, red‐teaming, thoughtful dataset design, and monitoring—can substantially reduce risk, complete elimination is unattainable.