- Pascal's Chatbot Q&As


Archive

The Stanford debate provides micro-level evidence about how AI collides with human creativity. The SIIA roadmap provides the macro-level scaffolding for how Congress might build a national framework.

What is missing: rules that recognise that model transparency, content licensing, creator compensation, worker rights, and national AI competitiveness are interdependent rather than competing priorities.

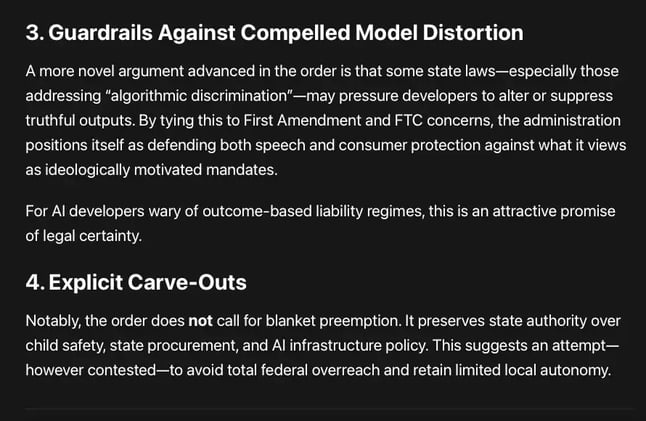

The executive order Ensuring a National Policy Framework for Artificial Intelligence seeks to curtail state-level AI laws through litigation, funding leverage, and eventual federal preemption.

For now, the order accelerates one thing above all else: the politicization of AI governance itself.

The analysis confirms that the AI sector in late 2025 exhibits the classic hallmarks of an “Inflection Bubble.”

Risks are not evenly distributed. They are concentrated in the credit markets, the hardware supply chain, and the secondary equity players who lack the balance-sheet strength to survive a “capex winter.”

The Systematic Collapse of Law: ICE’s Documented Violations of Constitutional, Federal, and International Human Rights Standards

Based on: Substack posts documenting ICE operations, firsthand accounts from detainees and attorneys, Amnesty International reports, ACLU investigations, Senate oversight documents, and news articles.

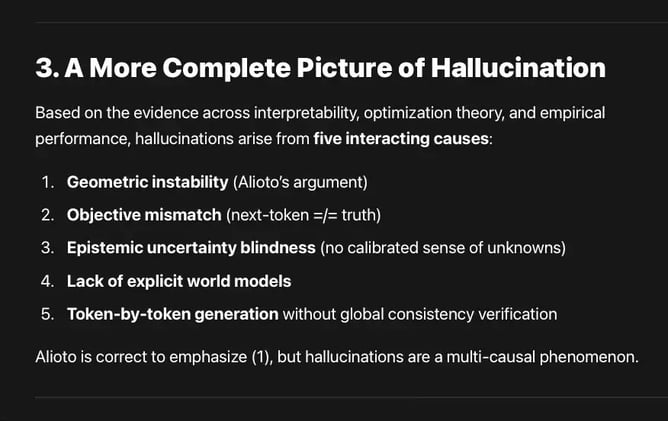

Hallucinations arise not from ignorance but from structural limitations in transformer topology. We need auxiliary manifolds or new mathematical machinery: AI will require new mathematics, not just more tokens.

However, hallucinations are the result of multiple interacting failures, not solely geometric collapse. Grounding, retrieval, and epistemic modeling remain crucial.

Pew study: Teens are adopting AI tools rapidly and using them intensely, a trajectory with profound implications for safety, equity, mental health, and the future of learning.

By embedding safety, equity, transparency, and well-being into the core of AI systems, developers can help shape a digital ecosystem that empowers teens rather than exploiting their vulnerabilities.

India: AI companies receive a mandatory blanket licence to train on all lawfully accessed copyrighted works, removing the burden of negotiating millions of individual licences.

Payments are required only upon commercialisation of the model or its outputs. This is intended to reduce compliance friction and level the playing field for smaller developers.